💡 TL;DR - The 30-Second Version

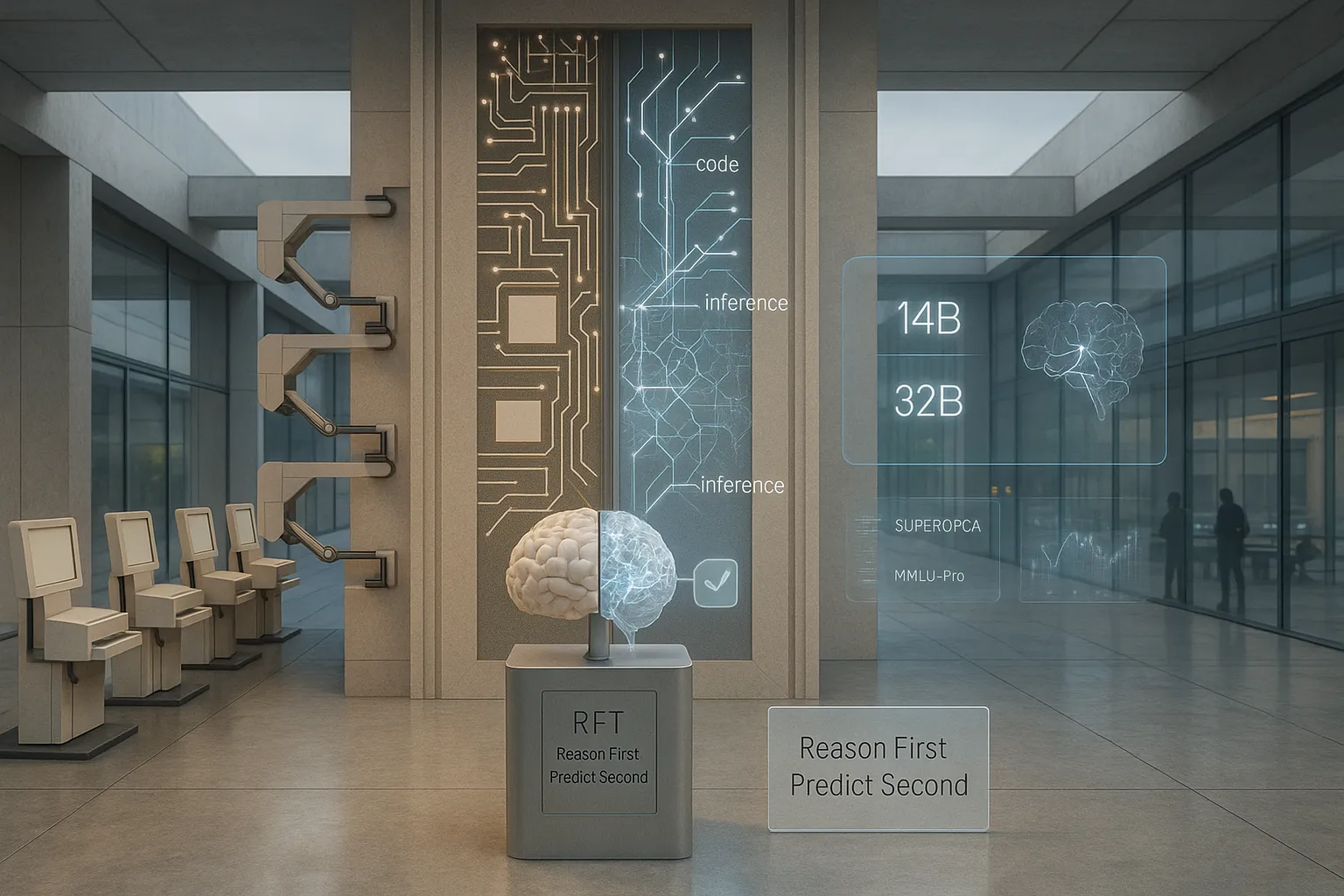

🧠 Microsoft Research and Tsinghua University created a training method that teaches AI models to think before answering during pre-training.

📊 Their 14-billion parameter model matches the next-token prediction accuracy of a 32-billion parameter baseline at less than half the size.

⚡ The method uses reinforcement learning with simple rewards: a model scores 1 for a correct next-token prediction and 0 for a wrong one.

🧮 Training and evaluation used mathematical problems from the OmniMATH dataset, where step-by-step thinking is essential for success.

🔄 Traditional AI training teaches pattern matching first, then adds reasoning later as an afterthought through separate fine-tuning.

🚀 This approach could cut AI deployment costs in half while creating more reliable models that reason naturally.

Researchers from Microsoft Research Asia and Tsinghua University just figured out how to make AI models think before they answer. It's called Reinforcement Pre-Training (RPT), and it could change how we build language models.

The research, published on arXiv in June 2025, comes from a team led by Qingxiu Dong that includes Li Dong (Principal Researcher at Microsoft Research Asia), Yutao Sun (Tsinghua University), and four other collaborators.

Traditional AI training works like this: show the model billions of text snippets and teach it to predict the next word. Simple. Effective. But not particularly thoughtful.

The new approach adds a twist. Instead of jumping straight to predictions, models must generate a chain of reasoning first. Think of it as forcing the AI to show its work, like a math teacher who won't accept answers without explanations.

The Method That Makes Models Think

The researchers took a 14-billion parameter model and trained it to reason through each prediction. Given a context, the model generates a reasoning sequence, then predicts the next token. If the prediction matches the token that actually comes next in the text, it gets a reward. If not, it gets nothing.

Here's the clever part: they don't need human feedback or external annotations. The correctness of each prediction serves as its own reward signal. Right answer equals reward. Wrong answer equals learning opportunity.
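Here is a minimal sketch of why no annotation is needed. It assumes whitespace-split words stand in for a real tokenizer, and the helper names are hypothetical, not from the paper. Every position in ordinary text becomes a question, and the answer is simply the word that actually follows.

```python
# Minimal sketch: raw text becomes next-token "questions" with a free answer key.
# Assumption: whitespace-split words stand in for a real tokenizer.

def make_next_token_questions(text: str) -> list[tuple[str, str]]:
    """Return (context, target) pairs, one per position after the first word."""
    words = text.split()
    return [(" ".join(words[:i]), words[i]) for i in range(1, len(words))]


def binary_reward(prediction: str, target: str) -> int:
    """Reward 1 for a correct next-token prediction, 0 otherwise."""
    return 1 if prediction == target else 0


pairs = make_next_token_questions("the derivative of x squared is 2x")
for context, target in pairs[:3]:
    print(f"{context!r} -> {target!r}")
print(binary_reward("2x", pairs[-1][1]))  # prints 1: the text itself supplies "2x"
```

The real method scores the model's prediction after its reasoning, not a bare string lookup. The point here is only that the corpus provides the label.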

They trained and evaluated this on mathematical problems from the OmniMATH dataset. Math problems are perfect for this approach because they require deliberation. You can't guess your way through calculus.

Results That Raise Eyebrows

The numbers tell a striking story. Their 14-billion parameter model matched the next-token prediction accuracy of a 32-billion parameter baseline model. Same accuracy, less than half the parameters.

That's not just impressive – it's economically significant. Smaller models cost less to run, use less energy, and fit on more hardware. Getting equivalent performance from fewer parameters could democratize access to advanced AI.

The model also outperformed its starting model on general reasoning benchmarks. SuperGPQA scores rose from 36.1% to 39.0%, and MMLU-Pro improved from 68.9% to 71.1%. The reasoning skills transferred beyond mathematics to diverse domains.

Why Standard Training Falls Short

Current AI training follows a two-phase approach. First, massive self-supervised pre-training on text prediction. Second, reinforcement learning for alignment and safety. The phases operate separately, limiting potential synergies.

This separation creates a fundamental mismatch. Pre-training optimizes for pattern matching and statistical correlation. Post-training tries to add reasoning and alignment on top. It's like teaching someone to memorize answers, then expecting them to explain their logic.

Reinforcement Pre-Training bridges this gap. It embeds reasoning directly into foundational training. Models learn to deliberate from day one, not as an afterthought.

The Technical Details That Matter

The implementation focuses on mathematical reasoning data, using problems that inherently require step-by-step thinking. The system also applies entropy-based filtering. Positions where a smaller proxy model is already confident (low entropy) are dropped, so training concentrates on harder tokens that benefit from deliberation.
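The filtering idea can be sketched in a few lines. This version assumes the proxy model's next-token probabilities are already available for each position. The distributions and the 0.5 threshold below are hypothetical, and any top-k truncation the real pipeline might apply is left out.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy (nats) of a next-token probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_hard_positions(position_probs: list[list[float]], threshold: float) -> list[int]:
    """Keep positions where the proxy model is uncertain (entropy above threshold)."""
    return [i for i, probs in enumerate(position_probs) if entropy(probs) > threshold]


# Hypothetical proxy-model distributions over four candidate tokens at three positions.
proxy_probs = [
    [0.97, 0.01, 0.01, 0.01],  # near-certain: easy token, filtered out
    [0.25, 0.25, 0.25, 0.25],  # maximally uncertain: kept
    [0.50, 0.50, 0.00, 0.00],  # two-way toss-up: kept
]
print(select_hard_positions(proxy_probs, threshold=0.5))  # [1, 2]
```

Filtering this way spends the expensive reasoning rollouts only where a cheap model is unsure, rather than on tokens that are trivially predictable.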

The training configuration uses a maximum length of 8,000 tokens, a learning rate of 1e-6, and batches of 256 questions with 8 responses each. The base model is Deepseek-R1-Distill-Qwen-14B, chosen for its existing reasoning capabilities.
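The reported settings can be collected into a small config, with field names of our own invention. The article doesn't say which RL algorithm turns 8 responses per question into an update. The advantage function below is a GRPO-style group normalization, which is an assumption here and not the paper's confirmed method.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class RPTConfig:
    # Values as reported in the article; field names are hypothetical.
    base_model: str = "Deepseek-R1-Distill-Qwen-14B"
    max_tokens: int = 8_000
    learning_rate: float = 1e-6
    questions_per_batch: int = 256
    responses_per_question: int = 8

    @property
    def rollouts_per_step(self) -> int:
        """Responses sampled and scored in one optimization step."""
        return self.questions_per_batch * self.responses_per_question


def group_relative_advantages(rewards: list[int], eps: float = 1e-6) -> list[float]:
    """GRPO-style normalization (assumed): score each response against its group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


cfg = RPTConfig()
print(cfg.rollouts_per_step)  # 2048
# Two of eight responses predicted the right token: they get positive advantages.
print(group_relative_advantages([1, 0, 0, 0, 1, 0, 0, 0]))
```

One consequence of this design is that a group in which every response is wrong, or every one is right, gets all-zero advantages and contributes no learning signal. That is another reason to filter out trivially easy tokens.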

The reward uses prefix matching. If the predicted byte sequence is an exact prefix of the ground-truth continuation and ends on a valid token boundary, it receives a reward of 1; otherwise, 0. The rule lets a prediction span one or more whole tokens. This binary, rule-based reward reduces the risk of reward hacking while providing a clear correctness signal.
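Here is one way to express the prefix-matching rule in code. This sketch assumes predictions and ground truth are compared as UTF-8 bytes, and that the valid boundaries are the cumulative byte lengths of the ground-truth tokens. The tokenization and helper names are hypothetical.

```python
from itertools import accumulate


def token_boundaries(tokens: list[str]) -> set[int]:
    """Byte offsets where each ground-truth token ends (cumulative UTF-8 lengths)."""
    return set(accumulate(len(t.encode("utf-8")) for t in tokens))


def prefix_match_reward(prediction: str, ground_truth: str, boundaries: set[int]) -> int:
    """1 if the prediction's bytes are an exact prefix of the ground truth
    and end on a token boundary, else 0."""
    pred = prediction.encode("utf-8")
    truth = ground_truth.encode("utf-8")
    if not pred or not truth.startswith(pred):
        return 0
    return 1 if len(pred) in boundaries else 0


# Hypothetical tokenization of the text that actually follows the context.
truth_tokens = [" is", " 2", "x", "."]
truth = "".join(truth_tokens)
bounds = token_boundaries(truth_tokens)  # {3, 5, 6, 7}

for guess in [" is", " is 2", " i", " was"]:
    print(repr(guess), prefix_match_reward(guess, truth, bounds))
```

Here " is 2" covers two whole tokens and still earns 1. " i" is a correct prefix but stops mid-token, so it earns 0.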

Scaling Laws Still Apply

The researchers found that performance improvements follow predictable scaling relationships. As training compute increases, next-token prediction accuracy improves along power-law curves, with R² values of 0.989 or higher.
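Here is what "fits a power law with a high R²" means in practice. This sketch assumes prediction error (1 minus accuracy) falls as a pure power law in compute, fitted by linear regression in log-log space. The paper's exact functional form may differ, and the data below are synthetic, not the paper's measurements.

```python
import math


def fit_power_law(compute: list[float], error: list[float]) -> tuple[float, float, float]:
    """Fit error ~= a * compute**(-b) in log-log space; return (a, b, R^2 of the log fit)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(e) for e in error]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return math.exp(intercept), -slope, 1 - ss_res / ss_tot


compute = [1.0, 2.0, 4.0, 8.0, 16.0]           # illustrative compute units
error = [0.6 * c ** -0.3 for c in compute]     # synthetic, exactly power-law data
a, b, r2 = fit_power_law(compute, error)
print(round(a, 3), round(b, 3), round(r2, 3))
```

On real training runs the points scatter around the curve. An R² near 1 says that the scatter is small relative to the overall trend.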

This suggests the approach scales reliably: more compute yields better next-token prediction in a predictable way, mirroring the power-law scaling seen in standard language model pre-training.

Current Limitations and Future Directions

The work has clear boundaries. Experiments focus on a 14-billion parameter model trained primarily on mathematical data. Scaling to larger models and broader domains remains unproven.

The approach requires generating multiple reasoning trajectories during training, increasing computational overhead compared to standard pre-training. Current work also initializes from an already reasoning-capable model rather than training from scratch.

Future research directions include establishing scaling laws specific to reinforcement pre-training, exploring adaptive reasoning based on token difficulty, and integrating the approach with other training paradigms.

The computational overhead question matters. If the method requires significantly more compute than traditional pre-training, the parameter efficiency gains might not translate to cost savings. Real-world deployment will depend on this trade-off.
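Some back-of-envelope arithmetic shows why the overhead question is real. This is a rough token-count sketch, not a cost model from the paper. Standard pre-training scores every position of a sequence in one teacher-forced pass, which is roughly one token processed per supervised position. RPT samples several full responses for a single target position. The 1,000-token response length is a hypothetical placeholder, and "inference cost scales linearly with parameter count" is a simplifying assumption.

```python
def rpt_token_overhead(responses_per_position: int, avg_response_tokens: int) -> int:
    """Approximate tokens processed per supervised position under RPT, versus
    roughly 1 under standard teacher-forced pre-training (token count only)."""
    return responses_per_position * avg_response_tokens


def inference_cost_ratio(small_params_b: float, large_params_b: float) -> float:
    """Per-token inference cost ratio, assuming cost scales linearly with parameters."""
    return small_params_b / large_params_b


overhead = rpt_token_overhead(8, 1_000)   # 8 responses; response length is hypothetical
ratio = inference_cost_ratio(14, 32)      # 14B model vs. 32B baseline
print(overhead, round(ratio, 2))          # 8000 0.44
```

The sketch ignores that sampling is slower per token than teacher-forced training. Even so, it shows the shape of the trade-off described above: a large per-position training multiplier set against a roughly 56% cut in per-token serving cost.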

Beyond Mathematical Reasoning

While current experiments focus on mathematics, the principles could extend to other domains. Any area where correctness can be verified could benefit from this approach. Code generation, logical reasoning, and factual question answering all have clear right and wrong answers.

The key insight is that vast amounts of text contain implicit reasoning tasks. Every prediction about what comes next involves some level of inference and logic. Reinforcement Pre-Training makes this reasoning explicit and rewardable.

The Bigger Picture

This work represents a shift from separate training phases to integrated learning. Instead of teaching pattern matching first and reasoning second, models learn both simultaneously.

The approach addresses a fundamental weakness in current AI development. Models that memorize patterns without understanding logic struggle with novel situations. Building reasoning into foundational training could produce more robust and reliable systems.

The parameter efficiency gains also matter for practical deployment. If smaller models can match larger ones through better training, advanced AI becomes more accessible. This could accelerate adoption across industries and applications.

Why this matters:

- Training efficiency breakthrough: A 14B model now matches a 32B baseline's next-token prediction accuracy, potentially cutting AI deployment costs in half while maintaining capability

- Reasoning becomes foundational: Instead of bolting reasoning onto pattern-matching models after the fact, AI systems now learn to think during pre-training – creating more reliable and robust intelligence from the ground up

❓ Frequently Asked Questions

Q: How much more expensive is RPT training compared to regular pre-training?

A: RPT generates 8 reasoning trajectories for each training question, which increases computational costs. The paper doesn't provide exact cost comparisons. The potential payoff is that a 14B RPT model matches a 32B baseline's next-token prediction accuracy, which could cut deployment costs roughly in half if the extra training compute proves affordable.

Q: Why did researchers only test this on math problems instead of general text?

A: Every next token in any text has a verifiable correct answer, so the reward works in principle on general text. The researchers used the OmniMATH dataset because mathematical reasoning inherently requires step-by-step thinking. They acknowledge that testing on general web-scale text is a key future research direction.

Q: What's the difference between this and existing reasoning methods like Chain-of-Thought?

A: Chain-of-Thought is added after training through prompting. RPT builds reasoning directly into the model during pre-training using reinforcement learning. This creates models that naturally think before answering, rather than needing special prompts to trigger reasoning behavior.

Q: Can this method work with models trained from scratch, or does it need an already-smart starting point?

A: The current experiments started with Deepseek-R1-Distill-Qwen-14B, which already had reasoning capabilities. The researchers acknowledge this limitation and note that testing from standard base models is important future work to understand RPT's full impact.

Q: When will this be available in commercial AI models?

A: The paper was published in June 2025, but it's still research-stage work. The authors plan to scale up experiments and test on larger models and broader datasets. Commercial deployment would likely require significant additional development and testing.