💡 TL;DR - The 30-Second Version

🚀 Alibaba stock jumped 9% to four-year highs after CEO Eddie Wu pledged to spend beyond the company's $53 billion AI infrastructure commitment.

🤖 The company launched Qwen3-Max, a trillion-parameter AI model whose preview ranks third on the LMArena leaderboard, behind Gemini 2.5 Pro and GPT-5.

💸 Despite the optimism, Alibaba posted a free cash outflow of roughly $2.6 billion in its most recent quarter as capital spending more than tripled.

🌍 New data centers will open in Brazil, France, and the Netherlands; Alibaba targets international data-center scale of five times 2022 levels "in a few years" and expects energy consumption to rise tenfold by 2032.

🏆 Wu predicts only "five to six" AI platforms will survive globally, positioning this as a winner-take-most consolidation battle.

🎯 The move reflects capital choosing strategic scale over near-term profits as AI competition merges with geopolitical rivalry.

Qwen3-Max launch signals China’s full-stack ambitions, stock surges despite a $2.6 billion cash outflow

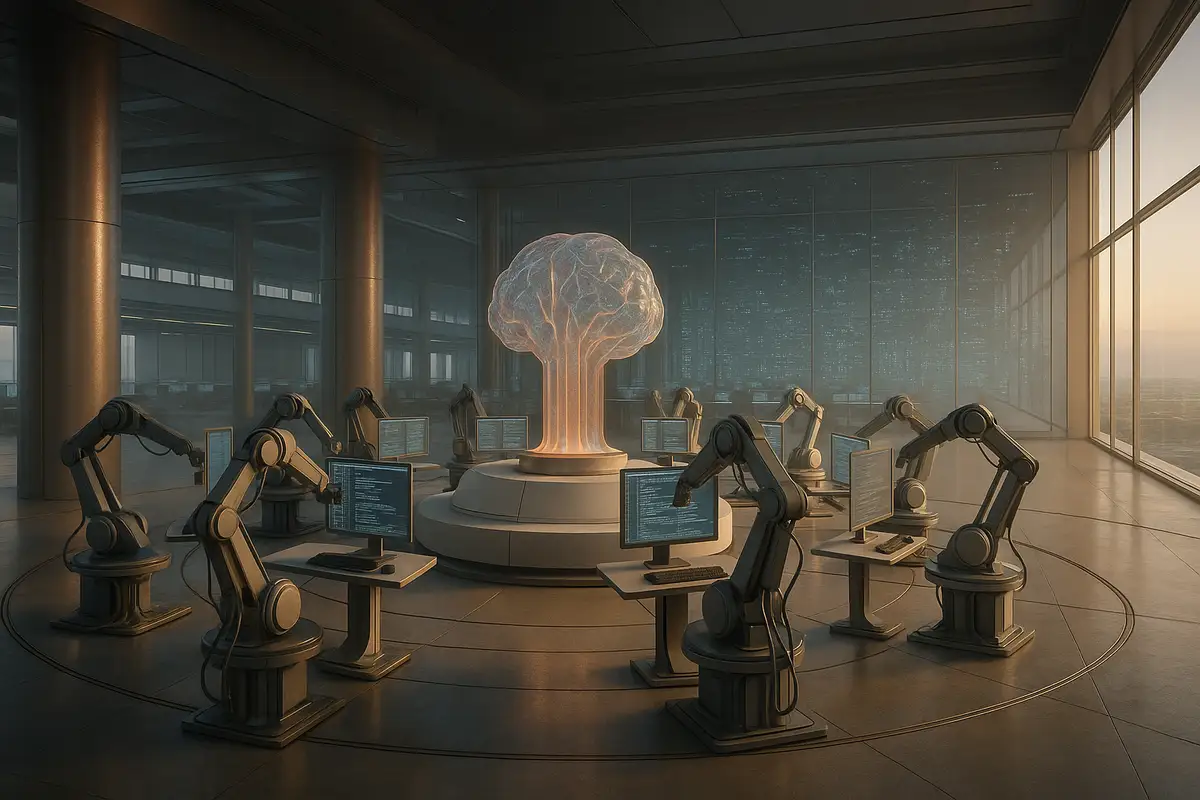

Claim: Alibaba can spend its way to AI leadership. Reality: investors cheered a bigger budget as free cash flow slid into the red. At its Apsara developer conference in Hangzhou, CEO Eddie Wu said Alibaba will invest beyond its February pledge of 380 billion yuan (~$53 billion) over three years and unveiled Qwen3-Max, a trillion-parameter flagship model, alongside a new tie-up to integrate Nvidia’s robotics software into Alibaba Cloud.
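For a sense of scale, the February pledge can be broken down with simple arithmetic. The sketch below uses only the two figures quoted above; the even three-year split is an assumption made for illustration, not something Alibaba has stated, and the exchange rate is simply the one implied by the article's own conversion.

```python
# Figures quoted in the article.
PLEDGE_CNY = 380e9   # 380 billion yuan, pledged in February
PLEDGE_USD = 53e9    # the article's approximate dollar equivalent
YEARS = 3

# Exchange rate implied by the article's own conversion (not a market quote).
implied_cny_per_usd = PLEDGE_CNY / PLEDGE_USD

# Average annual pace IF the pledge were spent evenly (an assumption).
annual_cny = PLEDGE_CNY / YEARS
annual_usd = PLEDGE_USD / YEARS

print(f"Implied rate: {implied_cny_per_usd:.2f} yuan per dollar")
print(f"Even annual pace: {annual_cny / 1e9:.1f}B yuan (~${annual_usd / 1e9:.1f}B)")
```

On those assumptions the baseline works out to roughly 127 billion yuan, or about $17.7 billion, a year before any increase.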

What’s actually new

Wu didn’t give a new top-line capex figure. He framed the bump as a response to “demand far exceeding expectations” and said global AI investment could top $4 trillion over five years. That’s posture as much as plan, but it signals management is comfortable being judged on capacity, not near-term profits.

On footprint, the cloud unit will open its first data centers in Brazil, France, and the Netherlands in the coming year, with additional sites planned in Mexico, Japan, South Korea, Malaysia, and Dubai. The company says its international data-center scale should be five times 2022 levels “in a few years,” with energy consumption up tenfold by 2032. Today, Alibaba Cloud operates across 29 regions. The direction is clear. So is the power bill.

The model play

Qwen3-Max is pitched as Alibaba’s most capable model to date: over one trillion parameters, stronger coding, and agentic features designed for enterprise workflows. A preview version placed in the top tier on the LMArena leaderboard, which tracks multi-task text performance. It is not open-sourced; access runs through Alibaba’s own platform. That choice favors control and monetization over open-weights mindshare, while still letting Alibaba tout benchmark parity with Western peers. Benchmarks are not production. But they are the marketing battlefield.

The “physical AI” angle

Export restrictions have forced Chinese firms to hedge their bets on hardware while staying plugged into global software ecosystems. Alibaba’s answer is twofold. First, it is advancing its in-house T-Head accelerators, with state media flagging deployments at China Unicom. Second, it is partnering where it can: the new integration of Nvidia’s “physical AI” development stack gives customers robotics and autonomy tooling even when top-shelf Nvidia training parts are constrained. This is how you keep developers close without the silicon.

Evidence investors noticed

Shares jumped about 9% in Hong Kong and U.S. premarket trade, hitting their highest levels in nearly four years. That pop arrived despite awkward math: in the most recent quarter, free cash flow swung to a roughly $2.6 billion outflow as capex more than tripled. Cloud revenue growth, however, accelerated to about 26%, with management crediting AI products for the lift. The market is rewarding trajectory over tidy margins.
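The "awkward math" is easier to see with a toy calculation. The sketch below uses the simplified textbook definition of free cash flow (operating cash flow minus capital expenditure), which may differ from Alibaba's own non-GAAP definition. Every number in it is hypothetical and chosen only to show the mechanism; none is a reported Alibaba figure.

```python
def free_cash_flow(operating_cash_flow: float, capex: float) -> float:
    """Simplified free cash flow: cash from operations minus capital spending."""
    return operating_cash_flow - capex


# Hypothetical figures in $ billions, for illustration only.
# They are NOT Alibaba's reported numbers.
operating_cash_flow = 3.0
capex_before = 1.5
capex_after = capex_before * 3  # capital spending triples

fcf_before = free_cash_flow(operating_cash_flow, capex_before)
fcf_after = free_cash_flow(operating_cash_flow, capex_after)

print(f"before: {fcf_before:+.1f}B, after: {fcf_after:+.1f}B")
```

With operating cash flow held flat, tripling capex is enough on its own to turn a comfortable surplus into an outflow, which is why faster cloud growth and negative free cash flow can show up in the same quarter.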

There’s also a sentiment story. Two ARK Invest funds re-established positions in Alibaba for the first time since 2021—small in dollars, big in symbolism. The read-across: global allocators see Beijing marginally warmer to private enterprise and like Alibaba’s overseas expansion path. In a year when Chinese tech names have whipsawed on policy headlines, any hint of policy stability matters.

Competitive math, not vibes

Wu projects only “five to six” super-scale AI platforms will matter globally. If that proves right, the game is consolidation by capacity, distribution, and developer gravity. Alibaba has two strategic levers:

- Stack control. Models (Qwen), middleware, cloud, and—eventually—domestic accelerators. Fewer vendor chokepoints, more margin capture if it works.

- Overseas reach. New data centers reduce single-market exposure and create local-latency moats for enterprise workloads. They also complicate sanctions risk and compliance.

For customers, the agentic tilt is what to watch. If Qwen-based agents can reliably plan tasks, call tools, and handle structured workflows at lower cost, that’s a real differentiator in cloud accounts. If not, the model remains a capable chatbot wearing a CFO-unfriendly price tag.
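To make "plan tasks, call tools, and handle structured workflows" concrete, here is a minimal sketch of an agent loop. It is not Alibaba's or Qwen's API: the planner is a hard-coded stub standing in for a model call, and the tool names (`lookup_invoice`, `send_reminder`) and the invoice data are hypothetical.

```python
from typing import Callable, Optional


# Hypothetical tools an enterprise agent might call; names are illustrative.
def lookup_invoice(invoice_id: str) -> dict:
    return {"id": invoice_id, "amount": 1200.0, "paid": False}


def send_reminder(invoice_id: str) -> str:
    return f"reminder sent for {invoice_id}"


TOOLS: dict[str, Callable[[str], object]] = {
    "lookup_invoice": lookup_invoice,
    "send_reminder": send_reminder,
}


def stub_planner(task: str, history: list) -> Optional[tuple]:
    """Stand-in for a model call: returns (tool_name, argument), or None when done."""
    invoice_id = task.split()[-1]
    if not history:
        return ("lookup_invoice", invoice_id)
    last_tool, last_result = history[-1]
    if last_tool == "lookup_invoice" and not last_result["paid"]:
        return ("send_reminder", invoice_id)
    return None


def run_agent(task: str, max_steps: int = 5) -> list:
    """Plan a step, call the chosen tool, record the result, repeat until done."""
    history = []
    for _ in range(max_steps):
        step = stub_planner(task, history)
        if step is None:
            break
        tool_name, arg = step
        history.append((tool_name, TOOLS[tool_name](arg)))
    return history


trace = run_agent("chase unpaid invoice INV-42")
for tool_name, result in trace:
    print(tool_name, "->", result)
```

In a real deployment the stub would be replaced by a model, and the reliability question raised above comes down to how often that model picks the right tool with the right arguments, and at what cost per step.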

The caveats

Start with the numbers. Higher capex during a cash outflow is a statement of intent, not a proof of return. Bloomberg Intelligence and others warn the ROI may lag the hype for years. On the model side, Alibaba points to third-party leaderboards, but most published results are self-reported benchmark slices that don't capture messy production constraints: latency under load, long-context throughput, safety guardrails, and cost per million tokens at enterprise scale. And while the Nvidia partnership helps on software, the chip supply question remains unresolved under U.S. export rules.

Finally, Qwen3-Max’s access model is closed. That keeps guardrails and pricing aligned with Alibaba’s platform strategy but limits the grassroots adoption that open-weights rivals use to seed ecosystems. It is a defensible call—just a different one than Meta’s.

The bottom line

Alibaba is making the only move that keeps it in the first rank of AI contenders: spend aggressively on compute, ship a credible flagship model, and give developers a reason to build on your cloud. The stock reaction shows investors will tolerate ugly near-term cash dynamics if the growth narrative is intact. The risk is obvious. Capacity is not the same as durable advantage.

Why this matters

- Capital is choosing strategic scale over near-term profits, reshaping the AI stack around a handful of global platforms.

- China’s push for AI independence is building parallel ecosystems that could permanently shift supply chains, standards, and where value accrues.

❓ Frequently Asked Questions

Q: How does Qwen3-Max actually compare to ChatGPT and Gemini?

A: A preview of Qwen3-Max ranked third on the LMArena text leaderboard, behind Gemini 2.5 Pro and GPT-5. At over one trillion parameters it sits at frontier scale, but it still trails those two models in overall ranking. Its strength lies in autonomous agent tasks and coding, where it outperforms some competitors such as DeepSeek V3.1.

Q: Why is Alibaba losing money if AI demand is so strong?

A: The squeeze is in cash flow. Free cash flow swung to an outflow of roughly $2.6 billion in the most recent quarter because capital spending more than tripled, primarily on data centers and chips. Cloud revenue grew about 26%, with AI services showing triple-digit growth, but the buildout is currently consuming cash faster than the business generates it. Management is prioritizing capacity over near-term profits.

Q: What exactly do US export controls prevent Alibaba from buying?

A: Export controls block Alibaba from purchasing Nvidia's most advanced AI training chips like H100s and A100s. The restrictions force Chinese companies to use less powerful alternatives or develop domestic chips. This explains Alibaba's investment in its T-Head semiconductor unit, which recently secured deployment with China Unicom for AI accelerators.

Q: What does "physical AI" mean in the Nvidia partnership?

A: Physical AI refers to AI systems that interact with the real world through robots, self-driving cars, and industrial automation. Alibaba is integrating Nvidia's development tools for building these applications into Alibaba Cloud. This partnership focuses on software rather than restricted hardware, allowing continued collaboration despite export controls.

Q: How much more is Alibaba actually planning to spend beyond the $53 billion?

A: Wu didn't specify a new total, saying only that demand is "far exceeding expectations." The original commitment, announced in February 2025, was 380 billion yuan (~$53 billion) over three years. Wu also said global AI investment could top $4 trillion over five years, which suggests Alibaba's increase could be substantial.