💡 TL;DR - The 30-Second Version

🚀 Alibaba released two major AI models within 24 hours, claiming both outperform Claude Opus 4 and GPT-4 on key coding and reasoning benchmarks.

📊 The massive Qwen3-Coder contains 480 billion parameters but activates only 35 billion at a time, scoring 69.6% on SWE-bench Verified vs Claude's 70.4%.

⚡ An FP8 compressed version cuts memory requirements from 88GB to 30GB while running twice as fast at 60-70 tokens per second.

🆓 Both models use Apache 2.0 open source licenses, letting companies use them commercially without restrictions or vendor lock-in.

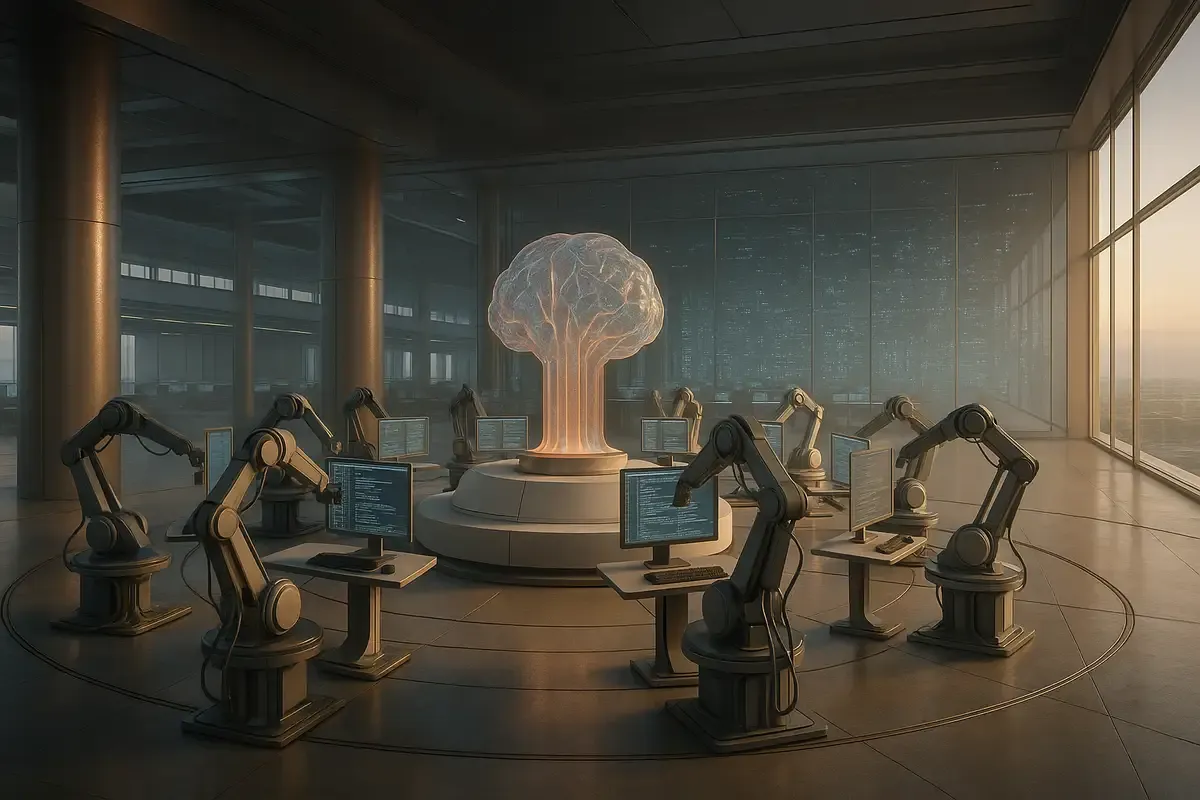

🏭 Alibaba trained the coding model using 20,000 parallel virtual environments on Alibaba Cloud to teach autonomous problem-solving through reinforcement learning.

⚠️ The launch comes days after researchers alleged Alibaba's previous model cheated on benchmarks by memorizing test data rather than learning to reason.

Alibaba just pulled off one of the most aggressive AI model launches in recent memory. The Chinese e-commerce giant released not one but two major language models within 24 hours, claiming both can outperform leading American competitors like Claude Opus 4 and OpenAI's GPT-4 on key tests.

The timing feels deliberate. While the AI world was still processing allegations that Alibaba's previous model cheated on benchmarks, the company doubled down with Qwen3-235B-A22B-Instruct-2507 and the massive Qwen3-Coder-480B-A35B-Instruct. Both models are open-source with Apache 2.0 licenses, meaning companies can use them commercially without restrictions.

But there's more happening here. Alibaba isn't just releasing better models—they're pushing hard into autonomous AI systems that can actually work independently to solve problems. It's a big bet on where AI is heading next.

Two Models, One Strategy

The release strategy tells you everything about Alibaba's approach. Instead of their previous "hybrid" model that could toggle between reasoning and non-reasoning modes, they're now building separate models for different tasks. The Qwen3-235B handles general instruction-following, while the 480-billion-parameter Qwen3-Coder focuses entirely on autonomous coding.

This isn't just a technical decision—it's philosophical. As the Qwen team explained: "After talking with the community and thinking it through, we decided to stop using hybrid thinking mode. Instead, we'll train Instruct and Thinking models separately so we can get the best quality possible."

The numbers back up their confidence. The updated Qwen3-235B jumped from 75.2 to 83.0 on MMLU-Pro tests, while reasoning tasks like AIME25 more than doubled in performance. The coding model scored 69.6% on SWE-bench Verified—nearly matching Claude Sonnet 4's 70.4%.

The Efficiency Play That Changes Everything

Here's where things get interesting for enterprises. Alibaba also released an FP8-quantized version that fits the model into far less memory. Instead of needing 88GB of GPU memory, it runs in about 30GB and generates text faster: 60-70 tokens per second versus 30-40 for the full-precision version.

Those aren't just incremental improvements. They're the difference between needing eight high-end GPUs and four, between cloud computing bills that break budgets and ones that make sense. For companies wanting to run these models locally, it's the difference between possible and impossible.

The technical specs are impressive on their own. The coding model contains 480 billion total parameters but only activates 35 billion at a time using a mixture-of-experts architecture. It handles 256,000 tokens natively—enough for entire codebases—and can stretch to one million tokens using extrapolation techniques.

20,000 Virtual Worlds and the Future of Coding

Alibaba didn't just train these models on more data. They built what they call a "scalable system capable of running 20,000 independent environments in parallel" on Alibaba Cloud. Think of it as 20,000 virtual worlds where AI agents learned to code by actually writing, testing, and debugging real programs.

This "long-horizon reinforcement learning" approach tackles what Alibaba calls "hard to solve, easy to verify" problems. The AI learns by doing, getting feedback from actual code execution rather than just predicting the next token in text.
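To make "hard to solve, easy to verify" concrete, here is a minimal sketch of an execution-based reward. It is not Alibaba's training code: the function name `execution_reward`, the binary pass/fail score, and the tiny thread pool standing in for thousands of environments are all assumptions made for illustration.

```python
import subprocess
import sys
import tempfile
import textwrap
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def execution_reward(candidate_source: str, test_source: str, timeout_s: float = 5.0) -> float:
    """Hypothetical reward: 1.0 if the candidate passes the tests when run, else 0.0."""
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "episode.py"
        # Verification is cheap: append the tests and run the file in a fresh interpreter.
        script.write_text(candidate_source + "\n\n" + test_source)
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # a hanging program counts as a failure
    return 1.0 if result.returncode == 0 else 0.0


tests = textwrap.dedent("""
    assert add(2, 3) == 5
    assert add(-1, 1) == 0
""")

good = "def add(a, b):\n    return a + b"
bad = "def add(a, b):\n    return a - b"

# A small pool stands in for the many parallel environments described above.
with ThreadPoolExecutor(max_workers=4) as pool:
    rewards = list(pool.map(lambda src: execution_reward(src, tests), [good, bad]))

print(rewards)
```

The point of the design is that the feedback comes from running the program, not from comparing text: a wrong answer fails an assertion and scores zero no matter how plausible it looks.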

Demand for this kind of agent is already showing up in practice. Companies like Goldman Sachs are piloting AI agents such as Devin for what they call a "hybrid workforce." As Goldman's tech chief Marco Argenti put it, the future involves "people and AIs working side by side," with engineers describing problems while AI handles the tedious coding work.

The Ecosystem War

Alibaba isn't just releasing models; they're building an entire ecosystem. They open-sourced "Qwen Code," a command-line tool forked from Google's Gemini CLI and tuned specifically for their models. But in a clever move, they made sure their models also work with existing tools like Anthropic's Claude Code and the popular Cline coding extension.

This compatibility strategy matters more than the technical specs. Developers won't switch to new models if it means learning new tools. By working with existing workflows, Alibaba removes friction and lets performance speak for itself.

The pricing structure also signals their intentions. Through Alibaba Cloud, they're offering tiered pricing based on input length—something that reflects the real computational costs but that most providers hide in flat rates. It's transparent and potentially much cheaper for typical use cases.
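As a rough illustration of how input-length tiers work, here is a small sketch. The tier boundaries and prices are placeholders invented for this example, not Alibaba Cloud's published rates, and it assumes the whole request is billed at the rate of the tier its input length falls into.

```python
# Hypothetical tiers: (largest input length in the tier, USD per million input tokens).
# Placeholder numbers for illustration only, not real Alibaba Cloud prices.
HYPOTHETICAL_TIERS = [
    (32_000, 1.00),
    (128_000, 2.00),
    (256_000, 3.00),
    (1_000_000, 6.00),
]


def input_cost_usd(input_tokens: int, tiers=HYPOTHETICAL_TIERS) -> float:
    """Price a request by the tier that its total input length falls into."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    for max_tokens, usd_per_million in tiers:
        if input_tokens <= max_tokens:
            return input_tokens / 1_000_000 * usd_per_million
    raise ValueError("input exceeds the largest tier")


for tokens in (10_000, 200_000):
    print(tokens, round(input_cost_usd(tokens), 4))
```

Under a scheme like this, a short prompt never subsidizes the cost of very long contexts, which is the transparency argument made above.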

The Benchmark Problem Nobody Wants to Talk About

All these impressive numbers come with a massive asterisk. Just days before the launch, researchers from Fudan University alleged that Alibaba's Qwen2.5 model "cheated" on the MATH-500 benchmark by memorizing test data rather than actually learning to reason.

The AI community remains split on whether this constitutes cheating or just good training practice. LMArena CEO Anastasios Angelopoulos defended it as "part of the standard workflow of model training," while others worry about a disconnect between test scores and real-world performance.

The timing couldn't be worse for Alibaba. As AI strategist Nate Jones noted, "The moment we set leaderboard dominance as the goal, we risk creating models that excel in trivial exercises and flounder when facing reality."

Global Competition Gets Personal

This release isn't happening in a vacuum. It's part of an increasingly personal competition between Chinese and American AI companies. Elon Musk's xAI reportedly hired contractors specifically to train Grok 4 to beat Anthropic's Claude. Google poached the CEO and top talent from coding startup Windsurf, killing a planned OpenAI acquisition.

Within China, the competition is just as fierce. Alibaba's models compete directly with Moonshot AI's 1-trillion-parameter Kimi K2 and DeepSeek's latest offerings in what industry observers call the "war of a hundred models."

The geopolitical stakes add another layer. Chinese companies face pressure to build self-reliant AI ecosystems, while American firms worry about losing technological leadership. Both sides are pouring enormous resources into the race, with usage costs forcing even premium providers like Anthropic to tighten subscription limits.

Why this matters:

- Autonomous AI is becoming real - Companies are moving past chatbots toward systems that can actually complete tasks independently, which changes how we'll work with computers.

- Test scores might not mean much anymore - With mounting evidence of benchmark manipulation, the industry needs better ways to measure what these systems can actually do.

❓ Frequently Asked Questions

Q: What exactly is "agentic AI" and how is it different from regular chatbots?

A: Agentic AI works independently to complete tasks without constant human input. Instead of just answering questions, it can plan steps, use tools, get feedback, and adjust its approach. Think of it as the difference between asking for directions versus having someone navigate for you.

Q: How much does it cost to run these models compared to competitors?

A: Alibaba Cloud offers tiered pricing based on input length, which can be cheaper than flat rates for typical use cases. The FP8 version cuts operational costs by 30-50% through lower power usage and reduced GPU requirements: four high-end GPUs instead of eight.

Q: What evidence suggests Alibaba's previous model cheated on benchmarks?

A: Fudan University researchers reported that Qwen2.5 achieved top scores on MATH-500 by memorizing test questions during training rather than learning to reason. This problem, called data contamination, inflates scores without improving real-world performance.

Q: How can companies actually use these models if they're open source?

A: The Apache 2.0 license lets companies download, modify, and use the models commercially without paying licensing fees. They can run them locally, fine-tune them with proprietary data, or serve them with inference frameworks like vLLM and SGLang.
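For a sense of what self-hosting looks like from the application side, here is a minimal sketch. vLLM and SGLang can both expose an OpenAI-compatible HTTP endpoint, so a plain JSON request is enough. The base URL, port, and model id below are assumptions: use whatever address and model name your own server was launched with.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str, max_tokens: int = 256):
    """Build an OpenAI-style chat completion request for a self-hosted server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(request: urllib.request.Request) -> str:
    """Send the request and return the first reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request(
    base_url="http://localhost:8000/v1",         # assumed local server address
    model="Qwen/Qwen3-235B-A22B-Instruct-2507",  # assumed model id
    prompt="Write a function that reverses a linked list.",
)
print(request.full_url)
# print(ask(request))  # uncomment once a server is listening at base_url
```

Because the request shape is the same one most existing tools already speak, swapping a hosted API for a local model is largely a matter of changing the base URL and model name.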

Q: What does "mixture-of-experts" mean in practical terms?

A: The model contains 480 billion parameters but activates only about 35 billion for each token it processes. It's like having 160 specialists but consulting only the 8 most relevant ones for each question. This delivers large-model quality while using far less compute per token.
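The "160 specialists, 8 consulted" picture can be sketched as top-k routing. This is a generic illustration, not Qwen's actual router: the random scores stand in for a learned gating network, and renormalizing with a softmax over the selected experts is one common choice that is assumed here.

```python
import math
import random

NUM_EXPERTS = 160  # experts available, per the answer above
TOP_K = 8          # experts consulted for each token


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and softmax-normalize their weights."""
    chosen = sorted(
        range(len(router_logits)),
        key=lambda i: router_logits[i],
        reverse=True,
    )[:top_k]
    peak = max(router_logits[i] for i in chosen)  # subtract the max for numerical stability
    exp_scores = [math.exp(router_logits[i] - peak) for i in chosen]
    total = sum(exp_scores)
    return [(i, score / total) for i, score in zip(chosen, exp_scores)]


rng = random.Random(0)
# Stand-in for the router's scores for a single token (an assumption, not real model output).
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

assignment = route_token(logits)
print(len(assignment), "experts selected")
print(f"active parameter share: {35 / 480:.1%}")
```

The active share of parameters (35 of 480 billion) is somewhat larger than 8/160 because some parts of a mixture-of-experts model, such as the attention layers, typically run for every token regardless of which experts are chosen.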

Q: Are these models actually better than Claude and GPT-4 overall?

A: Not across the board. Alibaba reports that they match or beat Claude and GPT-4 on some coding and reasoning benchmarks, but they trail on others such as MMLU-Pro (83.0 vs 86.6 for Claude). Real-world results depend heavily on your specific use case, and the benchmark-gaming concerns mean published scores deserve some caution.

Q: Why did Alibaba abandon the hybrid reasoning approach?

A: The hybrid toggle created inconsistent behavior and put the burden on users to decide when to use reasoning mode. Separate models allow better optimization for each task and more predictable performance without user guesswork.

Q: How long does it take to download and set up these massive models?

A: The full model is 438GB while the FP8 version is 220GB. Download time depends on your internet speed, but setup can take hours. The FP8 version runs at 24 tokens/second on a Mac Studio with 512GB RAM using 272GB memory.
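The two download sizes are roughly what you get from multiplying parameter count by bytes per parameter. The sketch below assumes the figures refer to the 235-billion-parameter model stored at 16 bits and at 8 bits, and that "GB" here means GiB; both are assumptions, and real checkpoints add some overhead. The 1 Gbit/s link speed is just an example.

```python
GIB = 1024 ** 3


def weight_footprint_gib(num_parameters: float, bytes_per_parameter: float) -> float:
    """Approximate size of the raw weights, ignoring file-format overhead."""
    return num_parameters * bytes_per_parameter / GIB


def download_hours(size_gib: float, link_mbit_per_s: float) -> float:
    """Idealized transfer time at a sustained link speed."""
    return size_gib * GIB * 8 / (link_mbit_per_s * 1e6) / 3600


PARAMS_235B = 235e9  # assumed: the 235B general-purpose model

full = weight_footprint_gib(PARAMS_235B, 2)  # 16-bit weights
fp8 = weight_footprint_gib(PARAMS_235B, 1)   # 8-bit (FP8) weights

print(round(full))  # 438
print(round(fp8))   # 219
print(round(download_hours(full, 1000), 2), "hours at 1 Gbit/s")
```

Halving the bytes per parameter halves the download, which is why the FP8 release matters for anyone pulling the weights over an ordinary connection.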