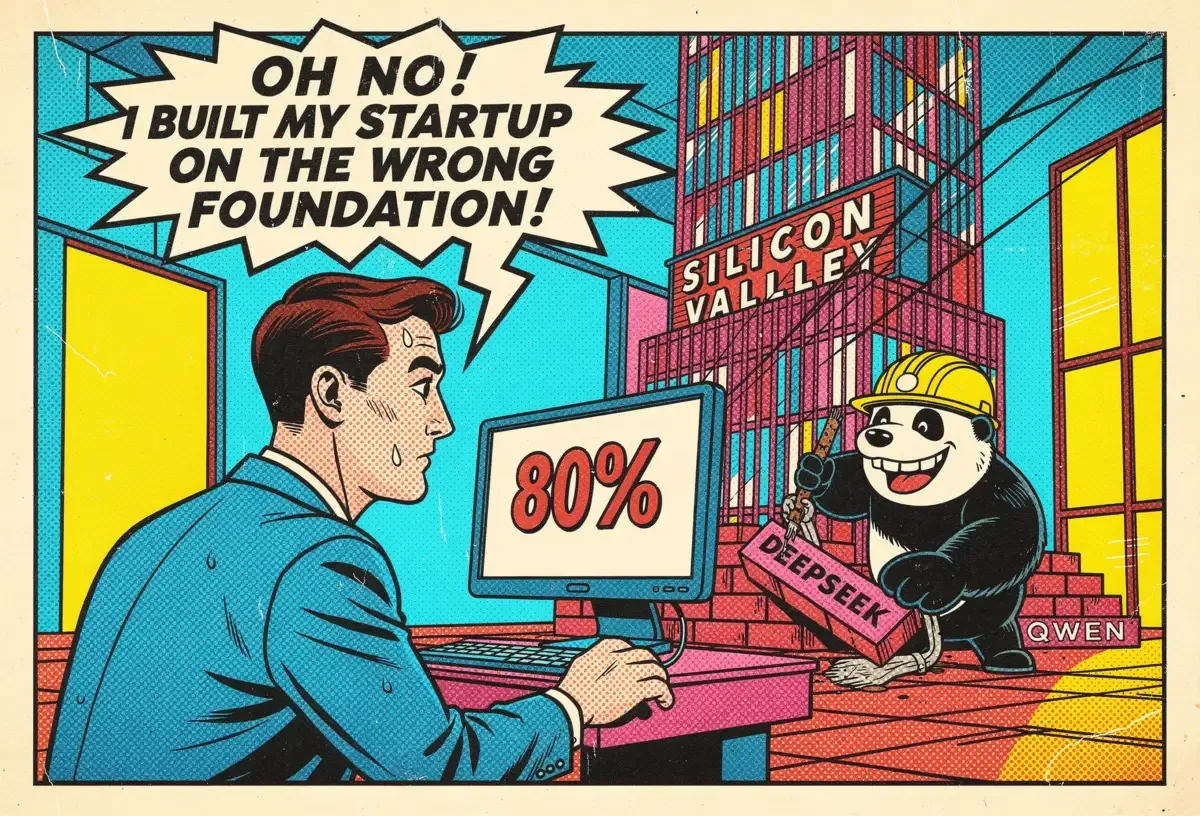

Martin Casado dropped a number that should unsettle Washington. The Andreessen Horowitz partner told The Economist that up to 80% of AI startup pitches his firm receives now feature American companies building on Chinese open-source models. Not 20%. Not half. Eighty percent. The infrastructure layer of America's AI startup ecosystem has quietly shifted to foreign foundations, and most observers missed the transition entirely.

Key Takeaways

• A16z reports 80% of AI startup pitches now feature US companies building on Chinese open-source models, signaling an infrastructure shift already underway.

• MIT and Hugging Face data shows Chinese models captured 17% of global downloads, surpassing US developers' 15.8% for the first time.

• Chinese open models carry documented CCP biases that activate on sensitive topics—constraints that travel with the weights into global applications.

• US labs bet on proprietary perfection while Chinese competitors optimized for diffusion. The adoption race increasingly favors Beijing's approach.

A joint study from MIT and Hugging Face, the leading repository for open AI models, confirms the trend extends beyond Silicon Valley pitch decks. Chinese-made open models captured 17% of global downloads over the past year, surpassing the 15.8% share held by American developers including Google, Meta, and OpenAI. First time ever. DeepSeek and Alibaba's Qwen family account for the vast majority of those Chinese downloads, with DeepSeek's R1 reasoning model, which matched US competitors at a fraction of the cost and compute, proving particularly popular.

The numbers tell one story. The motivations tell another.

The Economics of Openness

Chan Yip Pang, executive director at Vertex Ventures Southeast Asia, captured the dynamic precisely at Fortune's Innovation Forum in Kuala Lumpur: American AI companies "build for perfection" while Chinese companies "build for diffusion." OpenAI, Anthropic, and Google DeepMind chase artificial general intelligence and benchmark supremacy through proprietary models monetized via subscriptions and enterprise deals. Chinese labs ship weekly iterations optimized for practical deployment.

Wendy Chang, senior analyst at the Mercator Institute for China Studies, observes that open source has become "a more mainstream trend" in China than in the US. "US companies have chosen not to play that way," she notes. "They're making money on these high valuations. They don't want to open source their secrets."

The startup calculus is straightforward. Why pay OpenAI's API rates when DeepSeek offers comparable performance for pennies? Why risk a proprietary vendor raising prices or changing terms when open weights guarantee permanent access? Jinhui Yuan, CEO of SiliconFlow, a Chinese AI cloud hosting service, told Fortune that his company developed techniques to run open-source models so cost-effectively that fine-tuning them on customer data frequently beats proprietary model performance, without any risk of leaking competitive information.

Pang emphasizes the control dimension. Proprietary providers offer fine-tuning services with assurances that customer data won't feed broader training, "but you never know what happens behind the scenes." For startups building AI-native applications as their core product, he advises: "You better jolly well control the technology stack, and to be able to control it, open source would be the way to go."

The math doesn't lie. Chinese models offer better value. But value calculations ignore what comes bundled with the weights.

The Invisible Strings

Researchers examining Chinese open models have documented clear Chinese Communist Party biases embedded in the systems. Ask about Taiwan's sovereignty. Request information on the Tiananmen Square massacre. Probe sensitive topics in Chinese history or politics. The models decline, deflect, or provide CCP-aligned responses.

This isn't accidental contamination. Beijing officials have actively encouraged Chinese AI companies to offer wider model access, a strategy that gains outsized influence over how AI systems worldwide process and present information. When an American startup fine-tunes DeepSeek for customer service, or a European research team builds educational tools on Qwen, the underlying ideological constraints travel with the weights.

The FT reports that the popularity of Chinese open models is "already influencing the information people receive." For applications handling factual queries, news summarization, or content generation, inherited biases shape outputs in ways users rarely examine. Most developers grabbing Chinese models from Hugging Face aren't auditing political sensitivities. They're checking benchmark scores and cost structures.

And here's the wrinkle: the biases don't necessarily trigger in English-language consumer applications. A chatbot answering questions about restaurant recommendations in Ohio won't surface CCP sensitivities. But the same underlying architecture deployed for journalism tools, educational content, or policy analysis carries constraints that activate situationally. The exposure is latent until it isn't.

US export controls on advanced Nvidia chips forced Chinese labs into creative efficiency. Cut off from brute-force compute advantages, researchers at DeepSeek, Alibaba Cloud, and others pioneered distillation techniques to create smaller yet powerful models. They leaned heavily into video generation, where China now dominates the open-source landscape according to Hugging Face data. Constraint bred innovation, and innovation bred global adoption.

The Bridge Country Gambit

In Kuala Lumpur, executives from across Southeast Asia articulated priorities that diverge sharply from Silicon Valley assumptions. Cost matters. Data sovereignty matters. Local language performance matters. Proprietary model superiority on English-language benchmarks matters less than many Americans assume.

Cynthia Siantar, CEO of Singapore-based Dyna.AI, which builds AI applications for financial services, observed that some Chinese open-source models perform notably better in local languages. For companies serving Malaysian, Indonesian, Thai, or Vietnamese markets, this performance gap inverts the usual quality hierarchy.

Cassandra Goh, CEO of Malaysian financial technology provider Silverlake Axis, addressed security concerns directly. Models require securing within systems regardless of origin, she argued. Screening tools for prompts, output filtering, jailbreak prevention, these apply equally to proprietary and open-source deployments. The security surface isn't fundamentally different.

A coalition of policy experts from institutions including Mila, the Quebec AI Institute founded by Yoshua Bengio, and Oxford's AI Governance Initiative recently proposed that "bridge countries," middle-income nations positioned between US and Chinese technological spheres, band together to develop shared AI capacity. The proposal envisions a non-aligned movement for artificial intelligence, reducing dependence on either superpower's technology stack.

Whether such diplomatic coordination can materialize remains uncertain. What's already clear is that countries from Malaysia to the UAE to Brazil increasingly view Chinese open models as viable alternatives to American proprietary systems. The 8% performance gap separating frontier proprietary models from leading Chinese alternatives on software development benchmarks, a gap that matters intensely for some enterprise applications, registers as acceptable for many global use cases.

Janet Egan, senior fellow at the Center for a New American Security, put it bluntly: "It should be of concern to the US that China is making great strides in the open model domain."

The Download Deception

One critical caveat deserves examination. Download statistics from Hugging Face measure popularity, not production deployment. Researchers experimenting with models, developers evaluating alternatives, students completing coursework, all generate downloads without indicating commercial adoption.

The gap between downloaded and deployed creates interpretive challenges. DeepSeek's R1 attracted enormous attention when it shocked Silicon Valley in early 2024. How much of its download volume represents genuine production integration versus curiosity-driven examination? The MIT and Hugging Face study doesn't distinguish.

But Casado's 80% figure addresses pitches, not downloads. When four out of five American AI startups seeking venture funding have built on Chinese foundations, the infrastructure shift extends beyond hobbyist experimentation. These are companies betting their business models on foreign open-source technology.

Shayne Longpre, an MIT researcher involved in the download study, emphasizes the "paradigm shifting" pace of Chinese model releases. Weekly or biweekly iterations with multiple variations offer users choices that American labs, with their semi-annual or annual release cycles, simply don't match. Volume breeds familiarity. Familiarity breeds adoption.

Meta's Llama family held the gold standard for open-weight AI development for years. But as the company shifted strategy to invest more heavily in closed models, chasing the superintelligence race against OpenAI and Anthropic, Chinese competitors filled the vacuum. OpenAI introduced its first open-weight models in August 2024, years after DeepSeek and Alibaba had proven the commercial viability of openness.

The Allen Institute for AI launched Olmo 3, a fully open-source model, in November. That's one significant independent American contribution to open AI development in recent memory. One.

The Strategic Miscalculation

American AI labs made a bet. They wagered that performance superiority would outweigh accessibility, that developers would pay premium prices for premium capabilities, that proprietary moats could be defended through technical excellence. The bet assumed global developers shared American tolerance for subscription costs and vendor lock-in.

It turns out much of the world values control over capability margins. When a startup can fine-tune an open model on proprietary data and achieve results within striking distance of GPT-4, then keep those weights forever, the economic logic tilts decisively. Chinese labs understood this. American labs are belatedly learning it.

The Trump administration's AI Action Plan seeks to convince US companies to invest in open-source models with "American values." Good luck. The window for establishing American open-source leadership closed while US labs chased valuations and benchmark supremacy.

Why This Matters

- For US policymakers: The national security implications extend beyond obvious concerns. American startups building critical applications on Chinese foundations create dependencies that won't reverse easily, regardless of future export controls or diplomatic pressure.

- For enterprise AI buyers: Procurement decisions increasingly involve evaluating geopolitical exposure alongside technical specifications. Organizations deploying Chinese open models should audit bias patterns in sensitive application domains.

- For investors: Casado's 80% figure signals that AI startup economics have fundamentally shifted. The question isn't whether a portfolio company uses open-source models. It's which ones, from where, and what happens if that changes.

- For everyone watching this space: Capability doesn't guarantee adoption. Adoption guarantees influence. China figured that out first.

❓ Frequently Asked Questions

Q: What's the difference between "open-weight" and "open-source" AI models?

A: Open-weight models provide the numerical parameters (weights) for free download and use, but not the training code or data. Fully open-source models include everything: code, weights, and training data needed to reproduce the model from scratch. OpenAI's August 2024 release was open-weight, not fully open-source. Chinese labs like DeepSeek offer more comprehensive access.

Q: Can companies remove CCP biases by fine-tuning Chinese models on their own data?

A: Fine-tuning adjusts model behavior for specific tasks but doesn't reliably eliminate base model biases. The underlying constraints are embedded deep in the weights through pre-training on filtered Chinese internet data. A customer service bot might never trigger these biases, but applications involving news, history, or geopolitics will encounter them unpredictably.

Q: What is model distillation and why did it help Chinese labs?

A: Distillation trains a smaller "student" model to mimic a larger "teacher" model's outputs. Cut off from advanced Nvidia chips by US export controls, Chinese researchers used distillation to create compact models that punch above their weight computationally. DeepSeek's R1 matched competitors using a fraction of the compute, partly through these efficiency techniques.

Q: Why did Meta step back from open-source leadership with Llama?

A: Meta shifted investment toward closed models as it races OpenAI, Anthropic, and Google toward artificial general intelligence. Building frontier capabilities requires protecting competitive advantages. The company calculated that the superintelligence race matters more than open-source community leadership. Chinese competitors filled the vacuum Meta left behind.

Q: What is Hugging Face and why do its download numbers matter?

A: Hugging Face is the largest repository for AI models, hosting over 900,000 models that developers download for research and production use. It functions like GitHub for machine learning. Download statistics indicate which models developers actually grab and experiment with, though downloads don't directly measure production deployment or commercial adoption.