💡 TL;DR - The 30-Second Version

👉 Anthropic CEO Dario Amodei told staff via Slack the company will pursue investments from UAE and Qatar, reversing its ethical stance against authoritarian funding.

📊 The company rejected Saudi money in 2024 over national security concerns but now chases $100+ billion in available Middle East capital.

🏃‍♂️ OpenAI's $500 billion UAE data center project and other competitor moves created pressure that forced Anthropic to abandon its principles.

🤝 Trump's recent Middle East tour included tech leaders like Musk and Altman meeting Saudi royals, but Anthropic executives were notably absent.

💰 Amodei admits the move will enrich "dictators" but claims staying competitive requires access to Gulf capital pools.

🌍 The decision signals that AI's leading "safety-first" company chose market position over its stated democratic values when money was on the line.

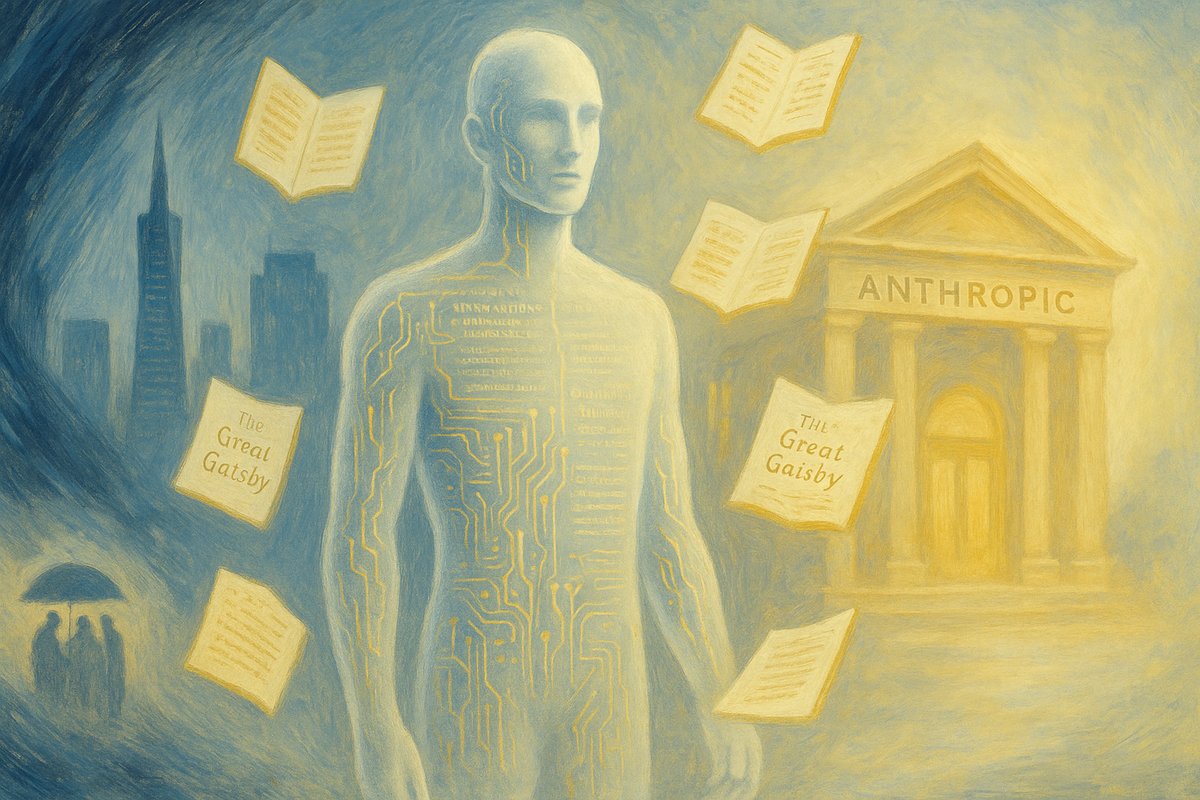

A leaked internal message reveals what happens when AI companies choose money over morals. Anthropic CEO Dario Amodei told staff Sunday that the company will pursue investments from UAE and Qatar—despite previously calling such moves dangerous to democracy.

The Slack memo, obtained by WIRED, shows Amodei wrestling with a decision that contradicts everything his company claimed to stand for. "This is a real downside and I'm not thrilled about it," he wrote about potentially enriching "dictators." But he concluded that running a business on the principle of "No bad person should ever benefit from our success" isn't realistic.

The announcement marks a stunning reversal for a company that built its brand on AI safety and ethical development. Just last year, Anthropic rejected Saudi funding over national security concerns. Now it is actively courting the kind of authoritarian regimes it once warned against.

The Race Changed Everything

Amodei's memo reads like a surrender document in the AI arms race. He admits Anthropic got outmaneuvered by competitors who grabbed Gulf state money first. "Unfortunately, having failed to prevent that dynamic at the collective level, we're now stuck with it as an individual company," he wrote.

OpenAI announced a $500 billion data center project with UAE backing in January. Four months later, they committed to building AI infrastructure in Abu Dhabi. Meanwhile, Anthropic watched from the sidelines, clinging to principles that apparently had an expiration date.

"There is a truly giant amount of capital in the Middle East, easily $100B or more," Amodei noted. "If we want to stay on the frontier, we gain a very large benefit from having access to this capital. Without it, it is substantially harder to stay on the frontier."

Translation: everyone else took the money, so now we have to.

From Principles to Pragmatism

The reversal stings because Anthropic made such a show of being different. In his essay "Machines of Loving Grace," Amodei argued that "democracies need to be able to set the terms by which powerful AI is brought into the world" to avoid being "overpowered by authoritarians."

That was then. Now Amodei describes a "race to the bottom where companies gain a lot of advantage by getting deeper and deeper in bed with the Middle East." His solution isn't to resist—it's to find a way to join that race while looking slightly less objectionable.

The company plans to pursue "narrowly scoped, purely financial investment" to avoid giving foreign investors operational leverage. It's a distinction that might matter in boardrooms but probably won't fool anyone watching from the outside.

Damage Control Mode

Amodei sees the optics problem coming. His memo has a whole section called "Comms Headaches" where he gets ahead of the hypocrisy accusations. He calls critics "very stupid" and says they don't understand the real issues.

Here's his defense: we wanted everyone to avoid this mess, but they didn't listen. Now that competitors have grabbed the money, we can't stay pure. In his words: "It's perfectly consistent to advocate for a policy of 'No one is allowed to do x,' but then if that policy fails and everyone else does X, to reluctantly do x ourselves."

That sounds like someone who got caught breaking his own rules. It's rationalization dressed up as strategy. The memo shows Amodei wants Gulf money without Gulf criticism. He wants to compete while keeping his moral high ground.

Good luck with that.

The Real Competition

This isn't really about Anthropic versus OpenAI. It's about American AI companies competing against each other for access to sovereign wealth that doesn't ask awkward questions about governance or human rights.

The irony runs deep. These companies spent years warning about AI falling into authoritarian hands. Now they're lining up to put it there themselves—as long as the check clears.

President Trump's recent Middle East tour, complete with tech executives like Elon Musk, Sam Altman, and Jensen Huang meeting with Saudi Arabia's crown prince, shows how the landscape has shifted. Notably, Anthropic's leadership skipped that particular photo op. They preferred to make their deal privately, through leaked Slack messages.

Amodei tries to frame this as serving customers in the region, claiming it could have "important benefits for the world including improving human health, aiding economic development." But that's not what this memo is about. This is about accessing capital, not serving customers.

When Ethics Become Expensive

The memo exposes the fundamental tension in AI development. Building frontier models requires massive capital. Massive capital increasingly comes with strings attached—or from sources that don't align with stated values.

Anthropic's decision reveals what happens when ethical positions become competitive disadvantages. You can stand on principle right up until standing costs you market position. Then, apparently, you write a memo explaining why principles were always more flexible than advertised.

The company isn't alone in this calculation. But they are uniquely positioned as the AI safety company that decided safety was worth less than staying competitive.

Why this matters:

• When AI's leading "ethical" company abandons its principles for cash, it signals that the race for AI dominance has officially entered its ruthless phase—where money talks louder than values.

• This memo accidentally reveals the real AI arms race isn't between countries, but between companies willing to compromise their stated beliefs to access the deepest pockets available.

❓ Frequently Asked Questions

Q: How much money could Anthropic raise from Gulf states?

A: Amodei estimates $100+ billion in available Middle East capital. While he doesn't specify Anthropic's target, he mentions getting "many billions" without compromising on data center restrictions. For context, Anthropic raised $2.75 billion from Amazon in 2024.

Q: What does "narrowly scoped, purely financial investment" actually mean?

A: Anthropic wants Gulf money without giving investors control over operations or access to technology. This means no board seats, no say in AI development, and no data centers in authoritarian countries. Just cash for equity stakes.

Q: How does this compare to OpenAI's Gulf deals?

A: OpenAI went much further. Their $500 billion Stargate project involves UAE state firm MGX building actual data centers. They're also constructing AI infrastructure in Abu Dhabi. Anthropic claims it won't allow such arrangements.

Q: Why did Anthropic reject Saudi money but accept UAE/Qatar funding?

A: The memo doesn't explain the distinction. It only mentions that Saudi money raised "national security concerns" in 2024. UAE and Qatar may be seen as less risky, or Anthropic's risk tolerance may have changed as competitors grabbed Gulf money first.

Q: Which other AI companies have taken Middle East investments?

A: Besides OpenAI's UAE partnerships, the memo references a "race to the bottom" but doesn't name specific companies. A UAE firm already owns nearly 8% of Anthropic through FTX bankruptcy proceedings, worth about $500 million in 2024.

Q: What are the national security risks Amodei mentions?

A: Amodei warns that AI is "likely to be the most powerful technology in the world" and authoritarian governments could use it for "military dominance or leverage over democratic countries." The main risk is losing control of AI development to hostile states.

Q: When might this Gulf funding actually happen?

A: The memo doesn't provide a timeline. Amodei describes it as exploring opportunities rather than completed deals. He mentions testing "how much we can get" from Gulf investors without compromising on operational independence.

Q: How does this change Anthropic's AI safety mission?

A: Officially, it doesn't. Anthropic says it will maintain usage policies and avoid building Gulf data centers. But the memo shows the company prioritizing competitive position over ethical concerns when forced to choose between them.