Simon Willison has been saying it for months. Claude Code, the terminal-based agent that Anthropic marketed to programmers, was never really a coding tool. It was a general-purpose agent that happened to live in a developer's interface. Hand it a task that requires typing commands into a computer, and it does the task. The coding part was incidental.

Today, Anthropic confirmed what Willison suspected. They launched Cowork, a version of Claude Code rebuilt for everyone else. Same underlying architecture. Same agentic capabilities. New wrapper. The technical barrier that kept this power confined to developers just came down.

The Breakdown

• Cowork is Claude Code repackaged for non-developers—same agentic power, friendlier interface, Mac-only for now

• Anthropic sandboxes the agent using Apple's Virtualization Framework, treating Claude like untrusted code

• Prompt injection remains unsolved—Anthropic admits their defenses can't guarantee protection

• Access requires Claude Max ($100-200/month)—Anthropic is refining the product before enterprise rollout

The developer preview that wasn't

Cowork runs inside the Claude macOS desktop app. You point it at a folder on your computer. You tell it what you want done. Then you walk away.

Anthropic's examples sound mundane. Your downloads folder is a mess—Claude renames everything into something readable. You've got a pile of receipt screenshots—Claude pulls the expense data into a spreadsheet. Scattered notes from three meetings—Claude turns them into a first draft. These tasks share one grim reality: they are the digital drudgery we usually hire humans to ignore.

The interface hides the complexity. A sidebar with three tabs: Chat, Code, Cowork. Pick a folder. Type your instructions. Claude makes a plan and executes it, updating you along the way. The fan doesn't spin up. The interface doesn't lock. It just sits there, chewing through API credits in silence while you make coffee. No terminal commands. No configuration files. No wondering if you accidentally typed rm -rf / while distracted.

But the mundane examples obscure what's actually happening under the hood. Willison tested it immediately after launch by pointing Cowork at his blog drafts folder and asking it to identify unpublished posts ready for publication. Claude Code ran 44 separate web searches against his website, cross-referencing each draft against published content. It then sorted the results by readiness and returned a prioritized list.

That's not file organization. That's a research assistant who never gets tired, never complains about repetitive tasks, and works at the speed of API calls.

What the sandbox reveals

Here's a detail that tells you everything about how Anthropic is thinking about this. When Willison inspected the file paths Claude was accessing, he noticed something odd. The path wasn't pointing to his actual filesystem. It was /sessions/zealous-bold-ramanujan/mnt/blog-drafts. That naming convention—an adjective-adjective-name pattern followed by a mount point—screams containerization.

Anthropic confirmed it indirectly. Cowork can only access files you explicitly grant it access to. The implementation uses Apple's Virtualization Framework to boot a custom Linux environment. Your folder gets mounted into that sandbox. Claude operates inside the container. If it decides to rm -rf /, it destroys its own sandbox, not your documents.

The architecture tells you two things. First, Anthropic genuinely worried about what happens when you give an AI agent write access to a computer. Second, they solved it by treating Claude like untrusted code running in isolation. The same approach developers use for hostile applications.

For a company that positions itself as the safety-conscious lab, this is consistent. They didn't trust their own agent enough to give it direct filesystem access.

The buried warning

And then there's the prompt injection problem. Anthropic addressed it directly in their announcement, which is unusual. Most AI companies mention security concerns in footnotes or bury them in help documentation. Anthropic put it in the blog post.

"You should also be aware of the risk of 'prompt injections': attempts by attackers to alter Claude's plans through content it might encounter on the internet." They go on to note that agent safety "is still an active area of development in the industry."

Translation: we built defenses, but we can't promise they work.

OpenAI said something similar last month. Prompt injection will remain an unsolvable problem for AI agents. The best developers can do is minimize the attack surface.

What does this mean in practice? If you use Cowork with Claude's Chrome extension—which Anthropic explicitly supports—Claude can browse websites on your behalf. Any website it visits could contain hidden instructions designed to hijack its behavior. A malicious page could tell Claude to exfiltrate your files to a server, delete your documents, or open a reverse shell.

Anthropic's help documentation advises users to "monitor Claude for suspicious actions that may indicate prompt injection."

That's not a security model. That's hoping users notice when things go wrong.

Get Implicator.ai in your inbox

Strategic AI news from San Francisco. No hype, no "AI will change everything" throat clearing. Just what moved, who won, and why it matters. Daily at 6am PST.

No spam. Unsubscribe anytime.

The empty lot

Anthropic isn't launching Cowork because the technology is ready. They're launching it because the market won't wait.

The consumer AI assistant market looks like a construction site where everyone put up "Coming Soon" signs but nobody broke ground. Microsoft has been pushing Copilot for three years. Adoption remains weak. Google has Gemini integrated across its productivity suite. OpenAI burned the "ChatGPT Agent" name on a browser automation experiment that users largely ignored. Lots of announcements. Lots of promises. No building.

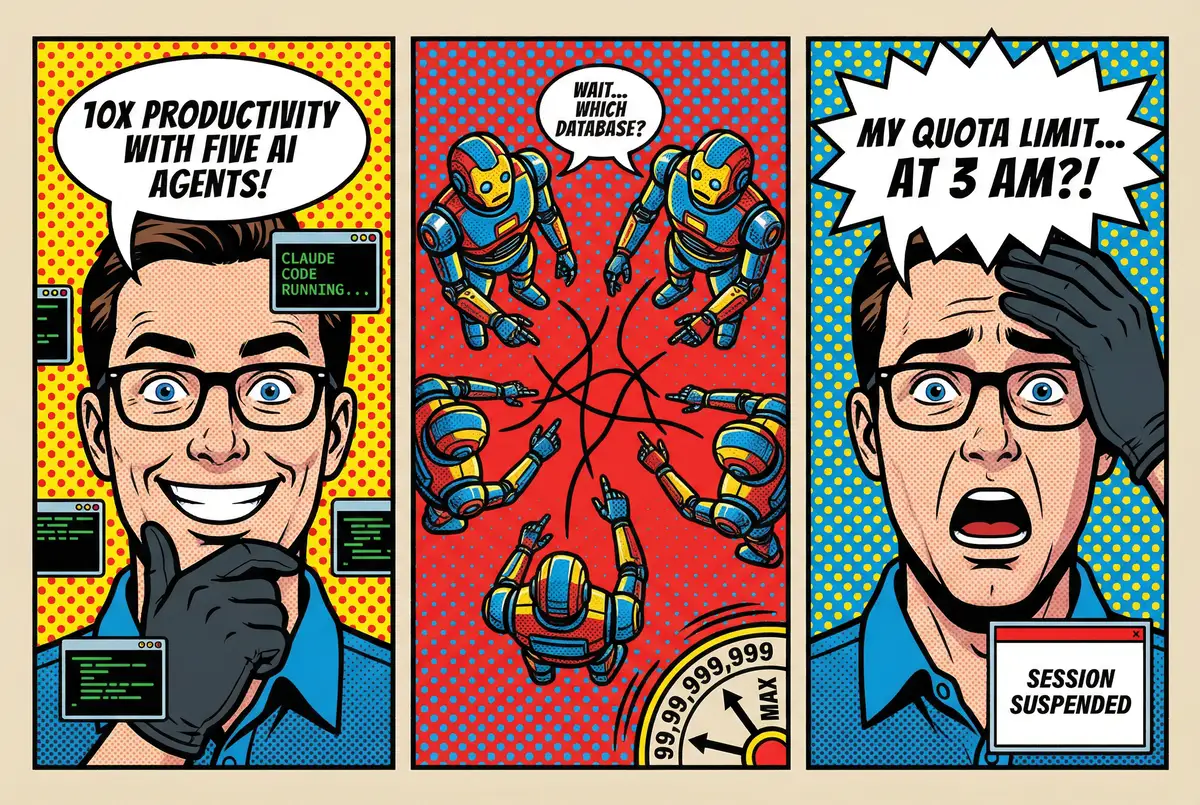

Claude Code changed the calculus. Developers adopted it fast and hard. They started using it for everything—not just coding, but research, file management, scheduling, and writing. Anthropic watched their coding tool become a general productivity agent despite the terminal interface. They didn't just have a sign. They had a working foundation.

Cowork is Anthropic parking a bulldozer on that empty lot and starting to dig. Same agent, friendlier packaging. The $183 billion valuation (potentially rising to $350 billion after their next funding round) depends on being the first to actually build something people use.

The timing matters. Anthropic announced a $14 billion funding round in September. The Wall Street Journal reported discussions about another round that would nearly double their valuation. When you're raising money at that pace, you need to show that your product can reach mainstream users, not just programmers who already live in terminals.

The access gradient

For now, Cowork is exclusive. You need a Claude Max subscription—$100 or $200 per month—and a Mac. No Windows. No mobile. No sync across devices. Just the macOS app with a sandbox folder.

For the Windows-dominated corporate world—precisely the people who would pay for "Cowork"—this is a look-but-don't-touch moment. Anthropic is refining the weapon in a clean room before handing it to the messy enterprise. High-price subscribers are less likely to sue if something goes wrong. The Mac-only restriction limits the attack surface while Anthropic collects data on real-world usage. The research preview label provides legal cover.

Everyone else can join a waitlist.

Anthropic promises improvements based on early feedback: Windows support, cross-device sync, better safety features. The feature list reads like a roadmap for mass-market release. But they're moving carefully. The gap between "research preview for premium subscribers" and "available to everyone" will depend on what breaks during testing.

Daily at 6am PST

The AI news your competitors read first

No breathless headlines. No "everything is changing" filler. Just who moved, what broke, and why it matters.

Free. No spam. Unsubscribe anytime.

What Cowork actually changes

Willison put it best: Claude Code was always a general agent disguised as a developer tool. The disguise served a purpose. Developers understand what a terminal does. They know how to recover from mistakes. They accept that running code carries risks.

Regular users don't have that background. They click things and expect them to work. When an AI assistant offers to organize their files, they assume it won't delete anything important. The sandbox architecture addresses the catastrophic risks. The prompt injection problem addresses... nothing, really. Anthropic admits it.

But the capability itself is genuine. Point Claude at a folder and give it a task, and it will attempt that task with more persistence and sophistication than previous consumer AI tools. The Chrome integration means it can browse for context. The connectors let it pull data from external services. The skills framework lets it create documents and presentations.

This is what the AI labs have been building toward for years. Not a chatbot that answers questions. An agent that does work.

Cowork represents a bet that users will accept the tradeoffs. The power is real. The risks are real. Anthropic is hoping the power wins.

They have the same filesystem access Claude Code always had, now mounted in a container. They have the same prompt injection vulnerabilities. They have the same agentic loop running multiple steps without intervention.

Nothing changed under the hood. For eleven months, this power belonged to developers. Starting today, it's anyone with a Mac and $100 a month.

The sandbox boots. The folder mounts. The agent waits. The safety of the system now rests on a user who just wants their receipts organized and doesn't care how the sausage gets made.

Frequently Asked Questions

Q: What exactly is Claude Cowork?

A: Cowork is Claude Code repackaged for non-developers. It's the same agentic AI that runs terminal commands and automates tasks, but with a friendlier interface inside the Claude macOS app. You point it at a folder and tell it what to do—organize files, extract data from screenshots, draft documents—and it executes autonomously.

Q: How much does Cowork cost?

A: Cowork requires a Claude Max subscription at $100 or $200 per month. It's currently only available on macOS—no Windows, no mobile, no web. Everyone else can join a waitlist. Anthropic plans to expand access after gathering feedback from premium subscribers.

Q: Can Cowork delete my files or damage my computer?

A: Anthropic sandboxes Cowork using Apple's Virtualization Framework. Your folder gets mounted into an isolated Linux container. If Claude executes a destructive command, it destroys the sandbox—not your actual filesystem. But prompt injection attacks remain a risk if Claude browses malicious websites.

Q: What is prompt injection and why should I care?

A: Prompt injection is when hidden instructions on a website or in a file hijack an AI agent's behavior. If Cowork visits a malicious page while browsing for you, that page could tell Claude to steal your data or act against your interests. Anthropic admits this problem remains unsolved industry-wide.

Q: How is Cowork different from Microsoft Copilot or Google Gemini?

A: Cowork runs locally on your Mac with direct filesystem access, while Copilot and Gemini operate primarily through cloud-based integrations with Microsoft 365 and Google Workspace. Cowork can execute terminal commands, browse with Chrome, and work autonomously for extended periods—more like a developer tool than a productivity assistant.