A single software update enabled the change.

In the release notes of macOS 26.2 beta, issued in late November 2025, Apple included a technical feature that transforms consumer hardware into something resembling enterprise AI infrastructure. RDMA over Thunderbolt. Three words that mean nothing to most users, but they just slashed the cost of running frontier-class AI models locally by roughly 94%.

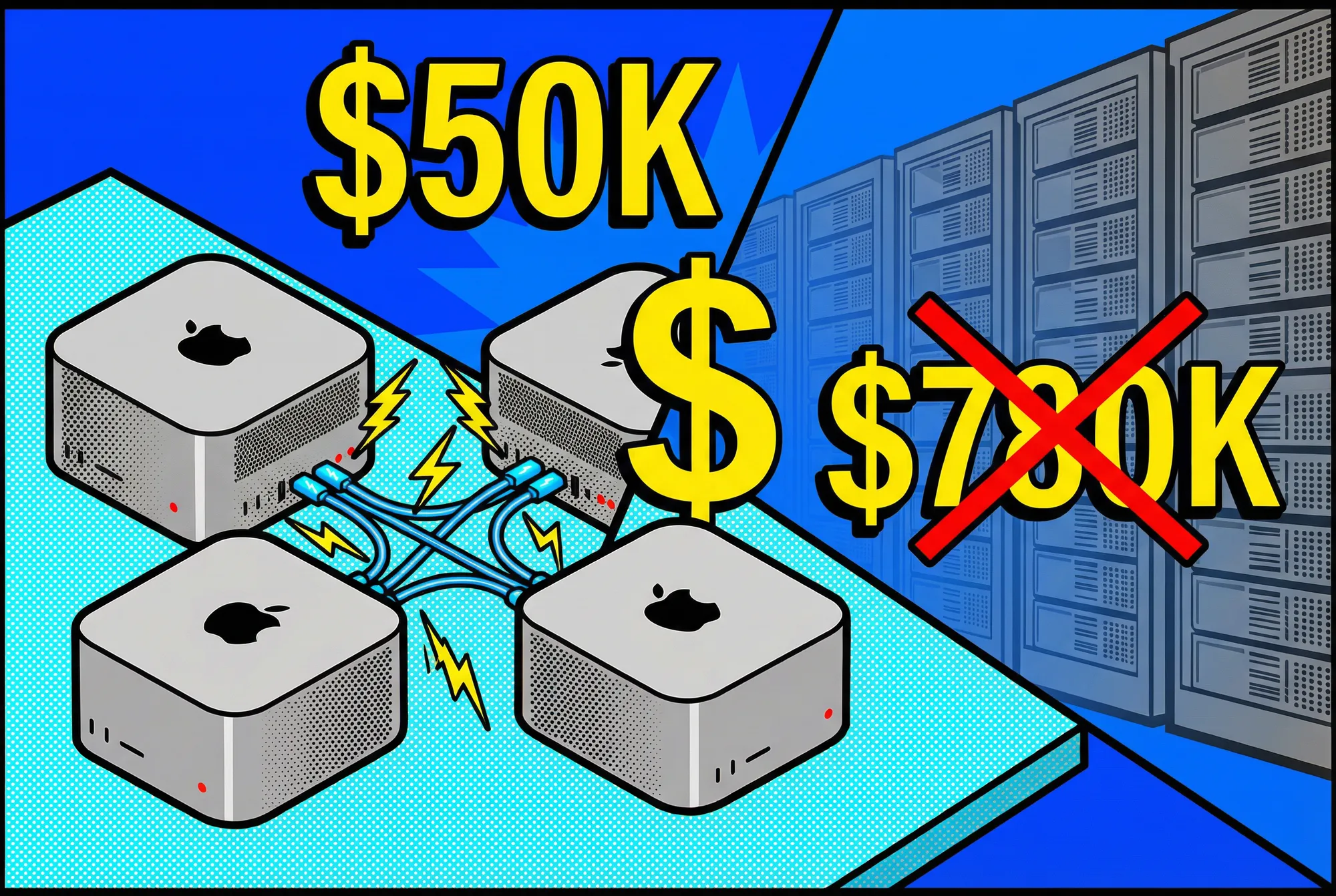

Four Mac Studios with M3 Ultra chips, 512GB of unified memory each, run about $50,000 total. Hook them together with Thunderbolt 5 cables in a mesh configuration, enable RDMA through recovery mode, install EXO 1.0, and you can run DeepSeek V3.1 at 24-26 tokens per second. That's a 671-billion-parameter model. Over 700 gigabytes just to load it. Want the same capability from NVIDIA? You're looking at H100 hardware north of $780,000, and that's before anyone builds out the racks and cooling to make those GPUs actually function.

Apple said nothing about this publicly. No keynote slide. They enabled a networking protocol that enterprise data centers have relied on for years and waited for enthusiasts to figure out what became possible.

Quick Summary

• macOS 26.2 beta enables RDMA over Thunderbolt, cutting inter-machine latency from 300 microseconds to 3 and unlocking tensor parallelism

• A $50,000 four-Mac cluster now runs 700GB models like DeepSeek V3.1; comparable NVIDIA hardware starts at $780,000

• Dense models show near-linear scaling across nodes; mixture-of-experts architectures scale poorly due to communication overhead

• Requires Thunderbolt 5 (M4 Pro/Max or M3 Ultra), EXO 1.0 software, and manual RDMA activation through recovery mode

The Latency Problem Nobody Could Solve

In early 2025, clustering Mac Studios for AI produced counterintuitive results. More machines made inference slower. NetworkChuck published a video in March showing 91% performance degradation when he clustered five Mac Studios together. Hardware worked fine. Software worked fine. The networking wrecked everything.

Here's why. When you split an AI model across multiple machines, those machines talk constantly during inference. Every single token requires information bouncing between systems. Standard Thunderbolt networking introduced roughly 300 microseconds of delay per message. That sounds fast until you realize what it forces.

At 300 microseconds per exchange, clusters get stuck with pipeline parallelism. Sequential processing. Machine one calculates its layers, stops, ships results to machine two. Machine two receives, calculates, stops, ships to machine three. A relay race where runners freeze until the baton lands in their hand. Only one machine actually computes at any moment. The other three sit idle, expensive space heaters.

Pipeline parallelism worked for capacity. You could load models too big for one machine. Speed? Forget it. The cluster was just an elaborate way to run models slowly.

RDMA blows up that bottleneck. Remote Direct Memory Access cuts out the traditional networking stack: no TCP/IP overhead, no CPU processing the packets first. GPU memory talks directly to GPU memory across the Thunderbolt cables. Apple's implementation drops latency from 300 microseconds down to 3. A hundred times lower.

With latency that low, you can run tensor parallelism. All four machines working on every layer simultaneously, each handling 25% of the math, recombining results, pushing forward together. The cluster actually clusters.
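A rough back-of-the-envelope sketch shows why the drop from 300 to 3 microseconds changes what is practical. It assumes an 80-layer model (the layer count of Llama 3.3 70B) and two synchronization points per layer under tensor parallelism, a common rule of thumb rather than anything measured on EXO or MLX. It counts only fixed per-message latency and ignores transfer time and compute.

```python
def sync_overhead_ms(layers: int, syncs_per_layer: int, latency_us: float) -> float:
    """Fixed latency cost per generated token, in milliseconds."""
    return layers * syncs_per_layer * latency_us / 1000.0

LAYERS = 80            # assumption: Llama 3.3 70B layer count
SYNCS_PER_LAYER = 2    # assumption: one sync after attention, one after the MLP

before = sync_overhead_ms(LAYERS, SYNCS_PER_LAYER, 300.0)  # standard Thunderbolt
after = sync_overhead_ms(LAYERS, SYNCS_PER_LAYER, 3.0)     # RDMA over Thunderbolt

print(f"standard Thunderbolt: {before:.2f} ms/token spent on latency alone")
print(f"RDMA:                 {after:.2f} ms/token spent on latency alone")
print(f"latency-only ceiling: {1000 / before:.1f} vs {1000 / after:.0f} tokens/s")
```

Under these assumptions, latency alone costs 48 ms per token at 300 microseconds, which caps throughput near 21 tokens per second before any math happens. At 3 microseconds the same cost falls below half a millisecond.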

What the Numbers Actually Show

Performance gains depend heavily on model architecture, and the pattern matters if you're planning hardware purchases.

Dense models scale almost linearly. Every parameter activates during inference, so the computation at each layer runs heavy. Communication overhead between machines gets buried under all that math. More nodes, more parallel calculation, faster output.

Mixture-of-experts models tell a different story. They activate maybe 5% of their parameters per token. Lighter computation, but the cross-machine chatter stays constant. Overhead that barely registers on dense models starts choking performance.

Llama 3.3 at 70 billion parameters, full FP16 precision, shows the ideal case. One Mac Studio gets about 5 tokens per second. Two machines with RDMA push to 9 tokens per second. Four machines hit 15-16 tokens per second, with time-to-first-token dropping from over two seconds to around one. That's roughly 3x faster on hardware that showed zero improvement from clustering earlier in the year.

Devstral 2 at 123 billion parameters, dense architecture built for coding tasks, scales the same way. Single node: 9.2 tokens per second. Four nodes over RDMA: 22 tokens per second. Bump the quantization from 4-bit to 6-bit and the single-node speed drops to 6.4 tokens per second, but four nodes recover to 17. Nearly triple. Heavier weights, more math per layer, better scaling.

Kimi K2 runs a trillion parameters total. The model needs 660 gigabytes just to load. No single consumer machine touches it. The cluster handles it at 28-35 tokens per second while pulling less than 500 watts.

DeepSeek V3.1 shows where mixture-of-experts hits the wall. 671 billion parameters, 8-bit quantization, over 700 gigabytes. Performance: 24-26 tokens per second. Fine for interactive use. But only 37 billion parameters activate per token, roughly 5% of the model. All that coordination overhead, not much computation to amortize it against.
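The memory figures quoted for these models can be sanity-checked with simple arithmetic. The sketch below estimates weight storage only, as parameters times bits per weight. It is a lower bound: KV cache, activations, and quantization metadata are not modeled, which is one plausible reason a reported footprint lands above the raw number.

```python
def weight_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (weights only)."""
    return params_billions * bits_per_weight / 8.0

# (parameters in billions, bits per weight)
models = {
    "Llama 3.3 70B @ FP16": (70, 16),
    "DeepSeek V3.1 671B @ 8-bit": (671, 8),
    "DeepSeek V3.1 671B @ 4-bit": (671, 4),
}

for name, (params_b, bits) in models.items():
    print(f"{name}: ~{weight_gb(params_b, bits):.0f} GB of weights")

# Share of DeepSeek V3.1 parameters active per token, from the figures above.
print(f"active fraction: {37 / 671:.1%}")
```

At 8-bit, 671 billion parameters come to about 671 GB of raw weights, consistent with a load size of over 700 GB once runtime overhead is added.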

Qwen 3 at 235 billion parameters demonstrates the limit directly. One node gets 30 tokens per second. Four nodes manage 37. Improvement exists. Nothing like the dense model scaling.
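One way to compare these results is scaling efficiency: the measured speedup divided by the number of nodes. The sketch below uses only the throughput figures quoted in this section. The function names are illustrative, and the Llama entry takes the upper end of the reported 15-16 range.

```python
NODES = 4

# (single-node tokens/s, four-node tokens/s), copied from the figures above.
results = {
    "Llama 3.3 70B (dense, FP16)": (5.0, 16.0),
    "Devstral 2 123B (dense, 4-bit)": (9.2, 22.0),
    "Devstral 2 123B (dense, 6-bit)": (6.4, 17.0),
    "Qwen 3 235B (mixture-of-experts)": (30.0, 37.0),
}

def speedup(single: float, multi: float) -> float:
    """How many times faster the cluster is than one machine."""
    return multi / single

def efficiency(single: float, multi: float, nodes: int) -> float:
    """Fraction of ideal linear scaling actually achieved."""
    return speedup(single, multi) / nodes

for name, (single, multi) in results.items():
    s = speedup(single, multi)
    e = efficiency(single, multi, NODES)
    print(f"{name}: {s:.2f}x speedup, {e:.0%} of ideal")
```

By this measure Llama 3.3 reaches about 80% of ideal four-node scaling, while Qwen 3 lands near 31%.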

Power consumption stays remarkably low throughout. Dense model inference on four Macs pulls around 600 watts total. Less than a single NVIDIA H200 at full load. Mixture-of-experts workloads drop to 480 watts. The whole cluster runs within the electrical budget of a gaming PC.

Three Technologies Had to Converge

Apple enabling RDMA wasn't enough on its own. Three pieces had to land within weeks of each other.

macOS Tahoe 26.2 shipped in beta November 18, 2025, with RDMA over Thunderbolt buried in the feature list. Works only on Thunderbolt 5, which means M4 Pro, M4 Max, or M3 Ultra. Users have to boot into recovery mode and flip the setting manually. Not exactly plug-and-play.

MLX, Apple's machine learning framework for Apple Silicon, integrated RDMA into its distributed computing layer around the same time. The MLX team apparently worked directly with whoever built the networking changes. Without this piece, the hardware capability would sit there doing nothing useful.

EXO Labs dropped version 1.0 of their clustering software mid-November after months of apparent abandonment. Project looked dead. Commits stopped. Issues piled up unanswered. Then they resurfaced with a native Mac app, complete rewrite, tight hooks into Apple's RDMA stack. Model sharding, node discovery, API exposure, all automatic. Setup takes minutes instead of hours.

Pull any one of these and the whole thing falls apart. RDMA without MLX means no tensor parallelism. MLX without EXO means manual configuration that almost nobody would attempt. EXO without RDMA means the same disappointing performance that killed interest in Mac clustering back in March.

The NVIDIA Comparison Requires Context

Stacking a $50,000 Mac cluster against $780,000 in H100 hardware demands some honesty about what you're actually comparing.

NVIDIA's software ecosystem is years ahead. CUDA has nearly two decades of optimization work behind it. Every major AI framework, training pipeline, production deployment system assumes NVIDIA somewhere in the stack. The Mac cluster runs inference well enough. Training? Impractical. Production deployment at scale? The tooling doesn't exist.

Raw performance comparison: a single H200 GPU hits roughly 50 tokens per second on Llama 3.3 70B at FP8 precision. Half the precision the Mac cluster uses. The four-Mac cluster at FP16 manages 15-16 tokens per second. About a third of the speed. But one H200 runs $30,000 before you build anything around it. Server chassis, cooling, datacenter power circuits. It pulls more wattage alone than the entire Mac cluster.

That $780,000 figure assumes 26 H100s at 80GB VRAM each to match 2TB of unified memory. Ignores the NVLink fabric connecting them. Ignores the server nodes housing them. Ignores networking gear, power delivery, physical space. Real deployment costs run higher.
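The arithmetic behind that figure can be reproduced in a few lines. This sketch assumes a per-GPU price of $30,000, the upper end of the H100 range cited in this article and the value implied by 26 GPUs totaling $780,000. It covers GPUs only, with none of the surrounding infrastructure.

```python
import math

def gpus_needed(target_gb: float, gb_per_gpu: float) -> int:
    """Smallest whole number of GPUs whose combined memory meets the target."""
    return math.ceil(target_gb / gb_per_gpu)

CLUSTER_MEMORY_GB = 4 * 512   # four Mac Studios at 512GB each
H100_VRAM_GB = 80
H100_PRICE_USD = 30_000       # assumption: upper end of the cited price range
MAC_CLUSTER_USD = 50_000

count = gpus_needed(CLUSTER_MEMORY_GB, H100_VRAM_GB)
gpu_cost = count * H100_PRICE_USD
savings = 1 - MAC_CLUSTER_USD / gpu_cost

print(f"H100s needed to match {CLUSTER_MEMORY_GB} GB: {count}")
print(f"GPU cost alone: ${gpu_cost:,}")
print(f"Mac cluster cost reduction: {savings:.1%}")
```

Matching 2,048 GB takes 26 cards, and at the assumed price the Mac cluster comes in about 93.6% cheaper, which is where the roughly 94% headline number comes from.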

Apple's unified memory creates a different performance profile. Memory bandwidth per GPU core runs lower than NVIDIA's HBM3, but raw capacity per dollar tilts hard the other way. You can walk into an Apple Store and buy a 512GB Mac Studio for around $12,000. Try getting 80GB of HBM3 for that. NVIDIA's H100 runs $25,000-30,000, and you're still six times short on memory.

The honest framing: local inference at frontier model scale now costs what a midrange car does rather than what a house does. That shift matters for researchers, developers, small companies that want to experiment without cloud bills or API rate limits.

Tool integration lags badly. Coding assistants like Cursor and Claude Code expect OpenAI-compatible APIs. EXO exposes one, and it mostly works, then breaks on edge cases nobody documented. You can chat with these models through the web interface. Plugging them into development workflows reliably? Not yet. The API handles basic completions but chokes on function calling, the feature that lets AI agents execute code and interact with external systems.
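For readers who want to probe the API themselves, here is a minimal sketch of a basic chat completion request in the standard OpenAI wire format, using only the Python standard library. The base URL and model name are placeholders, not EXO's documented defaults; substitute whatever address and model identifier your cluster reports.

```python
import json
import urllib.request

def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to an OpenAI-style /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's message text."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

# Hypothetical values for illustration only; nothing is sent here.
req = build_chat_request("http://localhost:8000", "example-model", "Hello")
print(req.full_url)
```

Calling `ask(...)` against a running cluster exercises the basic completion path. Function calling would need a `tools` field in the payload, which is the part reported to be unreliable.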

What This Means for Everyone Else

The $50,000 cluster sits at the extreme end. The underlying technology works on cheaper hardware with proportionally cheaper results.

Two Mac Studios at 256GB each run about $12,000 and give you 512GB of unified memory. Enough for most open-weight models at reasonable quantizations. Performance scales down accordingly, maybe 8-10 tokens per second on Llama 3.3 70B. That config handles DeepSeek V3.1 at 4-bit with room to spare.

Drop down another tier and you're looking at M4 Max Mac Studios. Two of them with 128GB each, about $8,000 out the door, 256GB combined. Plenty for Llama 3.3 70B at 4-bit quantization, Qwen 2.5 72B, the usual suspects in the coding model lineup. Ironically, your performance per dollar gets better as you spend less. You just lose the ability to run the really massive stuff.

The limitation that matters most: M4 Mac Minis lack Thunderbolt 5. The cheapest path into Apple Silicon clustering can't touch RDMA. Those machines still network through standard Thunderbolt, still suffer the latency penalties, still show minimal gains from adding nodes. Apple could theoretically enable RDMA on Thunderbolt 4 later. No indication they will.

Software stability remains a problem. NetworkChuck and Linus Tech Tips both documented crashes, failed model loads, hangs with no clear cause. EXO pushed dozens of patches across just a few days of testing. The beta label means something here. Production workloads face undefined failure modes and zero enterprise support.

Model selection stays constrained for now. EXO supports only pre-converted MLX models validated against their sharding code. No uploading arbitrary weights. The library covers major open-weight releases but misses fine-tuned variants and specialized architectures. Custom model support sits on the roadmap without a date attached.

In March, Mac clustering degraded performance by 91%. Now the same hardware architecture handles trillion-parameter models at conversational speed. Four Mac Studios on a desk, drawing somewhere between 480 and 600 watts depending on workload, running Kimi K2 at 28-35 tokens per second. Total hardware cost sits around $50,000. Getting equivalent capability from NVIDIA means writing a check for $780,000 or more, then finding somewhere to put all that equipment.

❓ Frequently Asked Questions

Q: Can I use M4 Mac Minis for this cluster setup?

A: No. M4 Mac Minis have Thunderbolt 4, which doesn't support RDMA. You need Thunderbolt 5, found only in M4 Pro, M4 Max, and M3 Ultra machines. Mac Minis can still cluster using standard Thunderbolt networking, but you'll hit the same latency problems that caused 91% performance degradation in early 2025 tests. Apple hasn't announced plans to enable RDMA on Thunderbolt 4.

Q: How do I physically connect the Mac Studios together?

A: Use Thunderbolt 5 cables in a mesh configuration where each Mac connects directly to every other Mac. For four machines, that's six cables total. You'll also need Ethernet connections to a switch for model downloads and network discovery. After cabling, boot each Mac into recovery mode and manually enable RDMA before installing EXO 1.0.
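The cable count follows from the full-mesh rule that every pair of machines gets its own link, which works out to n(n-1)/2 cables for n machines. A small sketch, with hypothetical machine names, enumerates the links and the ports each Mac must dedicate to the mesh:

```python
from itertools import combinations

def mesh_links(nodes: list[str]) -> list[tuple[str, str]]:
    """Every unordered pair of nodes, i.e. one cable per pair in a full mesh."""
    return list(combinations(nodes, 2))

macs = ["studio-1", "studio-2", "studio-3", "studio-4"]  # hypothetical names
links = mesh_links(macs)

for a, b in links:
    print(f"{a} <-> {b}")

print(f"cables needed: {len(links)}")
print(f"Thunderbolt 5 ports used per Mac: {len(macs) - 1}")
```

For four machines this lists six links and three mesh ports per Mac. A fifth machine would raise the count to ten cables and four ports each, so the mesh grows quickly.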

Q: Can I use this cluster with coding tools like Cursor or Claude Code?

A: Partially. EXO exposes an OpenAI-compatible API that handles basic chat completions. However, function calling breaks unpredictably, which means AI agents can't execute code or interact with external systems reliably. You can chat with models through EXO's web interface, but plugging into development workflows remains unreliable as of December 2025.

Q: Why do mixture-of-experts models like DeepSeek scale worse than dense models?

A: Communication overhead. Dense models activate every parameter per token, creating heavy computation that buries the cost of cross-machine chatter. Mixture-of-experts models activate only about 5% of parameters, so computation runs light while communication stays constant. DeepSeek V3.1 goes from 20 to 24 tokens per second when moving from two to four nodes. Llama 3.3, a dense model, jumps from 9 to 16.

Q: Is this setup stable enough to run production workloads?

A: No. The software remains in beta with documented crashes, failed model loads, and unexplained hangs. EXO pushed dozens of patches during testing. There's no enterprise support, no SLA, and undefined failure modes. Use it for experimentation and development, not customer-facing applications. Stability should improve as macOS 26.2 and EXO mature past their current beta states.
