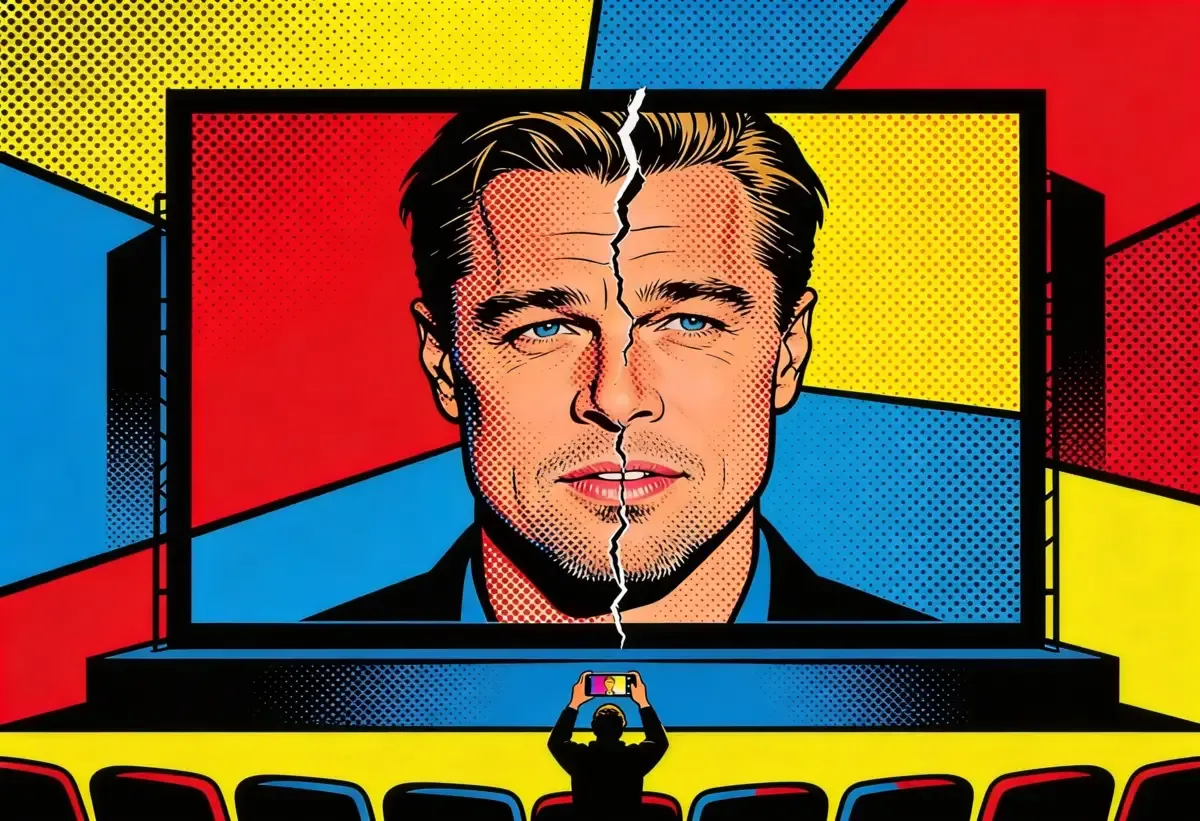

ByteDance's new AI video generator Seedance 2.0 went viral this week with clips that reproduce Hollywood intellectual property in startling detail, Reuters reported. Users generated a Tom Cruise versus Brad Pitt deepfake fight, Avengers: Endgame remixes, and a Kim Kardashian-Ye palace drama in Mandarin that racked up around a million Weibo views.

The model launched in limited beta over the weekend and was officially unveiled on Thursday. ByteDance separately suspended a feature that could clone a person's voice from nothing more than a face photograph, after testers found it produced audio "nearly identical" to real voices without any voice data. ByteDance said in a blog post that Seedance 2.0 processes text, images, audio, and video simultaneously, generating clips up to 15 seconds long with synchronized sound. Users can feed the system up to nine reference images, three video clips, and three audio tracks. It assembles them into scenes that follow physical laws, maintain character consistency across shots, and obey cinematic logic. Swiss consultancy CTOL called it the "most advanced AI video generation model available," claiming it outperforms both OpenAI's Sora 2 and Google's Veo 3.1 in practical testing.

Previous AI video generators felt like slot machines. You typed a prompt, pulled the lever, and hoped for something coherent. Seedance 2.0 offers something closer to a director's chair. Analysts at Chinese investment firm Kaiyuan Securities used that phrase, and the distinction matters more than a marketing claim usually does. ByteDance trained the model on the massive video library of Douyin, the Chinese version of TikTok, giving it a feel for human movement and facial expression that shows even in mundane footage, not just in the flashy action sequences. Output exports at 2K resolution, 30 percent faster than version 1.5.

Character consistency is the part that makes industry people nervous. Earlier video generators could produce a face, but that face would drift between shots: hair changing color, jaw shifting shape, clothes mutating between frames. Seedance holds a character steady across an entire clip. Pair that with physical accuracy (figure skaters completing mid-air spins and sticking landings in a demo ByteDance shared) and you start to see why some observers called this the first tool that could plausibly feed into a professional production pipeline.

Right now, you can only use it in China, through ByteDance's Jimeng AI platform, the Doubao AI assistant, or the CapCut video editor. No word on a global rollout. With TikTok's ownership drama still playing out in the United States, don't expect one soon.

So the internet got the director's chair. The first thing everyone filmed was other people's movies.

The copyright alarm

Within days of the beta launch, users were pulling copyrighted characters into their videos. A Tom Cruise versus Brad Pitt brawl. Optimus Prime fighting Godzilla. Rachel and Joey from Friends, recast as otters. Disney, Warner Bros. Discovery, and Paramount all had IP showing up in feeds they didn't control. Chinese state media amplified the output. Global Times highlighted work by the X user el.cine, who posted a Captain America fight sequence. Deadline reported that ByteDance did not respond to a request for comment, and that Disney, Warner Bros., and Paramount were also contacted.

The Breakdown

• ByteDance's Seedance 2.0 generates 15-second video clips from text, images, audio, and video inputs with character consistency across shots

• Viral deepfakes using Hollywood IP from Disney, Warner Bros., and Paramount spread within days of beta launch

• ByteDance suspended a face-to-voice cloning feature after testers found it could replicate voices from photographs alone

• Seedance outputs are watermark-free, unlike OpenAI's Sora 2 and Google's Veo 3.1, complicating copyright enforcement

Peter Yang, a Roblox product manager who follows AI tools, watched the Cruise versus Pitt fight and didn't mince words on X. "Everything I've seen from this model (Seedance 2) is a copyright violation." Hard to argue.

Deadpool & Wolverine co-writer Rhett Reese went further. The Pitt versus Cruise video left him "shook," he wrote on X, and he wasn't being flippant about it. "I was blown away by the Pitt v Cruise video because it is so professional. That's exactly why I'm scared. My glass half empty view is that Hollywood is about to be revolutionized/decimated."

Martial artist and actor Scott Adkins, who has appeared in John Wick: Chapter 4, discovered his own likeness in a Seedance-generated video he had nothing to do with. He is far from the only performer whose face showed up in someone else's AI project this week.

Rick & Morty writer Heather Anne Campbell offered a cooler read on Bluesky. Give people infinite creative power and they keep producing fanfiction, she wrote. "Almost like the original ideas are the hardest part." The observation cuts both ways. If you can render a photorealistic Avengers scene in your living room but can't think beyond remixing an existing franchise, maybe the creative moat is wider than the technology suggests. Studios are not going to find that reassuring while their characters appear in unauthorized AI video with no watermark attached.

That watermark detail is worth pausing on. Sora 2 applies visible watermarks to its output. Veo 3.1 embeds Google's SynthID metadata tags. Seedance 2.0 produces clips that are, in ByteDance's words, "completely watermark-free." For rights holders trying to identify and flag unauthorized reproductions of their IP, that is not a footnote.

A voice from a face

Three days before the official launch, ByteDance quietly pulled one of Seedance 2.0's features. The model had shipped with the ability to generate a person's voice from a face photograph alone. No voice samples. No authorization needed.

Tech media founder Pan Tianhong ran the experiment on himself. He uploaded a personal photo and the system produced audio he described as "nearly identical to his real voice, without using any voice samples or authorized data." ByteDance disabled the feature once the discovery surfaced publicly.

The science isn't speculative. Research projects like Foice have examined voice generation from facial images as an attack vector against voiceprint authentication systems. Skull structure, jaw size, sinus cavities, neck length, all of it shapes how a person sounds. All of it is visible, or at least inferable, from a good photograph. ByteDance built a tool that did this well enough to be practical, and pulled it once it realized what that meant.

Acting fast counts in the company's favor. But the incident says something uncomfortable about the pace of AI development right now. ByteDance set out to build a video generator. Somewhere along the way, it also built a voice cloning tool that worked from photographs, and nobody inside the company flagged the risk before the beta shipped. The capabilities outran the understanding of what those capabilities could do.

Beijing wants this to be the next DeepSeek

Chinese state media leaned in hard on the framing. Global Times wrote in an editorial on Wednesday that Seedance 2.0 and similar innovations had given "rise to a wave of admiration for China within Silicon Valley." Beijing Daily ran a Weibo hashtag, "from DeepSeek to Seedance, China's AI has succeeded." Tens of millions of clicks followed.

The comparison is not accidental and not subtle. A year ago, DeepSeek's R1 reasoning model rattled Wall Street and briefly wiped roughly a trillion dollars from US tech stock valuations. That shock came from a text-based model, impressive to developers but invisible to most consumers. Seedance 2.0 is a different kind of product: consumer-facing video that anyone can see, judge, and share. Beijing's narrative frame is the same as last year (Chinese AI catches and passes American competitors), but the medium makes it stickier. People don't share benchmark charts. They share 15-second clips of Brad Pitt getting punched.

Financial markets got the message. Bloomberg data from the weekend showed COL Group slamming into its 20 percent daily price ceiling, with Shanghai Film and Perfect World both up 10 percent. Even the CSI 300 climbed 1.63 percent, all of it riding on a video AI demo.

And then Musk weighed in. He saw a post praising Seedance on X and replied with three words. "It's happening fast."

Three-front war for hyperreal video

Seedance 2.0 did not appear in isolation. Kuaishou Technology released Kling 3.0 a week earlier, and that model earned immediate attention for its physics accuracy. Former Google product manager Bilawal Sidhu said on LinkedIn that Kling 3.0 "finally lets you art direct motion instead of hoping for it." Seedance targets cinematic narrative and character consistency. Kling is built around making objects and bodies obey gravity and light. Together they represent a two-pronged, if unplanned, Chinese push into the same space that American labs have been working for years.

On the American side, Sora 2 and Veo 3.1 remain the Western reference points. Both apply watermarks. Both enforce tighter content policies. And both operate under the legal exposure of US copyright law in a way that a Chinese company hosting content inside the Great Firewall does not. Neither American model generated the kind of viral moment Seedance produced this week.

The quality gap is becoming visible to casual observers, not just to engineers. Google's video AI recently powered Darren Aronofsky's On This Day... 1776 series, which drew millions of views but earned widespread criticism for stiff faces and unrealistic morphing. Critics called it "a horror." Weeks later, Seedance users were generating martial arts sequences and palace dramas that look like raw footage from a film set. The distance between those two outputs tells you where the technology moved in a matter of months.

Studios are watching the legal questions stack up without answers. Regulators in the US and EU got a preview of a risk category that existing rules don't cover, courtesy of the face-to-voice incident. ByteDance, meanwhile, faces a familiar problem: a product built for controlled, professional use has escaped into the wild, and the content people are making with it looks more real than anything that came before.

ByteDance said it designed Seedance 2.0 for film, e-commerce, and advertising production. The internet turned it into a machine for generating Brad Pitt fighting Tom Cruise. In 15 seconds of watermark-free video, nobody could tell the difference.

Frequently Asked Questions

Q: Is Seedance 2.0 available outside China?

A: Not yet. The model is currently limited to ByteDance's Jimeng AI platform, the Doubao AI assistant, and the CapCut editor, all within China. ByteDance has not announced a global rollout, and TikTok's ongoing US ownership dispute adds uncertainty to any international expansion timeline.

Q: How does Seedance 2.0 compare to OpenAI's Sora 2?

A: Swiss consultancy CTOL called Seedance 2.0 the most advanced AI video generator available, claiming it outperforms both Sora 2 and Google's Veo 3.1 in practical testing. One key difference: Sora 2 and Veo 3.1 apply watermarks to generated content, while Seedance output is watermark-free.

Q: What was the face-to-voice feature that ByteDance suspended?

A: Seedance 2.0 shipped with a feature that could generate a person's voice from a face photograph alone, without any voice samples. Tech media founder Pan Tianhong tested it on his own photo and found the output nearly identical to his real voice. ByteDance pulled the feature after the discovery went public.

Q: Why are Hollywood writers alarmed by Seedance 2.0?

A: Viral Seedance clips reproduced copyrighted characters with startling accuracy. Deadpool & Wolverine co-writer Rhett Reese called the output "so professional" that it left him "shook," warning Hollywood could be "revolutionized/decimated." Actor Scott Adkins found his own likeness used without authorization in a generated video.

Q: What is Kling 3.0 and how does it relate to Seedance?

A: Kling 3.0 is a rival Chinese AI video model released by Kuaishou Technology a week before Seedance 2.0. While Seedance focuses on cinematic narrative and character consistency, Kling specializes in physics accuracy, making objects and bodies obey gravity and light. Together they represent a Chinese push into AI video generation.