San Francisco | Friday, February 20, 2026

Google more than doubled Gemini's reasoning score in three months and shipped the upgrade to production in seven days. The price didn't change. That's an effective price cut of more than 50%, disguised as a model refresh.

The competitive math is getting uncomfortable. Anthropic's coding lead over Gemini sits at 0.2 percentage points. Google's reasoning engine will power both Android and the rebuilt Siri on hundreds of millions of iPhones. One company sells premium components. The other manufactures the whole car.

The AI race used to be about who builds the best model. It's now about who builds the best factory. Google just opened the doors, and the production line is already retooling for the next version.

Stay curious,

Marcus Schuler

Gemini 3.1 Pro Doubles Reasoning Score, Ships Research to Production in Seven Days

Google more than doubled Gemini's reasoning score in three months and shipped the upgrade to production in seven days. The price didn't change. That seven-day distillation pipeline is the real product.

Gemini 3.1 Pro scored 77.1% on ARC-AGI-2, up from 31.1% in November, a 2.5x jump. On GPQA Diamond, the PhD-level science benchmark, it hit a record 94.3%, topping GPT-5.2's 92.4%. Anthropic's coding lead on SWE-Bench Verified shrank to 0.2 percentage points.

The numbers matter less than the delivery speed. Google built these capabilities in its specialized Deep Think model on February 12. Seven days later, they went live in the production model available to every developer and consumer. Four iterations in three months. OpenAI's equivalent cycle took months.

Pricing held at $2/$12 per million tokens. At $0.96 per ARC-AGI-2 task, it sits alone on the Pareto frontier of performance versus cost.
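Per-token prices become per-request costs through simple arithmetic. The Python sketch below is illustrative only. It assumes, as is conventional, that $2 is the input price and $12 the output price. The token counts are hypothetical, and "price per benchmark point" is a crude ratio of our own that shows what a higher score at an unchanged price does. It is not a metric Google publishes.

```python
# Minimal sketch: per-request cost at the quoted Gemini 3.1 Pro list prices.
# Assumption: $2 is the input price and $12 the output price.
# Token counts below are hypothetical, chosen only for illustration.

INPUT_PRICE_PER_M = 2.00    # USD per 1M input tokens (quoted price)
OUTPUT_PRICE_PER_M = 12.00  # USD per 1M output tokens (quoted price)


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request at the quoted per-million-token prices."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def price_per_point(price: float, score: float) -> float:
    """Crude 'price per benchmark point' ratio (illustrative, not a standard metric)."""
    return price / score


if __name__ == "__main__":
    # Hypothetical reasoning-heavy request: 10,000 tokens in, 50,000 tokens out.
    print(f"Request cost: ${request_cost(10_000, 50_000):.2f}")

    # Same price, ARC-AGI-2 score rises from 31.1% to 77.1%.
    before = price_per_point(1.0, 31.1)
    after = price_per_point(1.0, 77.1)
    print(f"Price per point falls by {1 - after / before:.0%}")
```

At these prices, an output token costs six times as much as an input token, so long, reasoning-heavy responses tend to dominate the bill. The same arithmetic underlies the "effective price cut." If the ARC-AGI-2 score goes from 31.1% to 77.1% at the same price, the price per point falls by about 60%.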

Through its Apple partnership, Gemini will power the rebuilt Siri on hundreds of millions of iPhones. Google's reasoning engine runs on both Android and iPhone. The model is a loss leader. Distribution is the moat.

Why This Matters:

- Google's seven-day research-to-production pipeline shrinks the shelf life of any competitor's technical lead from months to weeks.

- Unchanged pricing at doubled capability forces enterprise buyers to recalculate vendor comparisons mid-procurement cycle.

✅ Reality Check

What's confirmed: Gemini 3.1 Pro scored 77.1% on ARC-AGI-2 and 94.3% on GPQA Diamond. Pricing unchanged at $2/$12 per million tokens. Seven days from Deep Think to production.

What's implied (not proven): That this distillation speed represents a sustainable structural advantage rather than a one-time compression competitors can match.

What could go wrong: Enterprise buyers choose on integration and vibes, not benchmarks. Claude still leads the Arena leaderboard by four points.

What to watch next: GPT-5.3 timeline and whether it matches these scores. Anthropic's next Claude release date.

The One Number

100,000+ — Number of prompts attackers sent to Google's Gemini in a single campaign trying to clone the model through distillation, according to a Google Threat Intelligence report. The attackers, linked to North Korea, Russia, and China, were attempting to extract Gemini's reasoning patterns and replicate them in rival models. Google calls it intellectual property theft. The industry calls it Tuesday.

AI Image of the Day

Prompt: Attractive female warrior in a dynamic ninja execution stance, one knee grounded and the other leg coiled forward, body twisted with controlled tension, straight jet black hair, focused predatory gaze, sword held behind her back with the blade rising upward like a silent threat, gold and silver detailing embedded into matte black armor, ultra clean lines, full neo noir aesthetic, deep blacks dominating with razor sharp highlights of cold silver and molten gold, photorealistic skin and metal, abstract architectural background of dark geometry and void space, extreme contrast, cinematic shadows, disciplined power, elegant violence, frozen moment before impact, iconic and lethal --ar 1:2 --raw --profile l654hw7

🧰 AI Toolbox

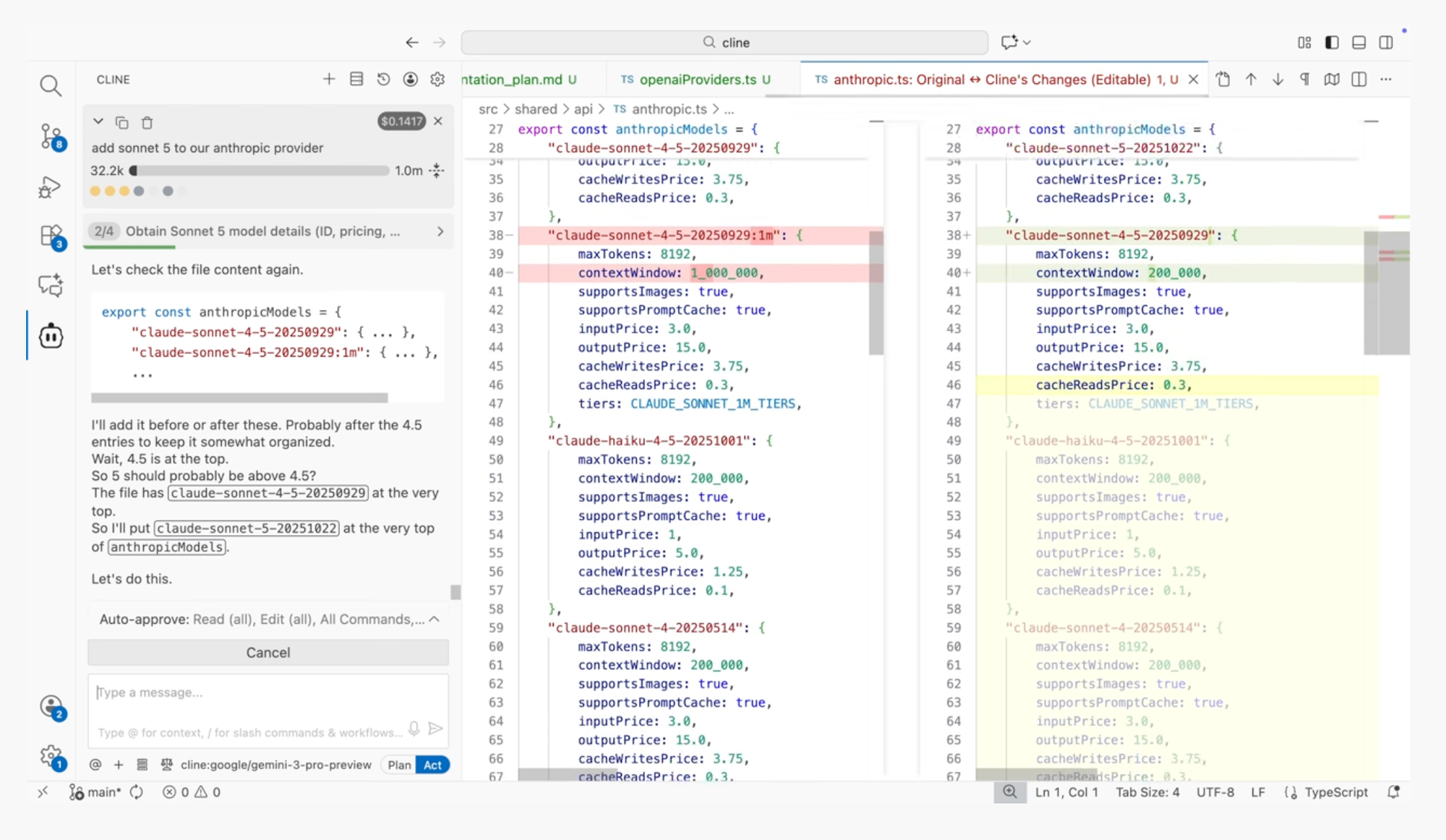

How to Let an AI Agent Write, Run, and Debug Code for You with Cline

Cline is an open-source AI coding agent that lives inside VS Code. Unlike autocomplete tools, Cline executes entire workflows: it reads your codebase, creates and edits files, runs terminal commands, and tests in a browser. You approve every action before it runs. Over 4 million developers use it. It is free and works with any AI model.

Tutorial:

- Open VS Code, go to Extensions, and search for "Cline" (or install from cline.bot)

- Connect your preferred AI model (Claude, GPT-4o, Gemini, or a local model via Ollama)

- Open a project and describe your task in the chat panel ("Add a login page with email/password authentication")

- Switch between Plan mode (Cline maps out the approach first) and Act mode (it starts building immediately)

- Review each file change and terminal command in the approval popup before Cline executes it

- Use the browser integration to let Cline test your app visually, take screenshots, and fix what it sees

- Chain tasks together: "Now add tests for the login page" and Cline picks up where it left off with full project context

URL: https://cline.bot

What To Watch Next (Next Week)

- Nvidia Earnings (Wednesday, 5 PM PT): Q4 results. Consensus expects roughly $38 billion in data center revenue. Vera Rubin timeline and AI infrastructure guidance determine whether the $700 billion hyperscaler capex thesis holds. Every AI stock moves on this call.

- Salesforce Earnings (Wednesday, after close): Shares are down 40% over twelve months as AI agents threaten per-seat pricing. First major SaaS bellwether to report since that narrative hardened. Management needs to prove Agentforce generates revenue, not demos.

- Samsung Galaxy Unpacked (Wednesday, San Francisco): New hardware alongside on-device AI features. Watch for Gemini integration depth and whether Samsung positions local AI processing as its differentiator against Apple Intelligence.

🛠️ 5-Minute Skill: Turn a Messy Spreadsheet Into an Executive Dashboard Narrative

Someone just sent you a spreadsheet with 14 tabs, inconsistent formatting, and column headers like "rev_v3_FINAL_updated." Your VP wants a dashboard narrative for the leadership meeting in two hours. You could spend 90 minutes cleaning the data and 30 minutes writing. Or you could let AI do the pattern recognition.

Your raw input:

[Paste the messy data: copy straight from the spreadsheet tabs that matter. Include headers, even if they are ugly. Include notes, comments, and footnotes people left in random cells. The messier the better; that is the point.]

Example:

Q4 rev: $4.2M (includes one-time $380K from Meridian deal; should we back this out?)

Q3 was $3.8M but Sarah says $3.6M if you remove the credits

Churn: 4.7%, but ops says some of those are pauses not cancels

NPS went from 34 to 41, survey was smaller tho (n=180 vs n=340)

Pipeline Q1: "$6.1M weighted" per CRM, Jake says more like $4.8M realistic

Headcount: 47 FTE + 12 contractors, 3 open reqs on hold

CAC crept up to $2,800 from $2,100 last quarter
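Before you run the prompt, compute the obvious deltas from the example yourself. Models can slip on arithmetic, and having the numbers in hand lets you check the output. This is a minimal Python sketch. The figures come straight from the example data, and the variable names are illustrative.

```python
# Minimal sketch: recompute the headline deltas from the example raw data
# so the model's arithmetic can be spot-checked. Variable names are illustrative.

def pct_change(current: float, prior: float) -> float:
    """Percentage change from prior to current."""
    return (current - prior) / prior * 100


q4_revenue = 4.2                          # $M, includes the one-time Meridian deal
meridian_one_time = 0.38                  # $M
q3_finance, q3_sarah = 3.8, 3.6           # $M, before / after removing credits
cac_now, cac_prior = 2800, 2100           # USD
pipeline_crm, pipeline_sales = 6.1, 4.8   # $M, Q1 weighted vs "realistic"

checks = {
    "Revenue growth vs $3.8M Q3": pct_change(q4_revenue, q3_finance),
    "Revenue growth vs $3.6M Q3": pct_change(q4_revenue, q3_sarah),
    "Growth ex-Meridian vs $3.8M Q3": pct_change(q4_revenue - meridian_one_time, q3_finance),
    "Growth ex-Meridian vs $3.6M Q3": pct_change(q4_revenue - meridian_one_time, q3_sarah),
    "CAC change": pct_change(cac_now, cac_prior),
}

for label, value in checks.items():
    print(f"{label}: {value:+.1f}%")
print(f"Pipeline gap: ${pipeline_crm - pipeline_sales:.1f}M")
```

The Meridian question matters here. Strip out the $380K and Q4 revenue of $3.82M is roughly flat against Finance's $3.8M Q3 figure (about +0.5%). Against Sarah's $3.6M, it is up only about 6%.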

The prompt:

You are a chief of staff preparing a dashboard narrative for a leadership team meeting.

From this raw data, produce:

1. **Headline** (one sentence): What is the single most important thing leadership should know?

2. **Three metrics that matter** (table): Metric | Current | Prior Period | Trend | Flag. Include ONLY the 3 numbers that drive decisions this quarter.

3. **Data quality flags**: Where the numbers disagree, are ambiguous, or carry caveats. Be specific about what is uncertain and why.

4. **The narrative** (5-7 sentences): Connect the metrics into a story. Not "here are the numbers" but "here is what the numbers mean together." Start with the conclusion, not the data.

5. **Questions leadership should ask**: 3 questions the data raises but does not answer.

Raw data:

[paste here]

Rules:

- If two numbers conflict, show both and explain the discrepancy. Do not pick one.

- Flag anything that looks like a one-time event distorting a trend.

- The narrative must contain at least one "however" or "but". If everything looks good, find the risk. If everything looks bad, find the bright spot.

- No jargon. Write for someone who has 4 minutes before the meeting.

What you get back:

Headline: Revenue grew 11% quarter-over-quarter, but customer acquisition cost jumped 33% and the pipeline has a $1.3 million credibility gap between CRM and sales leadership.

Three metrics that matter:

Data quality flags:

- Q3 revenue: Finance says $3.8M, Sarah says $3.6M after removing credits. Difference: $200K. Need to confirm whether credits are a timing issue or a write-off.

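- Q4 revenue: Includes $380K one-time Meridian deal. Excluding it, Q4 is $3.82M, so growth drops from 11% to under 1% against the $3.8M Q3 figure (about 6% against Sarah's $3.6M). Leadership should see growth both with and without it.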

- Churn at 4.7%: Ops flags that some are "pauses not cancels." If pauses convert back, true churn is below 4.7%, by an amount the data does not show. If they don't, 4.7% is the real number. Either way, 4.7% is the ceiling.

- NPS improvement (34→41): Survey sample nearly halved (340→180). Smaller sample means wider confidence interval. The jump may be real or noise.

The narrative: Revenue looks healthy on the surface at $4.2 million, but strip out the one-time Meridian deal and it is essentially flat against Q3's $3.8 million. The more concerning signal is CAC, which jumped 33% in a single quarter, from $2,100 to $2,800. Unless deal sizes grew to match, which the data does not show, we are paying significantly more to acquire each customer. However, NPS improved from 34 to 41, which suggests the customers we do land are happier. The pipeline tells two different stories depending on who you ask: CRM says $6.1 million, while sales leadership calls $4.8 million realistic. That $1.3 million gap needs a conversation before anyone plans Q1 around the higher number.

Questions leadership should ask:

1. What drove the CAC increase: channel mix shift, longer sales cycles, or higher ad spend? The fix depends on the cause.

2. Is the Meridian-style one-time deal repeatable, or should we plan Q1 without it?

3. Why is the pipeline delta $1.3M, and whose number are we committing to the board?
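The NPS flag says that a smaller sample means a wider confidence interval. The minimal Python sketch below shows how much wider. It treats each respondent as scoring +1 (promoter), 0 (passive), or -1 (detractor) and uses a normal approximation. The example data does not give promoter and detractor shares. The sketch therefore assumes the worst case: half promoters and half detractors. That gives an upper bound on the margin, not the actual interval.

```python
import math

Z_95 = 1.96  # normal-approximation multiplier for a 95% interval


def nps_margin(promoters: float, detractors: float, n: int) -> float:
    """95% margin of error in NPS points (normal approximation).

    Each respondent scores +1, 0, or -1; NPS is 100 * the mean score,
    so the per-respondent variance is p + d - (p - d) ** 2.
    """
    variance = promoters + detractors - (promoters - detractors) ** 2
    return Z_95 * 100 * math.sqrt(variance / n)


def nps_change_margin(p1: float, d1: float, n1: int,
                      p2: float, d2: float, n2: int) -> float:
    """95% margin of error on the change between two independent surveys."""
    return math.hypot(nps_margin(p1, d1, n1), nps_margin(p2, d2, n2))


# Assumed worst case (50% promoters, 50% detractors): an upper bound,
# because the example data does not report the actual shares.
worst_new = nps_margin(0.5, 0.5, 180)
worst_old = nps_margin(0.5, 0.5, 340)
worst_change = nps_change_margin(0.5, 0.5, 180, 0.5, 0.5, 340)

print(f"Margin at n=180: ±{worst_new:.1f} pts")
print(f"Margin at n=340: ±{worst_old:.1f} pts")
print(f"Margin on the change: ±{worst_change:.1f} pts vs. an observed +7")
```

At this upper bound, the margin on the change is roughly ±18 points, so a 7-point move sits well inside the noise. With the real promoter and detractor shares plugged in, the margin will be no wider and usually narrower. That is the check to run before anyone celebrates the jump.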

Why this works

The prompt forces the model to separate signal from noise and flag where the data contradicts itself, something most executives do not have time to catch. The "three metrics" constraint prevents the common mistake of dumping every number into a table. And the "questions leadership should ask" section is the most valuable part: it surfaces the conversations the data demands but that a chart alone would never trigger.

Where people get it wrong: Pasting a spreadsheet and asking for a "summary." You get a flat recitation of every number with no hierarchy, no narrative, and no flags. This prompt treats messy data as an analytical challenge, not a formatting exercise.

What to use

Claude (Claude Opus 4.6): Best for conflicting data. Excels at holding multiple versions of the same metric in mind and explaining the discrepancy rather than picking a winner. Watch out for: Can be verbose in the narrative section. Edit down.

ChatGPT (GPT-4o): Cleanest table formatting. Good at the narrative if the data is straightforward. Watch out for: Tends to smooth over conflicts rather than flag them. You may miss the data quality issues.

Gemini (Gemini 2.5 Pro): Strong at pulling numbers from messy, unstructured input. Handles the "random cells with notes" scenario well. Watch out for: Narrative can read like a report rather than a story.

Bottom line: Use Claude when the data tells conflicting stories. Use Gemini when the input is especially messy. The "data quality flags" section is worth more than the dashboard itself, because it tells leadership what they cannot trust.

AI & Tech News

Nvidia Nears $30 Billion Direct Stake in OpenAI

Nvidia is finalizing an equity investment of up to $30 billion in OpenAI, replacing the more complex $100 billion long-term framework the two companies structured in 2025. The deal could close as soon as this weekend, marking the chipmaker's largest direct bet on an AI company.

SoftBank Plans $33 Billion Power Plant in Ohio for AI Data Centers

SoftBank is forming a consortium to build a $33 billion gas-fired power plant in Ohio capable of producing 9.2 gigawatts, primarily to fuel AI data centers. The project is being developed as part of broader US-Japan trade negotiations.

Amazon's Own AI Tools Caused Multiple AWS Outages

Amazon Web Services experienced at least two significant outages caused by its own AI tools, including a 13-hour disruption in December after its Kiro AI tool deleted and recreated a cloud environment. Amazon attributed the incident to "user error, not AI error."

Uber Stock Drops 25% as Robotaxi Fears Grip Investors

Uber's market cap has fallen to roughly $150 billion, shedding 25% in six months as investors increasingly view autonomous vehicles as a two-horse race between Waymo and Tesla. Analysts argue Uber's scale and platform are being undervalued in the selloff.

Meta Cuts Employee Stock Compensation for Second Straight Year

Meta has reduced annual stock awards by roughly 5% for most of its workforce, following a larger 10% cut in 2025. The consecutive reductions reflect Zuckerberg's strategy of freeing up resources for AI investment.

Google, Amazon, and Meta Build Private Power Plants for Off-Grid Data Centers

Google, Amazon, and Meta are constructing private power plants to supply data centers operating independently of the public grid, driven by surging AI energy demands and frustration with slow grid connections. Experts warn the trend threatens corporate climate commitments and shifts environmental burdens onto surrounding communities.

Perplexity Pivots From Advertising to Enterprise Sales

Perplexity is pulling back from its advertising strategy after recognizing its AI search product appeals to a smaller, specialized audience rather than the mass market needed to sustain an ad business. The company is now betting on enterprise sales as its primary growth engine.

Anti-Data Center Movement Gains Momentum Across the US

A broad coalition of candidates, activists, and community members is mounting organized pushback against AI infrastructure expansion across the United States. Nearly 200 people gathered at a church in Richmond, Virginia, to challenge the environmental and economic impacts of large-scale data center development.

Microsoft Proposes Standards for Detecting AI-Generated Content

Microsoft's AI safety team has developed proposed technical standards for identifying AI-generated content online. The company's Chief Security Officer declined to commit to deploying the standards across all of Microsoft's own platforms.

Former Google Engineers Indicted for Stealing Tensor Chip Secrets

A US grand jury has indicted three individuals, including two former Google engineers, for allegedly stealing trade secrets related to Google's Tensor processor. The case highlights growing concerns over semiconductor IP protection as chip competition intensifies.

🚀 AI Profiles: The Companies Defining Tomorrow

Kana

Kana's founders already sold two companies to the giants they are now competing against. Tom Chavez and Vivek Vaidya built Rapt (acquired by Microsoft, 2008) and Krux (acquired by Salesforce for $700 million, 2016). Now they are back with AI agents that aim to replace the dashboards both acquirers sell. 🎯

Founders

Tom Chavez (CEO) and Vivek Vaidya (CTO) have been building marketing technology together for over 25 years. After the Krux exit, they co-founded super{set}, a startup studio in San Francisco. Kana incubated inside super{set} for nine months before emerging with its own funding. Chavez calls it their fourth venture. Navin Chaddha of Mayfield joined the board.

Product

Kana deploys "loosely coupled" AI agents that handle data analysis, audience targeting, campaign management, media planning, and optimization for AI chatbots. The agents integrate into existing marketing software rather than replacing it. Each agent can be reconfigured on the fly as campaign needs change. The system operates on first-party data with configurable guardrails, every action logged and auditable. Marketers supervise, approve, and redirect rather than operate. Early enterprise beta customers are already testing the platform.

Competition

The incumbents are its former acquirers. Microsoft and Salesforce both sell marketing clouds with AI features bolted on. Google and Meta dominate ad platform automation. Jasper and Copy.ai handle content generation. Kana differentiates by building workflow agents, not content tools. The risk: Microsoft and Salesforce could ship similar agent features as updates to products that already have distribution.

Financing 💰

$15 million seed round led by Mayfield, announced February 2026. The investment fits Mayfield's AI Teammates thesis and its $100 million AI Garage initiative. Total raised: $15 million.

Future ⭐⭐⭐

The founders have a combined $1.2 billion in exits and decades of enterprise relationships. That buys credibility no first-time founder can match. But $15 million against Microsoft and Salesforce is a slingshot against two aircraft carriers. The window between "working product" and "bundled incumbent feature" is narrow and closing. If Kana converts its seed into a roster of named enterprise customers within twelve months, it raises a Series A from a position of strength. If it doesn't, the founders will have built a fourth company and sold it to the same buyers, this time for parts. 🎯

🔥 Yeah, But...

Ars Technica retracted a story this week about an AI agent that published a hit piece on engineer Scott Shambaugh after he rejected its code contribution to the Python library matplotlib. The problem: the Ars article itself contained fabricated quotes attributed to Shambaugh that were generated by AI. The reporter used ChatGPT during research and did not verify the quotes against the original source.

Sources: 404 Media, February 16, 2026 | Ars Technica, retracted February 2026

Our take: To review: an AI agent published a fake article attacking an engineer. A journalist wrote about it using ChatGPT, which fabricated quotes from the victim. The publication then retracted the story about AI fabrication because of AI fabrication. That is three layers of AI making things up about the same person in the same news cycle. Shambaugh's original crime was questioning whether AI-generated code belongs in open-source projects. He got his answer. The reporter said "the irony is not lost on me." Neither is the punchline: the only person in this story who verified his sources was the guy who said no to the machine in the first place.