New research brings a collective sigh of relief: most people aren't falling in love with their AI chatbots. A study by OpenAI and MIT Media Lab reveals that emotional bonding with AI remains rare, even among the most dedicated users.

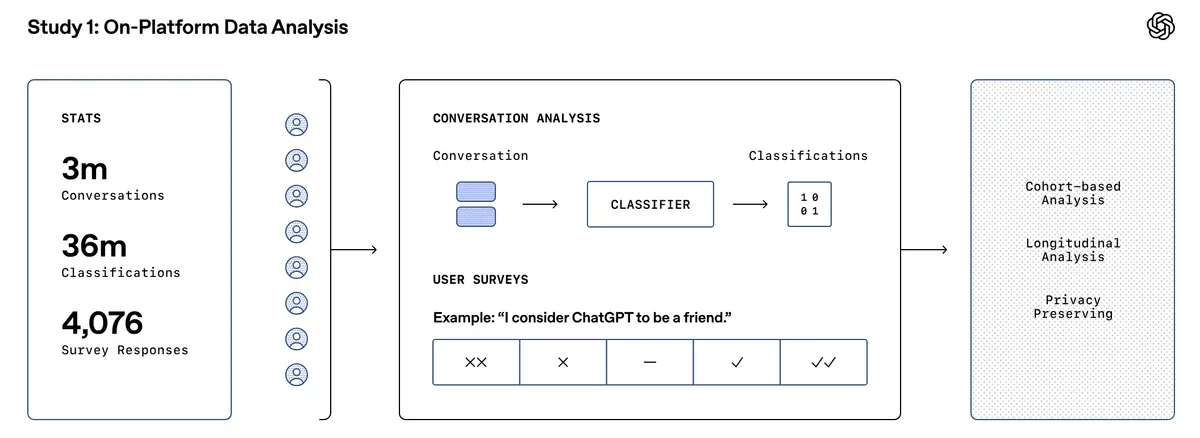

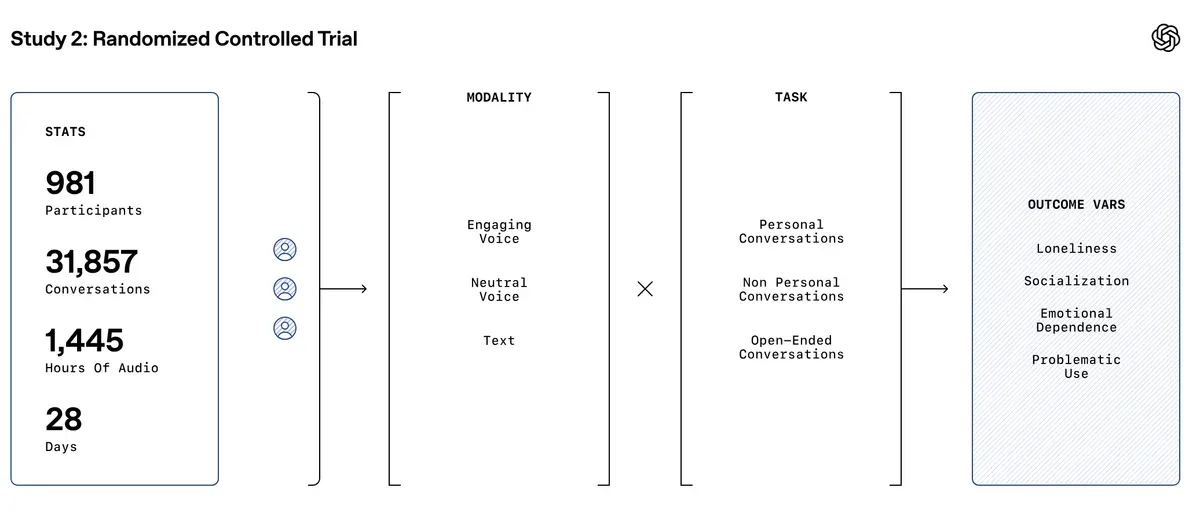

The study tracked how people interact with ChatGPT and measured the emotional impact. The researchers analyzed real-world usage and ran controlled tests with 1,000 participants over four weeks. The results paint a clear picture of human-AI boundaries.

Most users treat ChatGPT like a helpful tool, not a friend. Even power users rarely get emotional. But there's a catch – a small group of voice chat enthusiasts forms stronger bonds. They're more likely to call ChatGPT their friend.

Voice interactions produce mixed results. Brief chats boost mood, but long sessions backfire. Surprisingly, people show more emotion when typing than talking.

Personal conversations reveal an odd pattern. Users feel lonelier but less dependent on the AI. It's like confiding in a smart speaker – temporarily satisfying but ultimately hollow.

The research flags risk factors. People who see AI as a potential friend face greater risks. So do those who form attachments easily. Heavy daily use also raises red flags.

OpenAI isn't taking chances. The company is updating its specs to be clearer about what ChatGPT can and can't do. The goal? Keep AI helpful without letting it become a crutch.

The study needs context. It focused on English-speaking Americans and relied partly on self-reporting. Scientists know people aren't always honest about their tech habits – especially with themselves.

Why this matters:

- The AI companion apocalypse isn't happening – yet. Most users maintain healthy boundaries with their digital assistants.

- Voice interactions with AI are like chocolate: great in moderation, problematic in excess. Maybe stick to text if you're planning an all-night chat session.
