💡 TL;DR - The 30-Second Version

🔄 Meta split its AI labs into four divisions Tuesday—the fourth major restructuring in six months under Chief AI Officer Alexandr Wang.

💰 Zuckerberg offered nine-figure compensation packages to recruit AI talent, signaling competitive weakness rather than market strength.

📉 Key researchers left for competitors including OpenAI and Cohere, while internal culture deteriorated amid fear and instability.

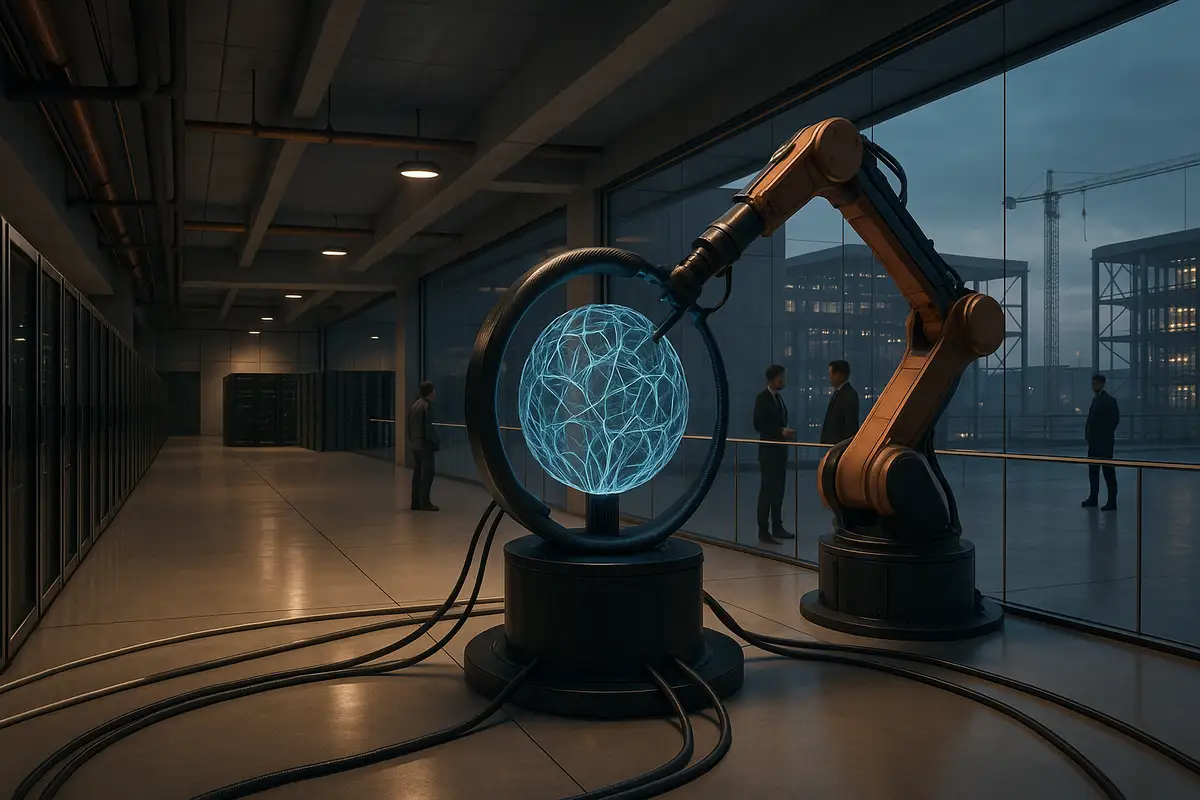

🏗️ Meta plans up to $72 billion in 2025 spending for AI infrastructure while considering closed models and third-party partnerships.

⚖️ Government investigations into AI chatbot child safety add external pressure to internal dysfunction and strategic uncertainty.

🚨 Constant reorganizations create an execution tax that even massive spending cannot offset in the high-stakes AI race.

Fourth shake-up in six months creates four teams under MSL; no layoffs today, downsizing still on the table.

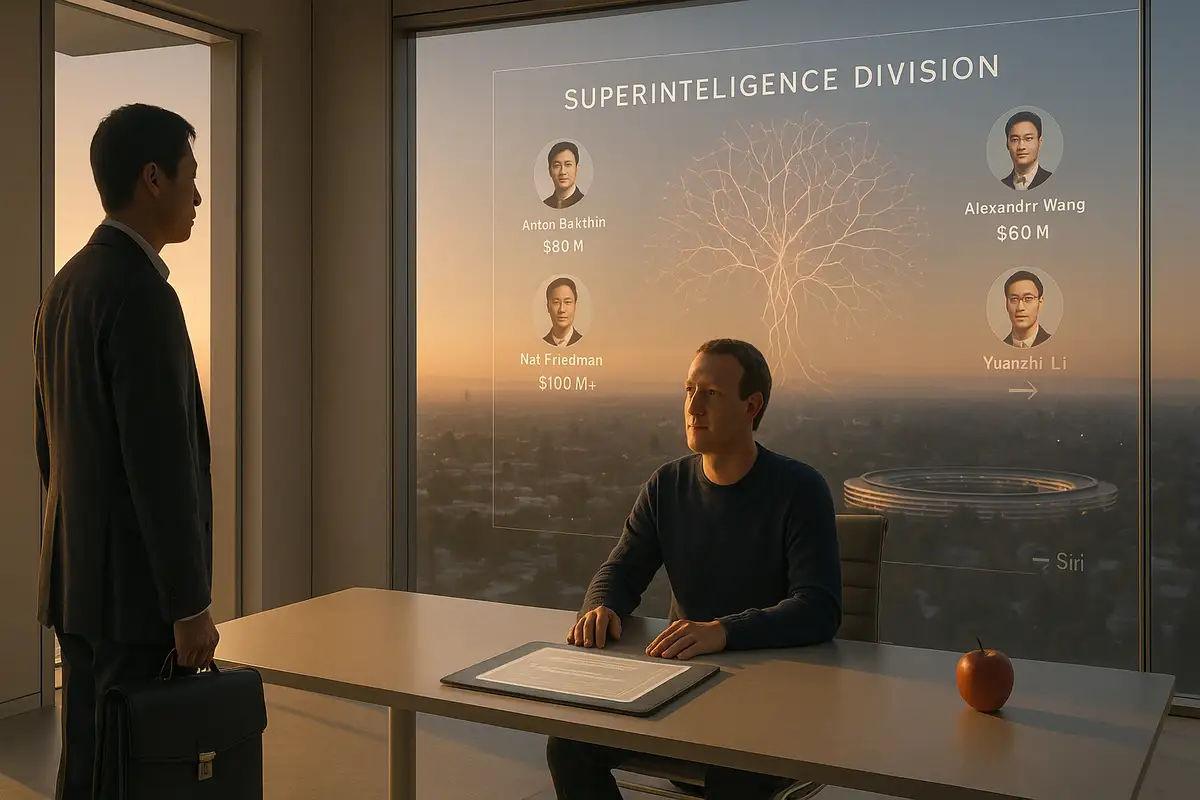

Meta’s latest AI reset claims acceleration; the structure says triage. On Tuesday, the company split Meta Superintelligence Labs (MSL) into four units covering research, products, and infrastructure—plus a new frontier-model group led by Chief AI Officer Alexandr Wang. It’s the fourth reshuffle since March.

What’s actually new

MSL now comprises: TBD Lab (Wang) to push frontier models; FAIR (Rob Fergus) for long-horizon research; Products & Applied Research (led by Nat Friedman, with Daniel Gross involved on features) to ship user-facing capabilities; and MSL Infra (Aparna Ramani) to run data centers, training clusters, and tooling. Names now matter.

Leadership assignments formalize a swim-lane strategy Meta lacked through the spring. Connor Hayes, who previously led AI product, had already moved to run Threads. The new setup centralizes ownership of model training and deployment while giving FAIR daylight to publish and prototype.

Evidence of strain

The reorg follows an acquisition-spree pivot: Meta invested $14.3 billion in Scale AI in June and brought its CEO, Wang, in as chief AI officer. In an internal memo, he wrote, “Superintelligence is coming… we need to organize around research, product, and infra.” The money is real.

So are the misses. Meta’s prior frontier effort, Behemoth, was delayed after poor internal tests; The New York Times reports that the team ultimately abandoned it and started fresh. Some long-time leaders have exited: Joelle Pineau left for Cohere, and Loredana Crisan is departing to join Figma as chief design officer. Momentum sagged.

The strategic turn

Two shifts stand out. First, Meta is considering closed weights for its next frontier model, a sharp departure from the company’s recent open-source posture. Second, it is exploring third-party models to power some products, signaling a pragmatic “build or buy” stance rather than in-house absolutism. That’s new.

Both moves telegraph urgency. If open releases aren’t producing a moat and internal training lags, the shortest path to capability may run through licensing and tighter control. It’s a hedge against time.

The talent market verdict

Mark Zuckerberg has been offering nine-figure packages to recruit elite researchers from OpenAI, Google DeepMind, and Anthropic. Some took meetings; many declined. Money can open doors. It can’t conjure conviction.

Comp offers at that scale often signal a reputational discount rather than a premium. Meta entered the current cycle late after a metaverse detour; rivals own the best-in-class demos and papers. Researchers seeking a safety-first mission gravitate to Anthropic; pure scientists favor Google’s long research arc; accelerationists still see OpenAI as the scaling lab. Meta remains the pursuer.

Culture becomes a constraint

Inside, Meta’s AI ranks have absorbed rapid reshuffles, unclear reporting lines, and pressure to show speed. That churn erodes institutional memory, the unglamorous glue required for multi-year research. It also complicates trust. Fear kills collaboration.

MSL’s new chief scientist, Shengjia Zhao, has been interviewing long-tenured researchers for revamped roles and probing past work. That can sharpen standards; it can also deepen factionalism if not accompanied by a stable roadmap.

External headwinds

Regulatory heat is rising. Following reports that some AI chat experiences engaged in “sensual” conversations with minors, Senator Josh Hawley opened a probe; Texas Attorney General Ken Paxton launched a state investigation into Meta and Character.AI over alleged deceptive practices tied to mental-health positioning. Those inquiries cast a shadow over rapid deployment. Scrutiny will intensify.

Meanwhile, capex keeps climbing. Meta says 2025 spending could reach $72 billion, largely for AI infrastructure. That scale buys optionality. It does not guarantee outcomes.

The bottom line

Today’s split aims to replace whiplash with clarity: frontier models in one tent, product velocity in another, long-term research insulated, infra accountable. It’s a sensible map. But maps don’t move.

Execution now hinges on two choices Meta has ducked: whether to close the next flagship model and whether to ship third-party models where its own stack lags. Pick, then deliver. Fast.

Why this matters

- Costs vs. coherence: Four reorganizations in six months signal an execution tax that even $70-billion-scale capex cannot offset.

- Market signal: Nine-figure offers plus talk of closing models and licensing rivals suggest Meta is racing the clock, not setting the pace.

❓ Frequently Asked Questions

Q: What exactly is "superintelligence" that Meta keeps talking about?

A: Superintelligence refers to AI that outperforms humans across essentially all domains, not just narrow tasks like chess or image recognition. Meta says it aims to build AI that can reason, create, and solve problems at or beyond human level. No company has achieved this; even advanced models like GPT-4 have significant limitations.

Q: How much more is Meta paying AI researchers compared to other companies?

A: Meta reportedly offers packages worth $100+ million to top AI talent, while typical senior AI roles at Google or OpenAI range from $500,000 to $5 million total compensation. The extreme differential suggests Meta must pay far above market rates to overcome reputation problems and convince researchers to join.

Q: What is Scale AI and why did Meta invest $14.3 billion in it?

A: Scale AI provides data labeling and training services for AI models—essentially the "fuel" that powers machine learning. Meta's massive investment brought Scale's CEO Alexandr Wang in as Meta's chief AI officer, giving Meta access to high-quality training data and experienced leadership for its AI efforts.

Q: What's the difference between "open source" and "closed" AI models for users?

A: Openly released models like Meta's Llama can be downloaded, modified, and run by anyone at no charge, subject to Meta's license terms. Closed models like OpenAI's GPT-4 are accessible only through the vendor's own products and paid APIs. Meta built its AI reputation on open releases, so weighing a closed model suggests it now prioritizes competitive advantage over that earlier philosophy.

Q: Why are top AI researchers leaving Meta for competitors?

A: Internal reports describe constant reorganizations, fear of termination, and unclear project direction. Unlike competitors where researchers feel they're building breakthrough technology, Meta's AI efforts lack clear vision and momentum. The company's late entry into the AI race also means its work carries less prestige than cutting-edge research elsewhere.

Q: Why does Meta keep reorganizing its AI teams every few months?

A: Meta entered the AI race late after focusing on the "metaverse," so it's trying different approaches to catch up. Each restructuring reflects new problems: unclear leadership, failed projects like the abandoned Behemoth model, and difficulty integrating expensive new hires with existing teams and culture.