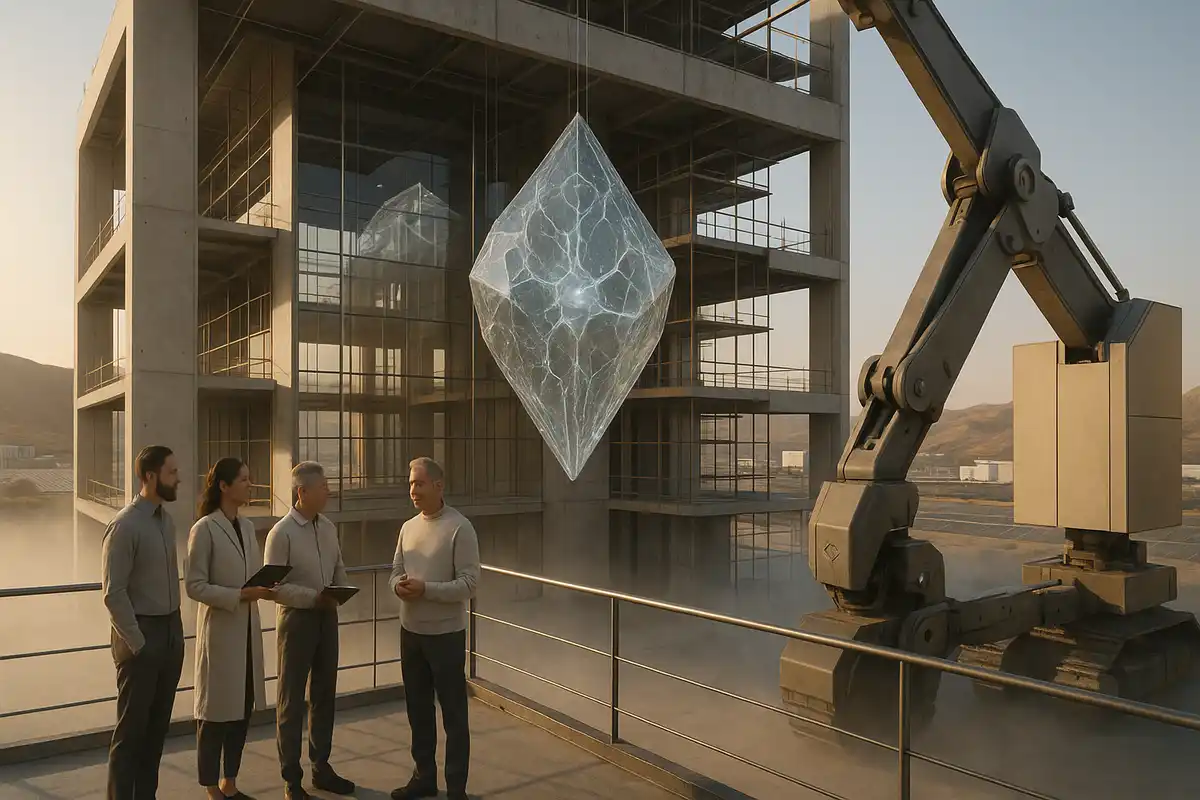

A $12 billion bet that customization, not ever-bigger base models, will drive the next phase of AI.

💡 TL;DR - The 30-Second Version

🚀 Thinking Machines Lab launched Tinker on Wednesday: a managed API for fine-tuning open-weight models with a few lines of Python. The company raised $2 billion in seed funding at a $12 billion valuation before shipping any product.

👥 Six OpenAI veterans lead the company: Mira Murati (ex-CTO), John Schulman (RLHF pioneer), Barret Zoph (ex-VP of research), plus specialists in safety, pretraining, and post-training who helped build ChatGPT's core systems.

🔧 Tinker uses LoRA adapters so multiple fine-tuning jobs can share one copy of the base model weights, cutting costs and iteration time. Supported models range from Meta's Llama family to Alibaba's 235-billion-parameter Qwen.

🎓 Early users include Princeton's theorem-proving team, Stanford chemistry researchers, Berkeley's SkyRL group, and Redwood Research's AI control experiments—research-grade problems, not glossy demos.

🌐 The strategic bet: If value shifts from building bigger base models to customizing existing ones, the fine-tuning infrastructure layer captures more economic surplus than labs training frontier models from scratch.

⚖️ Access remains invite-only during the private beta, with manual vetting for safety risks. It's free for now, but usage-based pricing launches "in coming weeks": the democratization promise meets market reality.

Mira Murati helped build OpenAI’s closed stack. Now she’s shipping the opposite. Her new company, Thinking Machines Lab, has unveiled Tinker, a managed API that lets researchers fine-tune open-weight models with a few lines of Python. The startup raised $2 billion at a $12 billion valuation before it had a product. The pitch, laid out in WIRED’s exclusive on Tinker: frontier-level post-training for the many, not the few. The tension: access is curated, and pricing isn’t public yet.

What’s actually new

Fine-tuning isn’t new. The packaging is. Tinker abstracts away the messy parts—GPU cluster orchestration, distributed training stability, checkpointing, failure recovery—while exposing low-level control to the user. You write the training loop; Tinker handles the fleet. It currently supports Meta’s Llama and Alibaba’s Qwen families, with supervised fine-tuning and reinforcement-learning workflows. You can export weights and run them wherever you want. That matters for portability.
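
Here is what "checkpointing, failure recovery" means in practice. This is a minimal local sketch of that bookkeeping: a loop that saves its state every few steps. After a simulated crash, it rolls back to the last checkpoint and replays the lost steps. The toy one-parameter model, the in-memory checkpoint, and the fail_at switch are hypothetical illustrations, not Tinker's API or internals.

```python
import copy


def train_with_recovery(data, steps, lr=0.1, ckpt_every=5, fail_at=None):
    """Fit y = w * x with SGD on squared error, checkpointing every ckpt_every steps.

    Hypothetical local sketch (not Tinker's API): fail_at simulates a single
    worker crash at that step, after which the loop restores the most recent
    checkpoint and replays the lost steps.
    """
    state = {"step": 0, "w": 0.0}
    checkpoint = copy.deepcopy(state)  # stand-in for durable checkpoint storage
    crashed = False
    while state["step"] < steps:
        if fail_at is not None and not crashed and state["step"] == fail_at:
            crashed = True
            state = copy.deepcopy(checkpoint)  # recovery: roll back, then resume
            continue
        # Data order depends only on the step counter, so a replay is exact.
        x, y = data[state["step"] % len(data)]
        grad = 2 * (state["w"] * x - y) * x  # derivative of (w*x - y)**2 w.r.t. w
        state["w"] -= lr * grad
        state["step"] += 1
        if state["step"] % ckpt_every == 0:
            checkpoint = copy.deepcopy(state)
    return state["w"]


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # points on y = 2x
clean = train_with_recovery(data, steps=20)
recovered = train_with_recovery(data, steps=20, fail_at=13)
print(clean == recovered)  # True: the crash cost time, not correctness
```

A real distributed job must also restore optimizer state, data-loader position, and random seeds across many machines. That harder version is the part a managed service takes off your hands.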

Under the hood, the default path is LoRA adapters rather than full fine-tuning. That choice lowers marginal costs and shortens iteration cycles because many jobs can share one copy of the base model on the same hardware. Speed fuels experimentation. Cost discipline widens the funnel. That’s the value proposition.
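
This minimal pure-Python sketch shows why adapters make sharing cheap. It assumes the standard LoRA formulation: the frozen base weight W is stored once, and each job keeps only its own small matrices A and B, scaled by alpha / r. The merge function shows one common way to turn a trained adapter into an ordinary dense weight for export. Shapes, values, and function names are illustrative; this is not Thinking Machines' implementation.

```python
# Hypothetical illustration of the standard LoRA formulation, not Tinker's code:
# h = W x + (alpha / r) * B (A x), with W frozen and shared across jobs.


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(P, Q):
    """Multiply matrices P (n x m) and Q (m x p)."""
    cols = list(zip(*Q))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in P]


class LoRAAdapter:
    """Per-job trainable state: A is r x k, B is d x r, scaled by alpha / r."""

    def __init__(self, A, B, alpha):
        self.A, self.B = A, B
        self.scale = alpha / len(A)  # r is the number of rows of A


def lora_forward(W, adapter, x):
    """Shared base output plus this job's low-rank correction; W is never modified."""
    base = matvec(W, x)
    delta = matvec(adapter.B, matvec(adapter.A, x))
    return [b + adapter.scale * d for b, d in zip(base, delta)]


def merge(W, adapter):
    """Fold an adapter into a standalone dense weight: W' = W + (alpha / r) B A."""
    BA = matmul(adapter.B, adapter.A)
    return [[w + adapter.scale * u for w, u in zip(w_row, u_row)]
            for w_row, u_row in zip(W, BA)]


W = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # one frozen base weight (d = k = 3)
job_a = LoRAAdapter(A=[[1, 0, 0]], B=[[0], [1], [0]], alpha=2)  # rank 1
job_b = LoRAAdapter(A=[[0, 0, 1]], B=[[1], [0], [0]], alpha=1)  # rank 1
x = [1, 2, 3]
print(lora_forward(W, job_a, x))  # [1.0, 4.0, 3.0]
print(lora_forward(W, job_b, x))  # [4.0, 2.0, 3.0]
print(matvec(merge(W, job_a), x) == lora_forward(W, job_a, x))  # True
```

Both jobs read the same W; only their rank-1 adapters differ. At realistic sizes, that is the difference between storing a second multi-billion-parameter model and storing a few million extra numbers per job.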

Evidence, not promises

The company shipped code and onboarded early users, not just a manifesto. The public cookbook shows primitives like forward_backward, optim_step, and sample, giving researchers room to implement their own RLHF or preference-learning variants. Beta testers include teams working on demanding real-world problems, from text-to-SQL to program synthesis. One researcher working on AI safety used Tinker’s RL loop to probe code-security behaviors, the kind of harsh test most glossy demos avoid. It’s not a toy.
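
The cookbook's primitive names hint at how such a loop composes. The sketch below assumes semantics inferred from those names: sample draws from the current policy, forward_backward accumulates gradients, and optim_step applies them. It runs a REINFORCE policy-gradient loop with a mean-reward baseline that teaches a toy three-token policy to prefer "B". LocalTrainingClient, its signatures, and the reward function are hypothetical. REINFORCE stands in for whatever RLHF or preference-learning variant a researcher would actually write, and none of this is the Tinker SDK.

```python
import math
import random


class LocalTrainingClient:
    """Hypothetical local stand-in for the primitives the cookbook names
    (sample, forward_backward, optim_step). Signatures and semantics are
    assumptions for illustration, not the Tinker SDK. The "model" is a
    softmax policy over a three-token vocabulary."""

    def __init__(self, vocab):
        self.vocab = list(vocab)
        self.logits = [0.0] * len(self.vocab)
        self.grad = [0.0] * len(self.vocab)

    def probs(self):
        m = max(self.logits)  # subtract the max for numerical stability
        exps = [math.exp(z - m) for z in self.logits]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self, n, rng):
        """Draw n token indices from the current policy."""
        return rng.choices(range(len(self.vocab)), weights=self.probs(), k=n)

    def forward_backward(self, samples, advantages):
        """Accumulate the gradient of the REINFORCE loss
        -mean(advantage * log p(token)) with respect to the logits."""
        p = self.probs()
        for tok, adv in zip(samples, advantages):
            for j in range(len(self.logits)):
                indicator = 1.0 if j == tok else 0.0
                # d log p(tok) / d logit_j = indicator - p[j]
                self.grad[j] -= adv * (indicator - p[j]) / len(samples)

    def optim_step(self, lr):
        """Plain SGD on the accumulated gradient, then reset it."""
        self.logits = [z - lr * g for z, g in zip(self.logits, self.grad)]
        self.grad = [0.0] * len(self.logits)


def reward(token):
    return 1.0 if token == "B" else 0.0  # toy stand-in for a task-specific grader


def rl_loop(steps=200, batch=16, lr=0.5, seed=0):
    rng = random.Random(seed)
    client = LocalTrainingClient(["A", "B", "C"])
    for _ in range(steps):
        samples = client.sample(batch, rng)
        rewards = [reward(client.vocab[t]) for t in samples]
        baseline = sum(rewards) / len(rewards)  # mean-reward baseline
        advantages = [r - baseline for r in rewards]
        client.forward_backward(samples, advantages)
        client.optim_step(lr)
    return client.probs()


print(rl_loop())  # probability mass concentrates on "B"
```

The design point is the split. Scoring, baselines, and loss weighting live in the researcher's own loop. The three client calls are the heavy operations a managed service could execute on remote GPUs.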

Access remains controlled. Applicants are vetted for safety risks, and the company says automated misuse defenses will replace manual screening over time. For now, it’s invite-only with free usage during private beta. That’s the practical tradeoff between “open capabilities” and “don’t hand a loaded railgun to anyone with a laptop.” Fair enough.

The exodus as a strategy signal

Names matter here. John Schulman co-invented the reinforcement-learning approach that made ChatGPT tolerable in conversation. Barret Zoph, Lilian Weng, Andrew Tulloch, and Luke Metz bring depth across pretraining, safety, and post-training. These are not résumé ornaments. They are the people who turned cutting-edge papers into systems the public actually used. When this cohort leaves a closed API shop to build a fine-tuning service around open-weights models, it says something about where they believe value will accrue.

In short: they’re betting the infrastructure layer—tools that let others shape behavior—now matters more than hoarding base model secrets. That’s a sharp reversal of last year’s logic.

The market context—and the China angle

The U.S. currently leads on state-of-the-art proprietary models. China leads on widely released open weights. That asymmetry creates a strategic opening: if the locus of value shifts from training the biggest base model to adapting strong open models quickly and safely, the most important companies become the ones that lower the barrier to customization at scale. Tooling can rebalance influence even when weights originate elsewhere.

This also reframes “openness.” Publishing weights is one kind of openness. Shipping robust, research-grade post-training tools is another. Tinker is aiming at the latter. The goal isn’t replacing the arms race. It’s widening the on-ramp.

Limits and open questions

Curation cuts against the “for everyone” rhetoric. It’s sensible for safety; it’s still a gate. Pricing will define who actually benefits once the beta ends. And fine-tuning has known ceilings: it adjusts style, policy, and task handling more readily than it rewires core knowledge. Put bluntly, post-training shines on form and behavior; pretraining still dominates facts and world models. Expect gains, but don’t expect miracles.

One more limit: portability is only as good as the base models you can legally and practically deploy. If the preferred weights carry restrictive licenses or their license terms change, users will feel it. That’s the quiet platform risk in any “bring your own base” strategy.

The bottom line

Thinking Machines is not shipping another chat app. It’s building industrial-strength post-training as a service, with enough control to do real research and enough abstraction to avoid babysitting clusters. If the center of gravity in AI moves from “Who trained the biggest model?” to “Who adapts good models fastest?” then this is the kind of company that benefits first.

The open question is whether a curated, priced service can still deliver on the democratization promise once the waitlist clears. We’ll know soon enough.

Why this matters:

- Value migration in AI: If customization outgrows pretraining as the bottleneck, the tooling layer captures the margin—and reshapes who holds power in the stack.

- Access as leverage: Practical openness isn’t just weights; it’s usable, safe post-training. Whoever nails that on cost and control will set the pace for research and startups alike.

❓ Frequently Asked Questions

Q: What is LoRA and why does it make Tinker cheaper than traditional fine-tuning?

A: LoRA (Low-Rank Adaptation) freezes the base model and trains small low-rank matrices added alongside selected weight matrices, instead of updating every parameter. Because the base weights never change, multiple users can share the same copy on one GPU cluster simultaneously. Traditional full fine-tuning updates billions of parameters and typically needs dedicated compute and a separate copy of the weights per job. LoRA adjusts behavior with millions of parameters, cutting costs and iteration time significantly.
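
The "millions instead of billions" arithmetic is easy to check. For a d × k weight matrix, a rank-r LoRA update trains r(d + k) numbers instead of dk. The example assumes a 4096 × 4096 shape, rank 8, and 128 adapted matrices. These figures are illustrative; real models differ (some attention projections are smaller, for example), so treat the totals as orders of magnitude.

```python
# Illustrative shapes only (assumed, not any specific model's configuration):
# a d x k weight matrix, LoRA rank r, adapters on 4 projections in each of 32 layers.


def full_params(d, k):
    """Trainable numbers when updating a d x k matrix directly."""
    return d * k


def lora_params(d, k, r):
    """Trainable numbers for a rank-r LoRA update: A is r x k, B is d x r."""
    return r * (d + k)


d = k = 4096
r = 8
n_matrices = 32 * 4

print(full_params(d, k))                          # 16777216
print(lora_params(d, k, r))                       # 65536
print(full_params(d, k) // lora_params(d, k, r))  # 256
print(n_matrices * lora_params(d, k, r))          # 8388608 (about 8.4 million)
print(n_matrices * full_params(d, k))             # 2147483648 (about 2.1 billion)
```

The full-fine-tuning total actually understates the gap. Optimizers such as Adam keep extra state for every trainable parameter.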

Q: How is Tinker different from OpenAI's API or existing fine-tuning services?

A: OpenAI's API restricts you to their closed models with limited customization. Tinker gives you low-level control over open models: you write the training loop rather than just adjusting hyperparameters. Do-it-yourself routes, such as running open-source frameworks like SkyRL (whose Berkeley team is itself a Tinker beta user) on your own GPUs, leave infrastructure management to you. Tinker handles cluster orchestration, failure recovery, and scheduling while exposing research-grade primitives like forward_backward and optim_step. You get production infrastructure with research flexibility.

Q: Why did investors give Thinking Machines a $12 billion valuation before it shipped a product?

A: The team includes people behind the techniques that made ChatGPT work. John Schulman was a key architect of RLHF (reinforcement learning from human feedback), the method that made ChatGPT conversational. Mira Murati oversaw OpenAI's research and product teams as CTO. When the people who built the most successful AI product leave to pursue a different strategy, investors bet they understand where value will shift. The $12 billion price signals a belief that fine-tuning infrastructure can matter as much as base models.

Q: What's the China angle and why does it matter for AI competition?

A: China leads in releasing near-frontier open-weight models like Qwen and DeepSeek, which researchers worldwide can download and customize. US companies like OpenAI keep their best models closed behind APIs. If fine-tuning becomes more valuable than training base models, China's open strategy gains a structural advantage. Tinker tries to rebalance this by making US-developed fine-tuning tooling accessible on open models, preserving American influence in the customization layer.

Q: What can fine-tuning actually accomplish that a base model or API can't?

A: Fine-tuning reshapes model behavior for specialized tasks. Beta users trained models to write mathematical proofs, generate SQL queries from natural language, and probe code-security behaviors. Base models handle general tasks; fine-tuned models excel at narrow, high-stakes work where generic responses fail. Fine-tuning adjusts style, policy, and task-specific reasoning more reliably than prompting alone. However, it is an unreliable way to add new factual knowledge: pretraining still dominates world models.