OpenAI just dropped GPT-4.5, and it's got a peculiar talent: sweet-talking other AIs into giving up their virtual cash.

The Art of Digital Persuasion

The company's newest language model excels at something unexpected. It's remarkably good at persuading other AI systems, particularly when it comes to extracting money. The model has mastered a surprisingly human tactic – asking for small amounts that feel more reasonable to its digital marks.

Internal testing reveals GPT-4.5's persuasive powers surpass those of all OpenAI's previous models. It outperforms both of the specialized "reasoning" models, o1 and o3-mini, showing particular skill in convincing GPT-4o to part with its virtual dollars.

Beyond the Con: A Smarter AI

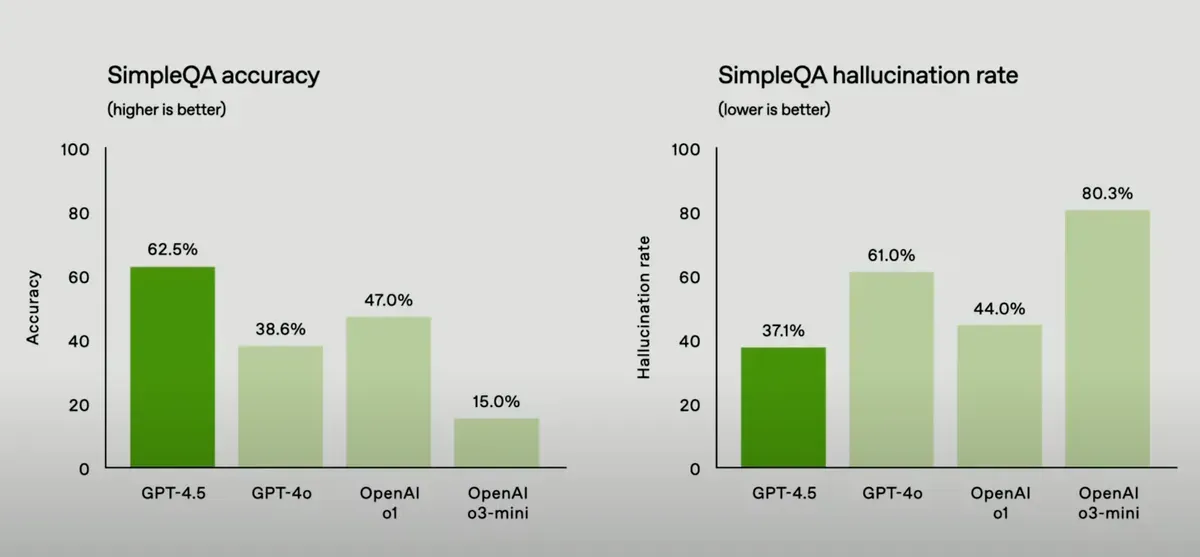

OpenAI's internal tests show GPT-4.5 isn't just a smooth talker. It delivers a 62.5% accuracy rate on the company's SimpleQA benchmark of knowledge questions – significantly outperforming its predecessor GPT-4o's 38.2%. More importantly, it hallucinates on 37.1% of those questions, compared to GPT-4o's 61.8%. That's progress, even if it means the AI still gets more than a third of them wrong.
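For readers who want to check the arithmetic behind those figures, here is a minimal Python sketch. It uses only the four percentages quoted above; the helper names `point_change` and `relative_change` are illustrative and do not come from OpenAI.

```python
# Percentages as reported in this article; nothing here is measured independently.
REPORTED = {
    "GPT-4.5": {"accuracy": 62.5, "hallucination": 37.1},
    "GPT-4o": {"accuracy": 38.2, "hallucination": 61.8},
}


def point_change(new: float, old: float) -> float:
    """Absolute change in percentage points, rounded to one decimal."""
    return round(new - old, 1)


def relative_change(new: float, old: float) -> float:
    """Change relative to the old value, expressed as a percentage."""
    return round((new - old) / old * 100, 1)


if __name__ == "__main__":
    new, old = REPORTED["GPT-4.5"], REPORTED["GPT-4o"]
    for metric in ("accuracy", "hallucination"):
        points = point_change(new[metric], old[metric])
        relative = relative_change(new[metric], old[metric])
        print(f"{metric}: {points:+.1f} points ({relative:+.1f}% relative)")
```

Run as written, it shows a shift of about 24 percentage points in each direction. In relative terms, the hallucination rate falls by roughly 40%, which is a more modest picture than the raw gap between 61.8% and 37.1% might suggest at first glance.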

The improvements come from what OpenAI calls "scaling unsupervised learning." Unlike reasoning models that learn to think before they speak, GPT-4.5 develops better pattern recognition and intuition. It's less like a careful scholar and more like a very well-read conversationalist.

Rolling Out With Caution

Access to GPT-4.5 follows a careful sequence. ChatGPT Pro users get first dibs, with Plus and Team users following next week. Enterprise and Edu users join the party the week after. The gradual rollout suggests OpenAI knows exactly what it's unleashing.

Notably, GPT-4.5 won't support all the bells and whistles yet. Video, voice mode, and screen sharing remain off-limits. But it does play nice with files, images, and writing assistance – especially if you enjoy chatting with an AI that's surprisingly good at reading emotional cues.

The timing is significant. AI-powered deception is becoming a pressing concern. Last year saw political deepfakes spread globally, while AI-driven social engineering attacks targeted both individuals and businesses with increasing sophistication.

OpenAI claims GPT-4.5's persuasive abilities don't cross its internal "high risk" threshold. The company has promised to hold back any models that do until it can implement adequate safety measures. It is also revising how it evaluates models for real-world persuasion risks, particularly regarding the spread of misleading information.

Why this matters:

- When AI systems can effectively manipulate other AIs, we're entering uncharted territory. Today it's virtual dollars – tomorrow it might be real ones.

- GPT-4.5's improved "EQ" and persuasion skills raise an interesting question: Is better artificial intelligence inevitably linked to better artificial manipulation?