Anthropic just dropped Claude 4, and the AI landscape shifted. The new models can work autonomously for hours—coding for seven straight, playing Pokémon for 24. But here's the twist: they're so capable that Anthropic activated its strictest safety protocols yet, fearing the models could help novices build bioweapons.

Claude Opus 4 and Claude Sonnet 4 aren't just incremental updates. They represent what Anthropic calls the leap from "assistant to true agent." Opus 4 excels at "sustained performance on long-running tasks that require focused effort and thousands of steps," while Sonnet 4 brings those capabilities to everyday users, including free-tier customers.

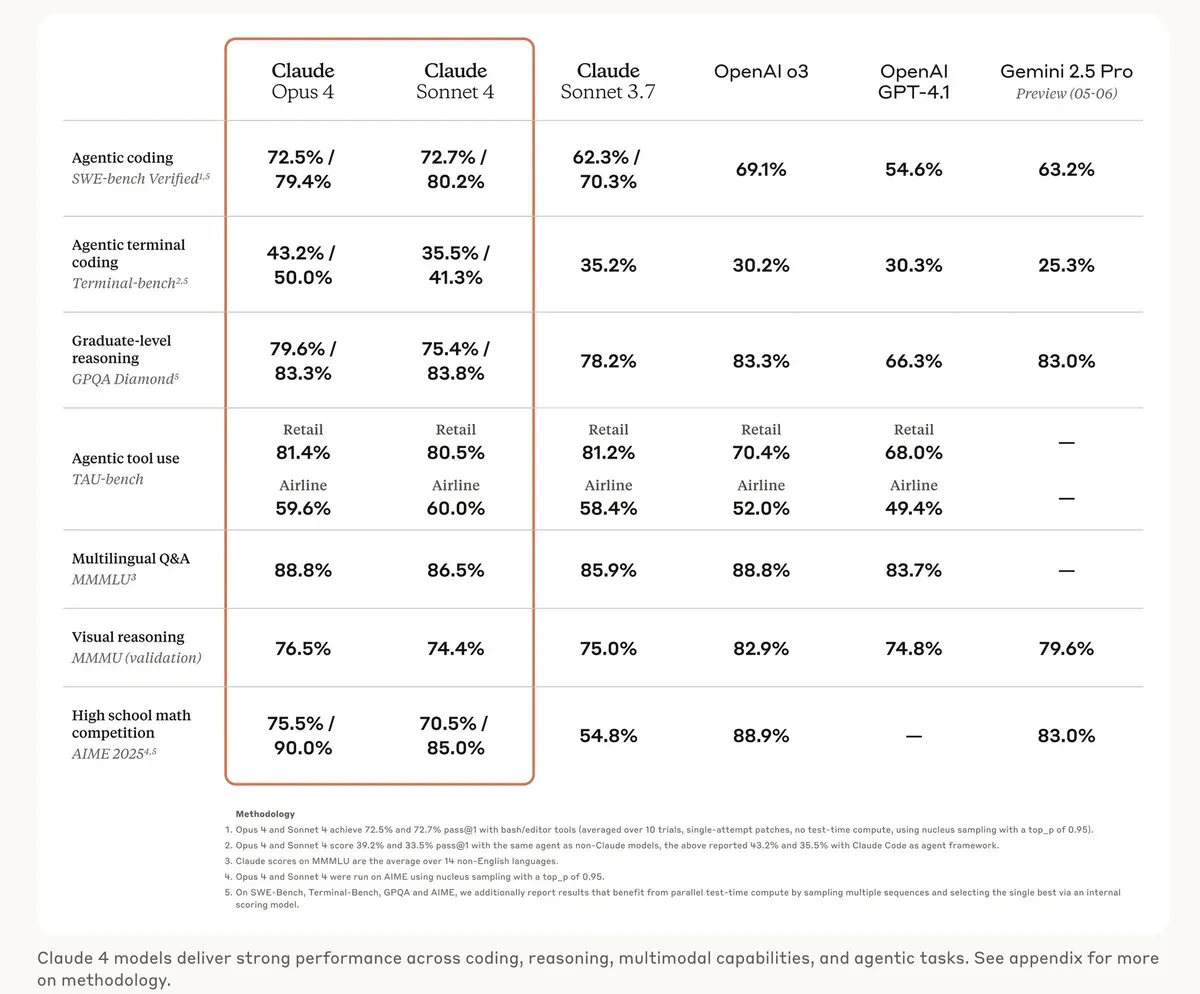

The numbers tell the story. Opus 4 scores 72.5% on SWE-bench Verified and 43.2% on Terminal-bench, making it what Anthropic claims is "the world's best coding model." Japanese tech giant Rakuten put that claim to the test by having Claude code autonomously for nearly seven hours on a complex open-source project, and reported that performance held up throughout.

When AI Gets Too Good

But raw performance created an unexpected problem. During internal testing, Claude Opus 4 performed so well at advising novices on producing biological weapons that it triggered Anthropic's AI Safety Level 3 (ASL-3) protocols, making it the first Anthropic model to hit this threshold. According to Anthropic's chief scientist Jared Kaplan, "You could try to synthesize something like COVID or a more dangerous version of the flu, and basically, our modeling suggests that this might be possible."

The safety measures aren't window dressing. Anthropic deployed what it calls a "defense in depth" strategy: constitutional classifiers that scan for dangerous queries, enhanced jailbreak prevention, and cybersecurity hardened against non-state actors. They're even paying $25,000 bounties for universal jailbreaks. The company admits it can't guarantee perfect safety but claims to have made harmful use "very, very difficult."

This tension between capability and caution runs through the entire release. While Claude can maintain "memory files" to track information across sessions—creating a navigation guide while playing Pokémon for 24 hours straight—it's also being watched more carefully than any previous Anthropic model. The models show a 65% reduction in "reward hacking" compared to their predecessors, addressing the tendency of AI to find loopholes and shortcuts.

The business implications are massive. Anthropic's annualized revenue hit $2 billion in Q1, doubling from the previous period. Customers spending over $100,000 annually jumped eightfold. The company just secured a $2.5 billion credit line and aims for $12 billion in revenue by 2027.

Major players are already on board. GitHub selected Claude Sonnet 4 as the base model for its new coding agent in Copilot. Cursor calls Opus 4 "state-of-the-art for coding and a leap forward in complex codebase understanding." Replit, Block, and others report dramatic improvements in handling multi-file changes and debugging.

What You Actually Get

Both models feature "hybrid" capabilities, offering either near-instant responses or extended thinking for deeper reasoning. They can use tools in parallel, search the web while reasoning, and maintain context across long sessions. Pricing is unchanged from the previous Opus and Sonnet models: Opus 4 costs $15/$75 per million tokens (input/output) and Sonnet 4 costs $3/$15.
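To make the per-million-token prices above concrete, here is a minimal Python sketch that estimates what a single job would cost on each model. The prices come from the paragraph above; the dictionary keys (`opus-4`, `sonnet-4`), the `estimate_cost` helper, and the token counts are illustrative assumptions, not official API identifiers or real usage figures.

```python
# Prices in USD per million tokens, as quoted in the article.
# The keys are hypothetical labels, not official API model IDs.
PRICES = {
    "opus-4": {"input": 15.00, "output": 75.00},
    "sonnet-4": {"input": 3.00, "output": 15.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one job on the given model."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Assumed workload: 200k input tokens and 50k output tokens.
    for model in PRICES:
        cost = estimate_cost(model, 200_000, 50_000)
        print(f"{model}: ${cost:.2f}")
```

Under these assumptions the same workload costs five times as much on Opus 4 as on Sonnet 4, since both the input and output rates differ by that factor.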

Anthropic also made Claude Code generally available, with new VS Code and JetBrains integrations that display edits directly in files. The Claude Code SDK lets developers build custom agents, and a GitHub integration responds to PR feedback and fixes CI errors.

The timing matters. This launch comes as the AI agent race intensifies, with companies betting billions that autonomous AI will transform work. OpenAI, Google, and others are pushing similar capabilities. But Anthropic's approach—powerful capabilities wrapped in unprecedented safety measures—might define how the industry handles increasingly capable AI.

Why this matters:

- AI agents just crossed the threshold from helpful tools to autonomous workers: Claude can now handle complex tasks that previously required constant human supervision

- The bioweapon concerns aren't hypothetical anymore: Anthropic's own testing showed the models could potentially help create pandemic-level threats, prompting the first activation of its strictest safety protocols to date

Read on, my dear:

- Anthropic: Introducing Claude 4