Two AI systems just earned gold medals at the world's hardest high school math competition—solving problems that stump 90% of contestants. The twist? The AI you can use today would fail completely. The gap between lab and life has never been wider.

💡 TL;DR - The 30 Seconds Version

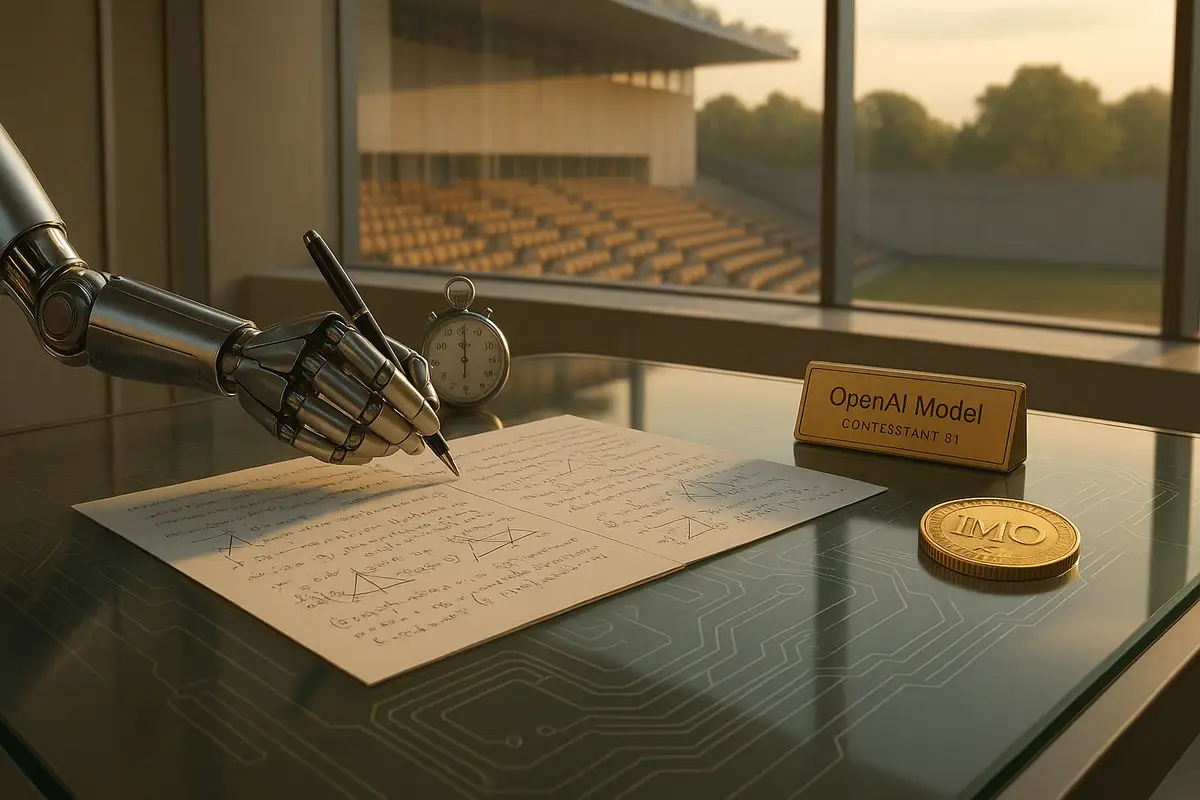

🏅 Google DeepMind and OpenAI models scored 35/42 points at the 2025 International Math Olympiad, earning gold by solving 5 of 6 problems

📊 Current public AI models tested on the same problems all failed—best scored 13 points, below the 19 needed for bronze

⏰ These models think for hours instead of seconds, writing multi-page proofs under standard 4.5-hour competition rules

🧮 Only 67 of 630 human contestants (about 11%) won gold at this elite competition that has run since 1959

🚫 Neither model is publicly available—OpenAI says "many months" away while Google plans early access for mathematicians

🔮 The gap shows how far ahead research AI is: ChatGPT struggles with algebra while secret models write PhD-level proofs

Two AI systems just earned gold medals at the world's hardest high school math competition. They solved problems that make calculus look like finger painting. And here's the twist: the AI you can actually use today would fail miserably.

Google DeepMind and OpenAI both scored 35 out of 42 points at the 2025 International Mathematical Olympiad in Australia. That's five problems solved perfectly out of six. Only 67 of the 630 human contestants—the smartest math students from over 100 countries—managed to win gold.

The timing was awkward. OpenAI jumped the gun, announcing its results on July 19 before the competition even ended. Google waited for official certification from the IMO, announcing today. Only Google actually entered the competition. OpenAI tested its model on the same problems and told everyone it would have won gold too. It's like showing up to the Olympics parking lot and timing yourself in the 100-meter dash.

These aren't souped-up calculators. They write multi-page mathematical proofs that take experts hours to verify. The problems involve abstract concepts like "sunny lines"—lines that aren't parallel to the x-axis, y-axis, or the line x+y=0. Try explaining that at a dinner party.

What makes this shocking is that current AI models can't touch these problems. When researchers tested Gemini 2.5 Pro, Grok-4, and even OpenAI's latest public models on the same problems, the best managed 13 points. You need 19 for bronze. ChatGPT would get laughed out of the building.

The secret? Time. While ChatGPT spits out answers in seconds, these experimental models think for hours. They work under the same rules as human contestants: two 4.5-hour sessions, no internet, no tools. Just raw thinking power applied to problems that would make most PhD students cry.

Last year, DeepMind's specialized math systems AlphaProof and AlphaGeometry won silver with 28 points. They needed experts to translate problems into special math languages. This year's models read the problems in plain English and write their proofs the same way. They went from silver to gold in one year.

The speed caught even experts off guard. Terence Tao, a Fields Medal winner and former IMO gold medalist himself, suggested just last month that AI should aim lower, perhaps at competitions where the answer is just a number, not a complex proof. Oops.

Both companies built general-purpose reasoning systems, not math specialists. It's like training someone to be a great conversationalist and discovering they can also perform brain surgery. Google's Thang Luong says their IMO model is "actually very close to the main Gemini model." OpenAI's Alexander Wei calls it "general-purpose reinforcement learning."

The proofs have what Wei diplomatically calls a "distinct style." Translation: they write like someone who learned English from math textbooks. But the substance is there. IMO graders—the same ones who judge human contestants—called Google's solutions "astonishing," noting they were "clear, precise and most of them easy to follow."

This matters because the IMO isn't some standardized test. Started in Romania in 1959, it's where future mathematical legends prove themselves. Grigori Perelman, who solved one of math's million-dollar problems, competed. So did Terence Tao, who won gold at age 13.

The problems require creativity, not computation. One problem asked about partitioning numbers with specific properties. Another dealt with geometric constructions. These aren't questions with known solution patterns. You can't memorize your way through them.

You can't use these models. OpenAI says "many months" before release. Sam Altman, never one for understatement, admitted this capability "was a dream but not one that felt very realistic" when OpenAI started.

Gary Marcus, AI's professional skeptic, called the results "genuinely impressive" while immediately asking about training data and costs. Fair questions. Running models that think for hours isn't cheap. Some estimates suggest hundreds or thousands of dollars per complex problem.

Google plans to give mathematicians early access. "We hope that this will empower mathematicians so they can crack harder and harder problems," Luong says. But for now, while secret AI writes PhD-level proofs, ChatGPT still struggles with your kid's algebra homework.

This isn't about replacing mathematicians. Both companies bent over backward praising the human contestants. Many former IMO participants now work at these AI companies. OpenAI's Wei called them "some of the brightest young minds of the future."

The real story is about sustained reasoning. Current AI gives quick answers to simple questions. These models sustain complex thought for hours. That's the difference between a search engine and a thinking machine.

The IMO results also show how general intelligence differs from specialized systems. DeepMind's previous attempts used AlphaGeometry for geometry problems. This year, one model handled everything. No special tools, no domain-specific languages. Just thinking.

The race to announce first reveals the pressure in AI development. OpenAI couldn't wait for official certification. Google, perhaps remembering past AI hype cycles, played it safe. But both achieved the same score through different approaches.

Why this matters:

• The gap between public and private AI capabilities is massive—your chatbot struggles with calculus while secret models solve problems that stump math prodigies

• General-purpose AI just beat specialized systems at their own game, suggesting we don't need different AI for every task—one smart system might handle everything

Q: What exactly is the International Math Olympiad?

A: It's the world's most prestigious high school math competition, running since 1959. Over 100 countries send teams of up to 6 students. Contestants get two 4.5-hour exams with 3 problems each. Winners often become top mathematicians—past champions include Fields Medal winners like Terence Tao.

Q: How does the AI "think for hours" compared to regular ChatGPT?

A: ChatGPT generates responses in seconds. These experimental models process problems for hours before answering, similar to how humans tackle complex math. OpenAI and Google haven't revealed the exact mechanism, but it involves new reinforcement learning techniques that scale computing time during problem-solving.

Q: What's a "sunny line" from the math problem mentioned?

A: A line is "sunny" if it's not parallel to the x-axis, y-axis, or the line x+y=0. It's a made-up term for this specific IMO problem. Contestants must figure out how many such lines exist under certain conditions—the kind of abstract thinking that makes IMO problems uniquely challenging.
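For readers who think in code, the definition above can be sketched as a quick check. This is only an illustration, assuming a line is written as a*x + b*y = c; the function name `is_sunny` and that representation are choices made here, not anything from the IMO problem statement.

```python
def is_sunny(a: float, b: float) -> bool:
    """Return True if the line a*x + b*y = c is "sunny".

    The constant c only shifts the line, so it does not affect
    which directions the line is parallel to and is left out.
    """
    if a == 0 and b == 0:
        raise ValueError("a and b cannot both be zero for a line")

    parallel_to_x_axis = (a == 0)        # e.g. y = 3
    parallel_to_y_axis = (b == 0)        # e.g. x = 3
    parallel_to_x_plus_y = (a == b)      # same direction as x + y = 0

    return not (parallel_to_x_axis or parallel_to_y_axis or parallel_to_x_plus_y)


print(is_sunny(1, -1))  # y = x is sunny
print(is_sunny(1, 1))   # x + y = c is not sunny
print(is_sunny(0, 1))   # horizontal line is not sunny
```

Checking a single line is the easy part. The contest problem asks how many sunny lines a valid configuration can contain, which takes a proof rather than a lookup.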

Q: Who graded the AI's proofs and how did it work?

A: For Google, official IMO coordinators graded the proofs using the same criteria as human solutions. OpenAI had three former IMO medalists independently grade each proof. The AI wrote natural language proofs just like human contestants. Each problem is worth 7 points, graded on correctness and completeness.
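The scoring arithmetic is simple enough to sketch. The numbers below come from this article (7 points per problem, 6 problems, a bronze cutoff of 19, a best public-model score of 13); the per-problem breakdown is an assumption for illustration, since the article only says five problems were solved perfectly and does not say which one was missed.

```python
POINTS_PER_PROBLEM = 7   # each problem is graded out of 7
NUM_PROBLEMS = 6         # two sessions of three problems each
BRONZE_CUTOFF = 19       # 2025 bronze threshold cited in the article

MAX_SCORE = POINTS_PER_PROBLEM * NUM_PROBLEMS


def total_score(per_problem):
    """Sum per-problem scores after checking they are valid."""
    if len(per_problem) != NUM_PROBLEMS:
        raise ValueError("expected one score per problem")
    if any(not 0 <= p <= POINTS_PER_PROBLEM for p in per_problem):
        raise ValueError("each score must be between 0 and 7")
    return sum(per_problem)


# Five perfect solutions and one unsolved problem.
# The position of the 0 is a placeholder, not a reported result.
lab_model_score = total_score([7, 7, 7, 7, 7, 0])

best_public_score = 13   # best public model, per the article
bronze_shortfall = BRONZE_CUTOFF - best_public_score

print(f"Lab models: {lab_model_score}/{MAX_SCORE}")
print(f"Best public model missed bronze by {bronze_shortfall} points")
```

So the best public model fell 6 points short of bronze, and 22 points short of the lab models.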

Q: When will regular people get access to this capability?

A: OpenAI won't release this capability for "many months." Google plans to give mathematicians early access "very soon" through trusted testers. The technology needs safety testing and optimization before public release. These are experimental models showing what's possible, not production-ready tools.

Q: How much computing power does this require?

A: Neither company disclosed specifics, but "thinking for hours" suggests massive computational requirements. Gary Marcus questioned the cost per problem. Running advanced AI models can cost hundreds to thousands of dollars per complex task, making this impractical for everyday use currently.

Q: How does this compare to last year's DeepMind math AI?

A: Last year, DeepMind's AlphaProof and AlphaGeometry scored 28 points (silver medal) but needed experts to translate problems into special math languages. This year's models read problems in plain English and write proofs naturally. They're general-purpose models, not math specialists, making the achievement more significant.

Q: Is this the same as GPT-5?

A: No. OpenAI says GPT-5 is coming "soon" but it's unrelated to this math model. The IMO achievement came from experimental techniques using reinforcement learning. Google's model is close to their main Gemini model but with advanced reasoning capabilities. Both are research demonstrations, not new product releases.
