Moltbot Left the Door Open. Tesla Bet the Factory.

Moltbot exposed 1,862 servers without authentication. Tesla discontinues flagship cars for unproven robots. Airtable launches AI agents amid platform complaints.

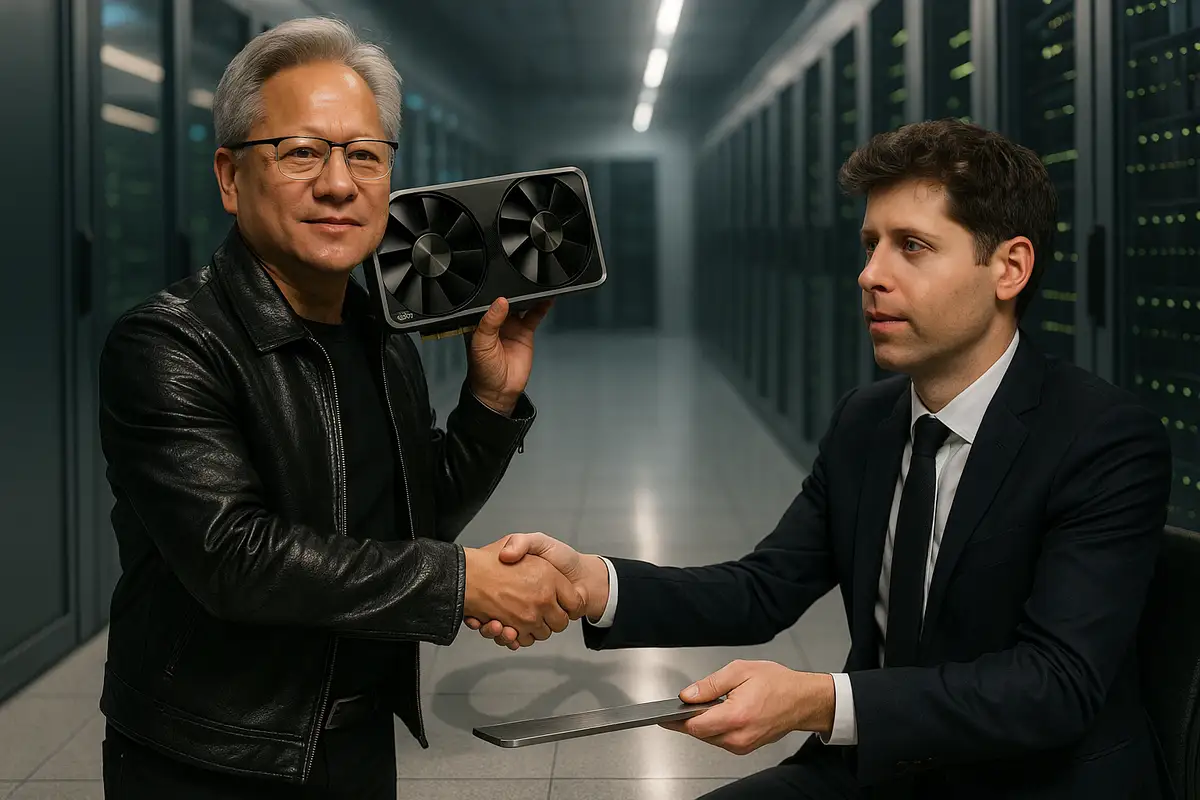

OpenAI commits $1.4T to infrastructure; Nvidia projects $500B in chip sales. Same day, same pitch: AI requires sovereign-scale capital. The tension: revenue is still catching up to the rhetoric, and someone will hold expensive capacity if demand falters.

On Tuesday, Sam Altman put a sovereign-scale sticker price on the next phase of AI: $1.4 trillion in infrastructure already committed—roughly 30 gigawatts of data-center capacity—and an ambition to build a gigawatt a week at about $20 billion per GW. He framed it as the only way to meet demand for smarter models and faster inference, a case he laid out in a livestream unveiling OpenAI’s new structure and goals.

The same day in Washington, Nvidia chief Jensen Huang walked into a convention hall with his own number: $500 billion in chip bookings over the next five quarters, fueled by today’s Blackwell and next year’s Rubin platforms. He paired that with announcements calibrated for policymakers: Blackwell now in full production in Arizona, a $1 billion strategic stake in Nokia to power AI-ready 5G/6G networks, and seven new Department of Energy supercomputers. He ended by thanking the audience for “making America great again.” The message was unmistakable.

Both companies are selling the same idea to different buyers. Altman is pitching financiers and partners. Huang is pitching Washington. The claim: whoever controls the AI buildout controls the future. The tension: revenue is still catching up to rhetoric.

Key Takeaways

• OpenAI committed $1.4T to infrastructure, targeting a gigawatt-per-week buildout at about $20B per gigawatt, but needs hundreds of billions in annual revenue it doesn't yet have

• Nvidia's Washington push: $500B chip revenue projection, Blackwell production in Arizona, $1B Nokia stake for 5G/6G, seven DOE supercomputers—all timed for political leverage

• The revenue gap: Both companies are betting demand materializes before their runway ends, but capital commitments are concrete while paying workloads remain theoretical

• Sovereign-scale stakes: AI infrastructure now requires government and public-market capital, embedding both companies in national-security calculations before the next regulatory wave

Altman didn’t sugarcoat the mismatch. To sustain that buildout, OpenAI “eventually” needs hundreds of billions in annual revenue. Today, it’s nowhere near that mark. That’s why the $1.4 trillion figure bundles chip purchases, cloud capacity, and multi-partner deals across Nvidia, AMD, Broadcom, Oracle, and others—not just cash on hand. It’s a financing machine as much as a technology plan.

The structure shift at OpenAI—placing the nonprofit firmly on top of a public-benefit corporation—adds a path to public markets. It also signals that private capital alone won’t foot this bill. The scale is simply new. Sovereign, not startup.

Nvidia sits on the other side of this ledger. Those bookings depend on buyers like OpenAI (and the clouds behind it) keeping the orders flowing. If the demand curve stalls, the silicon doesn’t get cheaper. It just piles up. That’s the unspoken risk in both presentations.

Huang’s Arizona news is politics and supply chain in one package. Moving Blackwell production—at least in part—onto U.S. soil checks a box for national-security hawks and buffers Nvidia against shocks abroad. It also gives the company a louder voice in export debates that have already cost it China sales.

There are caveats. Nvidia didn’t say what share of Blackwell will be made domestically versus Taiwan. The practicalities of advanced packaging, specialized tooling, and trained labor still matter. But “made in Arizona” buys Nvidia time and goodwill as it argues for flexible export rules and licenses that let U.S. firms keep selling the world’s top AI chips—on America’s terms. Smart politics.

OpenAI’s gigawatt-per-week ambition would dwarf any private computing program on record. One gigawatt is roughly a big nuclear reactor’s output. That means new power lines, substations, water or alternative cooling, transformers, chip supply, and construction labor at industrial scale. It’s not just racks and nodes. It’s grid math.

Nvidia is betting the grid and the capital really do show up. The company says demand supports 20 million units of its latest chips over the cycle, with Rubin arriving as Blackwell ramps. The near-term proof points are government-grade: seven DOE systems, plus a quantum-link project (NVQLink) that marries GPUs with quantum processors for error correction and orchestration. These are the kinds of line items Congress understands.

If enterprise demand doesn’t materialize quickly enough, consumer AI has to pull more weight than a $20/month subscription can carry. Altman says there are broader consumer revenue streams ahead but didn’t detail them. That’s the gap. Revenue remains assumed; capex is real.

The Nokia deal is a tell. A $1 billion equity stake to put Nvidia silicon into 5G/6G base stations is both a go-to-market plan and a foreign-policy argument: build Western networks on U.S. technology, not Huawei’s. In Washington, that line plays. So do DOE supercomputers tied to nuclear stewardship and fusion research, and quantum-secure initiatives that promise resilient encryption.

OpenAI’s parallel argument is scale as industrial policy. A trillion-dollar buildout means jobs, fabs, power plants, and new industrial corridors. It pulls governors and utilities into the conversation. It turns permitting reform into an AI issue. It reframes AI regulation as an employment and competitiveness question. Once the spigots open, they’re hard to close.

The question for both companies is timing. How fast will paying workloads arrive relative to construction schedules and chip deliveries? And how concentrated will that spending be among three clouds and a handful of frontier-model labs? Concentration risk cuts both ways: buyers gain leverage; suppliers chase political cover.

If demand underwhelms, someone is left holding very expensive capacity. If export rules tighten again, the “U.S. stack” narrative faces a revenue problem abroad. If power interconnects slip, commissioning dates do too. None of those are tail risks at this scale. They’re scenarios to model.

Still, the die is cast. OpenAI and Nvidia are betting that the next decade belongs to those who can finance, build, and wire up compute fastest. Tuesday simply made the scale explicit. The money will have to follow.

Q: What does "one gigawatt per week" actually mean in physical terms?

A: One gigawatt is roughly the output of a large nuclear reactor—enough to power about 750,000 homes. Building that weekly means constructing data centers plus the supporting infrastructure: substations, cooling systems, power lines, and transformers. For context, Amazon's entire AWS network took 15 years to reach 50 gigawatts globally. OpenAI wants to add 52 gigawatts annually.
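The figures above are simple to check. The sketch below is a back-of-envelope calculation in Python using only numbers quoted in this article: 1 GW per week, about $20 billion per GW, roughly 750,000 homes per GW, and $1.4 trillion for about 30 GW already committed. The variable and function names are our own, and the homes-per-GW ratio is a rule of thumb, not a precise conversion.

```python
# Back-of-envelope math using figures quoted in the article (rough, illustrative).
WEEKS_PER_YEAR = 52
GW_PER_WEEK = 1            # Altman's stated build-rate ambition
COST_PER_GW_USD = 20e9     # "about $20 billion per GW"
HOMES_PER_GW = 750_000     # rough rule of thumb for one large reactor's output

COMMITTED_USD = 1.4e12     # infrastructure already committed
COMMITTED_GW = 30          # "roughly 30 gigawatts"


def annual_buildout(gw_per_week: float, weeks: int = WEEKS_PER_YEAR) -> float:
    """Gigawatts added per year at a constant weekly build rate."""
    return gw_per_week * weeks


annual_gw = annual_buildout(GW_PER_WEEK)
annual_capex = annual_gw * COST_PER_GW_USD
homes_equivalent = annual_gw * HOMES_PER_GW
implied_cost_per_gw = COMMITTED_USD / COMMITTED_GW

print(f"New capacity per year: {annual_gw:.0f} GW")
print(f"Capex per year at $20B/GW: ${annual_capex / 1e12:.2f} trillion")
print(f"Homes-equivalent added per year: {homes_equivalent / 1e6:.0f} million")
print(f"Implied cost per GW of existing commitments: ${implied_cost_per_gw / 1e9:.1f} billion")
```

At the stated pace, a single year of construction would run about $1.04 trillion, close to three-quarters of the $1.4 trillion already committed. Dividing the existing commitments by 30 GW gives roughly $47 billion per GW, well above $20 billion. The article does not reconcile the two; the gap may reflect that the $1.4 trillion bundles chips, cloud contracts, and partner deals rather than construction cost alone.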

Q: Why does OpenAI need to go public if it's already raised billions?

A: OpenAI's current revenue (estimated $3-4 billion annually) can't fund $1.4 trillion in infrastructure commitments. The company restructured Tuesday to enable an eventual IPO because private markets—even with venture capital and strategic partners—don't operate at trillion-dollar scale. Public markets provide access to institutional investors, pension funds, and sovereign wealth funds that can sustain this capital intensity.

Q: What happens if AI demand doesn't match these infrastructure bets?

A: Someone holds very expensive, underutilized capacity. Data centers can't be easily repurposed—they're specialized for AI chips, cooling, and power density. If enterprise customers don't materialize or hyperscalers slow spending, both OpenAI and Nvidia face write-downs. The concentration risk is real: Microsoft, Amazon, and Google drive most AI chip demand. If any one slows, the entire projection shifts.

Q: How much has Nvidia actually lost from China export restrictions?

A: Nvidia would have recorded $10.5 billion in H20 chip sales over two quarters if the U.S. government hadn't imposed a license requirement in April 2025. The company is currently "100% out of China" with no market share, CEO Jensen Huang said earlier this month. The Trump administration approved H20 licenses in July but required Nvidia to hand over 15% of its H20 revenue from China. Nvidia argues it needs access to $50 billion in potential China sales to fund U.S. R&D.

Q: What does "hundreds of billions in annual revenue" from enterprises actually look like?

A: It means selling AI services beyond $20 monthly ChatGPT subscriptions. Enterprise deals involve API access charged per token, custom model training, dedicated compute capacity, and integration services. OpenAI needs thousands of enterprise customers spending millions annually—think JPMorgan, Walmart, government agencies—plus consumer revenue streams Altman mentioned but didn't detail. The gap between current revenue (~$4B) and target is the entire bet.
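How many enterprise customers does "hundreds of billions" imply? Here is a rough Python sketch that divides a revenue goal by average annual spend per customer. The $100 billion target is an assumption standing in for the low end of that range, and the per-customer spend levels are hypothetical illustrations, not OpenAI figures; only the ~$4 billion starting point comes from the article.

```python
import math

CURRENT_REVENUE_USD = 4e9     # the article's ~$4B estimate
TARGET_REVENUE_USD = 100e9    # assumption: low end of "hundreds of billions"


def customers_needed(target_usd: float, spend_per_customer_usd: float) -> int:
    """Customers required to reach a revenue target at a given average annual spend."""
    return math.ceil(target_usd / spend_per_customer_usd)


growth_multiple = TARGET_REVENUE_USD / CURRENT_REVENUE_USD
print(f"Required growth: {growth_multiple:.0f}x current revenue")

# Hypothetical average annual spend per enterprise customer.
for spend in (1e6, 5e6, 10e6):
    n = customers_needed(TARGET_REVENUE_USD, spend)
    print(f"At ${spend / 1e6:.0f}M per customer per year: {n:,} customers")
```

Even at the low end, the target implies roughly 10,000 customers at $10 million a year each, or 100,000 at $1 million, before counting the consumer streams Altman alluded to.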
