Legacy models restored amid cancellations; scaling story meets user reality.

💡 TL;DR - The 30-Second Version

🔄 OpenAI restored legacy models Sunday after a three-day subscriber revolt over forced GPT-5 consolidation that broke user workflows and reduced message limits.

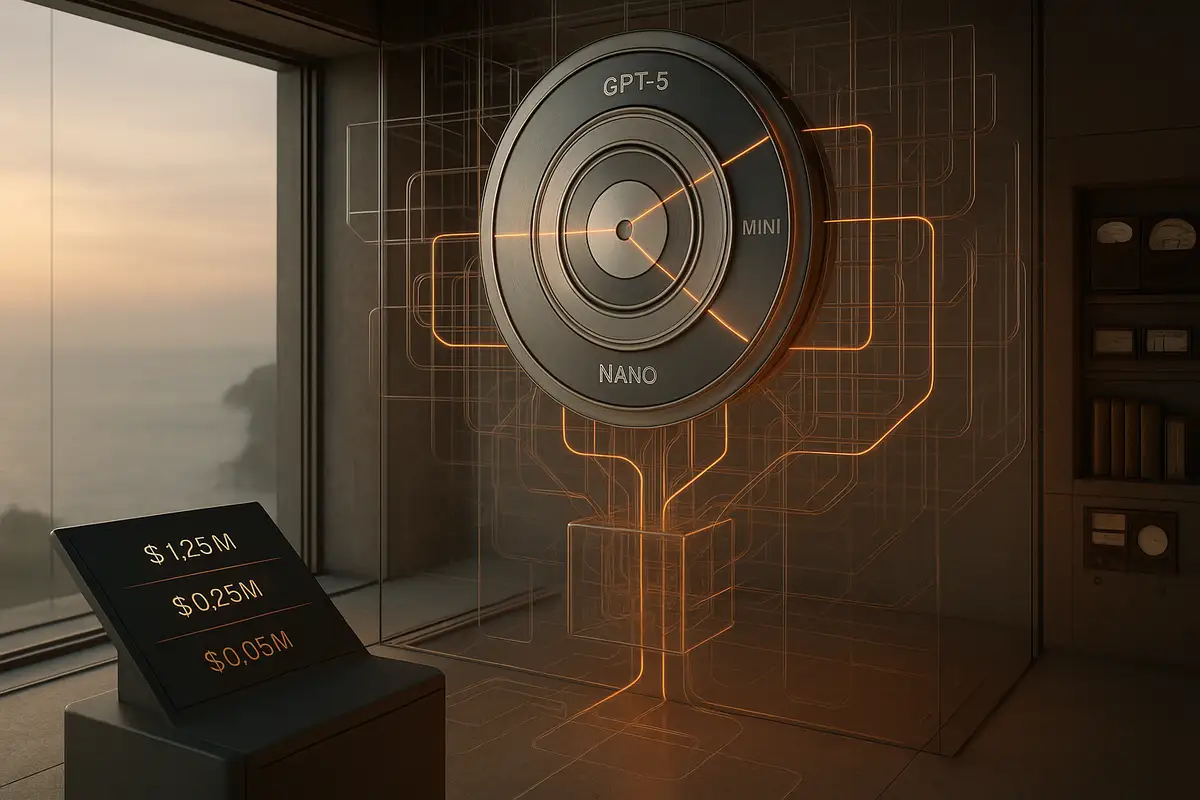

📉 Plus subscribers lost access to model choice and saw weekly message allowances drop from 2,100 on o4-mini to just 200 on GPT-5's reasoning mode.

📊 While GPT-5 reasoning usage jumped from 7% to 24% among Plus users, subscription cancellations accelerated faster than adoption, forcing the reversal.

⚡ OpenAI raised reasoning quotas from 200 to 3,000 weekly messages and promised a UI indicator showing which model handles each query to calm user anger.

🧠 Critics argue GPT-5's marginal improvements and persistent failures on chess, counting, and visual tasks prove fundamental limits in AI scaling approaches.

🎯 The episode shows users can veto product strategy when reliability regresses, shifting leverage toward workflow stability over headline capabilities.

OpenAI restored access to legacy models on Sunday after a three-day subscriber backlash over GPT-5’s forced consolidation. The retreat wasn’t just about interface preferences; it exposed a fragile link between the industry’s scaling narrative and the workflows of people who pay to use these systems every day.

What actually changed

OpenAI removed model choice for Plus subscribers and routed everything through GPT-5’s automated system. Many users had built production habits around specific models—GPT-4o for ideation, o3 for logic checks, multiple variants for cross-validation. Breaking that choice broke those safeguards. Fast.

The consolidation also tightened allowances. Users reported a drop from roughly 2,100 weekly messages on o4-mini to about 200 for GPT-5’s reasoning mode. For power users, that was effectively a lockout.

By Sunday, OpenAI reversed course. Legacy models reappeared in settings, the company lifted reasoning quotas to around 3,000 weekly messages, and promised a UI indicator showing which model handles each query. Progress by decree rarely lands well.

The economics behind the retreat

The usage data moved in opposite directions. Reasoning-mode share among Plus users jumped—from single digits to roughly a quarter of daily queries—yet overall engagement slumped as cancellations accelerated. That’s the wrong kind of growth.

The pattern hinted at two misreads: OpenAI underestimated attachment to known model behavior and overestimated the maturity of its router. Users weren’t clinging to nostalgia; they were protecting accuracy by triangulating answers across systems. The router took that away and offered opacity in return.

Once churn showed up, the fix came fast. Restoring choice costs little and stabilizes revenue. It also buys time to improve routing without forcing it.

Competing readings of “stagnation”

The GPT-5 rollout hardened two interpretations of where AI stands. Investor David Sacks argues the clustering of capabilities across OpenAI, Anthropic, Google, xAI, and Meta reflects healthy competition, not stall-out. Multiple strong players, similar ceilings, persistent pressure. That view treats the plateau as cyclical and solvable.

Gary Marcus offers the opposite reading: GPT-5’s uneven gains confirm limits on neural-network generalization that were predicted years ago. He points to studies showing that chain-of-thought reasoning is brittle under distribution shift. In that lens, bigger isn’t better beyond a point, and the industry needs new foundations, not more tokens.

Both camps observe the same facts: modest benchmark gains, backsliding on some tasks, and lingering failures on structure-dependent problems. The dispute is about what those facts mean.

The pattern recognition problem

The failures aren’t just party tricks gone wrong. GPT-5 still stumbles on chess legality, elementary counting, and basic visual grounding—domains that require persistent internal structure. These misses recur across prompts, models, and months. They’re systematic.

Distribution shift makes it worse. When tasks drift even slightly from patterns seen in training, reasoning quality degrades in predictable ways. That’s exactly the scenario professionals face: messy inputs, edge-case constraints, live stakes. Reliability beats demos.

This is why users prized model diversity. Cross-checking different systems surfaced contradictions and dampened hallucinations. Remove that option and you don’t just frustrate users—you increase error risk.
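The cross-checking habit described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's API: the `Model` type, the `cross_check` function, and the stub models are all hypothetical stand-ins, and real use would wrap actual model calls.

```python
from collections import Counter
from typing import Callable, Dict

# Hypothetical stand-in: a "model" is any function mapping a prompt to an answer.
Model = Callable[[str], str]


def cross_check(prompt: str, models: Dict[str, Model]) -> dict:
    """Ask every model the same question and flag any disagreement."""
    if not models:
        raise ValueError("need at least one model to cross-check")
    # Normalize lightly so trivial formatting differences do not count as dissent.
    answers = {name: ask(prompt).strip().lower() for name, ask in models.items()}
    top_answer, votes = Counter(answers.values()).most_common(1)[0]
    return {
        "answers": answers,
        "majority": top_answer,
        "unanimous": votes == len(answers),
        "dissenters": sorted(n for n, a in answers.items() if a != top_answer),
    }


# Stub models for illustration only (assumed names, canned answers).
stubs = {
    "model_a": lambda p: "3",
    "model_b": lambda p: "3",
    "model_c": lambda p: "2",
}
report = cross_check("How many times does 'r' appear in 'strawberry'?", stubs)
print(report["majority"], report["unanimous"], report["dissenters"])
# prints: 3 False ['model_c']
```

A disagreement does not say which answer is right; it only tells the user where to look more closely. That signal disappears when every query goes to a single, unnamed model.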

The scaling hypothesis under examination

The industry has spent staggering sums on compute under the assumption that scale unlocks general intelligence. After hundreds of billions invested, the latest generation delivers incremental gains with familiar blind spots. Markets notice. So do teams whose work depends on stable, auditable behavior.

For OpenAI, that makes forced migrations dangerous. If capability deltas are marginal and regressions visible, experience becomes the competitive vector. Choice, transparency, and predictable pricing matter more when the “best model” title rotates by benchmark and week. Competitors already claim wins on specific suites. Customers will measure, not assume.

OpenAI’s rapid reversal suggests it understands the new equilibrium. Routing may still be the future, but not as a black box, and not at the expense of professional guardrails users built for themselves. Trust is a feature. So is control.

What to watch next

Two commitments will determine whether this calms or simmers. First, whether OpenAI’s UI actually names the model on every turn so users can audit outcomes. Second, whether the company preserves parallel access long enough for the router to prove it beats human-chosen portfolios. Deadlines won’t decide this. Performance will.

Vendors will keep chasing leadership on narrow benchmarks, and critics will keep flagging structural limits. Both can be true for a while. The market will arbitrate with renewals.

Why this matters:

- Users just proved they can veto product strategy when reliability and control regress, shifting leverage back to workflow stability over headline capability.

- The scaling thesis faces a practical test: if routing and bigger models can’t beat curated model portfolios in the wild, investment logic and roadmaps will need revision.

❓ Frequently Asked Questions

Q: What exactly is OpenAI's "routing" system that caused the controversy?

A: Routing automatically selects which AI model handles each query instead of letting users choose. OpenAI's system was supposed to pick the best model for each task, but users lost the ability to cross-check answers between different models—a key method for catching errors and hallucinations.
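To make the idea concrete, here is a toy router in Python. It is a sketch under loud assumptions: the keyword heuristic, the model names, and the `RoutedReply` type are invented for illustration and say nothing about how OpenAI's router actually decides.

```python
from dataclasses import dataclass


@dataclass
class RoutedReply:
    model: str  # which model handled the query, exposed so the user can audit it
    text: str


# Hypothetical heuristic: phrases that suggest a query needs slower reasoning.
REASONING_HINTS = ("prove", "step by step", "calculate", "debug")


def route(prompt: str) -> str:
    """Pick a (made-up) model name for the prompt."""
    lowered = prompt.lower()
    if any(hint in lowered for hint in REASONING_HINTS):
        return "reasoning-model"
    return "fast-model"


def answer(prompt: str) -> RoutedReply:
    """Return a placeholder reply labeled with the model that was chosen."""
    model = route(prompt)
    return RoutedReply(model=model, text=f"[{model}] reply to: {prompt}")


print(answer("Calculate 17 * 23").model)  # prints: reasoning-model
print(answer("Write a haiku").model)      # prints: fast-model
```

The `model` field is the point of the sketch: a router that reports its choice on every turn can be audited, while one that hides it cannot.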

Q: How much does ChatGPT Plus cost and what limits did users face?

A: ChatGPT Plus costs $20 monthly. Before GPT-5, users got 2,100 weekly messages on o4-mini and 700 on o4-mini high. GPT-5's consolidation cut this to just 200 weekly messages for reasoning tasks, a limit OpenAI raised to 3,000 after the backlash.
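The size of those swings is easier to see as arithmetic. The short Python sketch below uses only the figures quoted in this answer; the variable names are ours, and comparing o4-mini with GPT-5's reasoning mode follows the article's framing rather than any official like-for-like measure.

```python
# Weekly message allowances as quoted above (user-reported figures).
O4_MINI = 2_100
GPT5_REASONING_LAUNCH = 200
GPT5_REASONING_REVISED = 3_000


def percent_change(before: int, after: int) -> float:
    """Change from `before` to `after`, as a percentage of `before`."""
    return (after - before) / before * 100


launch_change = percent_change(O4_MINI, GPT5_REASONING_LAUNCH)
revision_multiple = GPT5_REASONING_REVISED / GPT5_REASONING_LAUNCH
vs_o4_mini = GPT5_REASONING_REVISED / O4_MINI

print(f"Launch limit vs o4-mini: {launch_change:.1f}%")       # -90.5%
print(f"Revised vs launch limit: {revision_multiple:.0f}x")    # 15x
print(f"Revised vs old o4-mini allowance: {vs_o4_mini:.2f}x")  # 1.43x
```

On these numbers the launch limit was a cut of roughly 90%, and the revised quota is a fifteenfold increase that lands about 43% above the old o4-mini allowance.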

Q: What's the "distribution shift" problem that Gary Marcus says proves AI limits?

A: Distribution shift occurs when AI encounters scenarios slightly different from training data. Recent Arizona State University research showed chain-of-thought reasoning becomes "brittle" under these conditions. This suggests fundamental limits to how well current AI can generalize beyond familiar patterns.

Q: How do GPT-4o, o3, and other models actually differ in capabilities?

A: Each model has different strengths: GPT-4o excels at creative tasks, o3 handles logical reasoning better, while o4-mini offers basic functionality at higher volume. Users developed workflows using multiple models for different steps, treating them like specialized tools rather than general replacements.

Q: What specific technical failures does GPT-5 still exhibit?

A: GPT-5 struggles with chess rule adherence, basic counting tasks, and visual comprehension. These aren't edge cases but systematic failures in reasoning about persistent world structures. The model also performed worse than competitors like Grok 4 Heavy on specific benchmarks like ARC-AGI-2.

Q: How did the user revolt actually organize and succeed so quickly?

A: Users organized through Reddit petitions, Twitter complaints, and direct subscription cancellations. OpenAI's internal metrics showed usage migrating (reasoning tasks rose to 24% of queries), but subscription churn accelerated faster than adoption, and the company reversed course within three days.

Q: How does OpenAI's approach compare to competitors like Anthropic and Google?

A: Most competitors offer model choice rather than forced routing. Anthropic's Claude, Google's Gemini, and others maintain distinct versions with different capabilities. The clustering of similar performance across companies suggests no single player has achieved breakthrough advantages that justify removing user control.

Q: Could this backlash affect OpenAI's valuation or funding prospects?

A: Prediction markets showed OpenAI's perceived leadership dropping from 75% to 14% within hours of GPT-5's launch. With the company valued at between $300 billion and $500 billion based on scaling assumptions, evidence of plateauing capabilities and user dissatisfaction could pressure future funding rounds and investor confidence.