💡 TL;DR - The 30-Second Version

💰 OpenAI slashed o3 pricing 80% from $10/$40 to $2/$8 per million tokens while launching o3-pro at $20/$80.

🐌 O3-pro takes over 3 minutes to respond but beats Google and Anthropic models on math and science benchmarks.

🔧 The model excels with extensive context but currently can't generate images, use Canvas, or handle temporary chats.

📊 Expert evaluators prefer o3-pro over standard o3 64% of the time for complex analysis and strategic planning tasks.

🎯 Pro and Team users get access Tuesday, Enterprise customers next week, as reasoning models become the new AI battleground.

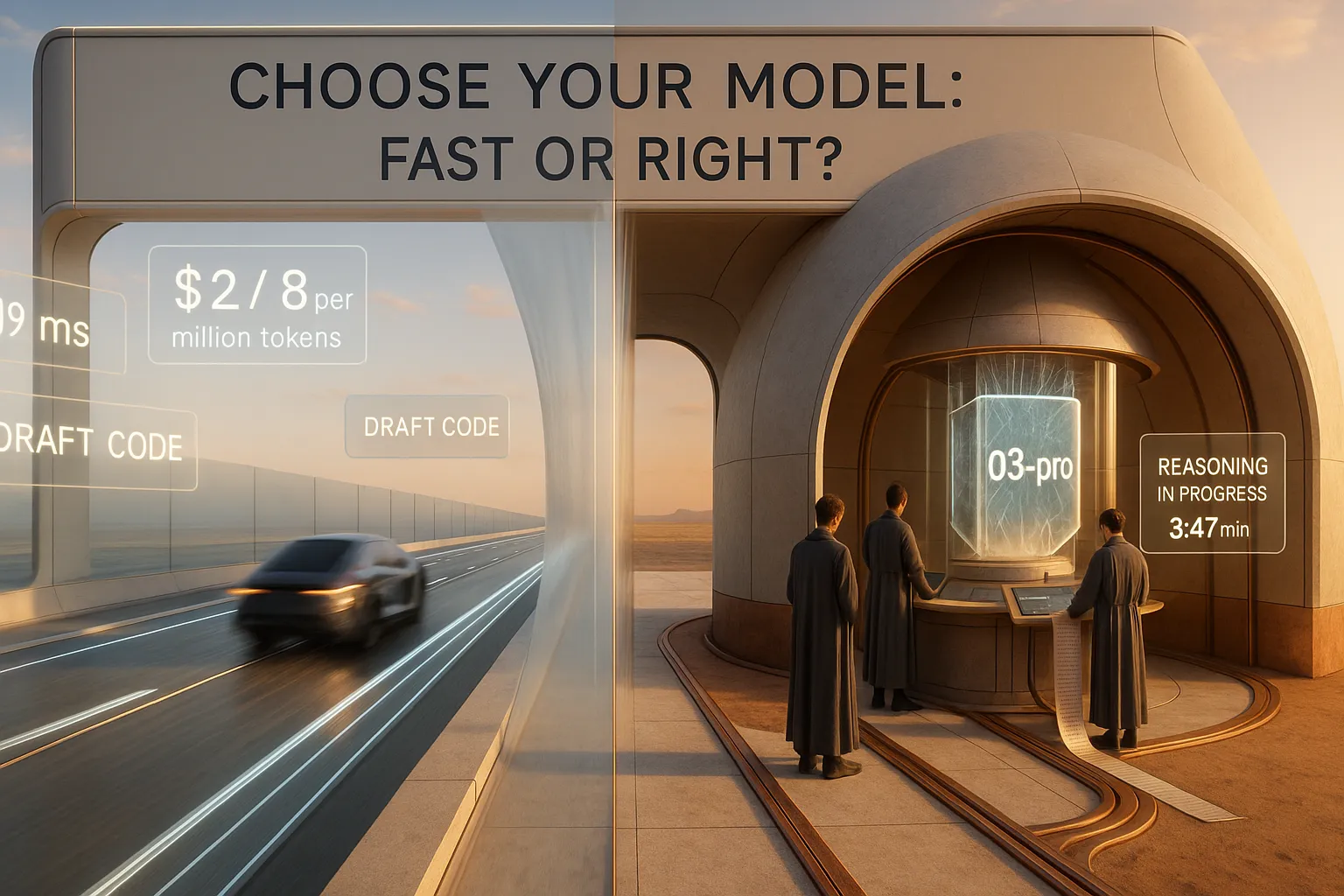

🚀 The AI market now splits between fast chat models and slow thinking models, changing how we interact with artificial intelligence.

OpenAI made two moves Tuesday that reshape the AI pricing landscape. The company cut its o3 model costs by 80% and launched o3-pro, a new reasoning model that takes minutes to respond but delivers what the company calls its most reliable answers yet.

The o3 price drop is dramatic. Input tokens fell from $10 to $2 per million, while output tokens dropped from $40 to $8. This puts o3 in direct competition with Google's Gemini 2.5 Pro and undercuts Anthropic's Claude models significantly.

CEO Sam Altman announced the cuts on X, writing he was "excited to see what people will do with it now." The timing suggests OpenAI wants broader adoption before competitors respond.

The slow lane gets slower

O3-pro takes the opposite approach to speed. Early users report waiting over three minutes for responses to simple prompts like "Hi, I'm Sam Altman." OpenAI admits responses "typically take longer" than its previous o1-pro model and recommends it only when "reliability matters more than speed."

The model costs $20 per million input tokens and $80 per million output tokens through the API. That's ten times the price of the newly discounted o3, which is consistent with theories that o3-pro runs multiple instances of the base model and picks the best answer.
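To make the "multiple instances" theory concrete, here is a minimal best-of-n sketch in Python. It is purely illustrative: OpenAI has not confirmed how o3-pro works, and `toy_generate` and `toy_score` are hypothetical stand-ins for a model and an answer scorer, not real APIs.

```python
import random
from typing import Callable, List, Tuple


def best_of_n(
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    prompt: str,
    n: int,
) -> Tuple[str, List[str]]:
    """Sample n candidate answers and return the highest-scoring one."""
    candidates = [generate(prompt) for _ in range(n)]
    best = max(candidates, key=lambda answer: score(prompt, answer))
    return best, candidates


# Hypothetical stand-ins: a "model" that guesses a number, and a scorer
# that prefers guesses closer to the correct answer (42).
rng = random.Random(0)


def toy_generate(prompt: str) -> str:
    return str(rng.randint(0, 100))


def toy_score(prompt: str, answer: str) -> float:
    return -abs(int(answer) - 42)


best, candidates = best_of_n(toy_generate, toy_score, "What is 6 * 7?", n=10)
print("candidates:", candidates)
print("selected:", best)
```

Under this assumption, every extra candidate means another full generation, so compute grows roughly in proportion to n. That would fit a tenfold price gap, but it does not prove the theory.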

Expert evaluators prefer o3-pro over the standard o3 model 64% of the time. OpenAI claims reviewers rate it higher for clarity, accuracy, and instruction-following across science, programming, business, and education tasks.

Benchmarks tell one story, users tell another

O3-pro beats Google's Gemini 2.5 Pro on the AIME 2024 math test and outperforms Anthropic's Claude Opus 4 on PhD-level science questions. But benchmark performance doesn't capture what makes the model different.

Ben Hylak, who had early access to o3-pro, says the real power shows up when you feed it extensive context. He and his co-founder gave the model their company's complete planning history, goals, and voice memos. The result was a detailed strategic plan that "actually changed how we are thinking about our future."

"There was no simple test or question I could ask it that blew me away," Hylak wrote. "But then I took a different approach."

This points to a key limitation of current AI evaluation. Simple tests miss what happens when models get rich, real-world context to work with.

Tools make the difference

O3-pro has access to web search, file analysis, Python coding, and memory features. But unlike faster models that might hallucinate capabilities, o3-pro seems better at understanding what tools it actually has and when to use them.

Hylak noted the model excels at "accurately communicating what tools it has access to" and "choosing the right tool for the job." This matters more than raw intelligence when AI systems need to work alongside humans and other software.

The model works best as what Hylak calls a "report generator." Give it context, set a goal, and let it think. Don't try to chat with it like you would GPT-4o.
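As a rough illustration of the "report generator" pattern, the sketch below assembles background material and a goal into one long prompt. The function name, section titles, and wording are assumptions made for this example; they do not come from Hylak's write-up or from OpenAI.

```python
from typing import Dict


def build_report_prompt(goal: str, context: Dict[str, str]) -> str:
    """Assemble one long prompt: all background first, then the goal."""
    sections = [f"## {title}\n{body.strip()}" for title, body in context.items()]
    return (
        "Background material:\n\n"
        + "\n\n".join(sections)
        + "\n\nGoal:\n"
        + goal.strip()
        + "\n\nWrite a detailed report that addresses the goal "
        + "using the background above."
    )


# Placeholder content; in practice each body would hold the real documents.
prompt = build_report_prompt(
    goal="Propose priorities for the next two quarters.",
    context={
        "Planning history": "Notes from past planning meetings go here.",
        "Company goals": "Current goals go here.",
        "Voice memo transcripts": "Transcribed memos go here.",
    },
)
print(prompt)
```

The point of the design is to send everything in a single request and wait for one considered answer, rather than feeding the model context piece by piece over a chat.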

The reasoning race heats up

Reasoning models have become the main battleground in AI. Unlike standard models that start answering immediately, reasoning models first work through a problem step by step before committing to a response. This makes them more reliable for math, science, and coding tasks where accuracy matters more than speed.

Google, Anthropic, and China's DeepSeek all have competing reasoning models. DeepSeek's models cost as little as $0.07 per million input tokens during off-peak hours, making OpenAI's pricing moves look almost conservative.

The competition has pushed everyone to lower prices. Anthropic's Claude Opus 4 costs $15/$75 per million tokens. Google's Gemini 2.5 Pro ranges from $1.25 to $2.50 for input, depending on prompt size.
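To see what these list prices mean for a single request, here is a small Python sketch. The per-million-token prices are the ones quoted in this article; the workload (200,000 input tokens and 20,000 output tokens) is a hypothetical example, and Gemini's tiered pricing is left out to keep the sketch simple.

```python
from typing import Dict, Tuple

# (input $, output $) per million tokens, as quoted in this article.
PRICES: Dict[str, Tuple[float, float]] = {
    "o3": (2.00, 8.00),
    "o3-pro": (20.00, 80.00),
    "Claude Opus 4": (15.00, 75.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost of one request at the listed prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical workload: a long context and a medium-length report.
for model in PRICES:
    cost = request_cost(model, input_tokens=200_000, output_tokens=20_000)
    print(f"{model:14s} ${cost:.2f}")
```

Reasoning models may also bill their hidden "thinking" tokens as output, so real costs for o3 and o3-pro can be higher than this estimate suggests.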

Current limitations slow adoption

O3-pro launches with several restrictions. Temporary chats are disabled due to a "technical issue." The model can't generate images or work with Canvas, OpenAI's collaborative workspace feature.

These limitations, combined with slow response times, mean o3-pro targets a specific use case: complex analysis where accuracy matters more than convenience. Think research projects, strategic planning, or debugging complex code.

The model replaces o1-pro for ChatGPT Pro and Team users starting Tuesday. Enterprise and education customers get access next week.

Market implications

OpenAI's pricing strategy suggests two things. First, the company wants to drive adoption of reasoning models before competitors can match its capabilities. Second, it is betting users will pay premium prices for premium performance when the task demands it.

The 80% price cut on o3 makes advanced reasoning accessible to smaller teams and individual developers. A million input tokens plus a million output tokens now costs $10 in total, versus $50 before. A million tokens covers about 750,000 words, somewhat more than the length of "War and Peace."
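The arithmetic behind those totals can be checked with a few lines of Python. The sketch assumes the totals refer to one million input tokens plus one million output tokens, and it uses the article's rule of thumb of 750,000 words per million tokens.

```python
from typing import Tuple

OLD_O3 = (10.00, 40.00)  # (input $, output $) per million tokens before the cut
NEW_O3 = (2.00, 8.00)    # after the cut
WORDS_PER_TOKEN = 0.75   # article's estimate: 750,000 words per million tokens


def combined_cost(
    prices: Tuple[float, float], input_tokens: int, output_tokens: int
) -> float:
    """Dollar cost of a workload at the given per-million-token prices."""
    in_price, out_price = prices
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


old_total = combined_cost(OLD_O3, 1_000_000, 1_000_000)
new_total = combined_cost(NEW_O3, 1_000_000, 1_000_000)
cut = 1 - new_total / old_total
words = round(1_000_000 * WORDS_PER_TOKEN)

print(f"before: ${old_total:.2f}, after: ${new_total:.2f}, cut: {cut:.0%}")
print(f"one million tokens is about {words:,} words")
```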

Independent testing by Artificial Analysis found o3 now costs $390 to complete their full benchmark suite, compared to $971 for Gemini 2.5 Pro and $342 for Claude Sonnet 4.

The integration challenge

Fast AI models have become so capable that the real challenge isn't intelligence—it's integration. How well do they work with existing tools, understand their environment, and collaborate with humans?

O3-pro seems designed for this challenge. It thinks longer, uses tools more carefully, and produces more detailed analysis. But it requires a different approach from users who expect instant responses.

This creates a split in the AI market. Fast models like GPT-4o handle daily tasks and conversation. Slow reasoning models tackle complex analysis and planning. Users will likely switch between them based on the task at hand.

Why this matters:

- The pricing war in AI just intensified, making advanced reasoning models affordable for smaller teams and individual developers for the first time.

- Speed and intelligence are diverging—the smartest AI models now require patience, fundamentally changing how we interact with artificial intelligence.

❓ Frequently Asked Questions

Q: How much does it actually cost to run o3-pro compared to other AI models?

A: O3-pro costs $20/$80 per million tokens, making it 10x the price of the newly discounted o3 ($2/$8). It is also more expensive than Claude Opus 4 ($15/$75), and its input price is 8-16x that of Google's Gemini 2.5 Pro ($1.25-$2.50 input, $10-$15 output). A million tokens processes about 750,000 words.

Q: Why does o3-pro take over 3 minutes to respond to simple questions?

A: O3-pro is a reasoning model that works through problems step by step before answering instead of responding immediately. One unconfirmed theory is that it runs multiple instances of the base model and picks the best answer. This "thinking time" is what makes it more reliable for complex tasks than faster models.

Q: What can't o3-pro do that other OpenAI models can?

A: O3-pro currently can't generate images or work with Canvas (OpenAI's collaborative workspace), and temporary chats are disabled because of a technical issue. It also takes much longer to respond than GPT-4o or standard o3, making it impractical for real-time conversations.

Q: Who gets access to o3-pro and when?

A: ChatGPT Pro and Team users get o3-pro immediately, replacing the older o1-pro model. Enterprise and Education customers get access the week of June 17, 2025. The model is also available through OpenAI's developer API starting Tuesday.

Q: How much context should I give o3-pro to see its best performance?

A: Early users report o3-pro shines with extensive context—complete project histories, detailed goals, and comprehensive background information. Simple questions don't showcase its capabilities. Think of it as a report generator that needs rich context to produce valuable insights rather than a chat companion.