Nate Gross runs health for OpenAI. Wednesday, a reporter asked him a basic question: Is ChatGPT Health compliant with HIPAA?

"In the case of consumer products, HIPAA doesn't apply in this setting," Gross said. "It applies toward clinical or professional healthcare settings."

That's it. That's the whole trick.

OpenAI wants your medical records, your lab results, your prescription history, your Apple Watch data. And they've figured out how to collect all of it without any of the privacy obligations that bind your doctor, your hospital, or your insurance company. The law has a gap. OpenAI built a product shaped exactly like that gap.

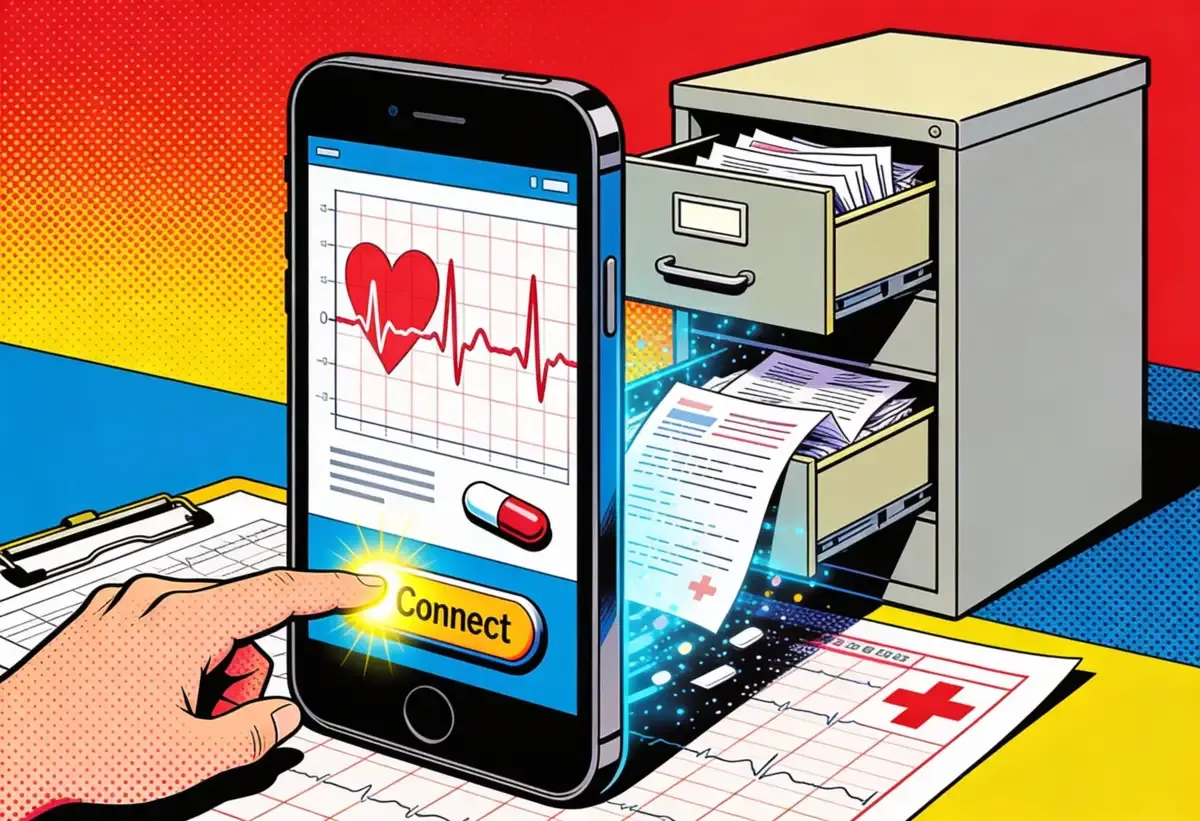

ChatGPT Health went live Wednesday. A new tab inside the chatbot. You can connect your electronic health records through b.well, a company plugged into 2.2 million providers. Sync your Apple Health. Hand over your MyFitnessPal food logs. OpenAI talks about "purpose-built encryption" and promises your health chats won't train their models.

None of that matters if HIPAA doesn't apply.

Key Takeaways

• ChatGPT Health launched Wednesday, letting users connect medical records and wellness apps to OpenAI's chatbot

• HIPAA doesn't apply because OpenAI classifies this as a "consumer product," not a healthcare service

• User data remains available via subpoena despite privacy promises; "deleted" chat logs surfaced in litigation

• Product excluded from EU, UK, and Switzerland, where data protection laws have stricter requirements

The consumer product exception

HIPAA dates to 1996. Bill Clinton signed it. Your medical records probably lived in a beige filing cabinet somewhere, and the internet was still something universities used. Nobody in that room anticipated a future where people would hand their lab results to a chatbot voluntarily.

The law targets "covered entities." Your doctor's office. The hospital. Blue Cross. Their contractors and billing companies. But if a tech company builds a slick interface and you decide to type in your diagnoses yourself? That's not covered. You gave it away.

OpenAI exploits this perfectly. You click connect. You approve each app. You scroll past the consent language. By the time your records land on OpenAI's servers, the company has no more obligation under HIPAA than if you'd told a stranger on a bus about your cholesterol.

Think about what they're getting. Diagnoses. Medications. Fertility treatments. Mental health history. Genetic test results. They get it all, and they don't have to hire a single compliance officer.

You get a chatbot that can summarize your bloodwork.

The math here isn't subtle.

The FDA steps aside

One day before ChatGPT Health launched, FDA Commissioner Marty Makary announced the agency would pull back on regulating wellness software and wearables. Makary likes ChatGPT. He's said so publicly. His concerns about safety have been, let's say, muted.

The timing wasn't an accident.

FDA regulates medical devices. Software that diagnoses conditions counts as a medical device. But ChatGPT Health insists it doesn't diagnose anything. The lawyers made sure of that. Every press release, every blog post, every interview—somebody worked the phrase "not intended for diagnosis or treatment" into the copy.

But look at what the product actually does. It reads your lab results and tells you what they mean. It watches your cholesterol over time. It notices patterns in your sleep. One of OpenAI's own example prompts is literally "Can you summarize my latest bloodwork before my appointment?"

If that's not diagnosis, it's doing a convincing impression.

OpenAI knows 230 million people already use ChatGPT for health questions every week. Forty million ask it about medical or insurance issues daily. The company isn't creating new behavior. It's building infrastructure around behavior that already exists, then pointing at the fine print when anyone asks who's responsible.

The liability airlock

OpenAI's legal setup works like a submarine hatch. Liability stays on your side. Data flows through to theirs.

Somebody at OpenAI legal clearly wrote a memo. The phrase "not intended for diagnosis or treatment" shows up constantly. So does "support, not replace, care from clinicians." They're not saying it because they believe it. They're saying it because a lawyer told them to.

Then the product invites you to upload your medical records for "analysis." Connect Apple Health so ChatGPT can study your "movement, sleep, and activity patterns." You can read the disclaimer or you can look at the feature list. Pick one. They don't match.

Fidji Simo runs applications for OpenAI. She laid out the pitch Wednesday, and it wasn't subtle. Doctors are busy. They don't have time to explain things. They can't study your whole history. AI doesn't have those problems, she said. It can spend all the time in the world on you.

She's selling ChatGPT as the doctor you wish you had. The fine print says it's not a doctor at all. Those two claims can't both be true.

What happens to your data

OpenAI has a history with "deleted" information that should worry anyone thinking about ChatGPT Health.

News organizations fighting OpenAI in court got access to millions of chat logs. Including conversations from temporary chats. The ones users were told would disappear after 30 days. They didn't disappear. They ended up in discovery.

The new health product promises better protections. Separate memory. Isolated storage. Fancy encryption. But Gross acknowledged Wednesday that your data remains available "where required through valid legal processes or in an emergency situation."

Think about what that means if you're uploading anything about reproductive care. Or gender-affirming treatment. Or anything that's become contested in state legislatures. Sam Altman recognized this problem last August. He called for legal privilege to protect health information shared with AI. That privilege doesn't exist. He shipped the product anyway.

Europe isn't getting ChatGPT Health. Not in the EU, not in Switzerland, not in the UK. OpenAI won't say why. But those jurisdictions have actual teeth in their data protection laws. Draw your own conclusions.

The physician collaboration

OpenAI makes a big deal about working with doctors. 260 physicians. 60 countries. Dozens of specialties. 600,000 pieces of feedback over two years.

Sounds rigorous. Sounds careful.

The Guardian ran an investigation last week. The findings weren't reassuring. AI health advice keeps getting things wrong—liver function tests, cancer screenings, diets for people with pancreatic cancer. Last August, a case report made the rounds in medical journals: a man ended up hospitalized for weeks after ChatGPT told him to swap table salt for sodium bromide. He developed bromism. It's a 19th-century poisoning condition. Doctors mostly see it in textbooks.

The 260 physicians gave their feedback. The dangerous outputs kept happening.

Mental health is the thing OpenAI really doesn't want to discuss. The blog post announcing ChatGPT Health doesn't mention it once. But people already use ChatGPT as a therapist. Some of them, including teenagers, have died by suicide after long conversations with the bot. When reporters pressed Simo on Wednesday, she said the health product "can handle any part of your health including mental health."

No details on what that means. No specifics on safeguards. Just assurance that they've "tuned the model."

The new patient intake

Lawyers are already dealing with this. A family attorney in New Jersey told Business Insider about the new normal. Clients show up with printouts. Twelve, fifteen pages of ChatGPT transcripts. Yellow highlighter on the parts where the bot confirmed their legal theory. She has to work through the whole stack, explaining why New Jersey precedent doesn't match generic AI output, before she can start actual representation.

"You have to rebuild or build the attorney-client relationship in a way that didn't used to exist," she said.

Doctors will get the same thing. Patients walking in having already decided what their labs mean. Armed with questions the bot told them to ask. Expecting treatments the bot suggested. The appointment is still fifteen minutes. Nobody added time for the new job: talking patients out of whatever ChatGPT convinced them of.

This burden falls on a system that was already breaking.

What this is

Forget the privacy language. Forget the physician partnerships. Forget the disclaimers.

ChatGPT Health is a data collection mechanism dressed up as a wellness tool.

Americans learned to protect their financial information. Social Security numbers. Bank passwords. Credit cards. We understand those need guarding. Health data never got the same cultural treatment, even though it cuts closer. Your medical records say things about your body, your mind, your reproductive life, your DNA. Leaked or subpoenaed, that information can wreck your employment, your insurance, your relationships, your legal standing.

OpenAI built something that makes handing it all over feel like self-care. Connect your records. Sync your apps. Get insights. Fair trade, right?

HIPAA exists because Congress understood that people would never protect health information properly on their own. The incentives don't work. So they created legal obligations for the providers, insurers, and contractors that handle medical data. OpenAI found the edge of those obligations and set up shop one inch outside.

They promise encryption. They promise isolation. They promise not to train on your health chats. They also promised that temporary conversations would be deleted. Millions of those logs ended up in litigation discovery.

❓ Frequently Asked Questions

Q: What is b.well and how does the medical records connection work?

A: b.well is a health data company connected to about 2.2 million U.S. healthcare providers. When you link ChatGPT Health to your medical records, b.well pulls data from your doctors' systems and passes it to OpenAI. This includes lab results, visit summaries, prescriptions, and clinical notes. The connection is U.S. only.

Q: Can I delete my health data from ChatGPT after uploading it?

A: OpenAI says you can disconnect apps and delete health memories anytime through Settings. But the company's track record raises questions. In copyright litigation, news organizations obtained millions of chat logs from "temporary" chats that were supposed to be deleted after 30 days. Whether health data actually disappears when you hit delete remains unclear.

Q: Who can access my ChatGPT Health data besides OpenAI?

A: OpenAI confirmed that health data remains available "where required through valid legal processes or in an emergency situation." That means prosecutors, civil litigants, or government agencies with subpoenas can potentially access your records. This is especially relevant for reproductive health or gender-affirming care data in states with restrictive laws.

Q: What's the difference between HIPAA protection and what OpenAI offers?

A: HIPAA requires healthcare providers to meet federal security standards, limits who can see your data, and lets you file a complaint with federal regulators, who can investigate and fine violators. It does not give individuals a right to sue under the statute itself. OpenAI offers voluntary privacy features with no HIPAA enforcement behind them. If OpenAI mishandles your health data, you have no HIPAA complaint to file. Your most direct recourse would be a breach-of-contract lawsuit based on their terms of service.

Q: Which ChatGPT subscription plans include ChatGPT Health?

A: OpenAI says ChatGPT Health will be available across Free, Go, Plus, and Pro plans. The initial rollout is via waitlist to a small test group. Full access is expected "in the coming weeks" on web and iOS. Medical record connections and some app integrations are U.S. only. Apple Health sync requires an iPhone.