💡 TL;DR - The 30-Second Version

🚨 OpenAI deployed fortress-level security after Chinese startup DeepSeek allegedly copied its AI models using distillation techniques in January 2025.

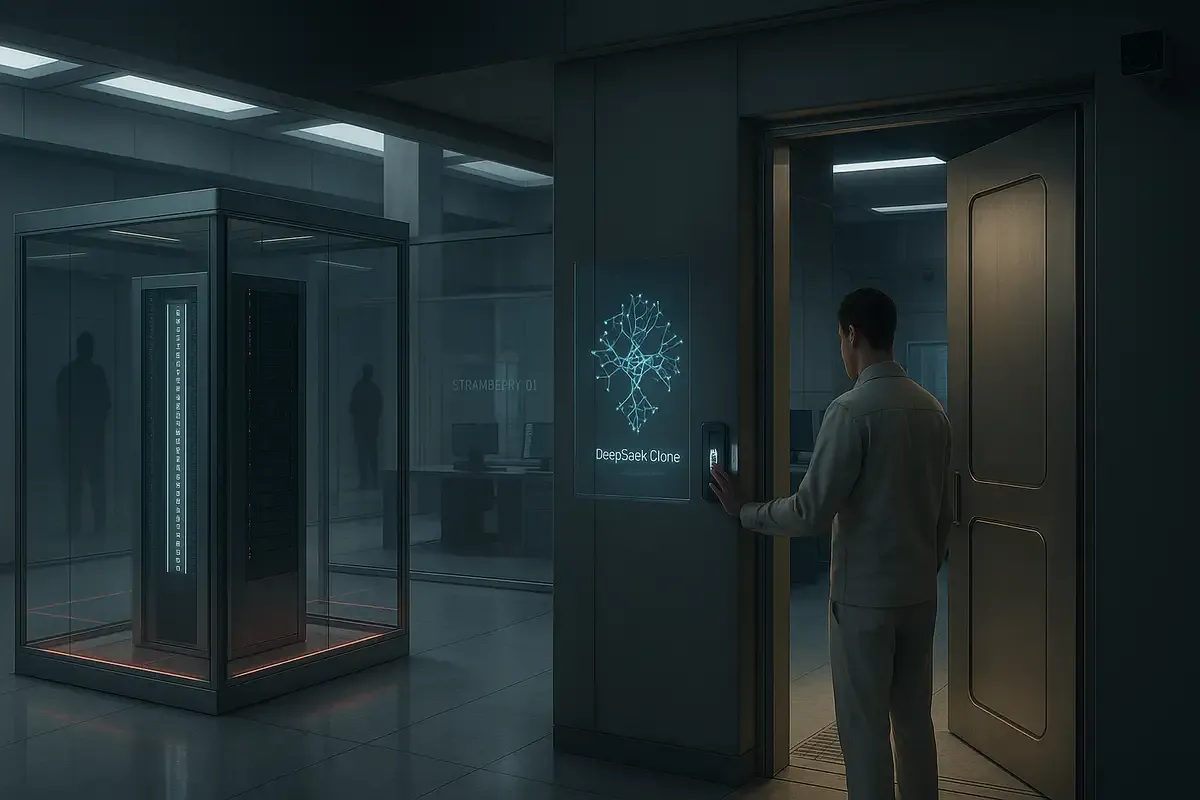

🔒 Employees now scan fingerprints to access certain rooms and must verify colleagues' clearance before discussing projects in shared spaces.

💻 The company moved sensitive technology into offline systems and adopted a deny-by-default internet policy requiring explicit approval for connections.

👥 OpenAI hired a security chief from Palantir and added retired NSA director Paul Nakasone to its board for cybersecurity oversight.

🏭 The measures include "information tenting," which limited access to the o1 model (codenamed Strawberry) to verified team members.

🌍 Other AI companies are watching closely as the industry shifts from open collaboration to a paranoid fortress mentality built around protecting intellectual property.

OpenAI got spooked. After Chinese startup DeepSeek launched a competing model in January 2025, the AI company went into full lockdown mode. OpenAI accused DeepSeek of copying its technology through "distillation" - training a new model on the outputs of an existing one.

The result? OpenAI turned its offices in San Francisco into something resembling a spy agency. Employees now scan fingerprints to enter certain rooms. Computers stay offline. Workers need special clearance to discuss projects in hallways.

It's a far cry from Silicon Valley's usual open-door culture. But OpenAI decided secrecy beats getting ripped off.

When Paranoia Becomes Policy

The company calls it "information tenting." Think of it as digital compartments where only certain people get access to specific projects. When OpenAI built its o1 model (code name "Strawberry"), team members had to check if their colleagues were "in the tent" before talking shop.

One employee told the Financial Times it got "very tight - you either had everything or nothing." Lunch conversations became minefield navigation. Want to discuss your work? Better make sure your tablemate has clearance first.

The system made collaboration harder but kept secrets safer. OpenAI gradually relaxed some rules as it figured out who needed what access.
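The "in the tent" check can be pictured as a simple membership test. What follows is only a minimal sketch, not OpenAI's actual system: the `Tent` class, its methods, and the employee names are all hypothetical, and the only assumption taken from the reporting is that both people in a conversation must be cleared for the project.

```python
from dataclasses import dataclass, field


@dataclass
class Tent:
    """Hypothetical project compartment with an explicit member list."""
    project: str
    members: set[str] = field(default_factory=set)

    def admit(self, person: str) -> None:
        # Clearance is granted person by person; nobody is in by default.
        self.members.add(person)

    def may_discuss(self, speaker: str, listener: str) -> bool:
        # Both parties must be inside the tent, or the topic is off limits.
        return speaker in self.members and listener in self.members


# Illustrative names only.
strawberry = Tent("Strawberry")
strawberry.admit("alice")
strawberry.admit("bob")

print(strawberry.may_discuss("alice", "bob"))    # both cleared
print(strawberry.may_discuss("alice", "carol"))  # carol is outside the tent
```

The all-or-nothing feel employees described falls out of a single membership set per project. Relaxing the rules, as OpenAI reportedly did, would mean adding finer-grained levels of access.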

Fort Knox Meets Silicon Valley

OpenAI moved its crown jewels - the algorithms that power its models - into isolated computer systems. These machines never touch the internet. Nothing connects to outside networks without explicit approval.
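A deny-by-default policy flips the usual firewall logic: instead of blocking known-bad destinations, it blocks everything and allows only what someone has signed off on. Here is a rough sketch of the idea, assuming a simple allowlist keyed by host and port. The `EgressPolicy` class, the hostnames, and the approver name are invented for illustration and say nothing about how OpenAI implements its policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Approval:
    """Hypothetical record of who approved an outbound destination."""
    host: str
    port: int
    approved_by: str


class EgressPolicy:
    """Deny-by-default: a connection is allowed only if explicitly approved."""

    def __init__(self) -> None:
        self._approved: dict[tuple[str, int], Approval] = {}

    def approve(self, host: str, port: int, approved_by: str) -> None:
        key = (host.lower(), port)
        self._approved[key] = Approval(host.lower(), port, approved_by)

    def is_allowed(self, host: str, port: int) -> bool:
        # No matching approval means the connection is refused.
        return (host.lower(), port) in self._approved


policy = EgressPolicy()
policy.approve("updates.example.com", 443, approved_by="security-team")

print(policy.is_allowed("updates.example.com", 443))  # explicitly approved
print(policy.is_allowed("updates.example.com", 80))   # same host, unapproved port
print(policy.is_allowed("unknown.example.net", 443))  # never approved
```

The point of the design is that a forgotten rule fails closed. An unlisted destination is blocked rather than quietly allowed.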

Biometric scanners guard office doors. Data centers got military-grade security upgrades. The company hired Dane Stuckey from Palantir as its new security chief. Palantir specializes in government and military contracts, so Stuckey knows how to keep secrets.

OpenAI also brought retired Army General Paul Nakasone onto its board. Nakasone used to run both the NSA and US Cyber Command. When you're hiring former spymasters, you're taking security seriously.

More Than Foreign Threats

This isn't just about Chinese competition. OpenAI faces leaks from inside too. CEO Sam Altman's internal comments regularly leak to tech blogs. The AI industry's talent wars mean constant poaching attempts. Former employees might take sensitive knowledge to competitors.

The security measures target both external and internal threats. Trust nobody, verify everything.

The Distillation Problem

DeepSeek allegedly used "distillation" to copy OpenAI's models. The technique trains a new "student" model on the outputs of an existing "teacher" model, producing a similar system without the original training data or the same massive computing power.

It's like industrial espionage for the AI age. Companies can skip years of research by studying competitors' finished products. No wonder OpenAI freaked out.

The method works because AI models reveal their decision-making patterns through their outputs. Collect enough prompt-and-response pairs, train a new model on them, and the new model learns to imitate the original's behavior.
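To make the idea concrete, here is a toy sketch of distillation in plain Python. It is nothing like the scale of a real language model, and it is not a description of what DeepSeek did. The "teacher" is a made-up one-variable function with hidden parameters, and the "student" is a tiny logistic model that only ever sees the teacher's outputs. Every name and number here is an assumption chosen for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def teacher(x: float) -> float:
    """Stand-in for a proprietary model: callers see outputs, never weights."""
    return sigmoid(2.0 * x - 1.0)


def distill(queries, epochs=2000, lr=0.5):
    # Step 1: query the teacher and record its soft outputs.
    targets = [teacher(x) for x in queries]
    # Step 2: fit a student to those outputs with gradient descent
    # on cross-entropy against the teacher's probabilities.
    w, b = 0.0, 0.0
    n = len(queries)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, t in zip(queries, targets):
            err = sigmoid(w * x + b) - t
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


random.seed(0)
queries = [random.uniform(-3.0, 3.0) for _ in range(200)]
w, b = distill(queries)

# Compare student and teacher on inputs the student was not trained on.
gap = max(abs(sigmoid(w * x + b) - teacher(x)) for x in (-2.0, 0.0, 1.5))
print(f"student w={w:.2f}, b={b:.2f}, max gap={gap:.4f}")
```

The student never touches the teacher's parameters or training data, yet ends up behaving almost identically on new inputs. That is the property that makes distillation hard to defend against once a model's outputs are publicly available.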

Industry Arms Race

OpenAI's paranoia reflects broader trends. US authorities warned last year that foreign adversaries, especially China, were ramping up efforts to steal AI technology. The stakes are huge - whoever controls advanced AI gains massive economic and military advantages.

Washington has imposed export controls to limit China's access to cutting-edge computer chips. But stealing software is easier than building hardware. Code can cross borders in email attachments.

Other AI companies are watching OpenAI's response closely. If the industry leader feels compelled to implement spy-level security, others will likely follow. The result could be a more secretive, paranoid tech sector.

The Innovation Trade-Off

All this security comes with costs. AI development works best when researchers can freely share ideas and collaborate across teams. Too much secrecy could slow progress.

OpenAI must balance protection against productivity. Lock things down too tight and innovation suffers. Stay too open and competitors steal your work.

The company insists these changes weren't prompted by any specific incident. It claims to want industry leadership in security practices. But the timing after DeepSeek's release suggests otherwise.

New Reality

OpenAI's transformation from research lab to fortress reflects how much the AI landscape has changed. Early companies shared research openly. That collaborative era appears to be ending as commercial stakes rise.

The Financial Times reports that OpenAI has been "aggressively" expanding its security teams and practices. The company now treats its technology like state secrets.

This shift could reshape the entire industry. If building AI requires spy-level security, fewer companies will be able to compete. The technology could become concentrated among a few heavily fortified players.

Why this matters:

• AI development is shifting from open collaboration to armed camps, which could slow innovation and concentrate power among companies that can afford military-grade security.

• The distillation threat shows how easily AI advantages can be copied, forcing companies to choose between sharing knowledge and protecting their competitive edge.

❓ Frequently Asked Questions

Q: What exactly is "distillation" and how does it work in AI?

A: Distillation involves feeding large numbers of prompts to an existing AI model and training a new model on its responses. Companies can then build similar models without the original training data or as much computing power. It's like copying homework by studying the answers rather than doing the research yourself.

Q: How much is OpenAI spending on these new security measures?

A: OpenAI hasn't disclosed specific costs, but the measures include hiring specialized personnel from companies like Palantir, installing biometric systems, building isolated networks, and upgrading data centers. For a company of OpenAI's size (it is valued at about $300 billion), a security overhaul on this scale likely costs millions of dollars a year.

Q: Are other AI companies doing similar things?

A: Yes. The Financial Times reports that OpenAI joined "a number of Silicon Valley companies" that stepped up staff screening due to Chinese espionage threats. Google, Microsoft, and Meta have all increased security spending, though none have publicly detailed measures as extensive as OpenAI's tenting system.

Q: What was OpenAI's security like before this?

A: OpenAI operated more like a typical tech startup. Employees could freely discuss projects in common areas without clearance checks. The company began tightening security last summer but accelerated dramatically after January 2025, when DeepSeek released its competing model.

Q: How do these changes affect OpenAI employees daily?

A: Employees must verify colleagues' clearance levels before discussing projects in shared spaces. Workers scan fingerprints to access certain rooms. One employee described the initial system as "very tight - you either had everything or nothing." OpenAI has since adjusted to make collaboration easier.

Q: Is DeepSeek's model actually as good as OpenAI's?

A: DeepSeek's own published results claimed performance comparable to OpenAI's o1 on several reasoning benchmarks, though OpenAI hasn't publicly benchmarked the competing model. The concern isn't necessarily that DeepSeek's model is better, but that distillation techniques could allow rapid copying of future OpenAI advances without years of research investment.

Q: How common is AI model theft in the industry?

A: US authorities warned tech companies in 2024 that foreign adversaries, particularly China, had increased efforts to acquire sensitive AI data. Specific incidents are rarely disclosed publicly. OpenAI's response suggests model theft is becoming a significant industry concern rather than an isolated problem.

Q: Will these security measures slow AI development across the industry?

A: Potentially. AI research traditionally benefits from open collaboration and knowledge sharing. If other companies follow OpenAI's lead with extensive security measures, it could fragment the industry and slow innovation. However, protecting intellectual property may be necessary for continued R&D investment.