💡 TL;DR - The 30 Seconds Version

📊 OpenAI announced five new US data-center sites, pushing planned capacity to nearly 7 gigawatts (roughly seven large nuclear reactors) with $400+ billion committed over three years.

🏭 Sites span Texas, New Mexico, Ohio, and an unnamed Midwest location, selected from 300+ proposals across 30 states, with Oracle and SoftBank as financing partners.

👷 Claims of "25,000+ onsite jobs" face a reality check: the Abilene campus is expected to have ~1,700 permanent positions once construction winds down and the automated data centers are running.

⚡ Environmental impact unaddressed despite nuclear-scale power requirements and water needs for cooling—no disclosed plans for energy sourcing or grid connections.

🇺🇸 Infrastructure sprint advances explicit US-China AI competition, with officials framing buildout as securing "American leadership" in global AI race.

🤖 The bet hinges on the theory that scale drives capability, while rivals like DeepSeek have shown that efficient algorithms can achieve strong results with far less compute.

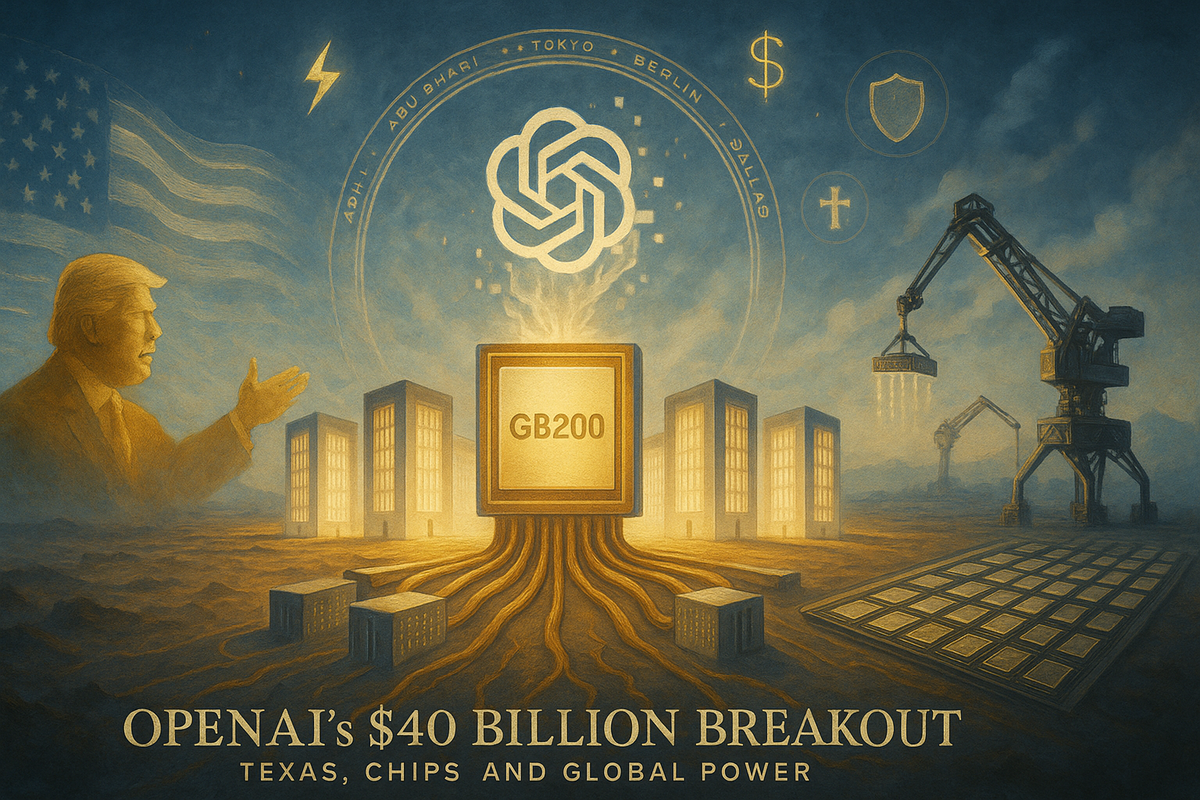

Five new U.S. sites push Stargate toward 7 GW as infrastructure outpaces job promises and environmental plans

OpenAI said it will add five U.S. data-center sites under its Stargate umbrella, lifting planned capacity to nearly 7 gigawatts—about seven large nuclear reactors—on the way to a 10-GW target. The company detailed locations and partners in its five-site Stargate announcement.

Texas anchors the sprint. Abilene, already running early OpenAI workloads on Oracle Cloud Infrastructure, now serves as the template: eight halls across roughly 1,100 acres, with fiber strung above and below ground and Nvidia GB200 racks that began arriving in June. Executives say the first buildings are live, with the campus designed to approach a 900-megawatt draw at full tilt. The scale is the story.

What’s new and who’s paying

Three of the new sites sit inside the OpenAI–Oracle pact—Shackelford County (TX), Doña Ana County (NM), and an as-yet-unnamed Midwestern location. Two more—Lordstown (OH) and Milam County (TX)—are tied to SoftBank and SB Energy. OpenAI says the five were selected from more than 300 proposals across 30 states and, together with Abilene and projects with CoreWeave, push committed investment past $400 billion over three years. More sites are under review. Expect more concrete.

Financing splits by partner. Oracle is standing up and operating three sites while selling capacity back to OpenAI, and has floated “new corporate structures” to attract outside capital. SoftBank is leaning on bank debt and its energy arm’s “powered-pad” approach to shorten the path from dirt to racks. Different balance sheets, same outcome: more GPUs online, faster.

The hardware math

A separate letter of intent with Nvidia would layer on at least 10 GW of systems (millions of GPUs), with Nvidia investing up to $100 billion in total, progressively as each gigawatt is deployed. The first Vera Rubin–based gigawatt is slated to produce tokens in the second half of 2026. Nvidia’s own rule of thumb is stark: one gigawatt of AI capacity can cost $50–$60 billion to build, most of it silicon and systems. At that rate, ten gigawatts costs $500–$600 billion, past the half-trillion mark. Big numbers. Bigger execution risk.
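
As a quick check on that arithmetic, here is a minimal Python sketch. It assumes the quoted $50–60 billion per gigawatt applies uniformly and, purely for illustration, that Nvidia's up-to-$100 billion is spread evenly across 10 GW; the announced terms specify neither.

```python
# Back-of-envelope build costs using the quoted rule of thumb.
COST_PER_GW_LOW = 50   # billions of USD per gigawatt (low end)
COST_PER_GW_HIGH = 60  # billions of USD per gigawatt (high end)


def build_cost_range(gigawatts: float) -> tuple[float, float]:
    """Return the (low, high) build cost in billions of USD."""
    return gigawatts * COST_PER_GW_LOW, gigawatts * COST_PER_GW_HIGH


def even_investment_per_gw(total_billions: float, gigawatts: float) -> float:
    """Hypothetical: assumes the investment is split evenly per gigawatt."""
    return total_billions / gigawatts


if __name__ == "__main__":
    for gw in (7, 10):
        low, high = build_cost_range(gw)
        print(f"{gw} GW: ${low:,.0f}-{high:,.0f} billion")
    print(f"Nvidia per GW (even split): ${even_investment_per_gw(100, 10):.0f} billion")
```

At these rates, 7 GW lands at $350–420 billion, the same neighborhood as the $400 billion-plus commitment, and 10 GW at $500–600 billion. An even split would put Nvidia's stake at about $10 billion per gigawatt, a minority of each gigawatt's build cost.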

Scale vs. efficiency

OpenAI’s argument is blunt: capability requires scale. With an estimated 700 million weekly users and a push into agentic and reasoning tasks, the company says demand is compute-constrained. “This is the fuel that we need to drive improvement,” Sam Altman told CNBC. Greg Brockman contrasted today’s plan with OpenAI’s first DGX delivery in 2016—orders of magnitude more compute now within reach.

Not everyone buys the premise. The “DeepSeek moment” earlier this year—credible results trained with far less compute—fortified a counter-thesis: algorithmic efficiency, model distillation, and on-device inference can deliver gains without terawatt-hour appetites. Veteran researcher Jonathan Koomey has cautioned that success depends on innovation and efficiency, not just concrete and chips. The industry may be confusing what’s technically possible with what’s economically rational. It’s a fair warning.

Washington’s chessboard

The politics are explicit. Stargate launched at the White House with a goal of “securing American leadership in AI.” Texas officials have embraced the buildout; national lawmakers are using it to argue for a light-touch regulatory lane in a perceived race with China. Nvidia’s Jensen Huang was equally direct about the strategic objective: build the world on an “American tech stack”—chips, infrastructure, models, applications. The message is industrial policy in plain sight.

Beijing’s approach diverges: state-directed energy and grid investment plus a visible emphasis on training frugality. That sets up two competing theories of advantage—compute abundance versus efficiency leadership. Nvidia profits from either path. The U.S. strategy, however, now hinges on delivering U.S.-sited megaprojects at speed, with all the permitting, grid, and supply-chain friction that implies. Timelines matter.

Jobs: boom now, lean later

OpenAI touts “more than 25,000 onsite jobs” across the new sites, plus knock-on effects. In Abilene, local officials cite crews working two 10-hour shifts, and temperatures topped 100°F during this week’s media tour. It’s a visible surge for host communities.

Steady-state operations look different. Modern data centers are automated and security-intensive; headcount is small. Oracle has indicated roughly 1,700 permanent roles on the Abilene campus once construction winds down. Expect similar ratios elsewhere: big, temporary peaks while building; tight teams once the lights are on. That’s the pattern.

The environmental ledger

Seven gigawatts is an energy and water story as much as a compute story. Tuesday’s materials didn’t include site-by-site power sourcing or water plans. The industry trendline points to a mix of renewables, firmed by gas and increasingly nuclear; Microsoft has already inked a long-term nuclear arrangement, and other hyperscalers are pairing campuses with firm generation. Those pathways take years and require regulatory stamina. They also add capex.

Baseline demand is moving the wrong way for planners. The International Energy Agency estimates that data centers used roughly 1.5% of global electricity in 2024, about 415 TWh, and that their consumption could more than double to about 945 TWh by 2030 if AI growth holds. Cooling remains a water constraint even with closed-loop systems; in arid counties, make-up water requirements become a gating factor. Host cities face three hard variables: interconnect queues, water rights, and who funds substations and new transmission. None are trivial.
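
To put those figures side by side, a minimal Python sketch converting capacity into annual energy. It treats 7 GW as a continuous draw for a full year, an upper bound since real utilization will be lower, and uses the IEA's 2024 estimate of about 415 TWh and its 2030 projection of about 945 TWh.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours

IEA_2024_TWH = 415  # IEA estimate of global data-center electricity use, 2024
IEA_2030_TWH = 945  # IEA projection for 2030


def annual_twh(gigawatts: float, utilization: float = 1.0) -> float:
    """Annual energy in TWh for capacity running at an average utilization."""
    gwh = gigawatts * HOURS_PER_YEAR * utilization  # GW x hours = GWh
    return gwh / 1000  # 1,000 GWh = 1 TWh


if __name__ == "__main__":
    stargate = annual_twh(7)  # upper bound: full draw all year
    print(f"7 GW at full draw: {stargate:.1f} TWh per year")
    print(f"Share of 2024 global data-center use: {stargate / IEA_2024_TWH:.0%}")
    print(f"IEA growth factor, 2024 to 2030: {IEA_2030_TWH / IEA_2024_TWH:.2f}x")
```

Running 7 GW flat out would use about 61 TWh a year, roughly 15% of what all data centers worldwide consumed in 2024.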

Financing the concrete

Follow the money and the logic emerges. Reuters reports OpenAI and partners plan to lease chips via debt rather than buy outright, spreading risk across vendors and financiers. Oracle, by financing and operating three sites, becomes landlord for compute; SoftBank leans on SB Energy’s powered-infrastructure model to hit near-term timelines. The Nvidia structure is circular by design: invest in the customer who then buys your systems. Elegant if utilization holds. Fraught if it doesn’t.

The open questions

Two uncertainties loom. First, grid reality: interconnect queues, transformer lead times, and new transmission could slow deliveries even as contracts get signed. Announced megawatts aren’t delivered megawatts. Second, the efficiency curve: if frontier models deliver more work per joule and more of that work shifts to the edge, the returns on 10-GW campuses compress. That isn’t an argument against building. It is a reminder that timing and utilization are everything.

Why this matters

- AI now competes with heavy industry for power, water, land, and grid headroom—without commensurate public detail on sourcing, cooling, or who pays for upgrades.

- The U.S. is betting on compute abundance while rivals chase efficiency; which thesis wins will determine whether these hundreds of billions become durable advantage or stranded capacity.

❓ Frequently Asked Questions

Q: How much electricity does 7 gigawatts actually use compared to everyday things?

A: Running flat out, 7 gigawatts would supply roughly as much electricity as 5 to 6 million average American homes use, depending on the assumed household consumption. That is roughly the output of seven large nuclear reactors at full power.
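
The homes figure is sensitive to the assumed household consumption. A minimal Python sketch, assuming average US household use of 10,500–11,500 kWh a year (an illustrative range, not a figure from the announcement):

```python
HOURS_PER_YEAR = 8760


def homes_equivalent(gigawatts: float, kwh_per_home_per_year: float) -> float:
    """Homes whose combined annual use matches a year of full-capacity output."""
    annual_kwh = gigawatts * 1_000_000 * HOURS_PER_YEAR  # 1 GW = 1,000,000 kW
    return annual_kwh / kwh_per_home_per_year


if __name__ == "__main__":
    # Illustrative household consumption assumptions, in kWh per year
    for usage in (10_500, 11_500):
        millions = homes_equivalent(7, usage) / 1e6
        print(f"{usage:,} kWh per home: about {millions:.1f} million homes")
```

At 11,500 kWh per home the result is about 5.3 million homes; at 10,500 kWh it rises to about 5.8 million.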

Q: Why are they leasing chips through debt instead of buying them outright?

A: Each gigawatt costs $50-60 billion to build, most of it for Nvidia hardware. Leasing through debt spreads those upfront costs across vendors and financiers and reduces OpenAI's direct exposure if AI demand doesn't materialize as projected, shifting much of the risk of stranded assets onto other parties.

Q: When will these data centers actually be operational?

A: Abilene is already running early workloads; Oracle began delivering Nvidia GB200 racks there in June 2025. The first Nvidia Vera Rubin systems aren't slated to produce tokens until the second half of 2026. New sites face 2-4 year construction timelines, plus potential delays from power grid connections and permitting.

Q: How does OpenAI's $400 billion compare to what other tech giants are spending?

A: Amazon, Google, Meta, and Microsoft together planned $325+ billion in capital spending for 2025 alone, much of it on AI data centers. OpenAI's $400 billion is spread over three years, roughly $133 billion a year, so it exceeds the big four's single-year total only when all three years are added up. Much also depends on partners' actual capital deployment.
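
Because the two figures cover different periods, annualizing helps. A minimal Python sketch, assuming for illustration that the $400 billion is spent evenly over three years and treating $325 billion as a single-year total:

```python
def annualized(total_billions: float, years: float) -> float:
    """Average yearly spend, assuming (hypothetically) an even pace."""
    return total_billions / years


OPENAI_COMMITMENT = 400  # billions of USD over three years
BIG_FOUR_2025 = 325      # billions of USD, treated as a single-year total

if __name__ == "__main__":
    per_year = annualized(OPENAI_COMMITMENT, 3)
    print(f"OpenAI and partners: about ${per_year:.0f} billion per year")
    print(f"Share of big four's annual figure: {per_year / BIG_FOUR_2025:.0%}")
```

That works out to roughly $133 billion a year, about 41% of the four companies' combined annual figure.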

Q: What happens if AI demand doesn't grow as fast as OpenAI expects?

A: The infrastructure becomes "stranded assets"—expensive facilities with limited buyers. At $50-60 billion per gigawatt, a demand shortfall could leave partners holding hundreds of billions in underutilized real estate and equipment, particularly given the specialized nature of AI chips.
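
How large the stranded share gets depends on utilization. A minimal Python sketch using the $50-60 billion-per-gigawatt rule of thumb, with hypothetical utilization levels and the simplifying assumption that idle capital scales linearly with unused capacity:

```python
COST_PER_GW = (50, 60)  # billions of USD per gigawatt, the quoted rule of thumb


def idle_capital(gigawatts: float, utilization: float) -> tuple[float, float]:
    """(low, high) capital in billions of USD tied to unused capacity.

    Simplification: idle capital scales linearly with the unused share.
    """
    unused_share = 1 - utilization
    low, high = COST_PER_GW
    return gigawatts * low * unused_share, gigawatts * high * unused_share


if __name__ == "__main__":
    for utilization in (0.9, 0.7, 0.5):  # hypothetical utilization levels
        low, high = idle_capital(10, utilization)
        print(f"{utilization:.0%} utilized: about ${low:.0f}-{high:.0f} billion idle")
```

At 10 GW, every 10 points of lost utilization idles roughly $50-60 billion of capital under these assumptions.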