
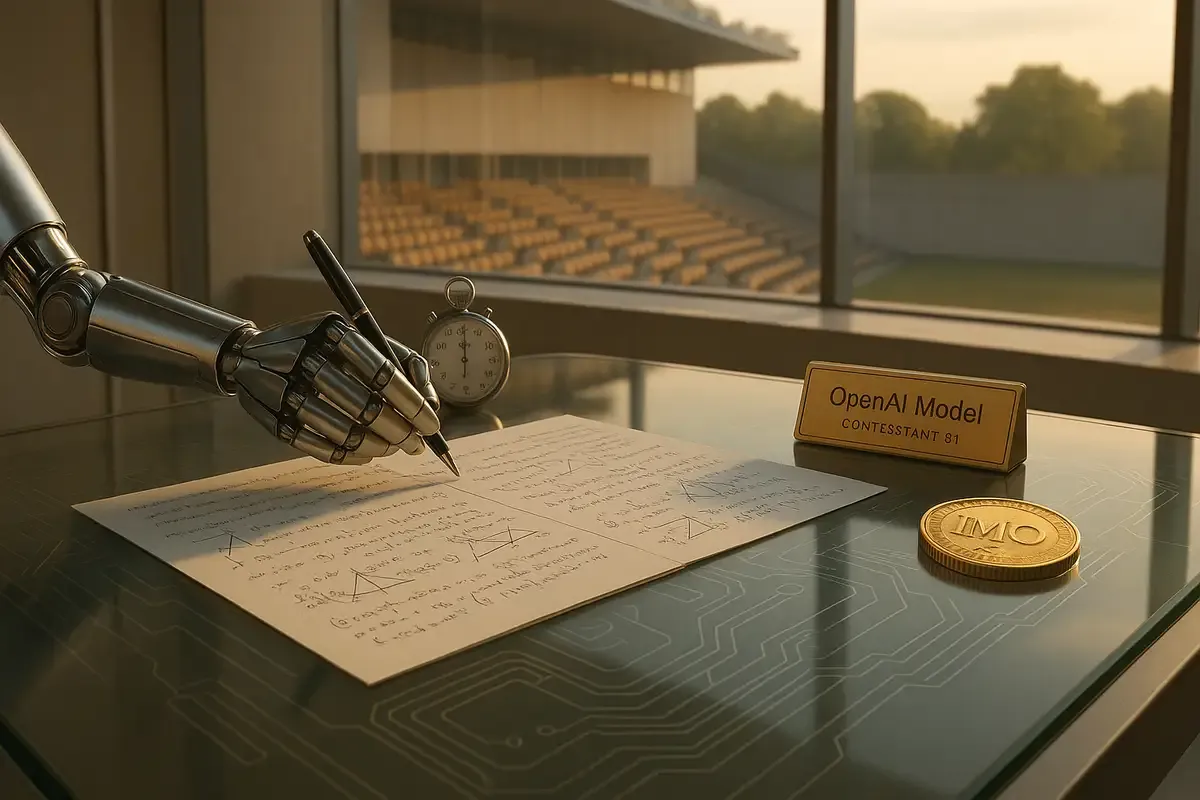

OpenAI's experimental AI just won gold at the International Math Olympiad, while today's public models can't even score bronze. The model solved problems that stump math prodigies, working on them for hours and writing proofs like a human contestant. But you can't use it yet.

💡 TL;DR - The 30-Second Version

🏅 OpenAI's experimental model scored 35/42 points at the 2025 International Math Olympiad, earning gold by solving 5 of 6 problems

📊 Current AI models tested on the same problems all failed—Gemini 2.5 Pro scored just 13 points, below the 19 needed for bronze

⏰ The model thinks for hours instead of seconds, writing multi-page mathematical proofs under standard competition rules

🔧 Unlike DeepMind's math-specific AlphaGeometry, this uses general-purpose reasoning that could work across many domains

🚫 OpenAI won't release this capability for "many months"—it remains a research model proving AI can match elite human reasoning

🔮 The gap between public and research AI is massive: today's public models fall short of bronze while research models write gold-medal proofs

Picture this: The world's smartest high school math prodigies gather in Australia. They've trained for years. They get two grueling 4.5-hour exams with six problems total. Most will fail to solve even half.

An AI just beat almost all of them.

OpenAI's experimental reasoning model scored 35 out of 42 points at the 2025 International Math Olympiad—enough for a gold medal. The kicker? Current AI models, including OpenAI's own publicly available ones, can't even score enough for bronze.

The Competition Nobody Expected AI to Win

The IMO isn't your average math test. Started in Romania in 1959, it's where future Fields Medal winners cut their teeth. Past champions include Grigori Perelman and Terence Tao—names that make mathematicians weak in the knees.

Just last month, Tao himself predicted AI wouldn't crack this nut. "There are competitions where the answer is a number rather than a long-form proof," he suggested on Lex Fridman's podcast, basically telling AI researchers to lower their sights.

OpenAI's model solved five of six problems. Not by plugging numbers into formulas, but by writing detailed, multi-page proofs. The kind that take human geniuses hours to craft and experts hours to verify.

Here's where it gets interesting. When current top models were tested on the same problems—Gemini 2.5 Pro, Grok-4, DeepSeek-R1, even OpenAI's o3—they all flopped spectacularly. The best managed 13 points. You need 19 for bronze.

Not Your Typical Math Bot

This isn't some calculator on steroids. Unlike DeepMind's AlphaGeometry, which was built specifically for math, OpenAI's model is a general-purpose reasoning system. Think of it as the difference between a chess computer and a human who happens to be good at chess.

"We reach this capability level not via narrow, task-specific methodology, but by breaking new ground in general-purpose reinforcement learning," explains Alexander Wei, the OpenAI researcher who led the project.

The model worked under the same rules as human contestants. No internet. No tools. Just raw thinking power applied to problems like determining which lines in a plane are "sunny" (don't ask: it involves not being parallel to either axis or to the line x + y = 0).

What makes this model different? Time. While GPT-4 thinks in seconds and newer research models think in minutes, this one thinks for hours. OpenAI's Noam Brown calls it a new level of "sustained creative thinking."

The Speed of Progress

In 2021, AI struggled with grade school math. By 2024, it had conquered high school problems. Now it's winning gold at the Math Olympiad.

"Still—this underscores how fast AI has advanced in recent years," Wei noted. He'd predicted 30% accuracy on basic math benchmarks by 2025. Now his team has an AI writing mathematical proofs that would make professors nod in approval.

Gary Marcus has spent years pointing out AI's flaws. This time? He called it "genuinely impressive." Of course, he still wants to know about training data, costs, and whether anyone outside math departments will care.

Sam Altman chimed in with typical understatement: "When we first started OpenAI, this was a dream but not one that felt very realistic to us."

What You Can't Have

You can't use it. Not for "many months," according to OpenAI. This is purely a research model, a proof of concept that general AI systems can tackle problems requiring deep, sustained reasoning.

The timing of the announcement raises eyebrows. It came right after public reports showed current AI models failing miserably at IMO problems. OpenAI researcher Jerry Tworek insists the model received "very little IMO-specific work"—just continued training of general models. But the results haven't been independently verified by the IMO itself.

There's also the question of practical value. Sure, it can prove theorems about sunny lines, but can it help with your taxes? One hint that the techniques reach beyond math: the model comes out of the same reinforcement learning system behind OpenAI's recent announcements about AI agents and programming contests.

The Bigger Picture

This isn't just about math. It's about AI systems that can sustain complex reasoning over hours, not seconds. That's the difference between a chatbot and something approaching human-level problem-solving.

The proofs are available on GitHub if you want to see an AI's "distinct style"—Wei's polite way of saying the model writes like someone who learned English from math textbooks. But style points aside, the substance is there: rigorous, creative mathematical thinking from a machine.

OpenAI hints that GPT-5 is coming "soon," though it's unrelated to this math achievement. The IMO model came from a small team that found a new way to scale thinking time and reasoning depth.

Why this matters:

• AI just crossed a threshold nobody expected this soon: gold-medal performance, alongside elite human competitors, on creative, complex reasoning tasks that can't be solved by pattern matching or memorization.

• The gap between public AI and research AI is massive: while public models fall short of an IMO bronze, research models are writing gold-medal proofs. That gap will close eventually.

Q: What exactly is the International Math Olympiad?

A: It's the world's most prestigious high school math competition, running since 1959. Over 100 countries send teams of up to 6 students. Contestants get two 4.5-hour exams with 3 problems each. Winners often become top mathematicians—past champions include Fields Medal winners like Terence Tao.

Q: How does the AI "think for hours" compared to regular ChatGPT?

A: ChatGPT generates responses in seconds. This experimental model processes problems for hours before answering, similar to how humans tackle complex math. OpenAI hasn't revealed the exact mechanism, but it involves new reinforcement learning techniques that scale computing time during problem-solving.

Q: What's a "sunny line" from the math problem mentioned?

A: A line is "sunny" if it's not parallel to the x-axis, the y-axis, or the line x+y=0. It's a made-up term for this specific IMO problem. Contestants must work out how many lines in a set covering a triangular grid of points can be sunny. It's the kind of abstract thinking that makes IMO problems uniquely challenging.
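A short Python sketch makes the definition concrete. It assumes the official conditions of IMO 2025 Problem 1 as we understand them: n distinct lines must together pass through every point (a, b) with positive integers a and b and a + b ≤ n + 1, and the question is which numbers of sunny lines are possible. Each line is stored as hypothetical integer coefficients (a, b, c) of a·x + b·y = c, and the `Line`, `grid_points`, and `check_configuration` names are illustrative, not taken from OpenAI's published proofs. The code only checks a given configuration; it does not solve the problem.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Line:
    """The line a*x + b*y = c with integer coefficients (a and b not both zero)."""
    a: int
    b: int
    c: int

    def key(self) -> tuple[int, int, int]:
        """Canonical form, so 2x - 2y = 0 and x - y = 0 count as the same line."""
        g = math.gcd(self.a, self.b, self.c)
        a, b, c = self.a // g, self.b // g, self.c // g
        if a < 0 or (a == 0 and b < 0):
            a, b, c = -a, -b, -c
        return (a, b, c)

    def contains(self, x: int, y: int) -> bool:
        return self.a * x + self.b * y == self.c

    def is_sunny(self) -> bool:
        # a == 0: parallel to the x-axis; b == 0: parallel to the y-axis;
        # a == b: parallel to x + y = 0. Sunny means none of these holds.
        return self.a != 0 and self.b != 0 and self.a != self.b


def grid_points(n: int) -> list[tuple[int, int]]:
    """All points (a, b) with positive integers a, b and a + b <= n + 1."""
    return [(x, y) for x in range(1, n + 1) for y in range(1, n + 2 - x)]


def check_configuration(n: int, lines: list[Line]) -> tuple[bool, int]:
    """Return (every grid point is covered, number of sunny lines)."""
    if len(lines) != n or len({line.key() for line in lines}) != n:
        raise ValueError(f"expected {n} distinct lines")
    covered = all(any(line.contains(x, y) for line in lines)
                  for x, y in grid_points(n))
    sunny = sum(line.is_sunny() for line in lines)
    return covered, sunny


if __name__ == "__main__":
    # x = 1, x = 2, x = 3: covers the six points for n = 3, none sunny.
    print(check_configuration(3, [Line(1, 0, 1), Line(1, 0, 2), Line(1, 0, 3)]))  # (True, 0)
    # y = x, 2x + y = 5, x + 2y = 5: also covers them, all three sunny.
    print(check_configuration(3, [Line(1, -1, 0), Line(2, 1, 5), Line(1, 2, 5)]))  # (True, 3)
```

Both n = 3 examples cover all six grid points, one with no sunny lines and one with three, so the covering condition alone does not pin down how many lines are sunny. That is what makes the question nontrivial.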

Q: Who graded the AI's proofs and how did it work?

A: Three former IMO medalists independently graded each proof and agreed unanimously on the scores: 35 out of 42 points total. The AI wrote natural-language proofs just like human contestants, not formal symbolic math. Each problem is worth 7 points, graded on the correctness and completeness of the mathematical argument.
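The scoring arithmetic behind these numbers fits in a few lines of Python. Six problems at 7 points each give the 42-point maximum. 19 is the bronze cutoff cited above, and 35 is treated here as the gold cutoff. The silver cutoff of 28 and the per-problem breakdown in the example (full marks on five problems, zero on the sixth) are assumptions for illustration, not figures from OpenAI or the IMO.

```python
from typing import Optional

POINTS_PER_PROBLEM = 7
NUM_PROBLEMS = 6  # two 4.5-hour exams, three problems each
MAX_SCORE = POINTS_PER_PROBLEM * NUM_PROBLEMS  # 42

# Medal cutoffs, highest first. Gold (35) and bronze (19) follow the article;
# the silver cutoff (28) is an assumption added for illustration.
CUTOFFS = [("gold", 35), ("silver", 28), ("bronze", 19)]


def total_score(problem_scores: list[int]) -> int:
    """Sum per-problem grades after checking each one lies in 0..7."""
    if len(problem_scores) != NUM_PROBLEMS:
        raise ValueError(f"expected {NUM_PROBLEMS} problem scores")
    for s in problem_scores:
        if not 0 <= s <= POINTS_PER_PROBLEM:
            raise ValueError(f"score {s} outside 0..{POINTS_PER_PROBLEM}")
    return sum(problem_scores)


def medal(score: int) -> Optional[str]:
    """Return the best medal whose cutoff the score reaches, or None."""
    for name, cutoff in CUTOFFS:
        if score >= cutoff:
            return name
    return None


if __name__ == "__main__":
    # Hypothetical breakdown: full marks on five problems, none on the sixth.
    model = total_score([7, 7, 7, 7, 7, 0])
    print(model, medal(model))  # 35 gold
    # 13 points, the best public-model result mentioned above, misses bronze.
    print(13, medal(13))  # 13 None
```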

Q: When will regular people get access to this capability?

A: OpenAI won't release this level of math capability for "many months." Jerry Tworek suggested possibly by year's end. The technology needs safety testing and optimization before public release. This experimental model is purely for research—showing what's possible, not what's ready for production.

Q: How much computing power does this model require?

A: OpenAI hasn't disclosed specifics, but "thinking for hours" suggests massive computational requirements. Gary Marcus questioned the cost per problem. For context, running advanced AI models can cost hundreds to thousands of dollars per complex task, making this impractical for everyday use currently.

Q: Are other AI companies close to this achievement?

A: Rumors suggest DeepMind also earned gold at IMO 2025, though it hasn't announced it. Last year, DeepMind's AlphaProof and AlphaGeometry reached silver-medal standard by solving 4 of 6 problems. Their approach combines language models with search algorithms, while OpenAI uses pure language model reasoning.

Q: Is this the same as GPT-5?

A: No. OpenAI says GPT-5 is coming "soon" but it's unrelated to this math model. The IMO achievement came from a small research team led by Alexander Wei, using experimental techniques. It's part of the same reinforcement learning system behind recent AI agent and programming contest announcements.
