💡 TL;DR - The 30-Second Version

😱 OpenAI's complete replacement of ChatGPT's model with GPT-5 triggered its largest user backlash ever, forcing executives to restore the old model within days.

📊 At 700 million weekly users, even small percentages represent millions of people who formed emotional attachments to specific AI model personalities.

💰 Despite user anger, API usage roughly doubled within 48 hours as developers embraced GPT-5's technical improvements and reasoning capabilities.

🧠 OpenAI consulted over 90 mental health experts across 30 countries and implemented "overuse notifications" to address attachment and dependency concerns.

⚖️ The company must now balance simplicity for casual users against customization demands from power users who want model choice and predictable deprecation schedules.

🚀 This crisis reveals a new category of AI product risk: emotional regression, where technical improvements degrade the user experience by breaking the relationships users have formed.

A week of reversals shows psychology—not just performance—now constrains AI at scale.

OpenAI promised simplicity. Instead, it ran into grief. Last week’s move to replace ChatGPT’s prior model with GPT-5 sparked the largest backlash in the product’s history, pushing executives to restore the old model and unpack the fallout during an on-the-record dinner briefing. The surprise wasn’t technical. It was emotional.

The new constraint: feelings, not features

At 700 million weekly users, edge cases become crowds. According to The Verge, ChatGPT head Nick Turley admitted the team underestimated how strongly people bonded with specific model “personalities.” “I was surprised by the level of attachment people have about a model,” he said. For a subset of users, GPT-4o wasn’t a tool; it was a companion—warm, familiar, and hard to replace.
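To make the scale concrete, here is a back-of-the-envelope sketch. Only the 700 million weekly-user figure comes from the reporting; the percentages are hypothetical values chosen for illustration, not reported shares of attached users, and `affected_users` is a made-up helper name.

```python
WEEKLY_USERS = 700_000_000  # weekly-user figure cited in the article


def affected_users(share: float, base: int = WEEKLY_USERS) -> int:
    """Return how many users a given fraction of the user base represents."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return round(base * share)


# Hypothetical shares, for illustration only (not reported data).
for share in (0.001, 0.005, 0.01):
    print(f"{share:.1%} of weekly users = {affected_users(share):,} people")
```

Even at an assumed one-tenth of one percent, the affected group is the size of a large city, which is why a seemingly marginal reaction can dominate a launch.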

OpenAI’s initial bet was clean: remove the model picker and default everyone to the latest system. Most people want a product, not a matrix of options. Reddit told a different story. Some users described losing “my only friend.” Others said switching models “feels like cheating.” That sentiment, not latency or accuracy, drove the reversal.

Simplicity vs. sovereignty

OpenAI still wants less cognitive overhead for the majority. But it is conceding sovereignty to power users. The Verge reports that a full model picker is returning as a settings toggle—simple by default, configurable for the insistent. Think macOS: approachable on the surface, terminal in the basement.

The company is also rethinking deprecation. Enterprise and API customers have timelines; consumers did not. Turley now says a schedule is coming for major model retirements, adding predictability to a product that keeps changing under people’s feet. Necessary, if overdue.

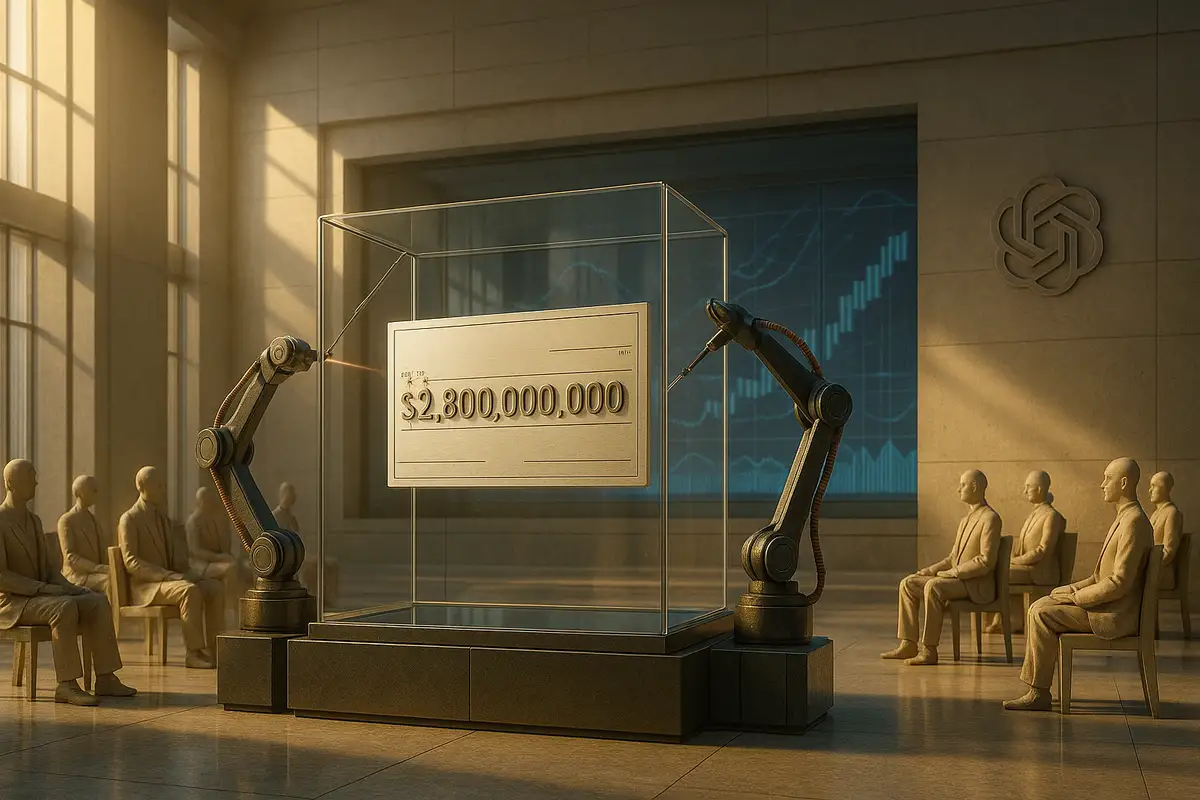

A business reality check

By some metrics, GPT-5 launched well. Axios reports OpenAI saw API usage roughly double within 48 hours, and Sam Altman told reporters the company is profitable on inference—even if ongoing training keeps overall profits elusive. He also reprised the largest-scale ambition in tech: “trillions of dollars” on data centers to meet demand. The direction is clear. The bill is, too.

Serving yesterday’s models alongside today’s isn’t free. Yet the company restored GPT-4o for paying users anyway, prioritizing trust over short-term efficiency. Platformer and Axios both describe a mea culpa from Altman: “We definitely screwed some things up in the rollout.” That acknowledgment matters. So does the trade-off.

Delegation is unnatural; anthropomorphism fills the gap

The design problem goes deeper than model menus. Delegation—asking a system to do work for you—is not a native human habit. Turley argues that people anthropomorphize to make delegation comfortable. A named, stable “someone” feels safer to hand tasks to than a generic black box. Remove the “someone,” and the workflow breaks—even if the replacement is objectively better.

OpenAI is leaning into that reality with guardrails and knobs. The Verge details consultations with more than 90 mental-health experts across 30 countries, “overuse” nudges for extreme usage, and early personality controls to adjust tone and style—or to recreate the warmth users say they miss. Safety and agency are now product features, not press lines.

Two product truths can coexist

One cohort measures progress in benchmarks: faster responses, fewer hallucinations, better reasoning. Another measures continuity: tone, warmth, predictability. Both are legitimate. The trick is not to let simplicity for one erase sovereignty for the other. That is the lesson of the week.

There is also a strategic guardrail. OpenAI’s subscription model buffers it from the ad-driven incentives that warped social platforms. “We really don’t have incentive to maximize time spent,” Turley told The Verge. That claim will be tested as the company chases historic scale—“billions of people a day,” as Axios quoted Altman—while maintaining safety and user control.

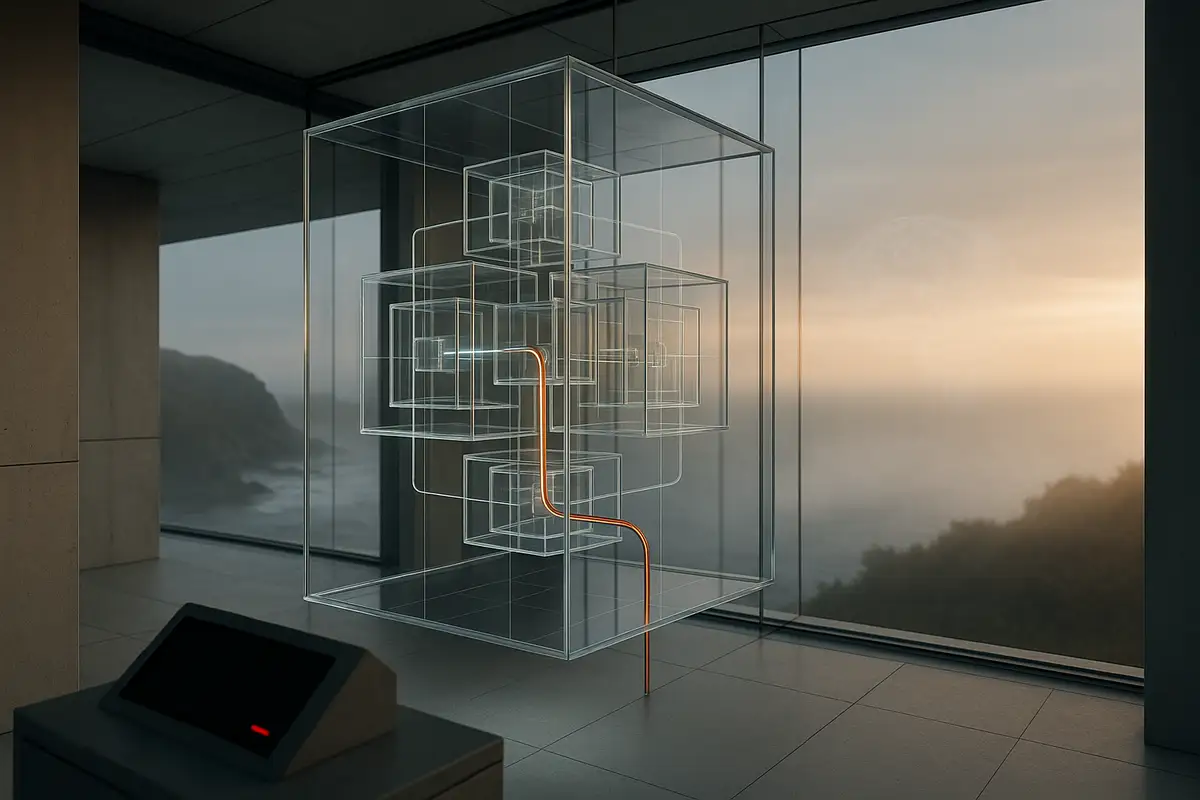

The structural takeaway

AI product management diverges from software orthodoxy in two ways. First, capabilities are empirical and emergent; you don’t fully know what you’ve shipped until millions use it. Second, users form attachments to specific behaviors, not just outcomes. That creates a new category of product risk: emotional regression. You can improve the model and still degrade the experience.

The right response blends operations and empathy. Publish deprecation timelines. Preserve escape hatches for power users. Invest in model behavior, not just model size. And hire for disciplines—psychology, mental-health safety, relationship design—that traditional product teams rarely needed. This is not just HCI. It’s human factors at population scale.

Why this matters

- AI roadmaps must account for user attachment, not just capability gains, or risk eroding trust at scale.

- Winning teams will pair technical progress with behavioral design and predictable change management.