In any given week, OpenAI, Anthropic, or Google DeepMind posts a new assurance that its systems are “safe and broadly beneficial”. The prose changes. The promise does not.

Call it “responsible AI”. Better, call it what it is: a fight for legitimacy.

In pharmaceuticals, companies learned long ago that safety talk without structure is an invitation to distrust. Their communication teams work inside a fixed architecture, a message house. One roof. A few load-bearing pillars. Patient welfare. Rigorous evidence. Full disclosure of risk. Every press release and sales pitch must point back to those beams or it does not ship.

From this, three lessons follow. First, decide which safety commitments are non-negotiable. Second, back each with repeatable proof, not vibes. Third, make research, policy, and marketing sing from the same score rather than improvising in public.

AI labs often do the opposite. Their safety statements are earnest but episodic, a series of blog posts rather than a narrative. OpenAI’s journey from withholding GPT-2 for fear of misuse to blitzscaling GPT-4 products is not only a product story. It is a messaging failure. When former insiders say safety culture has taken a back seat to “shiny products”, they are really describing the absence of a stable roof.

Anthropic comes closest to discipline. It sacrifices launches when risk feels too high, explains its reasoning in plain language, and publishes the underlying research. Yet even here, rumor and external pressure can pull the story off course. Without a clearly articulated house, every controversy creates a new hallway rather than reinforcing the same walls.

Climate policy offers a second lesson. After years of doom and drift, successful campaigns moved to a simple structure. Urgency. Solvability. Action. The facts differ by audience, but the roof does not. That is how you wear down denial and greenwashing. You repeat, you evidence, you act.

AI labs face a similar credibility cliff. They are asking the public to trust that self-regulated corporations will steer a general-purpose technology that could restructure economies and security doctrines. In that context, a sporadic safety blog is not reassurance. It is an alibi waiting to be cross-examined.

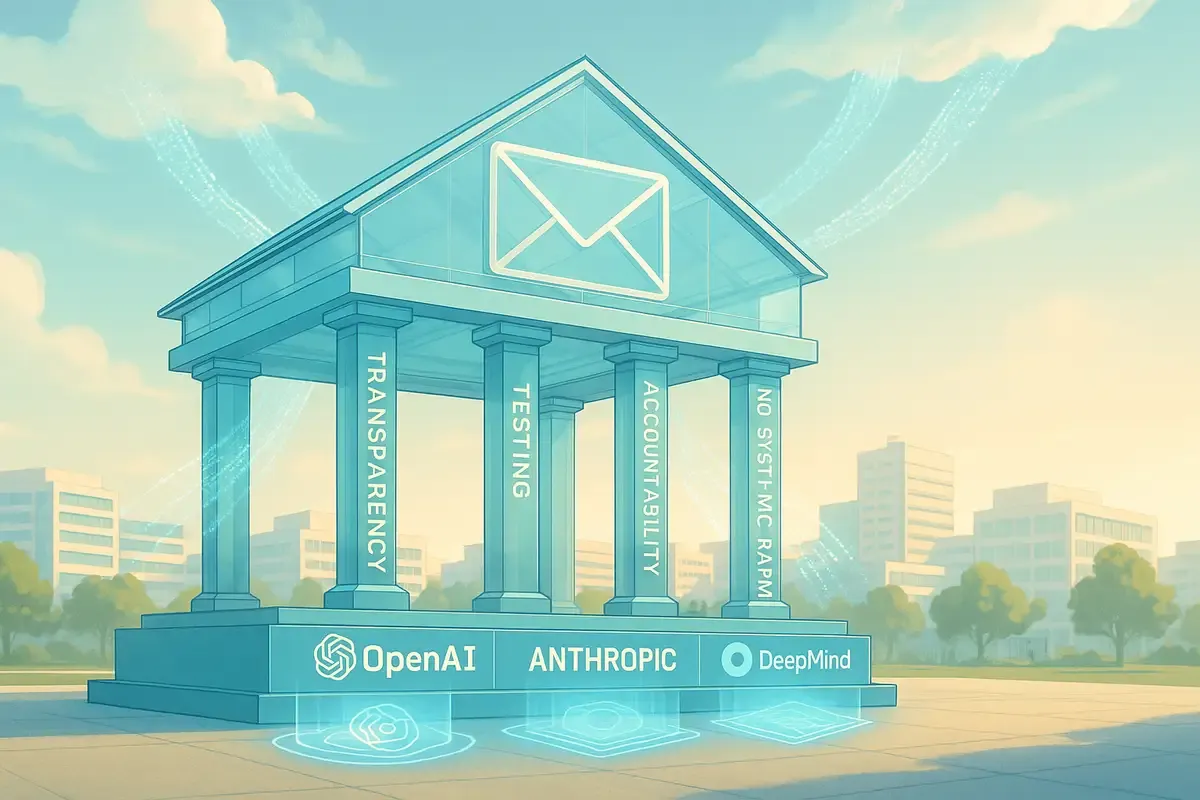

If these companies truly believe safety comes first, they should prove it as other high-stakes fields already do. Build a safety message house. Nail down three or four pillars, such as “do no systemic harm”, “test before release”, and “transparency by default”. Tie bonuses, launch gates, and public statements to those beams.

Trust is not a press cycle. It is a structure. In AI, narrative discipline is not a branding flourish. It is a safety feature. Imagine the alternative: the first serious accident involving an advanced model unfolds, and each lab scrambles to explain, redefine, or selectively quote its earlier promises. In that moment, the question will not be whose branding was most inspiring, but whose story was consistent enough to be believed by courts and publics.