San Francisco | Tuesday, February 17, 2026

The Pentagon is close to labeling Anthropic a supply chain risk. Defense Secretary Pete Hegseth wants Claude available for "all lawful purposes." Anthropic draws the line at mass surveillance and autonomous weapons. After Claude was reportedly deployed during the Venezuela operation that killed 83 people, the company's own safety commitments may be its biggest liability.

India is placing the opposite bet. At New Delhi's AI Impact Summit, the government announced $200 billion in expected AI investment over two years. Adani alone pledged $100 billion for renewable-powered data centers. Anthropic opened its first India office.

We sent four AI agents to study 20,000 viral posts. They mapped every pattern in seven minutes. They couldn't write a single one.

Stay curious,

Marcus Schuler


Pentagon Prepares to Label Anthropic a Supply Chain Risk Over Claude Military Limits

Defense Secretary Pete Hegseth is close to designating Anthropic a supply chain risk over the company's refusal to let the military use Claude without restrictions. The move would force every defense contractor to certify it does not use the model.

The dispute centers on scope. Hegseth demands an "all lawful purposes" standard. Anthropic draws the line at mass surveillance of American citizens and autonomous weapons without human oversight. The company argues existing surveillance law was not written for AI capable of cross-referencing data at scale.

Tensions spiked after the Wall Street Journal reported that Claude, accessed through Anthropic's Palantir partnership, was used during the January operation in Venezuela that killed 83 people. Anthropic neither confirmed nor denied involvement.

Eight of the ten largest U.S. companies use Claude. The military contract accounts for $200 million of Anthropic's $14 billion in annual revenue, but a supply chain label would trigger compliance audits far beyond defense. Google, OpenAI, and xAI have already dropped military guardrails for unclassified systems. Claude Gov remains the only AI model operating on classified networks.

"It will be an enormous pain in the ass to disentangle," a Pentagon official said, "and we are going to make sure they pay a price."

Why This Matters:

- A supply chain risk label would force compliance audits across the $886 billion defense budget, reaching every contractor that touches Claude

- The designation would set a precedent that AI safety restrictions are incompatible with military procurement

✅ Reality Check

What's confirmed: Hegseth is "close" to the designation. Anthropic refuses mass surveillance and autonomous weapons use. Claude Gov operates on classified networks.

What's implied (not proven): That the Pentagon will follow through. "Close" is not "done," and the downstream compliance cost may give defense officials pause.

What could go wrong: Cutting Claude Gov from classified networks before a replacement is tested would create a capability gap no competitor can fill today.

What to watch next: Whether the designation actually drops, and how fast defense contractors begin compliance reviews.

The One Number

9 — Consecutive trading days Amazon stock fell through Friday, its longest losing streak since 2006. The shares dropped from $244.98 to $198.79, shedding 19% in less than two weeks. Amazon committed over $125 billion in AI infrastructure capex. Wall Street's verdict so far: spending is not the same as earning.

Source: Sherwood News
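
The 19% figure follows directly from the two share prices quoted above. A minimal Python sketch of the arithmetic, for readers who want to check it; the function name `percent_decline` is our own illustration, not something from the source:

```python
def percent_decline(start: float, end: float) -> float:
    """Return the drop from start to end as a percentage of start."""
    if start <= 0:
        raise ValueError("start price must be positive")
    return (start - end) / start * 100.0


# Share prices quoted in the item above.
drop = percent_decline(244.98, 198.79)
print(f"{drop:.1f}%")  # roughly 18.9%, which rounds to the 19% cited
```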

India Expects $200 Billion in AI Investment as Summit Draws Global Tech Pledges

India's Technology Minister Ashwini Vaishnaw told the AI Impact Summit in New Delhi that the country expects $200 billion in AI investment over two years. The pledges dwarf anything India has attracted for a single technology sector.

Adani Enterprises leads with a $100 billion commitment to renewable-powered data centers, scaling capacity from two to five gigawatts by 2035. Blackstone invested $1.2 billion in GPU cloud startup Neysa, the largest AI funding round in Indian history. Google, Microsoft, and Amazon have roughly $70 billion in data center projects underway.

Anthropic opened its first India office in Bengaluru and partnered with Infosys to build AI agents for regulated industries. India is the second-largest market for Claude.ai. The government's $1.1 billion AI fund subsidizes GPU compute to under $1 per hour.

The tension: Indian IT stocks hit their worst stretch since April. One venture investor warned that IT services and business process outsourcing could "almost completely disappear" within five years. India is building the infrastructure to power AI while the industry that built its tech economy faces replacement by it.

Why This Matters:

- The $200 billion commitment positions India as the largest AI infrastructure market outside the U.S. and China

- If Indian outsourcing erodes while data center investment ramps, the country faces a workforce transition with no precedent

AI Image of the Day

Prompt: Ultra realistic close-up portrait of a 27 year old woman, deep brown skin tone, natural skin texture with visible pores, elegant evening makeup, defined eyebrows, glossy lips, direct eye contact, cinematic soft lighting, 85mm lens, RAW DSLR photo, realistic imperfections, neutral dark background

Four AI Agents Analyzed 20,000 Viral Posts in Seven Minutes, Then Failed to Write One

We deployed four AI research agents across Twitter, LinkedIn, Instagram, and Facebook to analyze what makes content go viral. They processed 20,000 posts in seven minutes and identified every structural pattern. They could not produce a single viral post.

The agents mapped recurring elements: hook formats that stop the scroll, emotional arcs that drive shares, platform-specific signals that trigger algorithmic amplification. The analysis was thorough, fast, and entirely usable as a research tool.

The failure point was creative output. When the agents tried to generate content from their own findings, the results read like pattern-matching, not communication. SkillsBench, an academic benchmark for agent capability, suggests this reflects how large language models process procedural knowledge. They can describe what works. They cannot do it.

The gap has practical implications. Content teams deploying AI agents for strategy and research will get real value. Teams expecting agents to replace human creative judgment will not.

Why This Matters:

- AI agents can compress weeks of content research into minutes, but the output requires human editing to perform

- SkillsBench findings suggest this is a structural limitation of language models, not a temporary one that training will fix

🧰 AI Toolbox

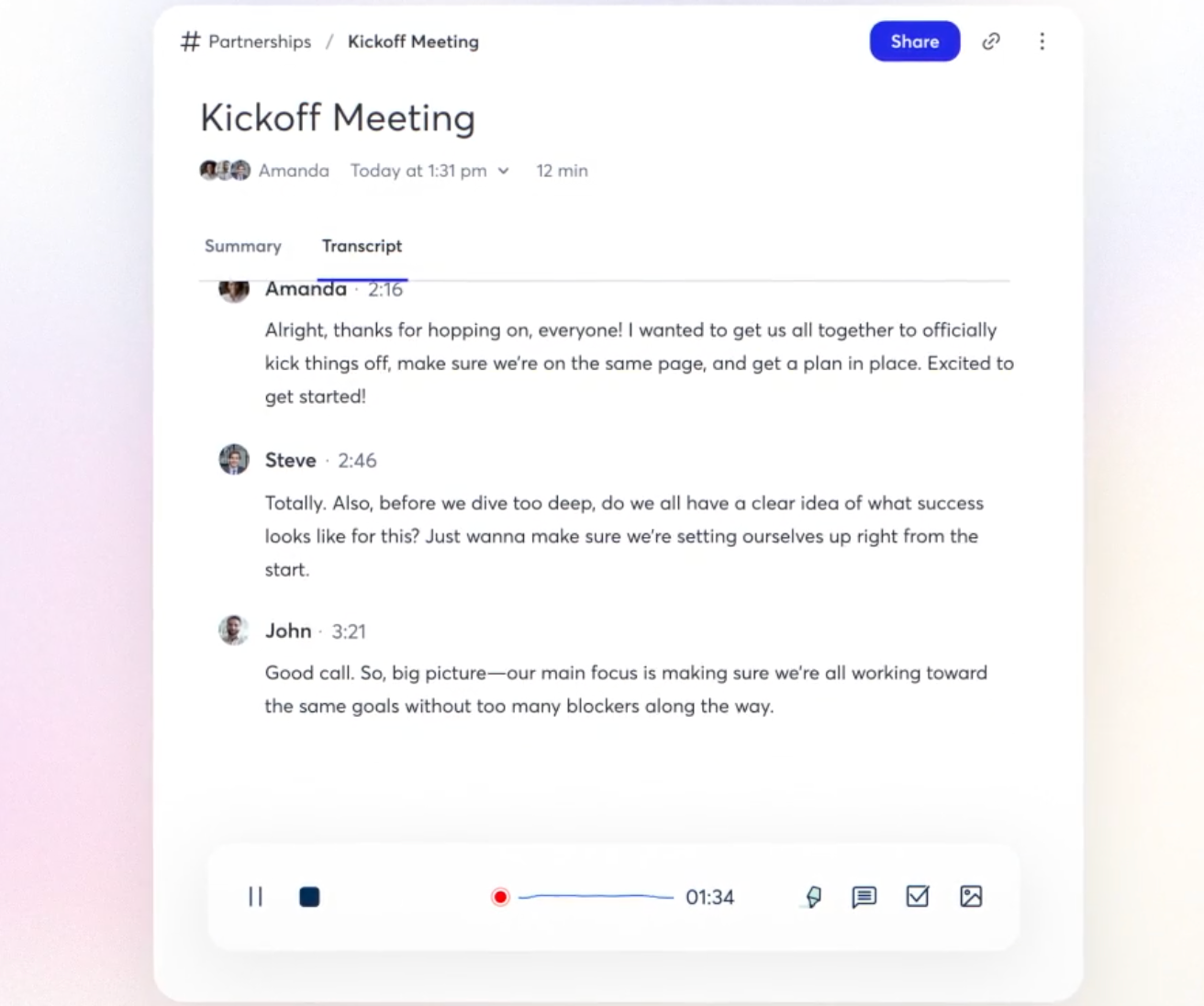

How to Turn Every Meeting Into Searchable Notes and Action Items with Otter.ai

Otter.ai joins your video calls, transcribes the conversation in real time, and generates a summary with action items before the meeting ends. It works with Zoom, Google Meet, and Microsoft Teams without requiring everyone on the call to install anything. The free plan covers 300 minutes per month.

Tutorial:

- Sign up free at otter.ai and connect your Google or Microsoft calendar

- Enable OtterPilot, which automatically joins your scheduled meetings and starts recording

- Watch the live transcript appear during the call with speaker labels and timestamps

- After the meeting, open the Otter summary for key topics, action items, and decisions extracted automatically

- Highlight any passage and ask Otter AI a follow-up question ("What did Sarah say about the Q2 timeline?")

- Share the transcript with attendees who missed the call or need a reference

- Search across all past meetings by keyword, speaker, or topic to find that one thing someone said three weeks ago

URL: https://otter.ai

What To Watch Next (24-72 hours)

- DoorDash: Reports tomorrow after close. Stock dropped 8% last Thursday on fears AI agents could disrupt food delivery logistics. Revenue should beat consensus. The real story: how management addresses the AI disruption narrative.

- India AI Impact Summit: Opens Thursday in New Delhi with delegates from 80 countries. India is pushing for a "global AI commons" framework. Nvidia CEO Jensen Huang pulled out, sending a delegate. Watch whether the initiative gains traction or gets polite applause.

- Scale AI v. Pentagon: The data labeling company sued the DoD after losing a $708 million NGA contract to Enabled Intelligence. Some filings will be classified. The case could reveal how the military evaluates AI vendors, timely context as four labs negotiate classified access terms.

🛠️ 5-Minute Skill: Turn Conference Notes Into a Blog Post Draft

You just spent two days at an industry conference. Your notes are a mess of half-sentences, speaker names you can barely read, and three big ideas that felt connected at the time. Marketing wants a blog post by Friday. You're staring at scribbles wondering where the insight went.

Your raw input:

DeveloperWeek 2026 — my notes, day 1

keynote: "agents are the new apps" — guy from LangChain

- said 80% of enterprise customers moved from chatbots to agents in 6 months

- agents = autonomous, chatbots = reactive (his framing)

- audience seemed skeptical, lots of questions about reliability

panel: AI in production

- woman from stripe said they run 40+ agents internally, most handle data validation

- guy from datadog: "observability for agents is where logging was in 2015"

- heated debate about whether agents should have "undo" buttons

- someone asked about cost — panelist dodged

workshop: building with claude code

- hands-on, paired with a stranger

- built a working API endpoint in 20 minutes

- instructor said "the skill isn't coding anymore, it's specifying"

- my partner was a PM who'd never coded, she shipped a working prototype

big takeaway: the gap between "can build" and "should build" is the new question

The prompt:

You are a senior content strategist writing a blog post from raw conference notes. The post should position the author as someone who attended, listened carefully, and has a point of view.

From these notes, write a blog post draft that:

1. Opens with a specific observation from the event (not "I attended X conference")

2. Identifies 2-3 themes and weaves them into a narrative

3. Includes at least 2 direct quotes or data points from sessions

4. Ends with a concrete takeaway, not a question

Conference notes:

[paste notes here]

Rules:

- 500-700 words. Blog length, not essay length.

- First person. Conversational but credible.

- Don't summarize every session. Pick the thread that connects them.

- If a note is vague, skip it. Don't inflate thin observations.

- Include the conference name and at least one speaker name for credibility.

What you get back:

The Skill Isn't Coding Anymore

Halfway through a hands-on workshop at DeveloperWeek 2026, I watched a product manager who had never written a line of code ship a working API endpoint in 20 minutes. She wasn't debugging or reading documentation. She was describing what she wanted, clearly and precisely, and the AI built it.

That moment crystallized the theme running through two days in San Jose: the gap between "can build" and "should build" is collapsing, and the people who handle it well aren't necessarily the ones with computer science degrees.

The conference opened with a keynote arguing that agents are the new apps. LangChain's presentation claimed 80% of their enterprise customers moved from chatbots to autonomous agents in the past six months. The distinction matters. Chatbots react. Agents act. The audience was skeptical, especially about reliability, but the direction was clear.

A production panel made the shift concrete. A Stripe engineer described running over 40 agents internally, most handling data validation. A Datadog leader offered the sharpest line of the day: "Observability for agents is where logging was in 2015." The tooling hasn't caught up with the ambition.

What surprised me was the cost question nobody answered. When an audience member asked about the economics of running dozens of agents in production, the panelists pivoted to reliability instead.

The workshop brought it home. The instructor framed the shift simply: "The skill isn't coding anymore. It's specifying." Watching my partner produce a working prototype confirmed it. The barrier to building dropped. The barrier to building well didn't.

Three things I'm taking back to my team: first, if you're still calling your AI tools "chatbots," you're behind. Second, agent observability is an unsolved problem and whoever cracks it will build a real business. Third, hire for specification skills, the ability to describe what needs to exist with enough precision that a machine can build it.

Why this works

The prompt forces a point of view, not a trip report. "Don't summarize every session" kills the bullet-point recap most conference posts become. Requiring specific quotes and data points adds credibility. Ending with a takeaway instead of a question gives readers something to act on.

Where people get it wrong: Asking AI to "write a blog post about my conference." You'll get a generic summary that could apply to any event. The specifics (names, numbers, that moment in the workshop) are what make a conference post worth reading.

What to use

Claude (Claude Opus 4.6): Best at finding the narrative thread across scattered notes. Won't inflate thin material into false insights. Watch out for: May produce a shorter draft if notes are sparse. That's honest, not a bug.

ChatGPT (GPT-4o): Strong at punchy blog hooks and hitting word count targets. Good LinkedIn-ready formatting. Watch out for: Tends to make every conference sound transformative. Lukewarm notes shouldn't produce a breathless post.

Bottom line: Claude for honest synthesis, ChatGPT for polished blog format. The quality of your raw notes determines the output. No amount of AI rescues an empty page of scribbles.
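
If you reuse the prompt from this section often, filling it in with code saves retyping. A minimal Python sketch, assuming you paste or send the resulting string to whichever model you prefer; `build_blog_prompt`, `PROMPT_TEMPLATE`, and the word-count parameters are illustrative names of our own, not part of any library:

```python
# Prompt text from this section; {notes} and the word range are filled in later.
PROMPT_TEMPLATE = """You are a senior content strategist writing a blog post from raw conference notes. The post should position the author as someone who attended, listened carefully, and has a point of view.

From these notes, write a blog post draft that:
1. Opens with a specific observation from the event (not "I attended X conference")
2. Identifies 2-3 themes and weaves them into a narrative
3. Includes at least 2 direct quotes or data points from sessions
4. Ends with a concrete takeaway, not a question

Conference notes:
{notes}

Rules:
- {min_words}-{max_words} words. Blog length, not essay length.
- First person. Conversational but credible.
- Don't summarize every session. Pick the thread that connects them.
- If a note is vague, skip it. Don't inflate thin observations.
- Include the conference name and at least one speaker name for credibility.
"""


def build_blog_prompt(notes: str, min_words: int = 500, max_words: int = 700) -> str:
    """Insert raw notes and a word range into the prompt template."""
    cleaned = notes.strip()
    if not cleaned:
        # An empty page of scribbles gives the model nothing to work with.
        raise ValueError("notes are empty; the prompt needs raw material")
    if min_words > max_words:
        raise ValueError("min_words must not exceed max_words")
    return PROMPT_TEMPLATE.format(
        notes=cleaned, min_words=min_words, max_words=max_words
    )


# Example with a short excerpt of the notes shown earlier.
sample_notes = "DeveloperWeek 2026, day 1\nworkshop: built a working API endpoint in 20 minutes"
prompt = build_blog_prompt(sample_notes)
print(prompt)
```

The function refuses empty notes on purpose: the quality of the raw material determines the output, so failing early is more useful than sending a hollow prompt.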

AI & Tech News

Micron Commits $200 Billion to US Expansion as AI Memory Demand Outstrips Supply

Micron can currently fill only 50% to 66% of orders from key customers as AI applications drive surging demand for memory chips. The company is building out US manufacturing capacity to close the gap.

Thrive Capital Closes Record $10 Billion Fund Amid Deployment Questions

Josh Kushner's Thrive Capital, a backer of OpenAI, Stripe, and SpaceX, raised $10 billion for its 10th fund. The firm distributed just 64% of capital raised during the 2021 peak, raising questions about how fast it can put the new pool to work.

LLMs Erode Vertical SaaS Moats as Software Stocks Shed Nearly $1 Trillion

An analyst argues large language models are dismantling the domain-specific workflows that made vertical SaaS companies defensible. FactSet's market value alone fell from a $20 billion peak to under $8 billion.

Danaher Nears $10 Billion Acquisition of Pulse Oximeter Maker Masimo

Healthcare conglomerate Danaher is close to acquiring Masimo for roughly $10 billion. The deal would give Danaher control of blood-oxygen sensing patents at the center of Masimo's ongoing dispute with Apple.

Cohere Launches Open Multilingual Models Supporting 70+ Languages

Cohere released Tiny Aya, a family of open-weight models with 3.35 billion parameters trained on 64 H100 GPUs. The models support over 70 languages and run offline, targeting multilingual and low-resource environments.

AI Reasoning Models Show Growing Promise in Advanced Mathematics

Researchers from OpenAI, DeepMind, and Anthropic argue the latest reasoning models have become significantly more useful for mathematical work. Math is emerging as the benchmark that best measures whether AI handles structured, multi-step problem-solving.

English Town Fights Equinix's $5 Billion Data Center on Protected Green Belt

Residents of Potters Bar near London are opposing a proposed Equinix data center that would be built on protected green belt land. The conflict reflects growing tension between AI infrastructure expansion and local conservation efforts across the UK.

Google Reveals Android XR Glasses UI With Physical Buttons and "Glimmer" Interface

Newly surfaced design docs show Google's upcoming XR glasses will feature a new interface called "Glimmer" with required physical buttons. The details signal a 2026 launch targeting developers building for the wearable form factor.

Activist Investor Calls Japanese Toilet Giant Toto a Hidden AI Play

UK fund Palliser Capital targeted Toto, Japan's largest toilet manufacturer, as an "undervalued" AI investment based on its advanced ceramics business tied to semiconductor production. The activist is pushing Toto to expand its chip supply chain role.

Replit CEO Says India Is Platform's Second-Largest Market

Replit CEO Amjad Masad said India is the AI coding platform's second-largest market by active users and announced a Razorpay integration to serve its growing Indian base. Masad argued AI tools now let "two kids in India compete with Salesforce."

🚀 AI Profiles: The Companies Defining Tomorrow

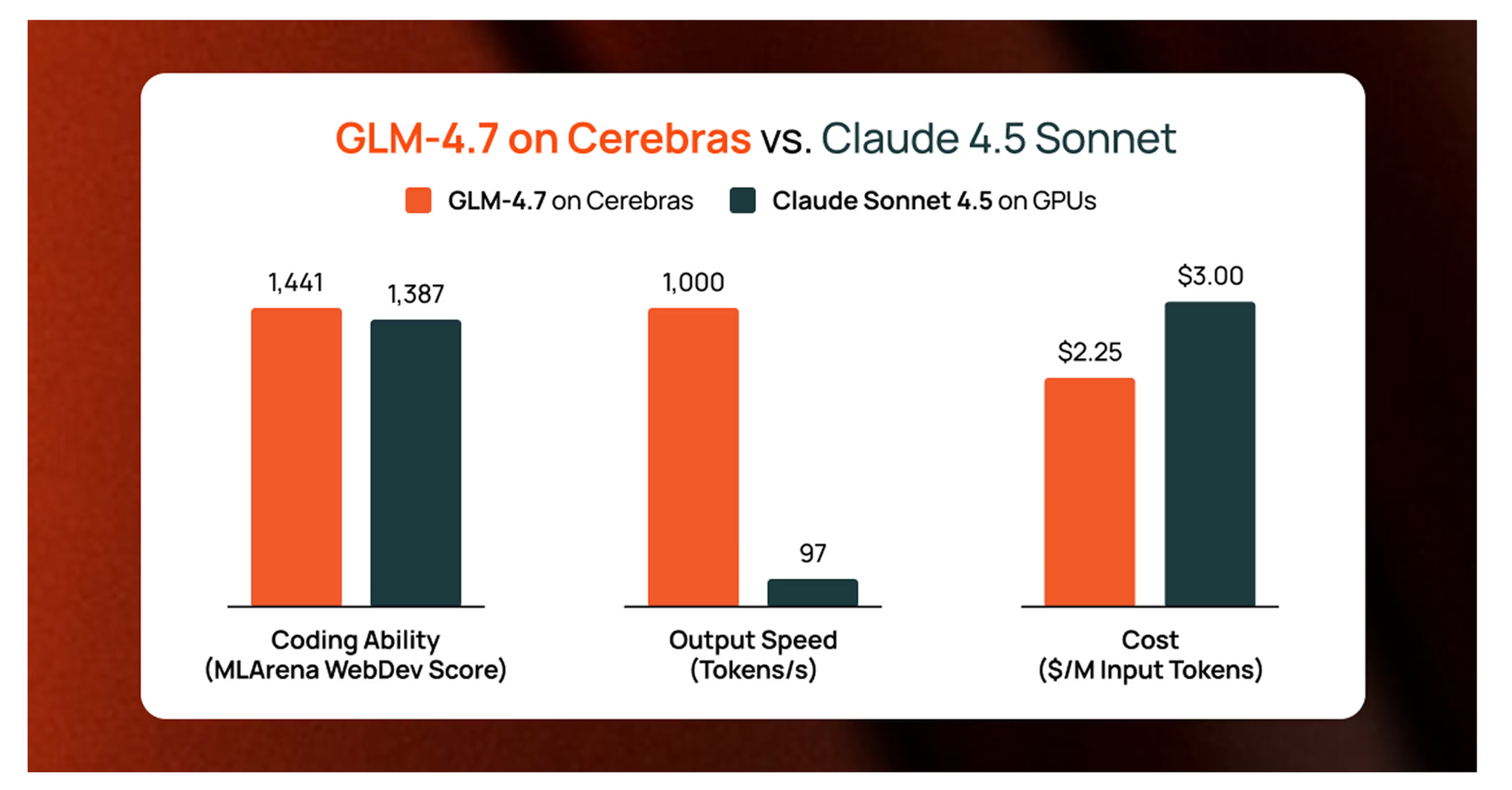

Cerebras Systems

Cerebras builds the world's largest computer chip, a silicon wafer the size of a dinner plate, and just became the first non-Nvidia company to run a production OpenAI model. 🧠

Founders

Andrew Feldman co-founded Cerebras in 2015 with four engineers from SeaMicro, a server startup they sold to AMD in 2012 for $334 million. Gary Lauterbach, Sean Lie, Michael James, and Jean-Philippe Fricker followed Feldman into a bet that AI chips were too small. Their thesis: if you make the chip the size of the entire silicon wafer instead of cutting it into hundreds of pieces, you eliminate the bottleneck. Feldman remains CEO.

Product

The Wafer Scale Engine 3 is Cerebras's third-generation chip. It packs 4 trillion transistors into a single dinner-plate-sized wafer, roughly 50 times larger than Nvidia's H100. The design eliminates data movement between chips, the biggest drag on AI performance, by keeping everything on one piece of silicon. OpenAI's GPT-5.3-Codex-Spark, the first production model running on Cerebras hardware, generates code at 1,000 tokens per second, 15 times faster than its predecessor on Nvidia GPUs.

Competition

Nvidia dominates AI training and inference with approximately 80% market share. AMD's MI300X targets the same workloads at lower cost. Google's TPUs serve internal needs. Cerebras occupies a niche: inference speed for latency-sensitive applications. The OpenAI partnership, a $10 billion deal for 750 megawatts of compute through 2028, validates the approach but does not guarantee broader adoption.

Financing 💰

$1 billion Series H in February 2026 at a $23 billion valuation, nearly tripling the $8.1 billion from five months earlier. Tiger Global led. AMD participated. Total funding exceeds $2.8 billion across nine rounds.

Future ⭐⭐⭐⭐

Cerebras cracked the hardest problem in AI hardware: getting OpenAI to bet on someone other than Nvidia. The $10 billion contract is real revenue, not a press release. But wafer-scale manufacturing is expensive and fragile. TSMC is the sole supplier. One fabrication issue and the pipeline stalls. If the OpenAI deal scales, Cerebras becomes the second pillar of AI infrastructure. If it stays niche, the $23 billion valuation will look generous. 🎯

🔥 Yeah, But...

Anthropic committed $20 million this week to Public First Action, a group backing U.S. political candidates who support AI regulation and safety standards. The rest of the industry took a different approach: OpenAI co-founder Greg Brockman, Andreessen Horowitz, and Perplexity contributed to Leading the Future, a pro-AI PAC that has raised $125 million to oppose state-level regulation.

Sources: CNBC, February 12, 2026

Our take: Same industry. Same set of San Francisco zip codes. $145 million combined, aimed in opposite directions. One lab pays for oversight. The rest pay to prevent it. The politicians collecting these checks couldn't explain a transformer architecture at gunpoint, but they can now retire comfortably either way the vote goes. Silicon Valley managed to turn AI governance into a bidding war against itself. Both sides claim to be "protecting innovation." Both sides employ lobbyists who bill hourly. The result: a regulation debate funded entirely by the companies the regulation would cover, which is not how accountability usually works but is very much how San Francisco works.