💡 TL;DR - The 30-Second Version

👉 Major publishers including Reddit, Yahoo, and Medium launched the Really Simple Licensing standard Wednesday to charge AI companies for training data.

📊 Reddit already gets an estimated $60 million annually from Google for data access but still backs the new collective licensing protocol.

🏭 Publishers can now set three payment models: pay-per-crawl, pay-per-inference when AI cites their content, or subscription fees.

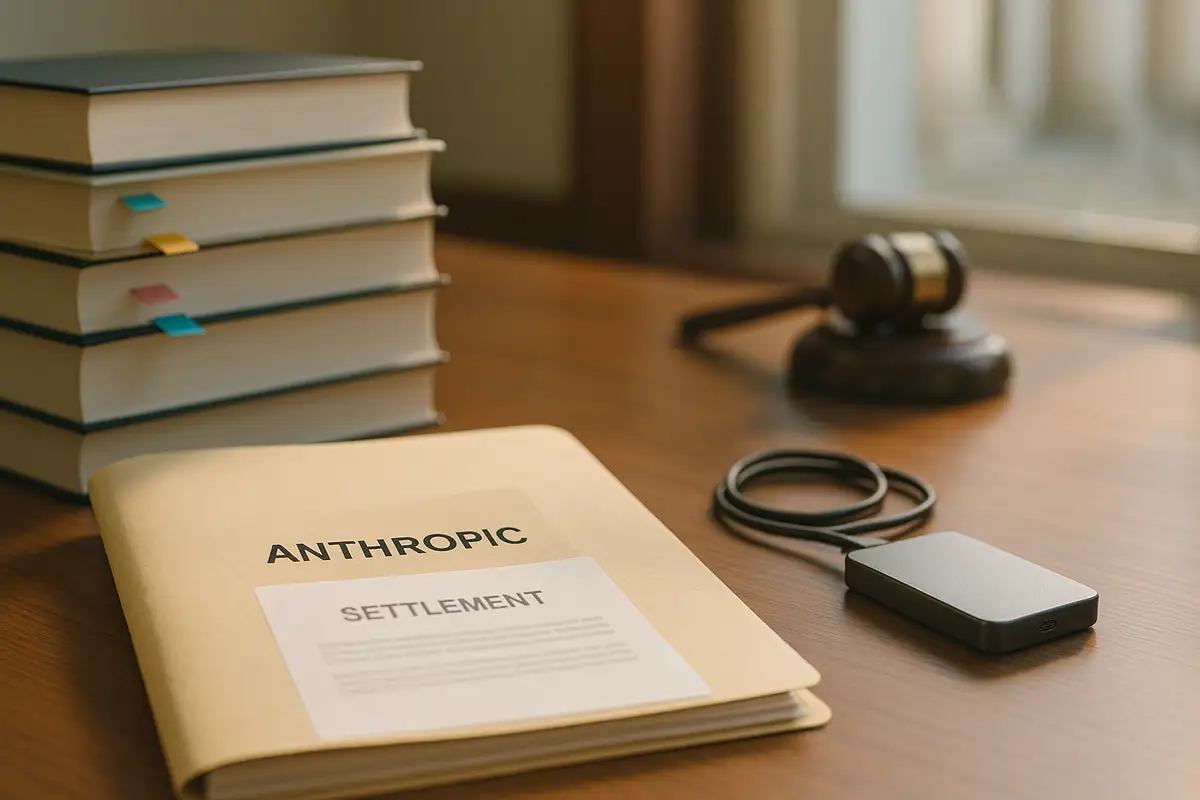

⚖️ The move follows Anthropic's $1.5 billion copyright settlement and comes amid at least 40 pending lawsuits against AI companies.

🌍 No major AI companies have committed to honoring the standard, leaving enforcement entirely dependent on voluntary compliance.

🚀 Success could shift the web's foundation from advertising revenue to direct licensing payments for content creators.

An open protocol promises pay-per-crawl and pay-per-inference; real power arrives only if AI companies agree to honor it.

Major web publishers launched the Really Simple Licensing (RSL) standard Wednesday, creating the first unified framework for extracting payment from AI companies that train on their content. Reddit, Yahoo, Medium, and dozens of other sites can now embed licensing terms directly into robots.txt files, moving beyond simple crawler permissions to demand subscription fees, per-crawl charges, or compensation each time an AI model references their work.

The launch follows Anthropic's $1.5 billion copyright settlement and comes amid at least 40 pending lawsuits targeting AI companies for unauthorized data use. The RSL Collective, a nonprofit formed to negotiate licensing deals, represents the publishing industry's attempt to monetize what has historically been free training data.

Yet no major AI company has agreed to honor the new standard. OpenAI, Google, Meta, and Anthropic declined to comment on RSL compliance, leaving publishers to navigate the same enforcement challenge that has plagued web standards for decades.

The collective licensing architecture

The RSL standard builds on the 25-year-old RSS protocol that enabled content syndication across the early web. Co-creator Eckart Walther, who developed RSS at Yahoo, partnered with former IAC Publishing CEO Doug Leeds to design what they describe as "RSS's enforcement layer"—one that demands payment instead of just enabling distribution.

Their model mirrors music industry mechanics. Just as ASCAP and BMI pool musicians' rights to negotiate with radio stations and streaming services, the RSL Collective creates a single licensing clearinghouse for web content. Publishers join at no cost, and the collective handles negotiations and royalty distribution across potentially millions of sites.

The technical implementation allows three pricing structures: pay-per-crawl (compensation for each bot visit), pay-per-inference (fees when AI models reference content in responses), or subscription access. Publishers can also specify attribution requirements or offer content under Creative Commons terms.
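To make the three pricing structures concrete, here is a minimal Python sketch. The class, field, and function names are hypothetical illustrations rather than anything defined by the RSL standard, and the rates simply reuse the example figures quoted in the FAQ below.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum


class PricingModel(Enum):
    PAY_PER_CRAWL = "pay-per-crawl"
    PAY_PER_INFERENCE = "pay-per-inference"
    SUBSCRIPTION = "subscription"


@dataclass(frozen=True)
class LicenseTerms:
    """Hypothetical container for one publisher's terms (not the RSL schema)."""
    model: PricingModel
    rate_usd: Decimal                  # per crawl, per inference, or per month
    attribution_required: bool = False


def monthly_fee(terms: LicenseTerms, crawls: int = 0, inferences: int = 0) -> Decimal:
    """Return what a licensee would owe for one month under the given terms."""
    if terms.model is PricingModel.PAY_PER_CRAWL:
        return terms.rate_usd * crawls
    if terms.model is PricingModel.PAY_PER_INFERENCE:
        return terms.rate_usd * inferences
    return terms.rate_usd  # subscription: flat fee regardless of usage


per_crawl = LicenseTerms(PricingModel.PAY_PER_CRAWL, Decimal("0.01"))
print(monthly_fee(per_crawl, crawls=5_000))
```

The sketch uses `Decimal` rather than floats so that fractions of a cent add up exactly, which matters when the per-use rate is tiny and the volume is large.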

Early supporter momentum appears substantial. Reddit alone receives an estimated $60 million annually from Google for training data access, yet still backs RSL—suggesting that even publishers with existing deals see value in standardized pricing. Ziff Davis, which owns PCMag and is actively suing OpenAI for copyright infringement, views RSL as additional leverage in ongoing legal battles.

Three stakeholder calculations

From publishers' perspective, RSL addresses an existential threat. Web traffic to traditional media has declined as AI-powered search results provide direct answers without requiring users to click through to source sites. Google's AI Overviews and ChatGPT's search features exemplify this displacement—they deliver information while capturing engagement that previously drove advertising revenue.

Medium CEO Tony Stubblebine articulated the zero-sum framing: "Right now, AI runs on stolen content. Adopting this RSL Standard is how we force those AI companies to either pay for what they use, stop using it, or shut down."

AI companies face different pressures. The Anthropic settlement established concrete financial liability for unauthorized use, while Getty Images, The New York Times, and Reddit have filed major lawsuits seeking billions in damages. Unlike software piracy or music streaming, where legal frameworks evolved over decades, AI training data exists in largely uncharted legal territory.

From their perspective, RSL potentially offers regulatory clarity. Rather than fighting individual lawsuits or negotiating separate deals with thousands of publishers, they could license content at web scale through a single protocol. Sundar Pichai's public comments about needing "a system like RSL" suggest at least some industry recognition of the legitimacy gap.

Infrastructure providers see business opportunity. Fastly partnered with RSL to provide enforcement mechanisms—acting as what Leeds describes as "the bouncer checking IDs" to block AI crawlers that haven't agreed to licensing terms. This creates a new revenue stream for content delivery networks while addressing publishers' technical enforcement challenges.

The enforcement reality

RSL's technical architecture reveals both ambition and limitations. The standard works across websites, videos, books, and training datasets, with encryption capabilities for premium content. But enforcement depends entirely on voluntary compliance and technical infrastructure that doesn't universally exist.

Fastly can block non-compliant crawlers, but only for sites using its services. This leaves gaps in coverage across the broader web, while most publishers lack the technical resources to implement sophisticated bot-blocking independently.
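The "bouncer" idea can be sketched in a few lines of Python. This is an illustrative assumption about how an edge check might work, not Fastly's actual implementation; the crawler names and the `gate_request` function are made up for the example.

```python
# Hypothetical crawler names; a real deployment would maintain its own lists.
KNOWN_AI_CRAWLERS = {"examplebot", "samplecrawler"}
LICENSED_CRAWLERS = {"examplebot"}


def gate_request(user_agent: str) -> int:
    """Return an HTTP status: 200 to serve the page, 403 to refuse it."""
    ua = user_agent.lower()
    matched = {name for name in KNOWN_AI_CRAWLERS if name in ua}
    if not matched:
        return 200  # not a recognized AI crawler, e.g. a person's browser
    if matched & LICENSED_CRAWLERS:
        return 200  # crawler has agreed to licensing terms
    return 403      # recognized AI crawler without a license


print(gate_request("Mozilla/5.0 (compatible; SampleCrawler/1.0)"))
```

User-agent strings are self-reported and easy to spoof, so a check like this only stops crawlers that identify themselves honestly. That is one reason enforcement still leans on voluntary compliance.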

The deeper challenge involves usage tracking. Pay-per-crawl fees are straightforward to monitor, but pay-per-inference requires AI companies to report when they reference specific content in responses. This presents both technical and business complications that go to the heart of how large language models actually work.

Most AI training happens in massive batches where individual sources become statistically untrackable once ingested into neural networks. OpenAI's ChatGPT can cite sources when acting as a search engine replacement, but can't easily identify which training documents influenced a given response. The infrastructure for granular usage tracking simply doesn't exist at most AI companies.

Leeds acknowledges this limitation but suggests the gap is bridgeable: "Some of the licensing agreements they've already done have required them to be able to report on it, so it's possible. It doesn't have to be perfect. It just has to be good enough to get people paid."
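For the narrower case where an AI product explicitly cites sources, "good enough" reporting could be as simple as counting citations per publisher. The Python sketch below assumes a hypothetical log of cited URLs and a hypothetical flat per-citation fee; it does not attempt the much harder problem of attributing a response to specific training documents.

```python
from collections import Counter
from decimal import Decimal
from urllib.parse import urlparse


def tally_citations(cited_urls: list[str]) -> Counter:
    """Count how many times each publisher domain was cited."""
    return Counter(urlparse(url).netloc.lower() for url in cited_urls)


def amounts_owed(cited_urls: list[str], fee_per_citation: Decimal) -> dict[str, Decimal]:
    """Multiply each domain's citation count by a flat per-citation fee."""
    return {
        domain: count * fee_per_citation
        for domain, count in tally_citations(cited_urls).items()
    }


# Hypothetical citation log for illustration only.
log = [
    "https://recipes.example/pasta",
    "https://recipes.example/soup",
    "https://news.example/story",
]
print(amounts_owed(log, Decimal("0.001")))
```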

Why collective action matters now

Structural pressures on both publishing and AI economics make this a pivotal moment for negotiating leverage. Publishers face declining search traffic and advertising revenue, while AI companies confront mounting legal pressure over the legitimacy of their training data.

The timing suggests publishers recognize that their negotiating position may weaken. Advanced AI models are getting better at generating synthetic content, potentially reducing their dependence on fresh web material. But there's a parallel risk: as AI systems increasingly answer user questions directly, traditional publishers lose the search traffic that funds their operations. Together, these pressures make licensing deals a strategic necessity rather than an optional extra.

The music industry parallel provides both template and cautionary tale. ASCAP and BMI succeeded because radio stations and venues needed music to operate, creating natural leverage for collective licensing. Web content presents different dynamics—AI companies can potentially train on older datasets, synthetic data, or content from regions with different copyright frameworks.

Network effects will likely determine RSL's trajectory. If major sites consistently implement the standard, the cost to AI companies of ignoring it, in lost access and legal exposure, could exceed the cost of licensing. But fragmented adoption might push AI companies toward prioritizing content from non-participating publishers.

The Cloudflare precedent offers one data point. The company launched its own pay-per-crawl system in July, blocking AI crawlers by default across roughly 20% of the internet. That initiative demonstrates industry willingness to experiment with new economic models, though adoption challenges persist.

The systemic implications

RSL represents three potential shifts in internet economics and governance. First, it could push the web away from its advertising foundation toward direct licensing relationships. Quality journalism might become more viable under subscription and licensing models, though this also concentrates power among publishers who can negotiate effectively.

Second, the protocol tests whether collective action can succeed where individual negotiations have largely failed. Publishers joining RSL spread legal enforcement costs while potentially strengthening bargaining positions—but only if AI companies view the collective as truly indispensable.

Third, RSL's technical implementation might influence broader internet infrastructure. Machine-readable licensing terms embedded in standard web protocols could extend beyond AI training to cover other automated content uses, from social media aggregation to academic research.

Industry-led standards like RSL often become templates for government regulation. Today's voluntary protocol could evolve into tomorrow's legal requirements for AI companies operating in key markets, particularly as lawmakers seek frameworks for emerging technology governance.

Why this matters:

• Revenue model transformation: The web's shift from advertising to licensing could strengthen quality content creators while changing who controls information distribution—potentially favoring established publishers over emerging voices.

• Regulatory blueprint: Industry standards frequently shape government rules, so RSL's enforcement mechanisms and technical specifications may become the foundation for how regulators approach AI company obligations globally.

❓ Frequently Asked Questions

Q: How does RSL actually work on a website?

A: Publishers add machine-readable licensing terms directly to their robots.txt files. For example, they can specify "pay $0.01 per crawl" or "pay $0.001 per inference." AI companies' crawlers would need to read these terms and agree to payment before accessing content.
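As a rough illustration of how a crawler might discover such terms, the Python sketch below scans a robots.txt body for a licensing line. The `License:` directive name and the sample URL are assumptions made for this example; the published RSL specification is the authority on the actual syntax.

```python
def find_license_lines(robots_txt: str) -> list[str]:
    """Return the values of any 'License:' lines in a robots.txt body."""
    values = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and whitespace
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "license":
            values.append(value.strip())
    return values


# Hypothetical robots.txt content; the License line is an assumed format.
sample = """\
User-agent: *
Allow: /
License: https://publisher.example/license.xml  # hypothetical terms file
"""
print(find_license_lines(sample))
```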

Q: What happens if AI companies just ignore RSL like they do robots.txt?

A: RSL relies on voluntary compliance and technical enforcement. Fastly can block non-compliant crawlers for sites using its services, but coverage isn't universal. The RSL Collective plans legal enforcement similar to music rights organizations, spreading lawsuit costs across all members.

Q: How much money could publishers realistically make from RSL?

A: That depends on adoption and pricing models. Reddit's existing deal with Google pays an estimated $60 million annually. For smaller sites, even modest per-inference fees could add up: if an AI references a recipe site 1 million times monthly at $0.001 per use, that's $1,000 a month, or $12,000 annually.
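The recipe-site arithmetic can be checked with a short Python sketch. The function name is hypothetical, and the calculation assumes the reference volume and fee stay constant for all twelve months.

```python
from decimal import Decimal


def annual_revenue(monthly_references: int, fee_per_reference: Decimal) -> Decimal:
    """Yearly revenue if volume and fee are the same every month."""
    return monthly_references * fee_per_reference * 12


# 1 million references a month at $0.001 each, as in the example above.
print(annual_revenue(1_000_000, Decimal("0.001")))
```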

Q: Why would AI companies agree to pay when they can use free datasets?

A: Legal pressure is mounting. Anthropic just paid $1.5 billion to settle copyright claims, and 40+ lawsuits are pending. Fresh, high-quality content also performs better than older training data, making licensed content potentially worth the cost for competitive AI models.

Q: How is RSL different from publishers cutting individual deals with AI companies?

A: RSL creates standardized pricing and collective bargaining power. Instead of each publisher negotiating separately, the RSL Collective aims to represent potentially millions of sites at once. It's modeled after ASCAP, which collects music royalties on behalf of 800,000+ songwriters so that they don't have to negotiate individually.