The standout feature? These models can switch between careful reasoning and quick responses. For complex problems, they take time to think step by step; for simple questions, they answer immediately. Users control this "thinking mode" with simple commands, such as adding `/think` or `/no_think` to a prompt.
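
Qwen's documentation describes `/think` and `/no_think` as soft switches appended to a user message. In thinking mode the model wraps its reasoning in `<think>...</think>` tags before the final answer; in quick mode that block comes back empty. The sketch below is a minimal illustration under those assumptions. `build_user_message` and `split_response` are hypothetical helpers, not part of any Qwen library, and the sample response is invented.

```python
import re
from typing import Dict, Tuple

# Soft switches described in Qwen3's documentation; appended to the user turn.
THINK_SWITCH = "/think"
NO_THINK_SWITCH = "/no_think"

# Matches the reasoning block "<think>...</think>"; it may be empty in quick mode.
_THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def build_user_message(prompt: str, think: bool) -> Dict[str, str]:
    """Hypothetical helper: request careful (think=True) or quick (think=False) answers."""
    switch = THINK_SWITCH if think else NO_THINK_SWITCH
    return {"role": "user", "content": f"{prompt} {switch}"}


def split_response(raw: str) -> Tuple[str, str]:
    """Hypothetical helper: separate <think>...</think> reasoning from the final answer."""
    match = _THINK_BLOCK.search(raw)
    if match is None:
        # No reasoning block: treat the whole text as the answer.
        return "", raw.strip()
    reasoning = match.group(1).strip()
    answer = (raw[: match.start()] + raw[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    print(build_user_message("What is 17 * 24?", think=True))
    # Invented response text in the tagged format, for illustration only.
    sample = "<think>\n17 * 24 = 340 + 68 = 408\n</think>\n\n408"
    print(split_response(sample))  # ('17 * 24 = 340 + 68 = 408', '408')
```

When running the open weights through Hugging Face Transformers, Qwen3's chat template also accepts an `enable_thinking` argument to `apply_chat_template`, which acts as a harder on/off switch than the in-prompt tags.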

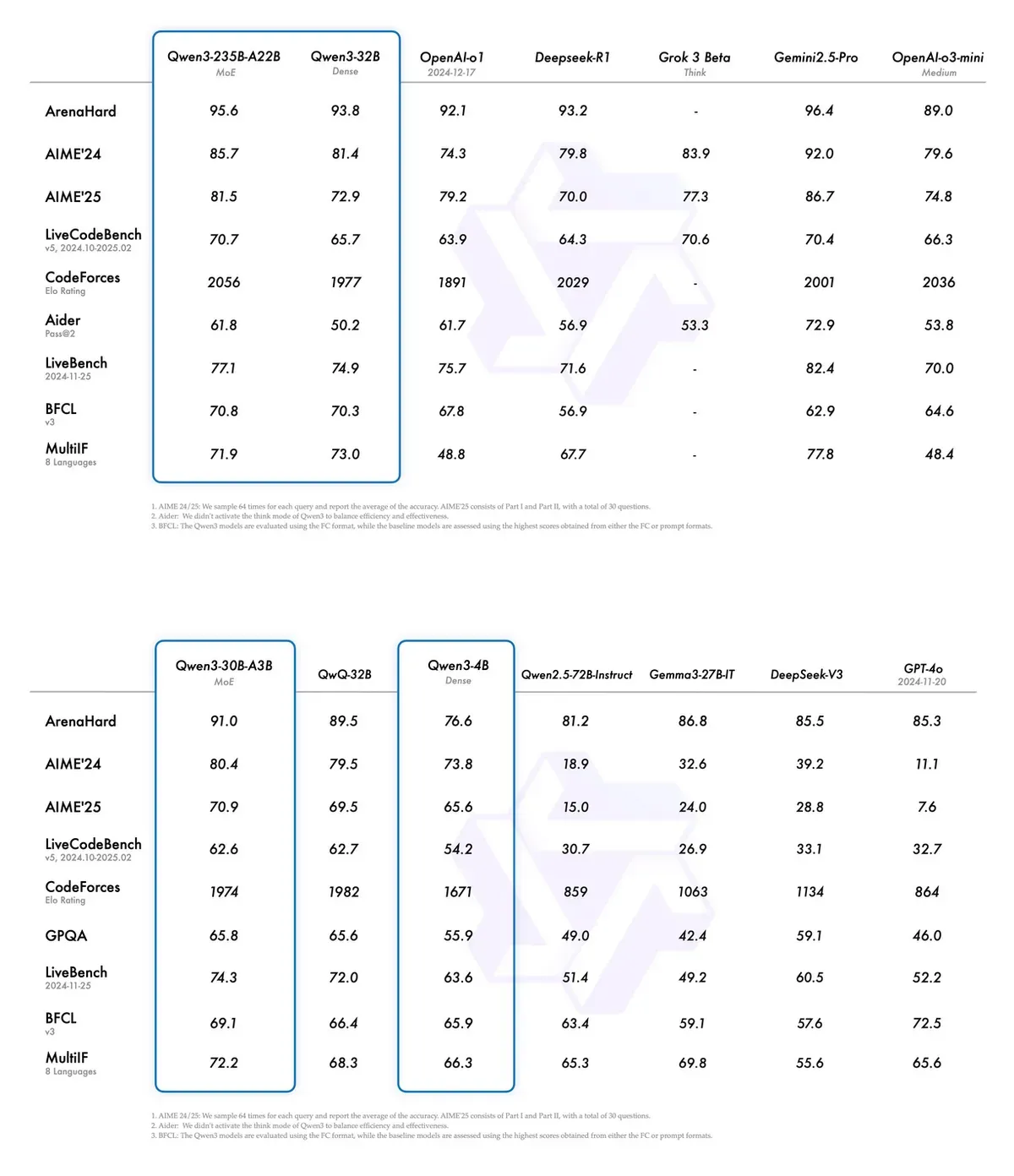

The largest model, Qwen3-235B-A22B, beats OpenAI's o3-mini and Google's Gemini 2.5 Pro on Codeforces, a programming-contest platform. It also outscores o3-mini on some math and reasoning benchmarks. However, this top model isn't publicly available yet.

Smart Architecture Cuts Computing Costs

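Two of the models use a clever trick called Mixture of Experts (MoE). Instead of running the whole network for every token, a router sends each token to a small set of specialized "expert" sub-networks, so only a fraction of the parameters do work at any step. The names reflect this: the smaller MoE model, Qwen3-30B-A3B, has about 30 billion parameters in total but activates only about 3 billion per token. Even so, it matches models with roughly ten times as many active parameters.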
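
The routing idea can be sketched as generic top-k gating: score every expert, keep the best k, and mix only their outputs. This is a toy illustration, not Qwen3's implementation; the dot-product router, `k=2`, the four scaling "experts" and every function name are assumptions chosen for readability. In real MoE transformers the experts are feed-forward layers inside each block, and routing happens per token at each such layer.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    """Send one token to its top-k experts and mix their outputs.

    router[i] holds the (toy) router weights that score expert i.
    """
    # 1. Score every expert with a dot product between the token and its router weights.
    logits = [sum(w * x for w, x in zip(weights, token)) for weights in router]
    # 2. Keep only the k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
    gates = softmax([logits[i] for i in chosen])
    # 4. Run only the chosen experts; the others are skipped entirely, which saves compute.
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out


if __name__ == "__main__":
    ran = []

    def make_expert(idx: int, scale: float) -> Expert:
        # Toy expert: scales its input and records that it was called.
        def expert(x: Vector) -> Vector:
            ran.append(idx)
            return [scale * v for v in x]
        return expert

    experts = [make_expert(i, float(i + 1)) for i in range(4)]
    router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    print(moe_forward([2.0, 1.0], router, experts, k=2))
    print("experts run:", sorted(ran))  # experts run: [0, 1]
```

With four experts and `k=2`, only half of the expert computation runs for this token. The same principle, at far larger scale, is why an MoE model's active parameter count sits well below its total.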

Training these models took serious computing power. Alibaba pretrained them on about 36 trillion tokens, roughly double the 18 trillion used for Qwen2.5. Training ran in three stages: first basic language skills, then knowledge-heavy material such as science and coding, and finally longer texts to extend how much context the models can handle.

Speaking in 119 Tongues

The models support 119 languages and dialects, from widely used ones like English and Mandarin to regional ones like Lombard and Friulian. This broad language support makes them useful for global applications.

Developers can download most models now from Hugging Face and GitHub under the open Apache 2.0 license. Cloud providers like Fireworks AI and Hyperbolic also offer them. For local use, tools like Ollama and LM Studio work well.
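
For local use through Ollama, a chat request is a JSON POST to its REST API. The sketch below assumes an Ollama server on its default port (11434) and a Qwen3 model already pulled under the tag `qwen3`; both are assumptions about your setup, and `build_chat_request` and `chat` are hypothetical helper names. It uses only the Python standard library.

```python
import json
import urllib.request
from typing import Dict, List

# Assumptions: a local Ollama server on its default port, and a pulled model tagged "qwen3".
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL = "qwen3"  # hypothetical choice; use whichever Qwen3 tag you pulled


def build_chat_request(messages: List[Dict[str, str]], model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming POST to Ollama's /api/chat endpoint."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(messages: List[Dict[str, str]], model: str = MODEL) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(messages, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["message"]["content"]
```

With a server running, `chat([{"role": "user", "content": "Say hello in Friulian. /no_think"}])` would return the model's reply as a string. Separating request construction from sending keeps the payload easy to inspect without a live server.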

AI Teaching AI

The training process got creative. Alibaba used earlier specialist models, Qwen2.5-Math and Qwen2.5-Coder, to generate practice problems in math and coding, essentially having AI teach AI. It also used a vision-language model, Qwen2.5-VL, to pull text from documents such as PDFs, expanding the training data.

The results show clear progress. Smaller Qwen3 models match larger predecessors while using fewer resources. Qwen3-4B, for example, rivals the much larger Qwen2.5-72B model.

Qwen3-32B, the largest publicly available version, holds its own against both open and closed AI models. It beats OpenAI's o1 on several tests, including coding benchmarks. This fits a growing trend: open models keeping pace with closed-source leaders like OpenAI and Anthropic.

US-China AI Race Heats Up

The release comes amid rising competition between Chinese and American AI labs. U.S. policymakers have restricted chip sales to China, aiming to limit Chinese companies' ability to train large AI models. Yet models like Qwen3 show Chinese AI development continues to advance.

Baseten CEO Tuhin Srivastava sees this as part of a broader trend: companies now build their own AI tools while also buying from established providers like OpenAI. This hybrid approach shapes how businesses adopt AI technology.

Each Qwen3 model excels at specific tasks. Some focus on coding, others on math or general knowledge. The family's range lets users pick the right tool for their needs without wasting resources on oversized models.

The ability to toggle between quick and thoughtful modes marks a practical advance. Just as humans adjust their thinking based on complexity, these models can now match their approach to the task.

Why this matters:

- Open models now rival closed-source leaders while using fewer resources. This could make advanced AI more accessible and cost-effective.

- The switch between quick and careful thinking modes gives users more control. They can balance speed and accuracy based on their needs, much like human decision-making.
