Samsung's TM Roh stood under the bright wash of CES stage lights Sunday evening and declared the company's mission: "AI experiences everywhere, for everyone." Double Gemini-powered devices from 400 million to 800 million this year. Put AI in phones. Put it in TVs. Refrigerators, washing machines, everything. Create what Samsung calls "one seamless, unified AI experience."

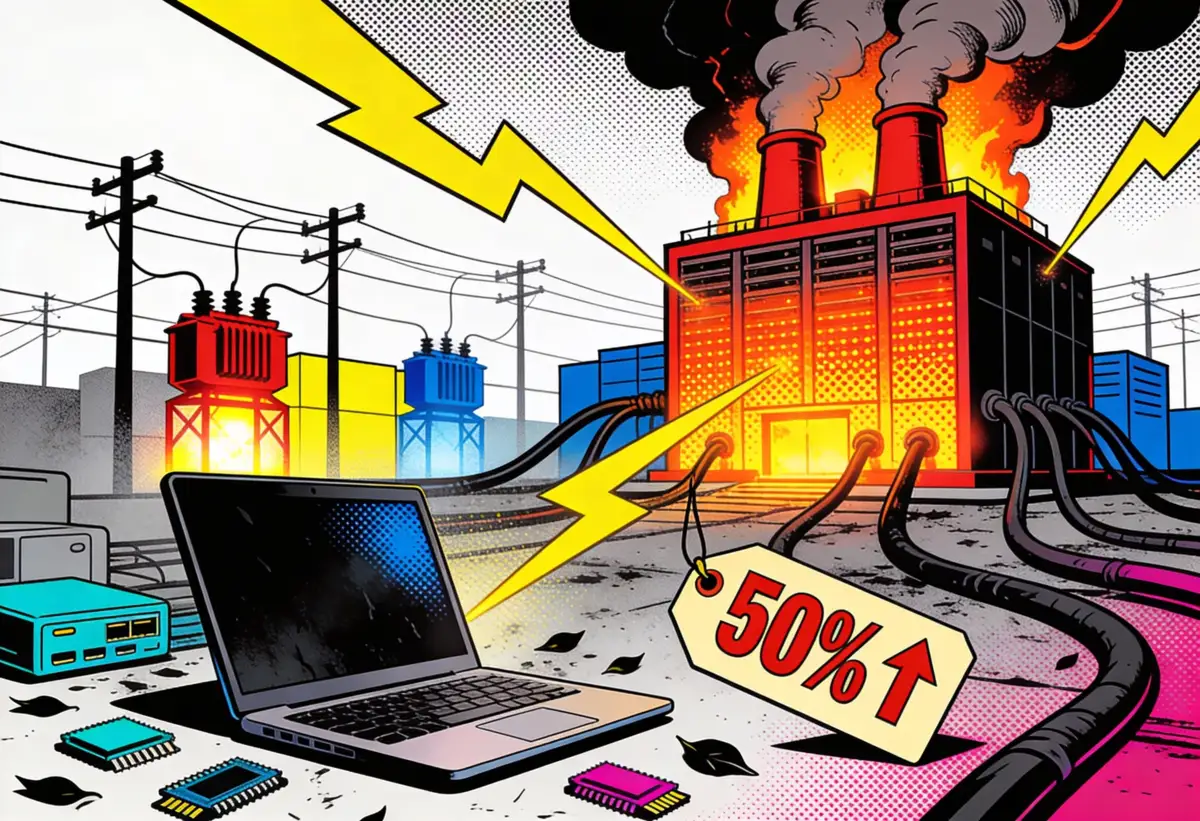

The same day, the same executive told Reuters that price increases across Samsung's product lines were "inevitable" due to memory chip shortages. DRAM contract prices jumped 50% in Q1 2026. No company is immune. The crisis affects everything from smartphones to home appliances.

Samsung announced an ambitious AI expansion the same week it acknowledged it can't afford to execute on current economics. That tension defines what's actually happening here.

The Breakdown

• Samsung targets 800 million Gemini devices in 2026, but DRAM prices jumped 50% and shortages are projected to last through 2027

• Only six Galaxy models currently support on-device AI; scaling requires either expensive chips during a shortage or a shift to cloud processing

• The HSB insurance partnership monetizes appliance data as memory costs squeeze hardware margins

• Free $120 storage upgrades may be eliminated; Samsung's chip division profits while its mobile division faces a squeeze

The Vision Presented at CES

Roh's presentation followed a familiar template. Refrigerators generate meal plans from your blood glucose readings. Washing machines optimize detergent automatically. TVs can mute the commentators during sports broadcasts. A "Bespoke AI Jet Bot Steamer" removes wrinkles.

The company ships 500 million devices annually. That scale creates opportunities for data collection and ecosystem lock-in that smaller players can't match. Samsung positions this as wall-to-wall AI integration across daily life—what it calls being "your companion to AI living."

Galaxy AI brand awareness climbed from 30% to 80% in one year, according to Samsung's numbers. The company believes AI features have become a purchase driver, particularly for search, image editing, and translation functions.

The CES presentation included a partnership with Hartford Steam Boiler (HSB), Munich Re's insurance unit. The pitch: personalized home insurance based on appliance data. Leak detection. Frozen pipe monitoring. Preventative maintenance alerts. All feeding SmartThings data to insurers who adjust your premiums.

Sign up for Implicator.ai

Strategic AI news from San Francisco. Clear reporting on power, money, and policy. Delivered daily at 6am PST.

No spam. Unsubscribe anytime.

That's how Samsung plans to monetize AI. Not better appliances. Data sharing with third parties. Your fridge monitors inventory, washer tracks cycles, insurers get the telemetry. The financial incentive runs to HSB, not to you.

The Hardware Reality

Memory shortages aren't new. The 2020-2022 pandemic created supply problems too. This crisis is different. Hyperscalers such as Alphabet, Meta, and Oracle are buying high-bandwidth memory for data centers, and consumer electronics lose the bidding war. Whales inhaling krill. Smaller fish starve.

Samsung, SK hynix, and Micron make most of the world's DRAM. Micron killed its consumer business in December 2025 to prioritize data center customers. Remaining capacity goes to the highest bidder. Your next phone loses to someone's AI training cluster. Those facilities run hot and loud, racks of servers burning electricity, and they absorb chips faster than fabs can produce them.

DDR5 RAM prices more than doubled. DDR4 doubled. NAND flash storage climbed 50-75% according to Phison's CEO. These aren't temporary spikes. Shortages are projected through 2027, possibly beyond, as long as data center buildout continues at current pace.

Samsung needs chips for 800 million Gemini devices. On-device AI requires specialized processors. Exynos 2400e. Snapdragon 8 Gen 3. Both with AI accelerators. Six Galaxy models run Gemini Nano on-device currently. Six. Scale to 800 million? Either expensive chips go into mid-range phones during a shortage, or everything gets pushed to the cloud, with the latency problems and privacy concerns that brings.

Cloud-based AI also means Samsung can't deliver the fluid experience Roh promised. Features that run on remote servers add noticeable delays and require sending data off the device, where it may feed model training. Users can end up with slower performance while supplying training data for free.

What Gets Cut

Samsung is evaluating how to navigate chip shortages without tanking sales. One option: eliminate the free storage upgrade pre-order perk for the Galaxy S26 series launching this month.

Bought a Galaxy S24 or S25? You probably got free storage. Samsung bumped 256GB to 512GB without charging the $120 difference. The double storage program worked: it pushed early sales and created preorder urgency. It also cut Samsung's margins. That perk may now be over.

Korean media reports Samsung is considering shelving this benefit. The company hasn't confirmed, but the $120 per unit adds up when you're already paying 50% more for DRAM. Cut the free upgrade, maintain launch prices, preserve margins.

Or raise prices outright. Roh didn't rule that out either. Some impact is "inevitable," he told Reuters. The world's number one TV maker is "working with partners on longer term strategies to minimize the impact," but minimizing isn't eliminating.

Here's where it gets strange: Samsung's semiconductor division is thriving on this shortage. Higher memory prices mean fatter margins on chips Samsung sells to hyperscalers. The chip arm is celebrating record quarters while the mobile division sweats over its bill of materials. It's a weird fratricidal dynamic—one side of the company profits from the crisis squeezing the other side.

The Developer Problem

Samsung pulled its Neural Software Development Kit. There's no public program for third-party developers to build on Samsung's AI infrastructure comparable to Google's AI Edge SDK (which itself remains experimental). Google granted early access to Adobe and Grammarly. Samsung hasn't announced equivalent partnerships.

For the 800 million device target to mean anything beyond marketing, developers need tools to create AI-powered features consumers actually want. Right now, those tools don't exist in usable form. Infrastructure exists from past Exynos NPU toolchains, but API access and timing remain unclear. Nobody builds for a ghost platform.

Samsung can't build every AI feature consumers might find useful. The company envisions AI everywhere, but "everywhere" requires an ecosystem of third-party apps that exploit on-device processing. Without developer tools, that ecosystem is a hallucination. Samsung ships phones with AI chips that run mostly first-party features.

The alternative is cloud processing through Google's Gemini, which puts Google in control of the experience layer. Samsung becomes a hardware vendor for someone else's AI platform—exactly what it's trying to avoid by maintaining Bixby alongside Gemini. But running two assistants creates the UI conflicts that already plague Samsung phones.

The Insurance Angle

The HSB partnership deserves more attention than it received at CES. When you can't make money on the hardware because memory costs are eating margins, you monetize the user instead. Samsung positions connected appliances as risk reduction for insurers. Leak detection prevents flood damage. Usage monitoring identifies maintenance needs before breakdowns. Smart sensors create data streams that let insurers price risk more accurately.

For consumers, the value proposition is lower premiums in exchange for comprehensive home monitoring. Everything connects through SmartThings. Your refrigerator shares inventory data. Your washer reports cycle patterns. Even basic appliances need connectivity chips to participate.

HSB's pilot program is expanding beyond initial tests to more U.S. states this year. The model applies industrial predictive maintenance to residential settings. Catch small problems before they become insurance claims. Share those savings with homeowners who participate.

What's unstated: the data collection required to make this work. Insurers need detailed usage information to assess risk and validate claims. That data flows from Samsung devices through SmartThings to HSB's systems. The discount depends entirely on your willingness to grant that access.

This is AI monetization through surveillance infrastructure. Not surveillance in the sense of malicious monitoring, but in the sense of comprehensive data collection as a prerequisite for service. You get cheaper insurance. HSB gets risk data. Samsung gets ecosystem lock-in because switching appliance brands means losing your insurance discount.

The business model makes sense. Whether consumers want it remains unclear.

What Actually Ships

Samsung's 800 million target assumes a market that doesn't exist. Smartphone shipments are on track to shrink in 2026. Memory shortages push them down. Higher prices push them down. IDC projects contraction. Counterpoint projects contraction. People delay upgrades.

Roh said AI will become "much more widespread" within six to twelve months. But widespread assumes affordable. If Galaxy S26 prices rise and preorder perks disappear, fewer people buy. If TV prices climb due to memory costs, replacement cycles extend. If appliances cost more because every device needs an AI processor, consumers stick with dumb alternatives that just work.

This creates an opening in the market for dumb appliances that do what they're supposed to do reliably. Every time. For years. No Samsung sensors freaking out. No Bixby layered on top of Gemini. No insurance data sharing requirements. Just a washing machine that washes clothes. The door is wide open for a contrarian competitor.

Samsung's scale creates genuine advantages in AI development—500 million annual device shipments generate massive training datasets. But scale doesn't solve chip shortages or make expensive features affordable. The company can build AI-powered everything. It can't necessarily sell it at prices consumers will pay.

The CES presentation showcased vision. The Reuters interview acknowledged reality. The gap between them is where Samsung actually operates—trying to execute ambitious product plans while navigating supply constraints that make those plans economically questionable.

Roh's mission statement promises AI experiences everywhere, for everyone. The footnote, delivered in a separate interview, adds: at higher prices, with cut benefits, subject to chip availability. That isn't a roadmap. It's triage.

❓ Frequently Asked Questions

Q: Why can't Samsung just make more memory chips to solve the shortage?

A: Building new chip factories (fabs) takes 2-3 years and costs $15-20 billion. Even if Samsung started construction today, new capacity wouldn't arrive until 2028-2029. Current fabs are running at maximum capacity, and the production lines that exist are already allocated to hyperscalers willing to pay premium prices for AI data center chips.

Q: What's the actual difference between on-device AI and cloud AI for users?

A: On-device AI runs locally on your phone's processor, works offline, and keeps data private. Cloud AI sends your request to remote servers, requires an internet connection, and typically takes 200-500 milliseconds longer. Cloud processing can also mean your data is used to train AI models, depending on the provider's policies, while on-device processing keeps it local. Sending requests over the network can also add battery drain.

Q: How much does the HSB insurance program actually save you?

A: Samsung hasn't disclosed specific discount amounts from the HSB partnership. Typical smart home insurance programs offer 5-15% premium reductions, which would save $60-180 annually on a $1,200 homeowner's policy. However, to maintain the discount you would likely need to keep appliances connected to SmartThings and share usage data continuously with the insurer.
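The savings estimate is simple percentage arithmetic. A minimal sketch, using the illustrative $1,200 premium and the 5-15% range cited for typical smart home programs; these are not disclosed HSB terms, and the function name is hypothetical.

```python
# Illustrative inputs only: a $1,200 annual premium and the 5-15% discount range
# cited for typical smart home programs. HSB has not disclosed its terms.
PREMIUM_USD = 1_200.0
DISCOUNT_RANGE = (0.05, 0.15)  # fractional discounts: 0.05 means 5%


def annual_savings(premium_usd: float, discount_rate: float) -> float:
    """Return the yearly dollar savings for a premium and a fractional discount."""
    return premium_usd * discount_rate


# Compute the low and high ends of the savings range.
low, high = (annual_savings(PREMIUM_USD, rate) for rate in DISCOUNT_RANGE)
print(f"Estimated savings: ${low:.0f} to ${high:.0f} per year")
```

Swapping in your own premium shows how quickly the dollar value of a percentage discount shrinks on cheaper policies.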

Q: Why do AI data centers need so much more memory than smartphones?

A: Training large AI models requires holding and processing billions of parameters at once. A single data-center AI accelerator carries 80-96GB of high-bandwidth memory (HBM), compared to 8-12GB of RAM in a flagship phone, and training servers often hold eight accelerators each. One data center can house 10,000+ servers, which at that density adds up to roughly 6.4-7.7 petabytes of HBM, as much memory as roughly 530,000 to 960,000 flagship phones. Training runs 24/7 for weeks or months, keeping that hardware fully occupied.
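The memory comparison is back-of-envelope multiplication. This sketch treats the 80-96GB figure as HBM per accelerator and assumes eight accelerators per server, a common configuration but an assumption, not a figure from the article. It compares raw capacity only, not how much DRAM production capacity each product actually consumes. All names are hypothetical.

```python
# Back-of-envelope comparison of data-center HBM vs. flagship phone RAM.
HBM_PER_ACCELERATOR_GB = (80, 96)  # range quoted in the FAQ
PHONE_RAM_GB = (8, 12)             # flagship phone range quoted in the FAQ
SERVERS = 10_000                   # "10,000+ servers" per data center
ACCELERATORS_PER_SERVER = 8        # ASSUMPTION: common configuration, not from the article


def data_center_hbm_gb(hbm_per_accel_gb: float, servers: int, accels_per_server: int) -> float:
    """Total HBM in one data center, in gigabytes."""
    return hbm_per_accel_gb * servers * accels_per_server


def phone_equivalents(total_gb: float, phone_gb: float) -> float:
    """How many phones' worth of RAM a given capacity represents."""
    return total_gb / phone_gb


low_total = data_center_hbm_gb(HBM_PER_ACCELERATOR_GB[0], SERVERS, ACCELERATORS_PER_SERVER)
high_total = data_center_hbm_gb(HBM_PER_ACCELERATOR_GB[1], SERVERS, ACCELERATORS_PER_SERVER)

# Fewest phones: smallest data-center total divided by the largest phone RAM.
fewest = phone_equivalents(low_total, PHONE_RAM_GB[1])
# Most phones: largest data-center total divided by the smallest phone RAM.
most = phone_equivalents(high_total, PHONE_RAM_GB[0])

print(f"HBM: {low_total / 1e6:.2f}-{high_total / 1e6:.2f} PB")
print(f"Equivalent to {fewest:,.0f}-{most:,.0f} flagship phones")
```

Setting `ACCELERATORS_PER_SERVER` to 1 models a reading of the figure as memory per server; the phone-equivalent count scales linearly with that parameter.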

Q: Will Apple and Google face the same chip shortage problems?

A: Yes. Apple already warned of supply constraints for iPhone 17 models. Google's Pixel line runs on Google's own Tensor processors but draws on the same constrained memory supply. All smartphone makers compete for the same limited DRAM supply from Samsung, SK hynix, and Micron. The difference is that Apple's cash reserves let it outbid competitors, while smaller manufacturers may cut production entirely.