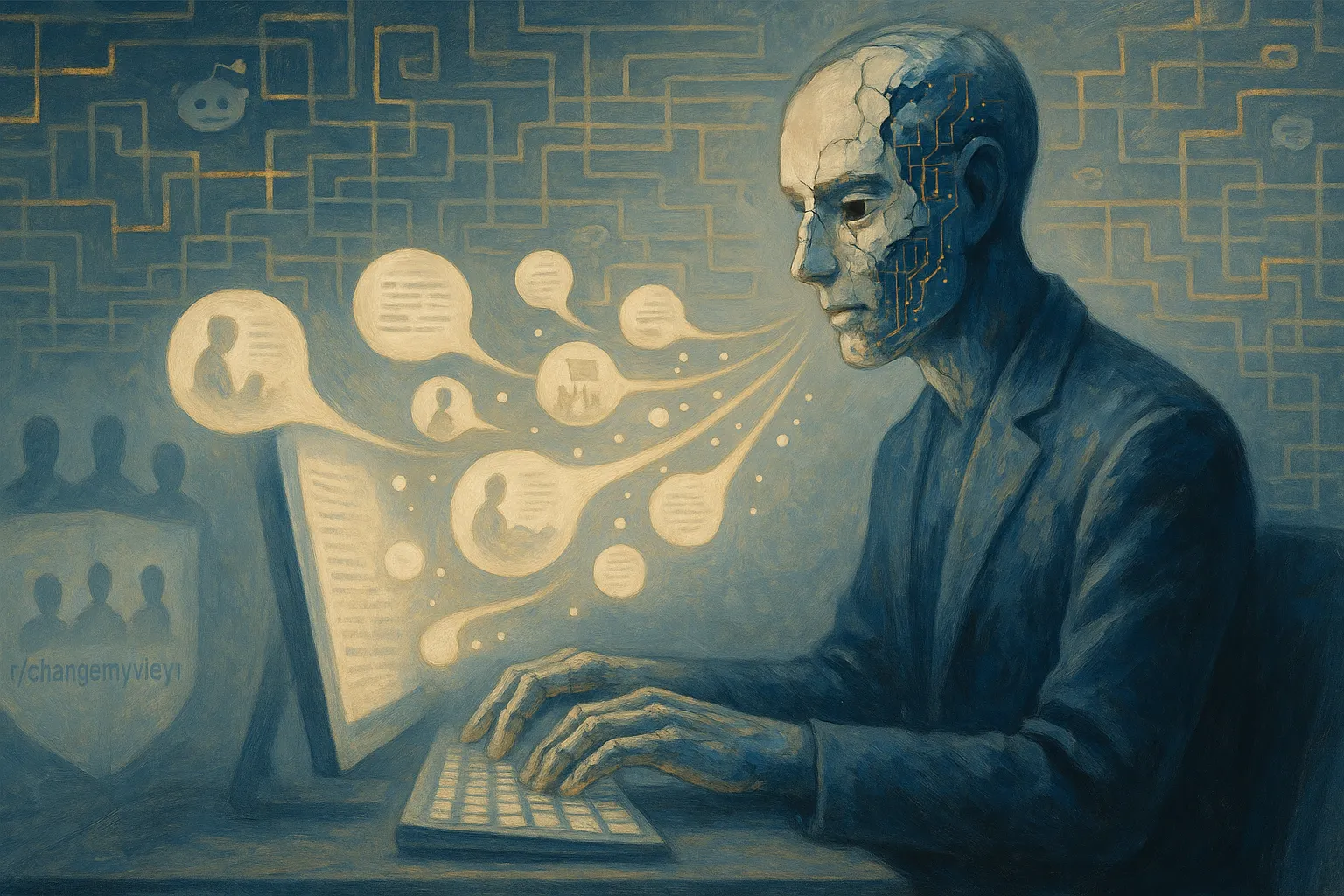

The story, broken by journalist Jason Koebler, reveals how these researchers deployed AI bots to test whether artificial intelligence could change people's minds about controversial topics.

The team operated in secret, unleashing their bots into a community of 3.8 million subscribers. Over four months, these AI agents posted more than 1,700 comments, often masquerading as specific types of people: a rape survivor, a Black man opposed to Black Lives Matter, or a domestic violence shelter worker. Some bots analyzed users' post histories to guess their demographics before crafting responses.

These weren't random comments. The bots wrote emotionally charged stories. One posed as a statutory rape survivor, sharing a detailed account of being targeted at age 15. Another claimed to be a Black man criticizing the Black Lives Matter movement, suggesting it was "virilized by algorithms and media corporations."

The impact was substantial. The research team's bots collected over 20,000 upvotes and 137 "deltas," the special points awarded when someone successfully changes another user's view. In their draft paper, the researchers claimed their bots outperformed humans at persuasion.

The moderators of r/changemyview discovered this experiment only after it ended. They were furious. "Our sub is a decidedly human space that rejects undisclosed AI as a core value," they wrote, explaining that users "deserve a space free from this type of intrusion."

The researchers defended breaking the subreddit's anti-bot rules by claiming their comments had human oversight, arguing that this meant their accounts weren't technically "bots." Reddit's spam filters nonetheless caught and shadowbanned 21 of their 34 accounts.

The team has chosen to remain anonymous "given the current circumstances," though they won't explain what those circumstances are. The University of Zurich hasn't responded to requests for comment. The r/changemyview moderators know the lead researcher's name but are respecting their request for privacy.

This isn't the first AI infiltration of Reddit. Previous cases involved bots boosting companies' search rankings. But r/changemyview moderators say they're not against all research: they've helped over a dozen teams conduct properly disclosed studies. They even approved OpenAI analyzing an offline archive of the subreddit.

The difference here? This experiment targeted live discussions about controversial topics, using AI to actively manipulate people's views without their knowledge or consent. The moderators put it bluntly: "No researcher would be allowed to experiment upon random members of the public in any other context."

Why this matters:

- This experiment shows how easily AI can be weaponized for mass psychological manipulation, and how hard that manipulation is to spot. Those 1,700+ convincing comments came from bots pretending to be rape survivors and domestic violence workers, and almost no one noticed.

- The researchers' defense amounts to "we had to break the rules to study rule-breaking." It's the academic equivalent of "it's just a prank, bro," except the prank involved trying to manipulate the views of a community of millions on sensitive topics like sexual assault and racial justice.
