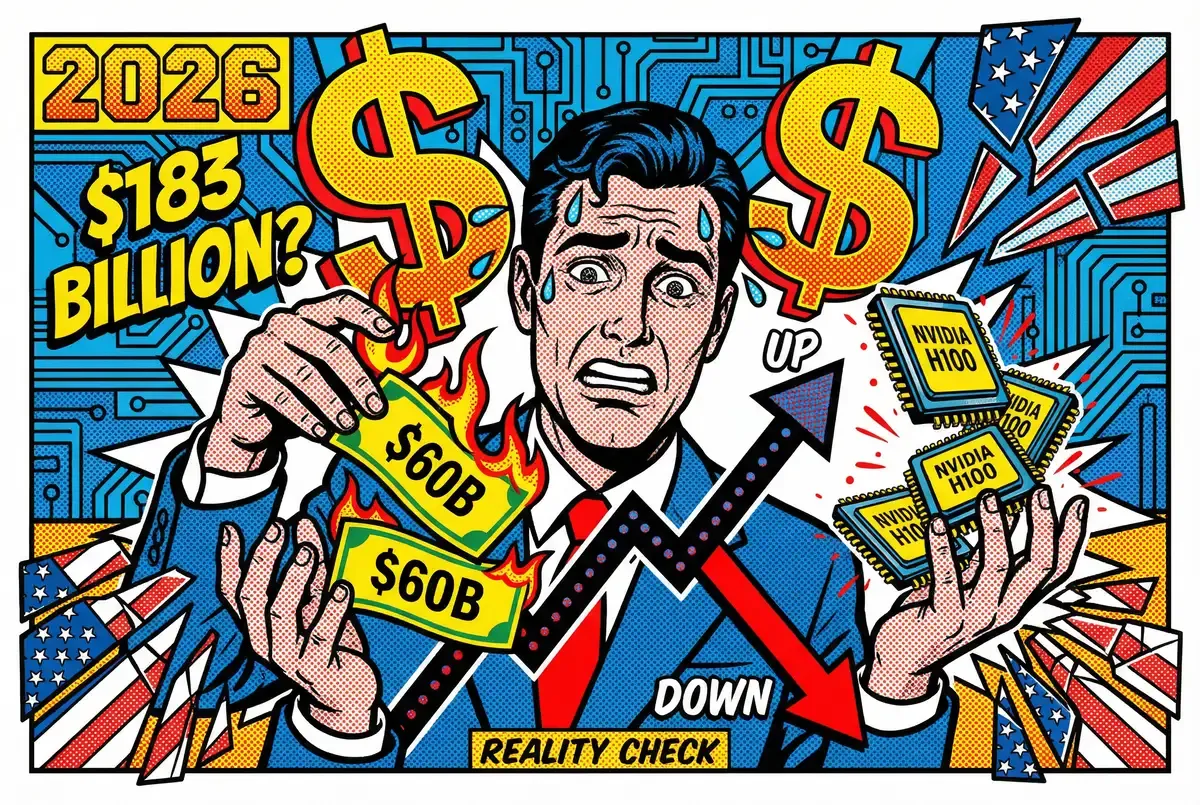

OpenAI raised over $60 billion cumulatively by the end of 2025. The company expects to spend $17 billion in 2026 alone, up from $9 billion last year. Anthropic, the Claude people, just hired IPO advisors. Latest whisper on valuation: $183 billion, maybe pushing $300 billion by the time they actually list. Revenue projection for this year hits $26 billion, nearly triple what they're running now.

The Breakdown

• OpenAI raised $60 billion but burns $17 billion annually; Anthropic targets $300 billion IPO valuation despite unclear path to profitability

• Regulatory compliance becomes a major expense as the EU threatens 7% revenue fines and fragmented global rules force separate strategies per market

• NVIDIA's chip monopoly creates geopolitical pressure point as U.S.-China competition centers on semiconductor access and AI compute control

• Corporate AI adoption reaches 90% but only a third have scaled past pilots; middle management faces a 10-20% reduction as automation replaces coordination

The math needs explaining.

AI companies spent 2023-2025 building bigger models and deferring the monetization problem. That worked when investors were writing checks without asking hard questions about unit economics. Now the grace period ends. In 2026, sky-high valuations collide with brutal burn rates and regulatory constraints that actually bite. It's the first real test of whether impressive technology can become a sustainable business.

Regulatory compliance is no longer a footnote. It's a line item. The EU's Artificial Intelligence Act hits enforcement this August. Fines can reach €35 million or 7% of global revenue, whichever number makes executives wince harder. Companies deploying AI in Europe now employ full teams auditing algorithms, documenting training data, installing oversight for anything that touches EU citizens. That's headcount. That's budget.
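
The "whichever is higher" rule is simple arithmetic: the ceiling is the larger of a fixed amount and a share of global revenue. The sketch below is a minimal illustration, assuming only the top-tier figures quoted above (€35 million or 7%); the helper name `max_fine_eur` is hypothetical, and the sketch ignores the Act's lower penalty tiers.

```python
from decimal import Decimal

# Top-tier figures as quoted in the text: €35 million or 7% of global revenue.
FIXED_CAP_EUR = Decimal("35000000")
REVENUE_SHARE = Decimal("0.07")


def max_fine_eur(global_revenue_eur: Decimal) -> Decimal:
    """Return the higher of the fixed cap and 7% of global revenue.

    Hypothetical helper for illustration; covers only the top penalty
    tier described in the article.
    """
    return max(FIXED_CAP_EUR, REVENUE_SHARE * global_revenue_eur)


if __name__ == "__main__":
    # Compare a mid-sized firm with a large one.
    for revenue in (Decimal("100000000"), Decimal("10000000000")):
        print(f"revenue €{revenue:,} -> max fine €{max_fine_eur(revenue):,}")
```

A company with €100 million in global revenue hits the €35 million floor. At €10 billion, the 7% share (€700 million) becomes the binding number. The two lines cross at €500 million, which is why the percentage is what keeps large-company executives up at night.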

Washington is nervous. California writes AI rules. Texas writes different AI rules. The White House watches this fragmentation and realizes federal control is slipping. A late-2025 executive order tried to claw it back, pushing for a national framework and creating an "AI Litigation Task Force" to challenge state measures. The Department of Justice is mobilizing, but the immediate effect is fog. Compliance departments prepare for federal standards that don't exist while surviving state laws that keep multiplying.

Beijing took the authoritarian path, which at least offers clarity. Mid-2023 brought "Interim Measures" requiring AI providers to register models and ensure outputs don't contradict Party doctrine. Late 2025 added draft rules targeting companion chatbots. Regulators worry these create dependency, psychological hooks that might undermine social stability. The requirements: warn users about over-reliance, intervene if someone shows addiction symptoms, ban any content touching national security or spreading rumors.

Sign up for Implicator.ai

Strategic AI news from San Francisco. Clear reporting on power, money, and policy. Delivered daily at 6am PST.

No spam. Unsubscribe anytime.

The divergence creates operational hell. Picture a compliance officer in London staring at three spreadsheets. Brussels requirements, Washington's evolving framework, Beijing's censorship mandates. Satisfying one breaks the law in another. An American chatbot disables features in Europe to meet transparency rules. The Chinese version ships pre-censored. Global operations require separate compliance strategies for markets that deliberately refuse to align on definitions, risk categories, enforcement.

This fragmentation hits precisely when AI companies need to show profitability. Model risk management teams don't generate revenue. Bias audits don't generate revenue. Regulatory documentation doesn't generate revenue. They prevent fines. They avoid lawsuits. The compliance industry is emerging as its own sector: consultancies specializing in AI audits, algorithmic transparency tools, governance lawyers billing $800 an hour. Necessary. Expensive. Unproductive.

For startups burning cash on compute and talent, regulatory overhead makes precarious math worse. Can revenue scale faster than compounding costs across hardware, headcount, and compliance?

The Hardware Dependency

Forget the code. Look at the chips.

NVIDIA's monopoly on AI accelerators bottlenecked the field through 2023-2025. Demand far outstripped supply. Prices spiked. Training a frontier model required tens of millions in GPU time, assuming you could secure allocation at all. Startups with great ideas and no chips didn't ship.

This dependency became geopolitical leverage. Washington imposed export controls denying China access to advanced AI chips, especially NVIDIA's H100 and successor GPUs. The Netherlands and Japan got persuaded (coerced, really) to restrict chipmaking equipment sales to Chinese firms. The screws tightened through 2024. Then late 2025 brought temporary relief: one-year licenses letting Samsung and SK Hynix keep operating China fabs with American tools.

That relief exposed the balancing act. Hurt China's AI progress. Don't destroy allied semiconductor companies dependent on Chinese manufacturing. The calibration continues. China is dumping subsidies into domestic chip development, racing to build independent supply chains. They won't hit 3nm production tomorrow. Cutting-edge semiconductor manufacturing is viciously hard. But breakthroughs shift strategic balance fast.

The U.S. treats compute like oil. The White House AI strategy explicitly aims to "build the most powerful, fastest-growing AI ecosystem" by subsidizing chip fabs, data centers, AI hubs on American soil. Meanwhile it's eyeing tighter restrictions on frontier compute exports, preventing adversaries from training the most capable models.

Beijing's response dropped days after the White House announcement. Its Global AI Governance Action Plan positions China as a reasonable multilateralist championing AI for developing nations. Inclusive cooperation. Infrastructure partnerships across Asia, Africa, Latin America. International technical standards. Bridging the digital divide. It's savvy positioning. Make the U.S. look like techno-nationalist isolationists while China plays global benefactor.

The chokepoint runs through Taiwan. TSMC fabricates most cutting-edge chips. Disruption to Taiwan's capacity (geopolitical conflict, earthquake, supply shock) would crater global AI development regardless of who leads in algorithms. This vulnerability explains why the U.S., China, and Europe are racing to build domestic production. None matches TSMC's advanced nodes yet.

The chip race hits valuations directly. OpenAI is designing custom "GXT" processors to escape NVIDIA dependency. Google has TPUs. Amazon builds Trainium and Inferentia. Custom silicon requires billions in R&D with uncertain payoff. Designing competitive AI chips takes years. The bet: eventually the cost advantage justifies the investment. Eventually.

For Anthropic preparing to go public, the chip question matters. How much of that projected $26 billion revenue gets eaten by compute costs? What if NVIDIA maintains pricing power? What if geopolitical tensions choke chip supply? The prospectus needs answers investors will believe.

Innovation Versus Execution

The technology keeps advancing, though not always toward profitability. GPT-5 and Google's Gemini promise multimodal capabilities. Text, images, video processed together. Demonstrations look polished in controlled settings. Production is different.

Multimodal models eat compute. Far more than text-only systems. Inference latency spikes when generating video frames instead of tokens. Copyright risk multiplies when models synthesize footage resembling protected material or images mimicking artists' styles. And enterprises face a familiar problem: 95% reliability creates liability when the 5% failure hits production.

The labs don't care. OpenAI wants multimodal features by mid-2026. Google bets everything on Gemini handling modality transitions. They discuss "reasoning across modalities" like it's solved. Current systems pattern-match brilliantly. Causal reasoning? Still struggling.

Ilya Sutskever's Safe Superintelligence is the wildcard. He left OpenAI in May 2024, believing transformer scaling will plateau. Whatever SSI is building (recursive self-improvement, continual learning, something else entirely), insiders think details will leak in 2026. If Sutskever ships an architecture that outperforms transformers on meaningful benchmarks, the major labs face a crisis. OpenAI, DeepMind, and Anthropic have collectively invested tens of billions in scaling transformers. A paradigm shift would make that infrastructure obsolete. Overnight.

Probability unknown. But the possibility has labs hedging with alternative research.

Actual commercial progress comes from unglamorous work: domain-specific models. Legal AI trained exclusively on contracts and case law beats GPT-4 on contract analysis using a fraction of the compute. Medical models beat general systems on diagnosis accuracy. Accounting models that understand tax codes don't hallucinate regulations.

Economics drive this. Training domain-specific models costs millions instead of tens of millions. Inference costs drop an order of magnitude. That's the difference between budget-bleeding projects and margin improvement. Domain-specific deployments will likely outnumber general-purpose ones by year-end.

AI agents are moving from demos to production. A late-2025 McKinsey survey found 62% of companies experimenting with agents and nearly a quarter scaling them in at least one function. Current implementations handle basic workflows: email drafts, meeting schedules, database updates. Sophisticated versions browse sites, extract data, trigger processes.

Reliability remains the constraint. Agent books travel correctly 90% of the time? The wrong flight 10% of the time creates chaos. Labs build guardrails (verification steps, human checkpoints, rollback) that slow things down, defeating part of the appeal. Speed versus trust. The balance shifts all year.
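
The guardrails described above can be pictured as a wrapper around a single agent action. This is a minimal sketch, not any lab's actual design: the `Booking` record and the caller-supplied `propose`, `human_approves`, `commit`, and `rollback` functions are all hypothetical and do not correspond to a real agent framework's API. It shows where each checkpoint sits, and why each one costs speed: every mismatch waits on a person, and every failed commit triggers cleanup.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Booking:
    # Hypothetical record of what the user asked for or what the agent proposes.
    flight: str
    date: str


def book_with_guardrails(
    request: Booking,
    propose: Callable[[Booking], Booking],
    human_approves: Callable[[Booking, Booking], bool],
    commit: Callable[[Booking], bool],
    rollback: Callable[[Booking], None],
) -> Optional[Booking]:
    """Run one agent booking behind three guardrails (hypothetical design).

    - Verification: compare the agent's proposal with the user's request.
    - Human checkpoint: a mismatch is committed only if a person approves it.
    - Rollback: if the commit reports failure, undo its side effects.
    Returns the committed booking, or None if nothing was booked.
    """
    proposal = propose(request)

    # Verification plus human checkpoint: a wrong flight never reaches
    # commit unless someone explicitly signs off on it.
    if proposal != request and not human_approves(request, proposal):
        return None

    # Commit, and compensate if the commit fails partway.
    if not commit(proposal):
        rollback(proposal)
        return None
    return proposal


if __name__ == "__main__":
    def wrong_agent(r: Booking) -> Booking:
        return Booking(flight="UA 101", date=r.date)  # picks the wrong flight

    def reviewer_rejects(req: Booking, prop: Booking) -> bool:
        return False

    def commit_ok(b: Booking) -> bool:
        return True

    def no_op_rollback(b: Booking) -> None:
        pass

    request = Booking(flight="UA 100", date="2026-03-01")
    result = book_with_guardrails(
        request, wrong_agent, reviewer_rejects, commit_ok, no_op_rollback
    )
    print(result)
```

The human checkpoint fires only on a mismatch, so correct proposals pass straight through. A wrong one costs a review instead of a wrong flight, which is exactly the speed-versus-trust trade described above.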

The Workforce Equation

Corporate AI adoption is happening fast. McKinsey's late-2025 numbers: 90% of large companies use AI somewhere in operations. But only a third have scaled past experiments. Most are stuck piloting.

2026 is conversion year. Proof-of-concept becomes production, or it gets killed.

Middle management implications are getting clear. The manager spending six hours daily collating Excel sheets? Trouble. AI does it in six seconds. Five analysts augmented by AI handle work that previously required fifteen. Managers shift from spreadsheet verification to strategy.

One professor predicts a 10–20% reduction in traditional mid-level roles by year-end. Large companies are already redesigning teams so that AI handles reporting, forecasting, and follow-ups automatically. That doesn't necessarily mean mass layoffs; attrition and redeployment can do much of the work. But workforce composition changes.

AI-native departments emerge in data-heavy functions such as HR, procurement, and customer service, where half the routine tasks get automated. HR shifts from résumé scanning to employee experience. Customer support runs AI-first help desks, with chatbots resolving common issues and humans handling complex ones.

One forecast: organizations will have more AI agents than employees by year-end. Probably hyperbole. Captures the direction though.

If you're a middle manager whose value is transmitting information hierarchically, 2026 gets uncomfortable. The company doesn't need you compiling weekly reports when AI generates them instantly. Roles that survive involve judgment, relationships, strategy. Work resisting automation.

The hard part isn't buying AI tools. Any CFO can approve SaaS contracts. The hard part is ripping up workflows that existed for decades and rebuilding them around a new split of work between machines and humans. Most companies are terrible at this. They add AI to the same old processes, then act surprised when nothing changes. 2026 separates the companies that fundamentally rethought how work happens from the ones that just bought more software.

Market Reality

Back to those valuations. SoftBank dropped $41 billion into OpenAI late 2025, buying roughly 11%. CEO Masayoshi Son went "all in" on AI, betting today's leaders become trillion-dollar companies.

OpenAI might actually pursue a $1 trillion valuation when it goes public, per Reuters. Anthropic could hit $300 billion-plus. These numbers reflect optimism. Also bubble speculation.

Running cutting-edge AI is expensive. OpenAI's losses keep mounting despite revenue growth. The $60 billion raised cumulatively finances chip design, new services, consumer devices, enterprise integrations. The Economist described Sam Altman as a "juggler on a unicycle," throwing more balls while keeping the core chatbot business growing.

The question: can any business model justify this investment? Enterprise subscriptions, API fees, consumer apps, licensing. All get tried. Revenue grows but needs to catch costs. One skeptical VC warned about OpenAI's trajectory: "WeWork story on steroids."

2026 brings correction. Consolidation. Not every startup claiming "GPT-4 for X industry" survives. Smaller companies get acquired for talent. Others run out of cash without finding product-market fit. The image generator and copywriting space grew crowded. Shakeout looms.

Big Tech owns structural advantages. Microsoft, Google, Amazon, Meta. They've got compute capacity measured in exaflops, software that already runs on billions of devices, distribution that startups can't match. Microsoft is forcing Copilot into Office and Windows, embedding AI so deeply that hundreds of millions of users will encounter it whether they want to or not. That kind of bundling kills standalone startups unless they've built something genuinely differentiated.

NVIDIA's market cap blew past $1 trillion in 2023 and reached $5 trillion in late 2025 on AI demand. The stock became a proxy bet on the entire sector. Now competition arrives. AMD ships rival GPUs. Startups pitch novel architectures. Cloud players (Amazon, Google) build custom chips for their own infrastructure. NVIDIA's market share will probably shrink even as total chip sales grow.

2026 is when companies report to investors on AI bets. Cloud providers show AI revenue. Enterprise software demonstrates feature uptake. Markets distinguish AI winners from losers.

If macro conditions tighten or rates stay high, cash-burning AI firms face investor impatience. Easy money for "AI" pitches is ending. Investors want capabilities, defensible tech, profitability paths.

The Verdict

By year-end, clearer answers emerge. Which AI applications moved from demo to reliable deployment at scale? Domain-specific models show commercial viability. Agents become plausible if reliability improves. Multimodal needs another iteration before high-stakes trust.

Regulatory reality gets more defined though less aligned. Companies fight EU enforcement, evolving federal standards, Chinese censorship, various national frameworks. Compliance costs become permanent overhead.

Geopolitical competition intensifies around semiconductors and compute. China's chip progress, U.S. export controls, standards battles shape which companies access hardware and markets needed for scale.

Workforce transformation accelerates. Middle management reduction becomes visible in reorganizations. Companies integrating AI into workflows pull ahead of those stuck piloting.

Markets punish excess, reward execution. The gap between $183 billion valuations and actual profitability needs closing. Some companies demonstrate sustainable revenue growth from compute spending. Others discover burning billions training models doesn't automatically create businesses worth hundreds of billions.

The technology is real. Capabilities improve. But 2026 pushes the field past novelty toward value. The companies that thrive won't have the flashiest demos. They'll ship tools that work consistently, solve real problems, and generate margins that justify the investment.

One researcher's summary: AI tourism is ending. Time to build something that matters.

❓ Frequently Asked Questions

Q: Why is NVIDIA so critical to AI development?

A: NVIDIA's GPUs are optimized for the parallel processing AI models require. Training frontier models needs tens of millions in GPU time. The company held near-monopoly status through 2023-2025, meaning startups without NVIDIA chip allocations simply couldn't ship products. Competition from AMD and custom chips is just now emerging in 2026.

Q: What makes domain-specific AI different from ChatGPT?

A: Domain-specific models train exclusively on specialized data (legal contracts, medical records, accounting rules) rather than the entire internet. They cost millions to build versus tens of millions for general models. Inference costs drop by an order of magnitude while accuracy improves in their specialty. A legal AI beats GPT-4 on contracts using a fraction of the compute.

Q: How do U.S. chip export controls to China actually work?

A: Washington restricts sales of advanced AI chips (like NVIDIA's H100), with rules keyed to specific performance thresholds, and limits sales of chipmaking equipment to Chinese firms. The Netherlands and Japan were persuaded to join with their own equipment restrictions. Late 2025 brought one-year licenses for Samsung and SK Hynix to keep China fabs running with American tools, balancing damage to China against protecting allied semiconductor companies.

Q: Who is Ilya Sutskever and why does his startup matter?

A: Sutskever co-founded OpenAI and was its chief scientist before leaving in May 2024. He's one of the field's top researchers. His new company, Safe Superintelligence, believes scaling transformers will plateau and is pursuing fundamentally different approaches to AGI. If SSI demonstrates a superior architecture, it could make tens of billions invested by major labs obsolete overnight.

Q: If AI costs so much to run, why don't companies just charge more?

A: They're trying, but pricing power is limited. Enterprise customers comparison-shop between providers. Microsoft bundles Copilot into Office, pressuring standalone tools. Open-source models from Meta let companies self-host cheaply. The market hasn't consolidated enough for anyone to command premium pricing. Companies are racing to achieve scale before investors lose patience with mounting losses.