Lawsuits have become the opening bid in AI content negotiations. The Times already proved it's willing to deal.

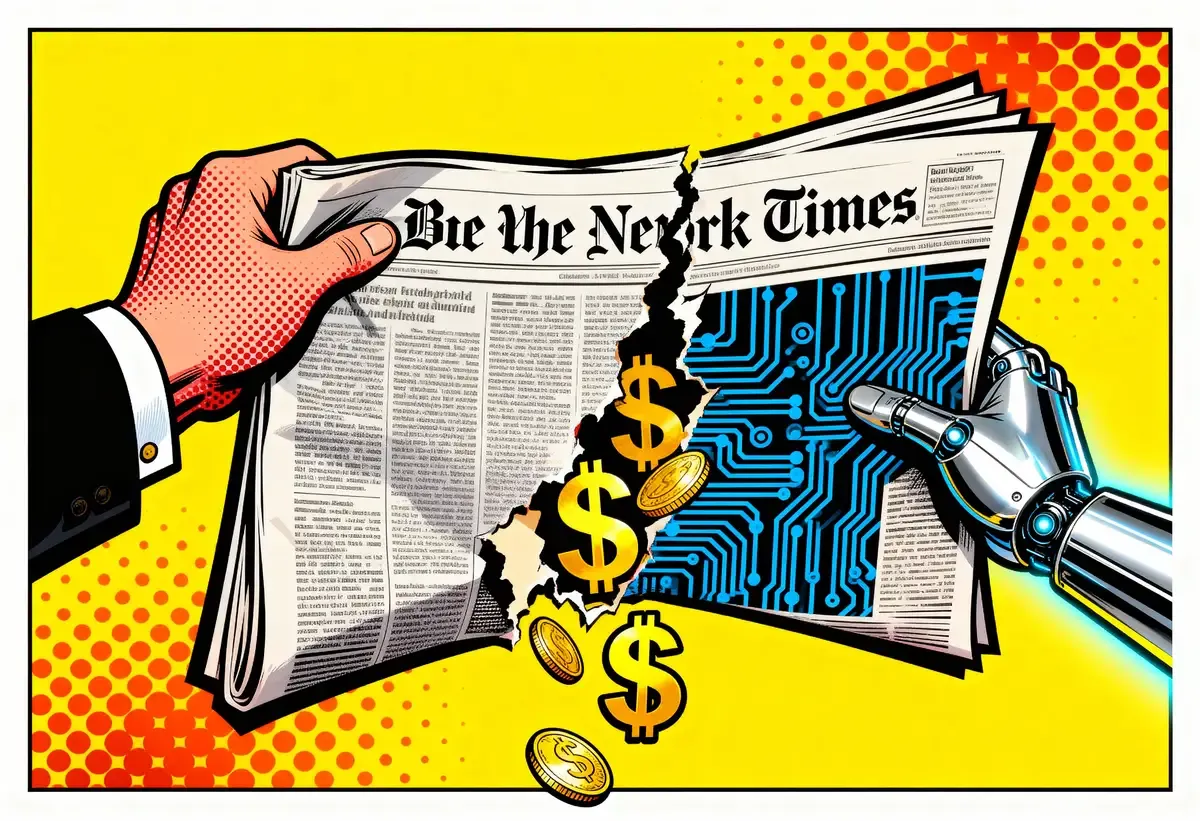

Another week, another AI copyright suit in federal court. On Friday the New York Times went after Perplexity, a San Francisco company that turned article-scraping into a $20 billion valuation. The complaint throws everything at the wall: copyright infringement, trademark claims, reputational harm from AI-generated falsehoods attributed to Times reporters.

Standard allegations for 2025. More than 40 similar cases sit on federal dockets. But read past the legal boilerplate and something else emerges.

The Times doesn't want Perplexity dead. It wants Perplexity paying.

Key Takeaways

• The Times sued Perplexity months after signing an Amazon AI licensing deal; lawsuits now function as negotiation leverage, not last resorts

• Perplexity launched publisher revenue-sharing programs that Gannett, TIME, and Fortune joined; the Times declined all of them

• Unlike training-data cases, this suit targets RAG: real-time content retrieval that creates traceable, ongoing infringement claims

• Anthropic's $1.5 billion September settlement established a benchmark now shaping every publisher-AI negotiation

Sue First, Negotiate Second

TechCrunch, covering Friday's filing, dropped any pretense about what's actually going on. Publishers "use lawsuits as leverage in negotiations in the hopes of forcing AI companies to formally license content," the outlet reported. They've accepted that AI isn't going away. The question is who pays whom.

The chronology tells the story. October 2024: cease-and-desist letter from the Times. July 2025: follow-up warning. In between, months of communication that apparently led nowhere. Perplexity's search engine kept humming. The Times kept objecting. Eventually the lawyers got involved.

But here's what else happened while those letters flew back and forth. In May 2025, the Times signed its first AI licensing deal. Amazon got rights to use content from the Times' food site and The Athletic for training AI models. Terms undisclosed, but the deal got done. One AI company got the green light. Another got sued.

That's not contradiction. That's negotiating leverage.

Publishers figured this out quickly. OpenAI signed deals with the Associated Press, Axel Springer, Vox Media, The Atlantic. Microsoft cut agreements too. Anthropic reached settlements. All of this happened while lawsuits were active or threatened. News Corp provides the clearest example: they sued Perplexity in 2024 while maintaining a content partnership with OpenAI. Same parent company, opposite treatment for different AI players.

The pattern is consistent enough to call it a playbook. And the Anthropic settlement in September 2025 made the stakes explicit. $1.5 billion paid to authors and publishers over pirated training data. Largest AI copyright recovery on record. That number hangs over every negotiation now.

Perplexity Built the Infrastructure

What makes this lawsuit odd: Perplexity actually tried to address compensation concerns. Not perfectly, not to everyone's satisfaction, but they built programs with real money attached.

Last year the company launched its Publishers' Program. Revenue sharing from advertising. Gannett joined. TIME joined. Fortune and the Los Angeles Times signed on. Then came Comet Plus in August, a $5 monthly subscription that sends 80% of fees to participating publishers. Getty Images signed a multi-year licensing agreement for visual content.

Call it inadequate if you want. But Perplexity created mechanisms to pay publishers who were willing to participate. Revenue sharing with major outlets. Subscription revenue flowing to content creators. An image licensing deal that actually got done.

The Times passed on all of it.

Why? Neither side has explained. The lawsuit references 18 months of contact but says nothing about what was offered, what was demanded, what fell apart. Somewhere between Perplexity's public programs and the Times' legal filing, negotiations failed.

Aravind Srinivas, Perplexity's CEO, responded to that October 2024 cease-and-desist with apparent openness. "We are very much interested in working with every single publisher," he told reporters. "We have no interest in being anyone's antagonist here."

Fourteen months later, antagonists is exactly what they are. Something broke down. The obvious guess: price. The Times commands premium rates and likely valued its content far above what an 80% split of a $5 subscription, divided among all participating publishers, would provide. The Amazon deal suggests the Times has a number in mind. Perplexity apparently couldn't hit it.
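
Rough arithmetic shows why that split could look thin. The sketch below is a back-of-envelope estimate, not Perplexity's actual payout formula: the $5 price and 80% share come from the program as described above, while the subscriber count, the number of participating publishers, and the even split among them are all hypothetical assumptions.

```python
def per_publisher_monthly_cents(subscribers: int, publishers: int,
                                price_cents: int = 500,
                                publisher_cut_percent: int = 80) -> int:
    """Monthly payout per publisher, in cents, under an even split.

    The even split is an assumption for illustration; a real program
    would more likely weight payouts by how often each outlet is used.
    """
    # Total subscription revenue set aside for publishers.
    pool_cents = subscribers * price_cents * publisher_cut_percent // 100
    # Divide the pool evenly among participating publishers.
    return pool_cents // publishers


# Hypothetical inputs: 100,000 subscribers, 20 participating publishers.
cents = per_publisher_monthly_cents(subscribers=100_000, publishers=20)
print(f"${cents / 100:,.0f} per publisher per month")
```

Under those made-up inputs each outlet would collect $20,000 a month. Change the subscriber count and the figure moves, but the structure stays the same: one pool, many claimants.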

Real-Time Theft vs. Training Data

Technical distinction worth understanding. This lawsuit attacks something different from the Times' ongoing case against OpenAI and Microsoft.

That earlier suit concerns training data. Did OpenAI have the right to feed Times articles into systems that learned from them? Backward-looking question about datasets already consumed.

The Perplexity case is about RAG, retrieval-augmented generation. Real-time fetching. Graham James, the Times spokesperson, put it bluntly: Perplexity's system "crawl[s] the internet and steal[s] content from behind our paywall and deliver[s] it to its customers in real time."

Every user query potentially triggers fresh infringement. Perplexity's AI grabs articles, summarizes them, delivers what the complaint calls "verbatim or near-verbatim reproductions." The connection between source material and output is direct, traceable, ongoing.
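
A toy sketch makes the "every query" point concrete. This is not Perplexity's system. The corpus, the domain names, the word-overlap ranking, and the stand-in for a language model are all hypothetical, chosen only to show the retrieve-then-generate shape that RAG refers to.

```python
from dataclasses import dataclass


@dataclass
class Article:
    source: str  # hypothetical domain, for illustration only
    text: str


# Stand-in for pages a crawler could fetch at query time.
LIVE_WEB = [
    Article("example-daily.test",
            "The city council approved the budget in a late vote. Critics objected."),
    Article("example-sports.test",
            "The home team won the final game. Fans celebrated."),
]


def retrieve(query: str, corpus: list[Article], k: int = 1) -> list[Article]:
    """Rank articles by how many query words they share (toy relevance)."""
    terms = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda a: len(terms & set(a.text.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def answer(query: str, corpus: list[Article]) -> dict:
    """Retrieve fresh for this query, then build the answer from what came back."""
    top = retrieve(query, corpus)[0]
    # A real system would hand the retrieved text to a language model.
    # Here the first sentence stands in for the generated summary.
    summary = top.text.split(".")[0] + "."
    return {"answer": summary, "source": top.source}


result = answer("city council budget vote", LIVE_WEB)
print(result["answer"], "| from:", result["source"])
```

The retrieval step runs again for each question, and the output can be traced to one named source. That is the property the complaint leans on.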

Easier case to make. If someone asks Perplexity a question and gets a detailed summary of a paywalled Times article, that person has less reason to subscribe. Direct substitution. The Times' business model depends on people paying for access to journalism. Perplexity's model, as the company once marketed it, encourages users to "skip the links."

That phrase appeared in Perplexity's own sales materials until recently. The Tribune lawsuit filed Thursday? It quoted that slogan as proof Perplexity designed its product specifically to bypass source websites.

When AI Gets It Wrong

The copyright claims get attention. But the trademark argument might matter more.

AI hallucinates. Everyone building these systems knows it. When Perplexity's AI invents information and attributes that invention to the Times, it damages a brand the newspaper spent 174 years constructing. Readers who encounter false information labeled as Times reporting may not realize the AI made it up.

Japanese publishers Nikkei and Asahi Shimbun raised the same concern in their August lawsuit against Perplexity. False information attributed to their articles, they argued, "severely damages the credibility of newspaper companies."

Copyright cases require proving infringement happened. Trademark cases require proving the brand suffered. When an AI confidently lies and cites a trusted source, the harm is immediate and measurable. Expect more lawsuits to emphasize this angle.

Why Perplexity Gets Hit Hardest

Strange thing about these lawsuits: Perplexity faces more publisher litigation than companies with deeper pockets and larger user bases.

News Corp sued them. The Times sued them. Chicago Tribune this week. Nikkei and Asahi Shimbun in August. Reddit in October over data scraping. OpenAI trained on more content, has more users, and generates more revenue. Yet Perplexity draws concentrated fire.

A few reasons. RAG makes copying visible. When Perplexity summarizes an article, the relationship between source and output is obvious. When ChatGPT generates text drawing on training data, no single source stands out.

OpenAI also moved faster on licensing. By the time publishers organized lawsuits, OpenAI had already signed many of them. Perplexity built compensation programs but didn't close deals with the publishers now suing.

And then there's positioning. Perplexity marketed itself as an "answer engine" that lets users skip source material entirely. That framing makes the substitution argument easy. The product's core value proposition is replacing the need to read original journalism.

The Telegraph Line Doesn't Hold

Perplexity's communications team offered a quote that's already getting repeated. "Publishers have been suing new tech companies for a hundred years, starting with radio, TV, the internet, social media and now AI," said Jesse Dwyer. "Fortunately it's never worked, or we'd all be talking about this by telegraph."

Good line. Bad history.

TechCrunch noted the problem immediately: "Publishers have, at times, won or shaped major legal battles over new technologies, resulting in settlements, licensing regimes, and court precedents."

ASCAP and BMI exist because of litigation over radio broadcasting. Cable television operates under compulsory licensing frameworks born from lawsuits. Streaming services pay digital performance royalties negotiated against legal threats. Publishers don't always win outright. But they've consistently extracted compensation mechanisms from new distribution technologies.

That's the likely endpoint here. Not courts blocking AI development. Mandatory licensing structures, standardized royalty payments, negotiated revenue shares. The lawsuits accelerate that future rather than prevent it.

Settlement Is the Obvious Outcome

The Times demands damages and a permanent injunction barring Perplexity from using its content. What it will actually get is probably something else. A licensing agreement. Terms that let Perplexity continue operating while paying the Times for access.

Neither side wants this dragging through courts for years. Perplexity faces lawsuits from multiple publishers now, and unresolved litigation complicates fundraising, partnerships, everything. The Times wants money flowing, not legal fees accumulating. A five-year fight ending with exhausted appeals and a transformed AI landscape serves no one.

The Anthropic precedent looms. It was a settlement, not a verdict, but $1.5 billion shows how expensive infringement claims can get once a court finds pirated copies aren't protected. Perplexity can't pay that sum, but the number shapes what both sides think is possible.

Open question: whether Perplexity's existing publisher programs survive this wave of litigation or get replaced by direct licensing deals more like what OpenAI pursued. That 80% revenue share looked reasonable when it launched. Measured against billion-dollar settlements, it might not satisfy publishers calculating their leverage.

Why This Matters

For publishers: The playbook is now proven. File suit, negotiate from strength, sign licensing deal. Media companies watching from the sidelines are taking notes on what terms become achievable.

For AI companies: Voluntary compensation programs didn't prevent Perplexity from getting sued. Companies without preemptive deals with major publishers should expect litigation regardless of what revenue-sharing initiatives they launch.

For the information economy: If RAG-based search requires licensing agreements with every publication it might access, the economics of AI search shift dramatically. Premium content that can't be easily replaced becomes leverage over the platforms that need it.

Frequently Asked Questions

Q: What is RAG and why does it matter in this lawsuit?

A: RAG (retrieval-augmented generation) is a technique in which an AI system fetches documents from websites at query time and uses them to build its answer. Unlike training, which draws on a fixed set of historical data, RAG creates ongoing access to live content. The Times argues each Perplexity query potentially triggers fresh infringement by pulling paywalled articles and summarizing them for users who haven't paid for access.

Q: What was the Anthropic settlement and why does it matter here?

A: In September 2025, Anthropic agreed to pay $1.5 billion to settle claims it trained AI models on pirated books. A court had ruled that training on lawfully acquired books could qualify as fair use, but that acquiring and keeping pirated copies infringes copyright. This is the largest publicly reported AI copyright recovery and now serves as a benchmark in publisher negotiations.

Q: Who else has sued Perplexity?

A: Perplexity faces lawsuits from News Corp/Dow Jones (2024, motion to dismiss rejected), Chicago Tribune (December 2025), Nikkei and Asahi Shimbun (August 2025), and Reddit (October 2025). Forbes and Wired have also accused the company of plagiarism. Cloudflare confirmed Perplexity scraped websites that explicitly blocked such access.

Q: How does Perplexity's Publishers' Program actually work?

A: Perplexity offers two compensation tracks. The Publishers' Program shares advertising revenue with participating outlets including Gannett, TIME, Fortune, and the Los Angeles Times. Comet Plus, a $5 monthly subscription launched in August 2025, allocates 80% of fees to participating publishers. The Times declined to join either program.

Q: What AI licensing deals has the Times already signed?

A: The Times has signed one AI deal so far. In May 2025, Amazon secured rights to use content from the Times' food and recipe site plus The Athletic for AI training. Financial terms weren't disclosed. The Times continues suing OpenAI and Microsoft (filed 2023) while negotiating with other AI companies.