Google launched its vibe coding studio a day ago while Chamath Palihapitiya posted charts showing vibe coding usage had already peaked and crashed. The mismatch wasn't subtle—platforms doubled down on "anyone can build apps" just as data revealed most attempts end in abandoned prototypes. Cursor hit $100 million in annual revenue faster than any software app in history; Replit claims 34 million users built two million apps in six months. The market they're serving is contracting.

What's actually new

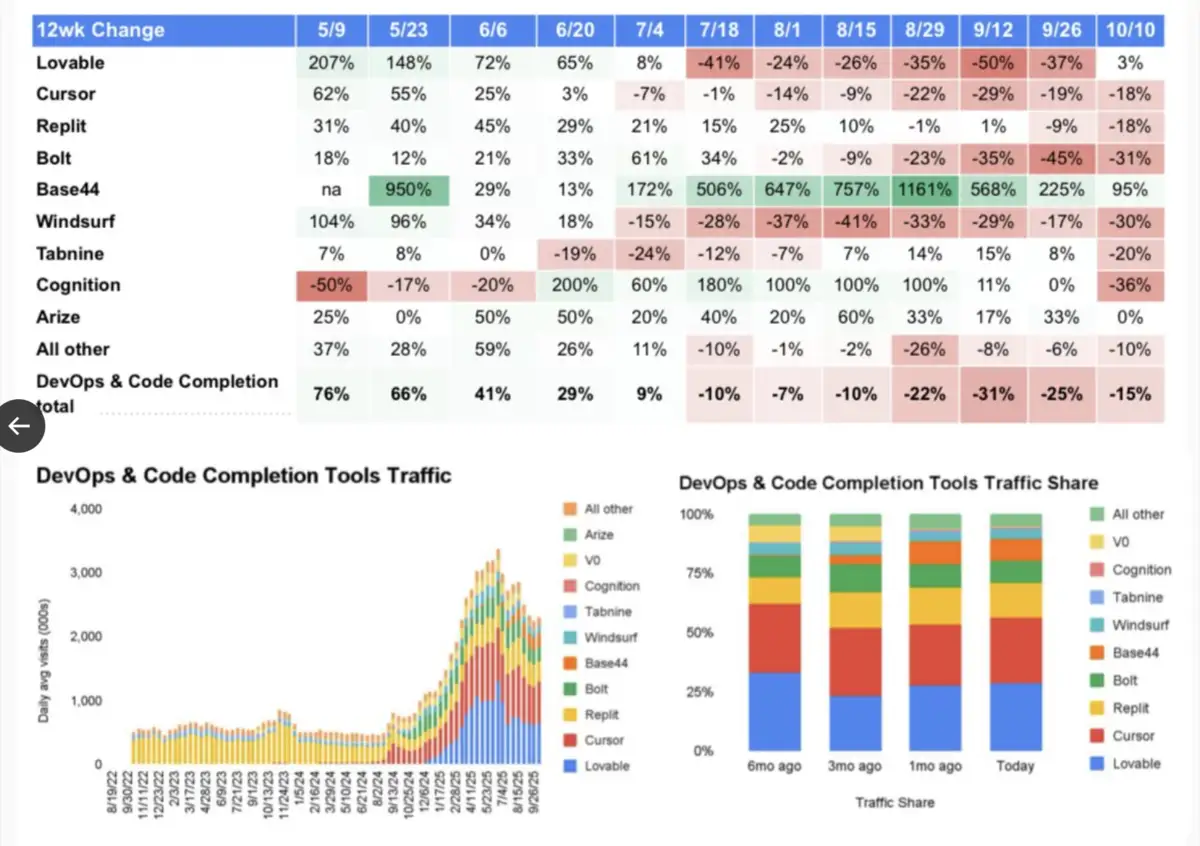

Chamath's October data shows vibe coding usage spiked, then collapsed over subsequent months—a pattern invisible in the promotional coverage. Google AI Studio launched features to "turn your vision into a working, AI-powered app" the same week. The timing reveals the gap between platform investment and user retention.

The pattern was predictable from first principles. Vibe coding works when you're building something the training data has seen thousands of times—to-do lists, event calendars, landing pages. It fails when projects require novel combinations or context the model hasn't encountered. Andrej Karpathy, who coined "vibe coding" in February, now acknowledges it's "fine if you are building something very familiar, but is less reliable for the unfamiliar." The limitation isn't a fixable bug. It's the fundamental constraint of pattern-matching systems encountering problems outside their distribution.

The Breakdown

• Chamath's data shows vibe coding usage spiked then crashed while Google and Cursor launched October features promoting mass adoption.

• AI code generation works for familiar patterns like to-do lists, fails on novel combinations—training distribution limits, not fixable bugs.

• User pattern: initial success with prototypes, growing frustration with complexity, eventual abandonment when debugging AI code becomes harder than writing it.

• Market projected to reach $12.4 billion by 2034 now faces retention crisis; survivor use cases narrow to developer augmentation, not replacement.

User experience follows a consistent arc: initial success with simple prompts, growing frustration as complexity increases, eventual abandonment when debugging AI-generated code becomes harder than writing it yourself. CNET's trial produced a working Halloween events calendar, which then broke when the reviewer asked for more advanced features. "After it failed to fix itself three times, I decided to stop the experiment." That's not a user error. That's the use case hitting its ceiling.

The training distribution bind

Steel mills and semiconductor fabs both require massive capital, but a steel mill's production process generalizes from one product to the next. Vibe coding doesn't generalize that way. The tools excel at replicating familiar patterns: authentication flows, CRUD interfaces, standard layouts. They stumble on anything requiring architectural decisions the training data didn't encode.

From the non-technical user's view, the tools are empowering for prototypes and frustrating for production. One developer described working with AI coding tools as "managing interns": capable but requiring constant supervision. Another said it felt like being "a responsible babysitter for code." Neither metaphor suggests scalable productivity.

Professional developers see different utility. Offloading boilerplate makes sense when you can debug the output quickly. But Yale professor Kyle Jensen notes that "skilled coders might feel sheepish" using tools that bypass a craft they spent years developing. The ambivalence is structural: vibe coding's value peaks for tasks experienced developers consider beneath them, then plummets for problems requiring judgment.

The maintenance trap closes gradually. AI-generated code works until requirements shift or bugs surface in edge cases. Then non-technical creators can't diagnose issues, and technical developers inherit codebases they didn't write in patterns they didn't choose. Mike Judge's September essay documented the "vibe coding promise versus vibe coding reality"—projects that start fast and stall permanently.

The market miscalculation

Platforms invested in democratization narratives backed by aggressive growth projections: $1.8 billion in 2024 scaling to $12.4 billion by 2034. Cursor charges $20 monthly for pro subscriptions; Replit uses the same pricing. Revenue models assume sustained usage, not demo-and-abandon cycles.
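As a back-of-the-envelope check, the projection above implies a compound annual growth rate of roughly 21%. The sketch below works that out from the two figures quoted. The function name `implied_cagr` and the ten-year span (2024 to 2034) are assumptions made for illustration, not part of any cited forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of dollars.
cagr = implied_cagr(1.8, 12.4, 2034 - 2024)
print(f"Implied CAGR: {cagr:.1%}")  # about 21.3%
```

Growth at that pace only compounds if subscribers stay, which is why demo-and-abandon usage undercuts the projection.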

Google CEO Sundar Pichai framed vibe coding as "making engineers dramatically more productive" while using Cursor himself to build custom dashboards. The positioning is careful—productivity tools for technical users, not wholesale engineer replacement. Platforms selling to hobbyists face different retention math.

Chamath's analysis was blunt: usage crashing months after launch suggests a fundamental product-market mismatch, not just early adopter churn. Investors who projected exponential adoption now confront usage curves that look like every previous "no-code" movement: initial excitement, then gradual recognition that abstraction layers create as many problems as they solve.

The survivor use cases look narrow: rapid prototyping for validation, one-off internal tools, and templated projects where maintenance doesn't matter. Serious applications still require developers who can debug, extend, and maintain systems as requirements evolve. The promise was teams of engineers replaced by natural language prompts. The reality is junior developer work partially automated under senior oversight.

The sorting ahead

Three signals indicate where vibe coding stabilizes: retention curves by user sophistication, tool repositioning toward technical users, and a platform pivot from "build anything" to "automate repetitive tasks." Cursor's fast growth targeted developers from launch. Replit tried broader appeal but may narrow its focus. Google's emphasis on "AI-powered app" rather than "no-code solution" suggests it is aware of the limits.

The pattern shows platform economics running into the boundaries of the training distribution. Companies can't sell subscriptions to users who abandon the product after their first project. Marketing's "turn ideas into apps" collides with reality's "turn familiar ideas into prototypes that break under load."

What survives is augmentation, not replacement—LLMs generating starting points skilled developers refine, not autonomous systems producing production code. The distinction determines which business models work. Platforms betting on mass amateur adoption face Chamath's usage charts. Tools selling developer productivity to technical users avoid the trap.

The outcome was legible from Gary Marcus's initial skepticism: vibe coding would "never be remotely reliable" for "products that would have previously required teams of engineers." The data arrived faster than the promotional cycle expected. Demos scaled; complex applications didn't. Training distribution limitations don't disappear because marketing teams prefer different physics.

Why this matters:

- Pattern-matching systems hit predictable walls when novelty exceeds training data, regardless of parameter count or interface design.

- Platforms selling automation to non-technical users face structural retention problems; tools augmenting technical users sidestep them, because those users can debug the output once a project leaves familiar patterns.

❓ Frequently Asked Questions

Q: What exactly is vibe coding?

A: Vibe coding means using natural language prompts to have AI tools generate application code instead of writing it yourself. The term was coined by OpenAI co-founder Andrej Karpathy in February 2025. Users describe desired features conversationally ("make a recipe app using fridge ingredients"), and tools like Cursor and Replit generate the code.

Q: How much do vibe coding platforms cost?

A: Most platforms offer free tiers with limited features before charging $20 monthly for pro subscriptions. Cursor reached $100 million in annual revenue using this model. Google AI Studio provides free quota, then requires adding your own API key when exhausted. Replit claims 34 million users, suggesting most stay on free tiers rather than converting to paid plans.
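For scale, the figures in this answer can be turned into a rough subscriber count. The sketch below assumes, purely for illustration, that all of the $100 million came from $20-per-month pro subscriptions held for a full year. Actual revenue may come from a mix of plans, so treat the result as an order-of-magnitude estimate. The function name `implied_subscribers` is hypothetical.

```python
def implied_subscribers(annual_revenue: float, monthly_price: float) -> float:
    """Full-year subscribers needed to produce annual_revenue at a flat monthly price."""
    if monthly_price <= 0:
        raise ValueError("monthly_price must be positive")
    return annual_revenue / (monthly_price * 12)


# Assumption: every dollar comes from a $20/month plan paid for 12 months.
seats = implied_subscribers(100_000_000, 20)
print(f"Implied paying subscribers: {seats:,.0f}")  # about 416,667
```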

Q: Why does vibe coding work for simple projects but fail on complex ones?

A: AI models generate code by pattern-matching against training data. To-do lists, login forms, and event calendars appear thousands of times in training sets, so models replicate them reliably. Novel combinations or architectural decisions the training data didn't encode cause failures. This training distribution boundary can't be fixed through better interfaces or marketing—it's a fundamental constraint of pattern-matching systems.

Q: How is this different from previous no-code tools?

A: Traditional no-code platforms provide visual builders with predefined components. Vibe coding uses conversational prompts instead of drag-and-drop interfaces. Both hit similar walls: abstraction layers work for familiar patterns but create maintenance problems as complexity grows. The usage curve—initial spike, gradual abandonment—mirrors every previous no-code movement. Different interface, same fundamental trade-offs between accessibility and power.

Q: Who actually benefits from using vibe coding tools?

A: Two groups benefit: professional developers who offload boilerplate code they can quickly debug, and non-technical users building one-off prototypes where maintenance doesn't matter. The gap is hobbyists who expect to build production apps without coding knowledge; they hit the abandonment pattern when projects need debugging or feature additions. Market retention data suggests successful use cases remain narrow despite platforms marketing broad accessibility.