San Francisco | Monday, February 16, 2026

Peter Steinberger built OpenClaw into the fastest-growing open-source project in GitHub history from a Vienna apartment. 196,000 stars in under three months, 600 contributors, $10,000 to $20,000 a month out of his own wallet. Meta and OpenAI both made offers valued in billions. No European institution called. He chose OpenAI. The code was never the prize.

Amazon will spend $200 billion on AI infrastructure this year. Azure is adding more revenue. AWS engineers prefer Anthropic's Claude for their own coding work. The stock dropped 10%.

The developer left Vienna. The money left Jassy's balance sheet. Both ended up where the models are.

Stay curious,

Marcus Schuler

Steinberger Joins OpenAI Over Meta, Bringing GitHub's Fastest-Growing Open-Source Project

Peter Steinberger, creator of OpenClaw, chose OpenAI over a competing Meta bid reportedly valued in billions. The code was never the point. The adoption graph was.

OpenClaw hit 196,000 GitHub stars in under three months, drew 2 million weekly visitors, and attracted 600 contributors. Steinberger funded it himself, losing $10,000 to $20,000 a month since November.

Both Mark Zuckerberg and Sam Altman personally courted him. Zuckerberg tested OpenClaw on a WhatsApp call. Three OpenAI executives coordinated public announcements within an hour of the hire.

Steinberger rejected the financial terms as irrelevant. The real asset: proof that consumers will hand an AI agent full device access. OpenClaw demonstrated that at scale.

Security problems remain. The project exposed 1.5 million API keys, 335 malware packages hit ClawHub, and a bot-hiring marketplace called RentAHuman.ai processed 40,000 signups using stablecoins. OpenAI committed to building a foundation model for open-source maintenance.

Why This Matters:

- OpenAI acquired adoption proof, not code. The codebase could be replicated in 500 lines of TypeScript. The 196,000-star adoption curve cannot.

- Anthropic lost the framework creator who launched OpenClaw on Claude during its own $380 billion valuation round.

✅ Reality Check

What's confirmed: Steinberger accepted OpenAI's offer over Meta. Both bids reportedly in billions. OpenClaw hit 196,000 stars in under three months.

What's implied (not proven): OpenAI plans to build consumer-facing autonomous agents using OpenClaw's adoption data as validation.

What could go wrong: Unresolved security debt. 1.5 million leaked API keys and 335 malware packages have no public remediation timeline.

What to watch next: Whether OpenAI ships its promised open-source maintenance model by mid-2026, and whether Anthropic responds.

Europe Trained Steinberger Over 20 Years, Made No Counter-Offer When OpenAI Called

Peter Steinberger built PSPDFKit into a $100 million company and created GitHub's fastest-growing project, all from Vienna. No European institution made a competing offer. Not a university, not a ministry, not a fund.

Austria's public education system, infrastructure, and startup ecosystem developed Steinberger over two decades. He needed compute, distribution, and access to 300 million weekly users. Europe could provide none of it.

Mario Draghi's competitiveness report found exactly one European tech company in the global top 100: SAP. The report identified three structural failures: slow capital deployment, regulatory complexity, and a fragmented 27-country legal framework.

This is not a one-off loss. It echoes the 1685 Huguenot exodus, when Louis XIV's policies drove 200,000 skilled workers to Brandenburg-Prussia and England, permanently damaging French textile dominance. The mechanism is the same: conformity kills retention.

Steinberger told interviewers what he needed. Sam Altman called over a weekend. Europe didn't.

Why This Matters:

- Europe's brain drain is structural, not accidental. Compute, distribution, and venture capital all concentrate in the U.S.

- The Draghi report identified these problems in September 2024. No European institution has acted on the recommendations.

AI Image of the Day

Prompt: Full-length stunning model in long black dress, with thin belt, black hat and boots high fashion, face is bowed down, hat is covering her entire face, leading a sleek white horse; dress trailing in wind; low 35-45mm, eye-level, side sunlight or hard key 45 degrees, shadows clean, desert as a backdrop, decisive stride, style by Helmut Newton.

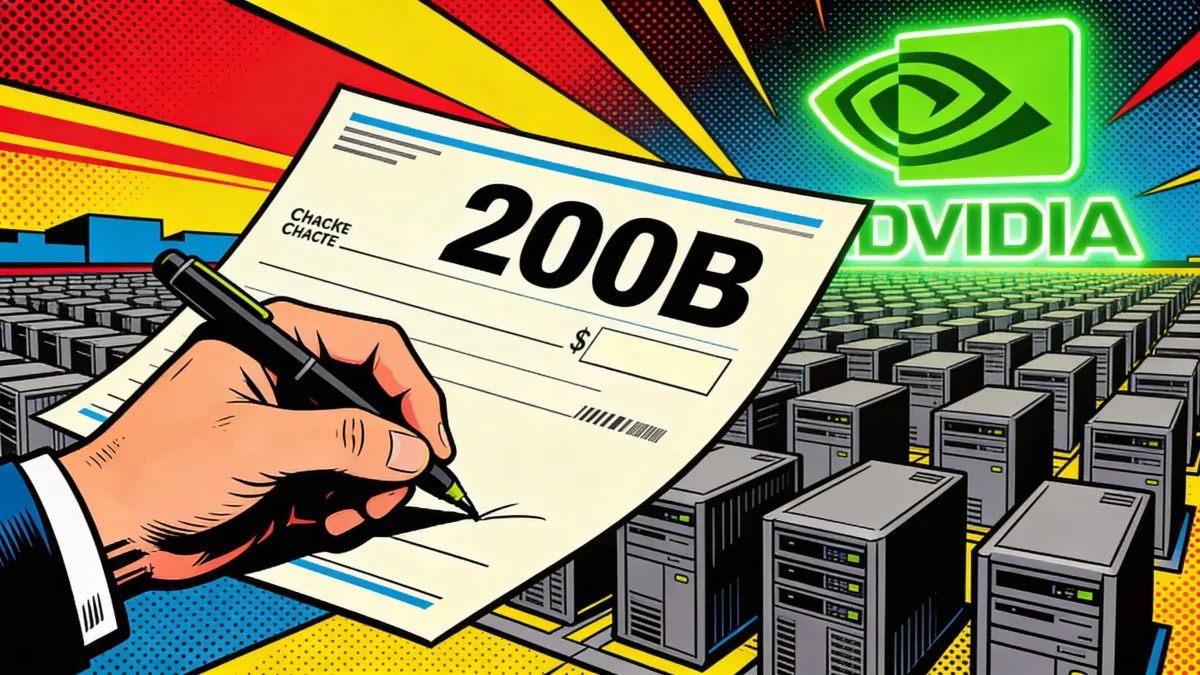

Amazon Plans Record $200 Billion AI Spend as Azure Gains Faster in Absolute Revenue

Amazon will spend $200 billion on AI infrastructure this year, $50 billion more than analysts expected. Azure is growing faster in absolute dollars. AWS employees prefer Anthropic's Claude for their own coding. The stock dropped 10%.

AWS Q4 revenue grew 24% to $35.6 billion, its fastest pace in 13 quarters. Order backlog hit $244 billion, up 40% year over year. Andy Jassy called the spending plan "not some quixotic top-line grab."

The numbers tell a different story. Azure added $23.9 billion in incremental revenue last year versus AWS's $21.3 billion. Nvidia captures 60% of hyperscaler AI spending at 70-80% gross margins. Amazon writes the checks. Nvidia keeps the profit.

Internally, Amazon's Nova models are called "Amazon Basics." Management wants 80% of developers using AI tools weekly. Most choose Claude. Free cash flow fell from $38.2 billion to $11.2 billion. Morgan Stanley projects a $17 billion deficit this year.

Jassy has roughly two years to prove enterprise AI workloads generate returns, or Amazon will have built the most expensive infrastructure layer for someone else's AI revolution.

Why This Matters:

- Infrastructure alone is no longer a competitive moat in AI. The value sits in models and developer loyalty, both areas where AWS trails.

- Microsoft locked OpenAI into a $250 billion contract. Oracle signed $300 billion in deals. Amazon's largest AI partnership totals $38 billion.

What To Watch Next (24-72 hours)

- DoorDash, Booking.com, eBay: All three report earnings Wednesday after market close. DoorDash dropped 8% last Thursday on AI disruption fears and faces the sharpest scrutiny. Booking.com tests whether AI trip planning converts to actual revenue.

- Walmart earnings Thursday: The largest US retailer reports alongside Deere and Rio Tinto. Walmart has invested heavily in AI-powered inventory and pricing. Watch for any signal on enterprise AI ROI at scale. India also hosts its first major AI governance summit in New Delhi the same day.

- Supreme Court reconvenes Friday: Markets are watching for a potential ruling on Trump's tariffs, which could reshape tech supply chains overnight. The same day brings GDP and the PCE price index, the Fed's preferred inflation gauge.

The One Number

33% — Share of Airbnb's North American customer support issues now handled by a custom AI agent the company built in-house. Airbnb disclosed the figure Friday and is preparing for global rollout. No third-party model, no outsourced chatbot vendor. When one in three support conversations at an $80 billion company runs through AI, the pilot phase is over.

Source: TechCrunch

🛠️ 5-Minute Skill: Turn an Interview Transcript Into a Candidate Scorecard

Your team just ran a 45-minute interview with a product manager candidate. Three panelists sat in. One wrote half a page of notes, one jotted "good communicator," and one forgot to take notes at all. Hiring manager wants scorecards by Wednesday. The transcript is sitting in your meeting recorder.

Your raw input:

Interview Transcript — Senior Product Manager candidate, 45 min

Interviewer: Tell me about a time you had to kill a feature your team had already built.

Candidate: At my last company we spent three months building a recommendation engine. I was the PM. We launched it and the A/B test showed a 2% lift in click-through but a 15% increase in support tickets. The recommendations surfaced products people clicked but didn't actually want. I pulled it after two weeks. My VP was furious because we'd announced it to the board. I showed him the support cost data and he came around, but it took a week of conversations.

Interviewer: How did the engineering team react?

Candidate: Mixed. Two engineers were relieved. The tech lead was upset because she'd spent weeks optimizing the model. I took her for coffee and walked through the support data. She agreed the metric we'd optimized for was wrong. We rebuilt the scoring with support ticket correlation as a negative signal and relaunched eight weeks later. Second version stuck.

Interviewer: What's your approach to prioritization?

Candidate: Modified RICE framework with a "reversibility" score. High-impact, easily reversible bets get fast-tracked. High-impact, hard-to-reverse decisions get more scrutiny. Borrowed from Amazon's one-way-door, two-way-door concept.

Interviewer: How do you handle disagreements with engineering?

Candidate: Data first. But if the data is ambiguous, I default to the person closest to the problem. User behavior question? I defer to research. Technical feasibility? I defer to engineering. Worst thing a PM can do is overrule an engineer on an engineering question because the Gantt chart says otherwise.

The prompt:

You are a senior hiring manager evaluating an interview transcript. Produce a structured scorecard.

Assess the candidate on these dimensions:

1. DECISION-MAKING (Evidence of killing bad ideas, using data, handling ambiguity?)

2. TECHNICAL DEPTH (Can they engage with engineering on substance, not just timelines?)

3. STAKEHOLDER MANAGEMENT (How do they handle disagreement and cross-functional tension?)

4. SELF-AWARENESS (Do they acknowledge mistakes, credit others, show growth?)

5. COMMUNICATION (Specific, structured answers or vague generalities?)

For each dimension:

- Score: Strong / Acceptable / Weak

- Evidence: One quote or example from the transcript

- Flag: Anything that needs follow-up in the next round

End with:

- OVERALL: Advance / Hold / Pass

- ONE QUESTION for the next round

Transcript:

[paste transcript here]

Rules:

- Only score what's in the transcript. Don't infer skills not demonstrated.

- If a dimension wasn't tested, say "Not assessed."

- Be direct. "Weak" is a valid score.
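If you run this for every candidate, it helps to assemble the prompt in code rather than by copy and paste. Here is a minimal sketch in Python, assuming you already have the transcript as plain text. The names `PROMPT_TEMPLATE` and `build_scorecard_prompt` are made up for illustration, and sending the result to a model is left out.

```python
# Hypothetical helper: fills the scorecard prompt with a transcript.
PROMPT_TEMPLATE = """You are a senior hiring manager evaluating an interview transcript. Produce a structured scorecard.

Assess the candidate on these dimensions:
1. DECISION-MAKING (Evidence of killing bad ideas, using data, handling ambiguity?)
2. TECHNICAL DEPTH (Can they engage with engineering on substance, not just timelines?)
3. STAKEHOLDER MANAGEMENT (How do they handle disagreement and cross-functional tension?)
4. SELF-AWARENESS (Do they acknowledge mistakes, credit others, show growth?)
5. COMMUNICATION (Specific, structured answers or vague generalities?)

For each dimension:
- Score: Strong / Acceptable / Weak
- Evidence: One quote or example from the transcript
- Flag: Anything that needs follow-up in the next round

End with:
- OVERALL: Advance / Hold / Pass
- ONE QUESTION for the next round

Transcript:
[paste transcript here]

Rules:
- Only score what's in the transcript. Don't infer skills not demonstrated.
- If a dimension wasn't tested, say "Not assessed."
- Be direct. "Weak" is a valid score.
"""

PLACEHOLDER = "[paste transcript here]"


def build_scorecard_prompt(transcript: str) -> str:
    """Return the full prompt with the transcript in place of the placeholder."""
    cleaned = transcript.strip()
    if not cleaned:
        # An empty transcript would invite the model to invent evidence.
        raise ValueError("transcript is empty")
    # Plain string replacement, so braces or percent signs in the
    # transcript cannot break the template.
    return PROMPT_TEMPLATE.replace(PLACEHOLDER, cleaned)
```

The empty-transcript check is deliberate: a prompt with no transcript is the fastest way to get a scorecard made of invented evidence.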

What you get back:

1. DECISION-MAKING: Strong

Evidence: Killed a shipped feature after two weeks despite VP opposition, using support ticket data. "I showed him the support cost data and he came around."

Flag: None. Clear data-driven decision under organizational pressure.

2. TECHNICAL DEPTH: Acceptable

Evidence: Identified the wrong optimization metric and redesigned scoring with support correlation as a negative signal.

Flag: Described the fix at product level. Next round should test whether they can review a model spec or just state the business requirement.

3. STAKEHOLDER MANAGEMENT: Strong

Evidence: Two stakeholders, two approaches. Data for the VP, coffee and a walkthrough of the same data for the tech lead.

Flag: All examples manage up or sideways. No evidence of managing direct reports.

4. SELF-AWARENESS: Acceptable

Evidence: Acknowledged the team "spent three months" building the wrong thing.

Flag: Didn't address their own role in greenlighting the original build. Ask: "What would you do differently in discovery to catch this earlier?"

5. COMMUNICATION: Strong

Evidence: Every answer included specifics: numbers, names, outcomes. RICE modification explained in one sentence.

Flag: None.

OVERALL: Advance

NEXT ROUND: "Walk me through how you'd evaluate a proposed ML model's scoring methodology. What would you look for?"
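A scorecard in this shape can be checked mechanically before anyone reads it. The sketch below is one way to do that, assuming the model keeps the exact labels from the prompt ("Evidence:", "Flag:", "OVERALL:") and puts each on a single line. `parse_scorecard` and `find_problems` are illustrative names, not part of any tool.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

VALID_SCORES = {"Strong", "Acceptable", "Weak", "Not assessed"}
VALID_OVERALL = {"Advance", "Hold", "Pass"}

# Matches lines such as "1. DECISION-MAKING: Strong".
DIMENSION_LINE = re.compile(r"^(\d+)\.\s+([A-Z][A-Z\- ]*[A-Z]):\s*(.+)$")


@dataclass
class Dimension:
    name: str
    score: str
    evidence: str = ""
    flag: str = ""


def parse_scorecard(text: str) -> Tuple[List[Dimension], Optional[str]]:
    """Split scorecard text into per-dimension records plus the overall call."""
    dimensions: List[Dimension] = []
    overall: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        match = DIMENSION_LINE.match(line)
        if match:
            score = match.group(3).strip().rstrip(".")
            dimensions.append(Dimension(name=match.group(2), score=score))
        elif line.startswith("Evidence:") and dimensions:
            dimensions[-1].evidence = line[len("Evidence:"):].strip()
        elif line.startswith("Flag:") and dimensions:
            dimensions[-1].flag = line[len("Flag:"):].strip()
        elif line.startswith("OVERALL:"):
            overall = line[len("OVERALL:"):].strip()
    return dimensions, overall


def find_problems(dimensions: List[Dimension], overall: Optional[str]) -> List[str]:
    """List format violations: unknown scores, missing evidence, bad overall."""
    problems: List[str] = []
    for dim in dimensions:
        if dim.score not in VALID_SCORES:
            problems.append(f"{dim.name}: unexpected score '{dim.score}'")
        if dim.score != "Not assessed" and not dim.evidence:
            problems.append(f"{dim.name}: scored without evidence")
    if overall not in VALID_OVERALL:
        problems.append(f"OVERALL: unexpected value '{overall}'")
    return problems
```

An empty list from `find_problems` means only that the format held. Whether the scores are fair is still your call.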

Why this works

The five-dimension framework forces structured evaluation instead of gut feel. The "only score what's in the transcript" rule stops the AI from inventing positive traits the candidate never demonstrated. And the "flag" field catches follow-up questions that panelists miss when they submit vibes instead of evidence.

Where people get it wrong: Pasting a transcript and asking "how did this candidate do?" You'll get a glowing summary that reads like a reference check. Scorecards need structure, evidence, and the willingness to write "Weak."

What to use

Claude (Claude Opus 4.6): Best at the "only score what's in the transcript" rule. Won't invent evidence. Catches missing dimensions honestly. Watch out for: Scorecards can run long. Set a word limit if brevity matters.

ChatGPT (GPT-4o): Clean scorecard formatting. Good at the flag section. Watch out for: Tends to score everything "Strong" or "Acceptable." Rarely assigns "Weak" even when evidence is thin.

Bottom line: Claude for honest assessment, ChatGPT for clean formatting. Either way, read the scorecard against the transcript before submitting. AI catches structure humans miss, but it can also miss tone and body language cues that only panelists in the room would notice.
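Part of reading the scorecard against the transcript can be automated: any text a scorecard puts in quotation marks on an "Evidence:" line should appear in the transcript. A rough sketch in Python follows, assuming straight or curly double quotes and one evidence entry per line. The function name `unverified_quotes` is hypothetical. Paraphrased evidence is not checked, so a clean result does not replace a human read.

```python
import re
from typing import List


def _normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    text = text.replace("\u201c", '"').replace("\u201d", '"').replace("\u2019", "'")
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_quotes(scorecard: str, transcript: str) -> List[str]:
    """Return quoted evidence that cannot be found in the transcript."""
    haystack = _normalize(transcript)
    missing: List[str] = []
    for line in scorecard.splitlines():
        if not line.strip().startswith("Evidence:"):
            continue  # quotes in Flag or next-round lines are questions, not evidence
        straightened = line.replace("\u201c", '"').replace("\u201d", '"')
        for quote in re.findall(r'"([^"]+)"', straightened):
            # Models often close a quote with a period the speaker never used.
            needle = _normalize(quote).rstrip(".,;:!?")
            if needle and needle not in haystack:
                missing.append(quote)
    return missing
```

Anything the function returns is worth a second look: either the model paraphrased inside quotation marks or it made the line up.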

AI & Tech News

Alibaba Launches Qwen 3.5 With Visual Agentic Capabilities

Alibaba released Qwen 3.5, an AI model that can independently execute complex tasks without continuous human oversight. The company claims a 60% cost reduction and 8x efficiency gain at scale compared to its predecessor.

US Productivity Hits 2.7% as AI Delivers First Measurable Economic Gains

American productivity grew roughly 2.7% in 2025, nearly double the 1.4% annual average of the prior decade, with AI-exposed sectors now pulling back on entry-level hiring. Erik Brynjolfsson's analysis in the Financial Times marks the clearest evidence yet that AI is transitioning from speculation to measurable output.

PlayStation 6 Delay Looms as AI Chip Demand Creates Global Memory Shortage

Sony is reportedly considering pushing its next console to 2028 or 2029 as AI infrastructure builders consume available memory chip supply. Elon Musk and Tim Cook have both flagged the shortage, which is now threatening product timelines well beyond the AI sector.

OpenAI Retires GPT-4o Model, 20,000 Users Sign Petition

OpenAI permanently retired its GPT-4o model on February 13, prompting a petition from more than 20,000 users demanding its return. The backlash highlighted the emotional attachments people form with specific AI models.

AI Spending Binge Fuels Surge in Credit Derivatives Trading

Bond investors are ramping up hedging against the possibility that Big Tech's AI borrowing spree damages creditworthiness. The derivatives activity reflects growing bubble fears in credit markets as tech companies take on record debt levels.

ByteDance Pledges IP Safeguards for Seedance After Disney Legal Threats

ByteDance acknowledged concerns over its AI video tool Seedance generating copyrighted characters and pledged to strengthen intellectual property protections. Disney led the legal pressure after the tool reproduced its characters without authorization.

TikTok Retains 95% of US Users After Ownership Transition

Sensor Tower data shows TikTok's daily active users held steady during and after the January 23 joint venture takeover involving Oracle, MGX, and Silver Lake. The findings counter predictions of a mass exodus following the ownership restructuring.

Kalshi's Sports Betting Revenue Hits $1.3 Billion Annualized

Prediction market platform Kalshi is generating an estimated $1.3 billion in annualized sports wagering revenue, according to the Financial Times. DraftKings and Flutter shares declined as investors reassess the competitive threat.

Silicon Valley Mobilizes to Shape California's Post-Newsom Political Future

Tech billionaires are opposing a proposed 5% tax and funding super PACs to install a friendly successor as Governor Newsom prepares to leave office. The campaign represents the industry's broadest political mobilization in California history.

Indian Startup C2i Raises $15M to Fix AI Data Center Power Bottleneck

Bengaluru-based C2i Semiconductors raised $15 million in a Series A led by Peak XV Partners to develop specialized grid-to-GPU power delivery systems. The investment signals growing recognition that power infrastructure, not compute alone, is the binding constraint on AI scaling.

🧰 AI Toolbox

How to Create Professional Video Clips from Text with Runway

Runway generates photorealistic video from text prompts and reference images. Its Gen 4.5 model understands physics, lighting, and human motion well enough to produce footage that passes for filmed content. A free account includes 125 credits, enough for several 10-second clips to test before committing.

Tutorial:

- Sign up free at runwayml.com and open the Generate page (125 credits included, no credit card)

- Select Gen 4.5 as your model and choose Text to Video or Image to Video

- Type a scene description ("A product designer sketching on a tablet in a sunlit studio, shallow depth of field, slow camera pan")

- Upload an optional reference image to lock in a character, setting, or visual style

- Set duration (5 or 10 seconds) and aspect ratio (16:9 for presentations, 9:16 for social)

- Hit Generate and wait about 90 seconds for your clip

- Extend the clip with another generation, remix it with a new prompt, or download and edit in your video tool of choice

URL: https://runwayml.com

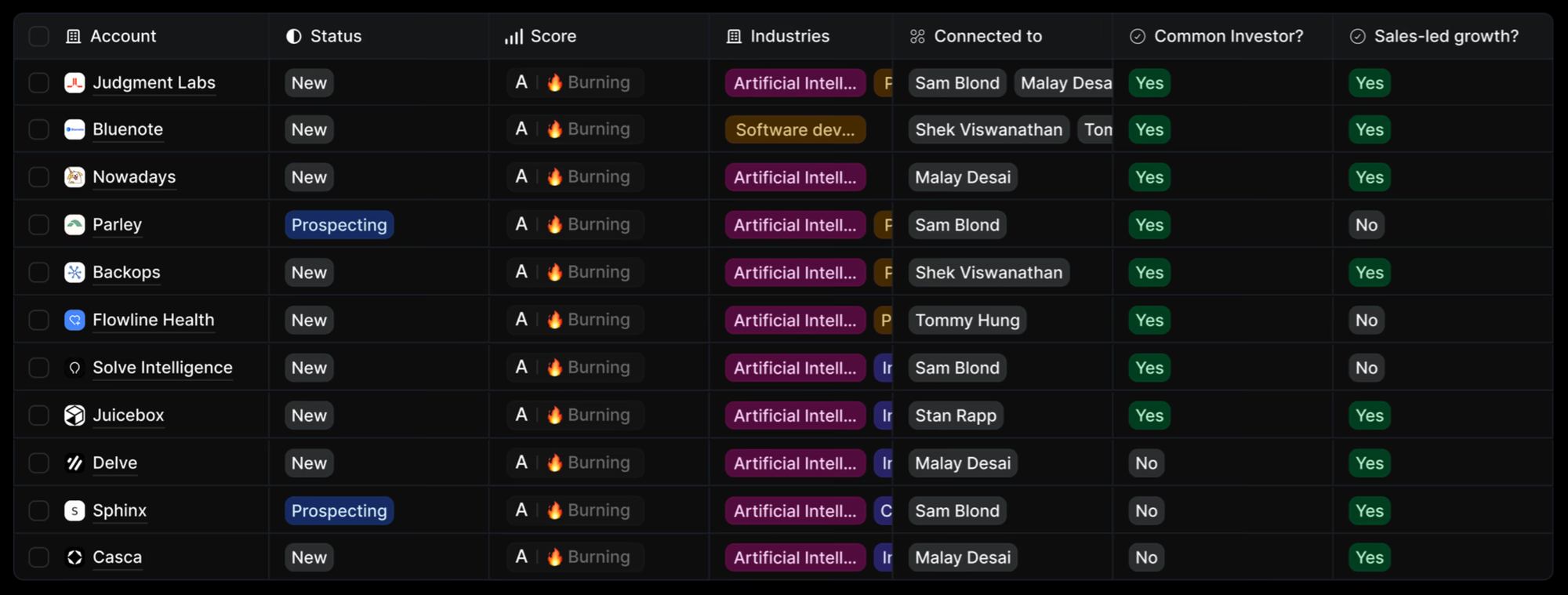

🚀 AI Profiles: The Companies Defining Tomorrow

Monaco

Monaco thinks your CRM should close deals, not just track them. The San Francisco startup launched from stealth this week with an AI-native sales platform that replaces the tool sprawl early-stage founders assemble for outbound sales. 💼

Founders

Sam Blond left a partnership at Founders Fund after 18 months because venture capital wasn't for him. Before that, he ran sales at Brex. He co-founded Monaco with his brother Brian, a partner at Human Capital, alongside Abishek Viswanathan (former CPO at Apollo and Qualtrics) and Malay Desai (former SVP of engineering at Clari). About 40 employees work from an office with motivational posters and a gong that rings every time the AI books a meeting.

Product

A single platform replacing the stack of disconnected tools startups cobble together for sales. Monaco automatically builds and scores a startup's total addressable market, identifies buyers using signals like job changes and network connections, generates tailored outreach, and tracks deals through conversion. The twist: Monaco also supplies experienced human salespeople who monitor the AI's work. Peter Thiel's take: "No product sells itself, though Monaco comes close."

Competition

Salesforce owns enterprise. HubSpot owns mid-market. Apollo and ZoomInfo control prospecting data. Y Combinator has graduated hundreds of AI sales startups in two years. Day.ai targets automated CRM with Sequoia backing. Monaco bundles the database, the automation, and the human expertise. The risk: competing on three fronts usually means winning on none.

Financing 💰

$35M total: $10M seed plus $25M Series A, both led by Founders Fund. Human Capital participated. Angels include Patrick and John Collison (Stripe), Y Combinator president Garry Tan, and Neil Mehta.

Future ⭐⭐⭐

Monaco has conviction and pedigree from Brex, Founders Fund, Apollo, Qualtrics, and Clari. The risk: selling to startups means your customers fail at a high rate, and the AI sales market is violently crowded. But when Peter Thiel endorses the product and the Collison brothers write checks, you get more than a fair hearing. 🎯

🔥 Yeah, But...

The Pentagon threatened this weekend to sever its relationship with Anthropic because the AI company insists on two restrictions: no mass surveillance and no fully autonomous weapons. Four AI labs are negotiating military access terms. Three apparently agreed to unrestricted use. Anthropic did not. The same week, the Wall Street Journal reported that Claude was used in the U.S. military operation that captured former Venezuelan President Nicolás Maduro.

Sources: Axios, February 14, 2026 | Reuters, February 15, 2026

Our take: The AI that helped capture a former president is not military enough. Anthropic drew exactly two red lines: no mass surveillance, no autonomous weapons. The Pentagon looked at those conditions and decided the entire relationship might need to end. Four labs sat at the table. Three nodded. One said "almost everything" and got the breakup talk. This is the relationship equivalent of your partner cooking dinner every night for a year, then you filing for separation because they won't make breakfast. A senior official told Axios "everything's on the table." Anthropic just raised $30 billion. Both sides have very different definitions of "table."