💡 TL;DR - The 30-Second Version

🤖 Web scraping now powers AI training data collection, with language models like ChatGPT depending on scraped text datasets at massive scale.

🐍 Python with Beautiful Soup and JavaScript with Cheerio remain the top beginner tools, while Scrapy handles enterprise-scale projects.

⚖️ In hiQ Labs v. LinkedIn, the 9th Circuit held that scraping publicly available data likely does not violate the CFAA, but private content behind login walls carries higher legal risk.

🛡️ Sites fight back with IP blocks and CAPTCHAs, requiring proxy rotation, 1-5 second delays, and headless browsers for JavaScript-heavy sites.

🚀 Success now demands both technical skills and strategic thinking about data collection ethics in the AI-powered economy.

Learn web scraping step by step with Python & JS examples, tool comparisons, legal tips, and visuals for fast learning.

Companies scrape websites to track competitor prices. Journalists use it to gather news. Marketers build lead lists. But here's what's changed: AI models now depend on scraped data for training.

Web scraping works like having a research assistant that never sleeps. You write code that visits websites, reads the HTML, pulls out what you need, and saves it. No more copying information by hand.

AI has raised the stakes. Machine learning models eat massive datasets. ChatGPT-style language models were trained largely on scraped text. Image recognition systems learned from millions of scraped photos. What started as simple data extraction now powers the AI revolution.

What Is Web Scraping?

Web scraping means using bots to pull content from websites. Most scrapers follow the same four steps:

- Crawling: Visit and discover URLs

- Parsing: Read and understand the HTML structure

- Extracting: Pull out specific data (product names, prices, text)

- Outputting: Save results to CSV, JSON, or a database
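The steps above can be sketched end to end with nothing but Python's standard library. This is a minimal illustration, not a production scraper: the HTML is a hypothetical inline sample (so the crawling step is replaced by a string), and the `ProductParser`, `scrape`, and `to_csv` names are made up for this example.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this in the crawl step.
SAMPLE_HTML = """
<html><body>
  <div class="product"><h2>Blue Mug</h2><span class="price">$12.50</span></div>
  <div class="product"><h2>Red Mug</h2><span class="price">$9.00</span></div>
</body></html>
"""

class ProductParser(HTMLParser):
    """Parsing + extracting: collect [name, price] rows from the sample markup."""

    def __init__(self):
        super().__init__()
        self.rows = []       # extracted [name, price] rows
        self._field = None   # which field the next text node belongs to

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._field = "name"
        elif tag == "span" and ("class", "price") in attrs:
            self._field = "price"

    def handle_data(self, data):
        text = data.strip()
        if not text or self._field is None:
            return
        if self._field == "name":
            self.rows.append([text, None])
        elif self._field == "price" and self.rows:
            self.rows[-1][1] = float(text.lstrip("$"))
        self._field = None

def scrape(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.rows

def to_csv(rows):
    """Outputting: serialize the rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["name", "price"])
    writer.writerows(rows)
    return buffer.getvalue()

rows = scrape(SAMPLE_HTML)
print(to_csv(rows))
```

In a real project the parsing step would more likely use Beautiful Soup, as in the tutorial below; the standard-library parser is used here only to keep the sketch dependency-free.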

People scrape for price monitoring (Amazon, Airbnb), competitor research, lead generation, news aggregation, real estate data, and social media trends. The biggest use case now? Training AI models.

Code Tutorial: Python & JavaScript

Time to build your first scraper. Python first, then JavaScript.

Python: requests + BeautifulSoup

    import requests
    from bs4 import BeautifulSoup

    url = 'https://example.com'
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'}

    # Fetch the page; fail fast on timeouts and HTTP error codes
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()

    # Parse the HTML with Python's built-in parser
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract all h2 titles
    for title in soup.find_all('h2'):
        print(title.text.strip())

Sample Output (illustrative, for a blog page with three h2 headings; example.com itself has none):

    Article 1: Getting Started with Web Scraping
    Article 2: Advanced Techniques for Data Extraction
    Article 3: Legal Considerations for Web Scraping

JavaScript: Node.js + Cheerio

    const axios = require('axios');
    const cheerio = require('cheerio');

    const headers = {
      'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
    };

    // Fetch the page, then parse the returned HTML with Cheerio
    axios.get('https://example.com', { headers })
      .then(response => {
        const $ = cheerio.load(response.data);
        // Print the trimmed text of every h2 heading
        $('h2').each((index, element) => {
          console.log($(element).text().trim());
        });
      })
      .catch(error => console.error('Error:', error));

Both scripts do the same thing: grab a webpage, parse the HTML, and extract h2 headings. The User-Agent header makes your request look like it came from a real browser, not a bot.

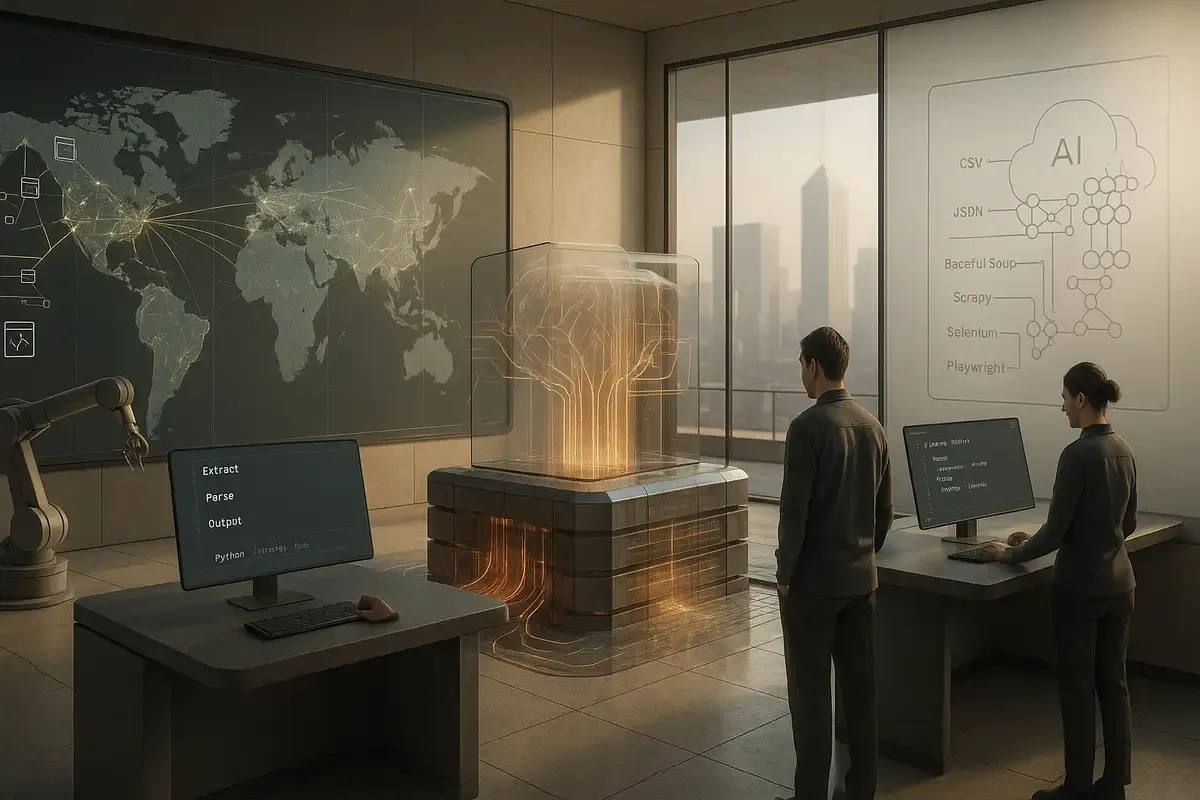

How Web Scraping Powers AI

Web scraping serves AI in four ways. First, it collects training data. Language models need huge text datasets. Computer vision models need millions of images. Few other methods gather data at this scale.

Second, it feeds real-time intelligence. AI systems make decisions based on fresh scraped data - competitor prices, news sentiment, social media trends. Stale data kills AI accuracy.

Third, it enriches content. AI models analyze scraped content to find insights, classify information, spot patterns, and create summaries. A marketing AI might scrape competitor sites to understand their positioning.

Fourth, it powers smart monitoring. AI-powered scrapers adapt to website changes, identify relevant content, and filter noise better than traditional rule-based scrapers.

Tools and Platforms: What Actually Works

Python tools dominate the scraping world. Beautiful Soup makes parsing HTML and XML simple with clean syntax. Scrapy handles large-scale projects with built-in pipelines, duplicate filtering, and concurrent requests. Selenium tackles JavaScript-heavy sites through browser automation, but runs slower and uses more resources.

Browser-based tools offer modern alternatives. Playwright is generally faster than Selenium and waits automatically for dynamic content to load. Puppeteer works well for Chrome automation and single-page applications.

No-code platforms help non-programmers. Octoparse uses point-and-click scraping with a visual interface. ParseHub runs in the cloud with machine learning features for complex sites. Apify offers comprehensive scraping and automation tools.

Enterprise solutions handle scale and complexity. Bright Data provides residential proxies for large-scale scraping. ScrapingBee offers API-based scraping as a service. Zyte (formerly Scrapinghub) delivers professional-grade scraping platforms.

Here's what works for different scenarios:

| Tool | Best For | Learning Curve | Key Advantage |
|---|---|---|---|
| Beautiful Soup | Beginners, simple HTML | Easy | Clean, readable code |
| Scrapy | Large-scale projects | Moderate | Built-in pipelines, speed |
| Selenium | JavaScript sites | Moderate | Real browser behavior |
| Playwright | Modern web apps | Moderate | Better performance than Selenium |
| Octoparse | Non-programmers | Easy | Visual interface |
| Bright Data | Enterprise scale | Hard | Residential proxy network |

Anti-Blocking Strategies That Actually Work

Sites fight back against scrapers. They block IPs, serve fake data, or throw CAPTCHAs at you. Here's how to stay undetected.

Rotate your IP addresses using proxy pools. Free proxies rarely work. Paid residential proxies from providers like Bright Data or Oxylabs perform better because they look like real users. Data center proxies cost less but are detected more easily.
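A simple way to rotate through a pool is round-robin selection. The sketch below assumes three hypothetical proxy endpoints (the `example.net` URLs are placeholders, not real services) and yields dictionaries in the shape that the `requests` library accepts for its `proxies` argument.

```python
import itertools

# Hypothetical proxy endpoints; substitute the URLs your provider gives you.
PROXY_POOL = [
    "http://proxy-a.example.net:8000",
    "http://proxy-b.example.net:8000",
    "http://proxy-c.example.net:8000",
]

def proxy_rotation(pool):
    """Yield a requests-style proxies dict for each request, round-robin."""
    for proxy_url in itertools.cycle(pool):
        yield {"http": proxy_url, "https": proxy_url}

rotation = proxy_rotation(PROXY_POOL)
first_four = [next(rotation)["http"] for _ in range(4)]
print(first_four)  # the fourth entry wraps around to the first proxy
```

With `requests`, each call would then pass `proxies=next(rotation)`. Paid providers often handle rotation on their side behind a single gateway URL, in which case a loop like this is unnecessary.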

Switch User-Agent headers to mimic different browsers and devices. Never use the same User-Agent for every request. Build a list of realistic headers and pick from it at random.
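As a rough sketch of that idea, the helper below picks a User-Agent at random for each request. The three strings are illustrative examples of the usual browser format, and `random_headers` is a name invented for this example.

```python
import random

# Illustrative User-Agent strings; keep your own list current with real browser releases.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0",
]

def random_headers(rng=random):
    """Build request headers with a randomly chosen User-Agent."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
    }

headers = random_headers()
print(headers["User-Agent"])
```

The resulting dictionary can be passed as `headers=` to `requests.get`, exactly like the fixed header in the tutorial script.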

Space out your requests. Hitting a server with 100 requests per second screams "bot." Add random delays of 1-5 seconds between requests. Some sites track request patterns, so vary your timing.
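One way to add that jitter is a small helper that sleeps for a random interval before each request. The `polite_pause` name and its injectable `sleep` parameter are assumptions made for this sketch; the demo loop passes a no-op sleep so it finishes instantly.

```python
import random
import time

def polite_pause(min_seconds=1.0, max_seconds=5.0, sleep=time.sleep, rng=random):
    """Sleep for a random duration in [min_seconds, max_seconds] and return it."""
    delay = rng.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay

# Demo with a no-op sleep so the example finishes instantly.
urls = ["https://example.com/page/1", "https://example.com/page/2"]
for url in urls:
    waited = polite_pause(sleep=lambda seconds: None)
    print(f"would fetch {url} after waiting {waited:.2f}s")
```

In a real scraper you would call `polite_pause()` with its defaults between requests so that the process actually waits.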

Use headless browsers for JavaScript-heavy sites. Puppeteer or Playwright can render pages like real browsers, execute JavaScript, and handle dynamic content. The tradeoff? Speed and resource usage.

Handle CAPTCHAs programmatically. Services like 2Captcha or Anti-Captcha solve them automatically, though this adds cost and complexity.

Watch your success rate. If you start getting blocked frequently, slow down or rotate your approach. Some sites use progressive blocking - they get stricter as they detect more bot-like behavior.
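Tracking the success rate can be as simple as a sliding window over recent outcomes. The sketch below is one possible design, not a standard recipe: the `SuccessMonitor` class, the 80% threshold, and the "double the delay" response are all assumptions chosen for illustration.

```python
from collections import deque

class SuccessMonitor:
    """Track recent request outcomes and suggest a delay multiplier."""

    def __init__(self, window=20, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # True = success, False = blocked or failed
        self.threshold = threshold

    def record(self, ok):
        self.outcomes.append(bool(ok))

    def success_rate(self):
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def delay_multiplier(self):
        """Return 1.0 while healthy; 2.0 once too many recent requests fail."""
        return 1.0 if self.success_rate() >= self.threshold else 2.0

monitor = SuccessMonitor(window=10, threshold=0.8)
for ok in [True] * 6 + [False] * 4:
    monitor.record(ok)
print(monitor.success_rate(), monitor.delay_multiplier())
```

A scraper loop would call `record()` after every response and multiply its base delay by `delay_multiplier()` before the next request.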

Legal Considerations: The Gray Zone

Web scraping sits in a legal gray area. U.S. courts have ruled inconsistently, creating confusion about what's allowed.

The hiQ Labs v. LinkedIn case gives the clearest guidance. The 9th Circuit Court held that scraping publicly available data likely doesn't violate the Computer Fraud and Abuse Act (CFAA), and it upheld a preliminary injunction that stopped LinkedIn from blocking hiQ's access to public profiles, even after LinkedIn sent a cease-and-desist letter.

But the ruling has limits. It only applies to public data, and it addresses the CFAA rather than contract or copyright claims; the district court later found that hiQ had breached LinkedIn's user agreement. Scraping behind login walls or accessing private information carries more legal risk. The CFAA remains vague about private data scraping.

Best practices for legal compliance:

Check the site's robots.txt file first. It's usually at domain.com/robots.txt and tells you what the site allows. Respect these guidelines, even though they're not legally binding.
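Python's standard library can do this check for you. The sketch below parses a hypothetical robots.txt from an inline string (a real check would fetch the file from the target site), and `example-research-bot` is a made-up bot name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would download it from the target site.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

BOT_NAME = "example-research-bot"  # hypothetical bot name
print(parser.can_fetch(BOT_NAME, "https://example.com/articles/1"))
print(parser.can_fetch(BOT_NAME, "https://example.com/private/data"))
print(parser.crawl_delay(BOT_NAME))
```

To read a live file instead, `RobotFileParser` also offers `set_url()` followed by `read()`.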

Don't hammer servers with requests. Rate limiting shows respect for the site's resources and reduces your legal exposure. One request per second is a common conservative default, though smaller sites may need a slower pace.

Avoid scraping behind login walls. Public data carries less legal risk than private or user-generated content that requires authentication.

Read the site's terms of service, though courts have been inconsistent about enforcing anti-scraping clauses against bots that don't agree to terms.

Identify your bot clearly in the User-Agent header. Some sites prefer knowing who's scraping them rather than dealing with mysterious traffic.

Don't republish copyrighted content without permission. Scraping for analysis or research generally receives more legal protection than republishing scraped content commercially.

Advanced Techniques: Handling Complex Sites

Modern websites fight scrapers with sophisticated defenses. Here's how to handle them.

JavaScript rendering poses the biggest challenge. Single-page applications load content dynamically, so a plain HTTP request often returns a nearly empty HTML shell. Use Selenium, Puppeteer, or Playwright to render JavaScript before scraping.
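With Playwright's Python sync API, rendering a page before parsing might look like the sketch below. It assumes Playwright and a Chromium build are installed (`pip install playwright` followed by `playwright install chromium`); the `render_html` helper and its default selector are illustrative choices, not part of the library.

```python
def render_html(url, wait_for="h2", timeout_ms=15000):
    """Return the page HTML after JavaScript has run (requires Playwright)."""
    # Imported lazily so this module still loads where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, timeout=timeout_ms)
            # Block until the dynamically loaded element exists in the DOM
            page.wait_for_selector(wait_for, timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

The returned HTML string can be handed to Beautiful Soup exactly like `response.text` in the tutorial script, for example `BeautifulSoup(render_html(url), 'html.parser')`.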

Session management matters for sites that track user behavior. Maintain cookies across requests and handle login flows properly. Some sites require you to browse normally before accessing target pages.

Content delivery networks (CDNs) can serve different content based on geographic location. Use proxies from specific regions to access location-specific data.

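Rate limiting varies by site. Monitor response codes and adjust your request frequency. A 429 (Too Many Requests) status code means you're going too fast, and the response may include a Retry-After header telling you how long to wait. A 403 (Forbidden) might mean you're blocked.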
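The reaction to those status codes can be captured in a small decision function. This is a sketch under stated assumptions: `next_delay` is a hypothetical helper, it only understands the numeric-seconds form of `Retry-After` (the header may also carry an HTTP date), and treating every 403 as "stop" is a deliberately cautious policy.

```python
def next_delay(status_code, retry_after=None, current_delay=1.0, max_delay=60.0):
    """Return the delay in seconds before the next request, or None to stop."""
    if status_code == 403:
        # Likely blocked: retrying at the same pace rarely helps.
        return None
    if status_code == 429:
        # Prefer the server's Retry-After hint when it is a number of seconds.
        if retry_after is not None and retry_after.strip().isdigit():
            return min(float(retry_after), max_delay)
        # Otherwise back off exponentially, capped at max_delay.
        return min(current_delay * 2, max_delay)
    return current_delay

print(next_delay(200))                      # normal response: keep the current pace
print(next_delay(429, retry_after="30"))    # server asked for a 30 second wait
print(next_delay(429, current_delay=4.0))   # no hint: double the delay
print(next_delay(403))                      # blocked: stop and reassess
```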

Data validation keeps garbage out of your dataset. Websites sometimes serve fake data to bots. Cross-reference scraped data with known values to detect honeypots or manipulated content.
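A basic version of that cross-check compares each record against a few values you already trust. In this sketch the record shape (`name`, `price`), the `validate_record` helper, and the 50% tolerance are all assumptions for illustration.

```python
def validate_record(record, known_prices=None, tolerance=0.5):
    """Return a list of problems found in one scraped record."""
    problems = []
    name = record.get("name")
    price = record.get("price")
    if not name or not name.strip():
        problems.append("missing name")
    if not isinstance(price, (int, float)) or price <= 0:
        problems.append("invalid price")
    elif known_prices and name in known_prices:
        # Flag prices that deviate from the trusted value by more than the tolerance.
        expected = known_prices[name]
        if abs(price - expected) > tolerance * expected:
            problems.append("price far from known value")
    return problems

known = {"Blue Mug": 12.50}  # hypothetical reference value checked by hand
print(validate_record({"name": "Blue Mug", "price": 12.00}, known))
print(validate_record({"name": "Blue Mug", "price": 999.0}, known))
print(validate_record({"name": "", "price": -1}, known))
```

A sudden rise in flagged records for a site is a reasonable signal that it has started serving altered content to your scraper.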

Integration with AI Workflows

Web scraping feeds AI pipelines in several ways. For training data, you'll need massive, clean datasets. Plan for data preprocessing, deduplication, and quality filtering. Raw scraped data rarely works directly for model training.
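A first pass at deduplication and quality filtering can be quite small. The sketch below removes exact duplicates after whitespace and case normalization and drops very short documents; the `clean_corpus` helper and the five-word minimum are arbitrary illustrative choices, and real pipelines usually add near-duplicate detection on top.

```python
import hashlib
import re

def normalize(text):
    """Collapse whitespace and lowercase so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text).strip().lower()

def clean_corpus(documents, min_words=5):
    """Drop short documents and exact duplicates (after normalization)."""
    seen = set()
    kept = []
    for doc in documents:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # quality filter: too short to be useful
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # deduplication: this text was already kept
        seen.add(digest)
        kept.append(doc.strip())
    return kept

docs = [
    "Web scraping collects data from public pages.",
    "  web scraping   collects data from public pages. ",
    "Too short.",
    "Clean datasets make model training far more reliable.",
]
print(clean_corpus(docs))
```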

For real-time applications, build scraping pipelines that update regularly. Use message queues like RabbitMQ or Apache Kafka to handle data flow between scrapers and AI models.
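The producer/consumer shape of such a pipeline can be shown with Python's built-in `queue.Queue`. To be clear, this is an in-process stand-in and does not use the RabbitMQ or Kafka client APIs; the `scraper` and `model_worker` functions and the message fields are hypothetical.

```python
import queue
import threading

# In-process stand-in for a broker such as RabbitMQ or Kafka (not their client APIs).
page_queue = queue.Queue(maxsize=100)
STOP = object()  # sentinel that tells the consumer to finish

def scraper(urls):
    """Producer: push one message per scraped page."""
    for url in urls:
        page_queue.put({"url": url, "text": f"content of {url}"})  # placeholder payload
    page_queue.put(STOP)

def model_worker(results):
    """Consumer: pull messages and hand them to a placeholder model step."""
    while True:
        message = page_queue.get()
        if message is STOP:
            break
        results.append(len(message["text"]))  # stand-in for real inference

results = []
urls = ["https://example.com/a", "https://example.com/b"]
producer = threading.Thread(target=scraper, args=(urls,))
consumer = threading.Thread(target=model_worker, args=(results,))
producer.start()
consumer.start()
producer.join()
consumer.join()
print(results)
```

A real broker adds what this sketch lacks: persistence, delivery across machines, and the ability to replay or fan out messages to several consumers.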

Storage matters at scale. A single traditional database often struggles with the volume of raw pages. Consider data lakes using AWS S3 or Google Cloud Storage for raw data, with preprocessing pipelines that clean and structure data for AI consumption.

Monitor data quality continuously. AI models trained on bad data produce bad results. Build validation checks into your scraping pipeline to catch content changes, missing data, or formatting issues.

Why this matters:

• Web scraping has evolved from simple data extraction to the backbone of AI training data, making it essential for anyone working with machine learning models.

• The combination of legal gray areas and sophisticated anti-blocking measures means success requires both technical skill and strategic thinking about what data to collect and how to collect it ethically.

❓ Frequently Asked Questions

Q: How much does web scraping cost to run?

A: Basic scraping costs $0-50/month using free tools and data center proxies. Professional setups run $200-2000/month for residential proxies from Bright Data or Oxylabs. Enterprise solutions with dedicated infrastructure can cost $5000+/month depending on volume and complexity.

Q: How long does it take to learn web scraping?

A: Complete beginners need 2-4 weeks to build basic scrapers with Python and Beautiful Soup. Advanced techniques like handling JavaScript sites take 2-3 months. Mastering enterprise-scale scraping with anti-blocking measures requires 6-12 months of experience.

Q: How often do scrapers get blocked?

A: Basic scrapers get blocked 60-80% of the time on major sites like Amazon or LinkedIn. Professional scrapers using proxy rotation and proper delays achieve 85-95% success rates. E-commerce sites have the strictest blocking, while news sites are more permissive.

Q: Can I scrape without coding skills?

A: Yes, but with limits. Tools like Octoparse or ParseHub handle simple scraping through point-and-click interfaces. They cost $75-400/month and work for basic tasks. Complex sites with JavaScript or anti-blocking measures still require coding skills.

Q: What happens if I get caught scraping illegally?

A: Most cases result in cease-and-desist letters or IP blocks, not lawsuits. Violating terms of service typically leads to account bans. Criminal charges under the CFAA are rare and usually involve accessing private data or causing server damage.

Q: How much data can I scrape per day?

A: A single scraper can collect 10,000-100,000 pages daily with proper rate limiting. Professional setups with proxy rotation handle 1-10 million pages daily. Storage costs about $0.02-0.05 per GB per month on cloud platforms like AWS or Google Cloud.
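A back-of-the-envelope calculation shows where page counts like these come from. The sketch assumes each worker makes one request per delay interval and ignores download time, retries, and blocks, so treat the results as upper bounds; the `pages_per_day` helper and the 50-worker figure are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

def pages_per_day(avg_delay_seconds, workers=1):
    """Upper bound on pages/day if each worker makes one request per delay interval."""
    return int(SECONDS_PER_DAY / avg_delay_seconds) * workers

print(pages_per_day(1.0))               # one request per second
print(pages_per_day(3.0))               # midpoint of a 1-5 second random delay
print(pages_per_day(3.0, workers=50))   # 50 hypothetical proxy-backed workers
```

One request per second tops out at 86,400 pages a day, which sits inside the single-scraper range above, while 50 parallel workers at a 3 second average delay reach about 1.4 million.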

Q: When should I use Selenium vs Beautiful Soup?

A: Use Beautiful Soup for static HTML sites - it's much faster and uses less memory because it never launches a browser. Choose Selenium for sites that load content with JavaScript, but expect roughly 5-10x slower performance and higher server costs due to browser overhead.

Q: What's the difference between residential and data center proxies?

A: Residential proxies use real home IP addresses and cost $3-15 per GB but rarely get blocked. Data center proxies cost $0.10-1.00 per GB but get detected easily. Residential proxies are 95% more effective for scraping protected sites.