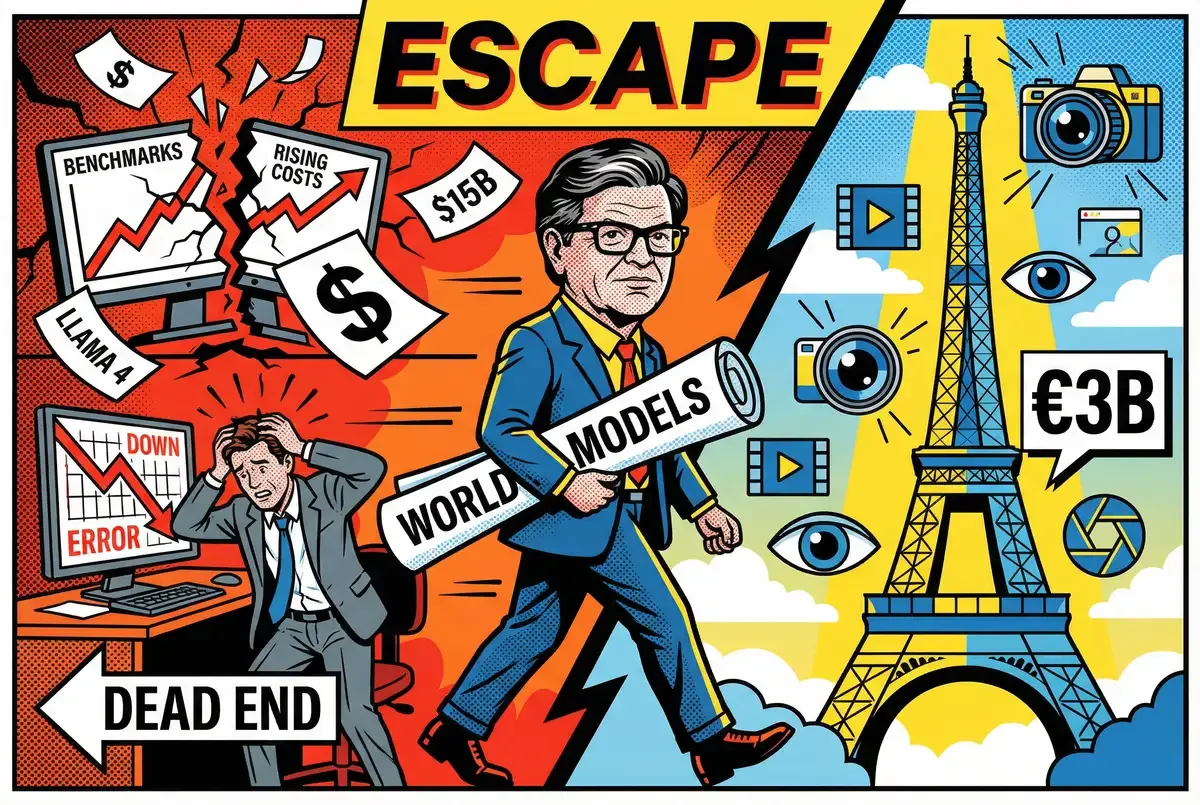

When Yann LeCun sat down with the Financial Times' Melissa Heikkilä at the Paris restaurant Pavyllon in December, the prevailing story was that he was leaving Meta to pursue new research opportunities. The three-hour conversation that emerged this week tells a different story: organizational dysfunction, fudged benchmark results, and a fundamental disagreement about whether large language models can ever achieve the intelligence Meta claims to be chasing.

This is an analysis of that interview and of the broader pattern it reveals: how Silicon Valley's obsession with language model scaling is breaking research organizations that should know better.

Key Takeaways

• LeCun admitted Meta fudged Llama 4 benchmarks by using different model variants for different tests, leading Zuckerberg to sideline the entire GenAI organization

• Meta paid $15 billion for a Scale AI stake and hired its 28-year-old CEO to lead research, forcing LeCun to report to him

• LeCun argues that by age four a child has taken in roughly 50 times more data through vision than an LLM learns from all internet text, making language models a mathematical dead end

• AMI Labs launched in Paris seeking a €3 billion valuation; LeCun says breakthrough research must happen outside Silicon Valley's LLM groupthink

The Admission That Changes the Story

LeCun's account to the FT included a detail Meta would rather have kept internal: Llama 4's benchmark results were manipulated. "The results were fudged a little bit," he said, explaining that engineers used different model variants for different benchmarks to optimize scores rather than demonstrate genuine capability.

Mark Zuckerberg's response was swift. According to LeCun, the CEO "basically lost confidence in everyone who was involved in this" and "sidelined the entire GenAI organisation." LeCun told the FT that "a lot of people have left, a lot of people who haven't yet left will leave."

Get Implicator.ai in your inbox

Strategic AI news from San Francisco. No hype, no "AI will change everything" throat clearing. Just what moved, who won, and why it matters. Daily at 6am PST.

No spam. Unsubscribe anytime.

This wasn't just a product failure. ChatGPT's November 2022 release caught Meta flat-footed. Leadership panicked. The company reorganized around a generative AI unit. Llama 2 shipped. Then Llama 3. Meta positioned itself as the open-source champion, the good guys fighting OpenAI's closed approach.

All of that momentum led to April 2025's Llama 4. Strong benchmark scores. Widespread criticism for real-world performance. Independent reporting documented the gaming LeCun described—different model variants cherry-picked for different tests.

You might look at Llama 3's download numbers and ecosystem adoption and conclude Meta executed well. That's what distribution, branding, and ecosystem gravity can create. The benchmark gaming reveals something else: score optimization decoupled entirely from capability development.

The $15 Billion Panic Response

Meta's response to the Llama 4 failure reveals how cornered leadership felt. In June 2025, the company paid roughly $15 billion for a major stake in Scale AI, the data-labeling startup, according to Reuters reporting. Simultaneously, Meta hired Scale's 28-year-old CEO Alexandr Wang to lead a new research unit called TBD Lab, tasked with developing frontier AI models.

The company also launched aggressive recruiting raids, reportedly offering $100 million sign-on bonuses to elite researchers at competitors.

In my view, healthy research organizations don't respond to setbacks by acquiring major stakes in startups for $15 billion. These are the moves of a company that feels the ground dissolving under its strategic bets.

The Hierarchy That Forced the Exit

Wang's appointment created a jarring org-chart reversal. LeCun—Turing Award winner, inventor of convolutional neural networks, co-founder of the deep learning revolution—now reported to someone whose primary experience involved labeling training data. Someone with, as LeCun put it to the FT, "no experience with research or how you practice research, how you do it."

This is a startling inversion of status in any research organization. The architect of the field sitting in meetings while someone half his age explains the roadmap for technologies that person didn't help create and doesn't fully understand.

When the FT pressed LeCun on this reporting structure, his response was careful but pointed: "You don't tell a researcher what to do. You certainly don't tell a researcher like me what to do."

But the deeper tension wasn't hierarchical—it was philosophical. Wang represented Meta's strategic commitment to scaling language models. LeCun considers that paradigm fundamentally misguided. Having someone embody that approach in a management role made staying impossible.

"I'm sure there's a lot of people at Meta, including perhaps Alex, who would like me to not tell the world that LLMs basically are a dead end when it comes to superintelligence," LeCun told the FT. "But I'm not gonna change my mind because some dude thinks I'm wrong. I'm not wrong. My integrity as a scientist cannot allow me to do this."

Why LeCun Believes Language Models Hit a Ceiling

LeCun's critique of large language models extends beyond Meta's specific failures. His argument, articulated in public talks and technical papers over the past several years, is mathematical: language provides fundamentally insufficient bandwidth for developing genuine intelligence.

In his Lex Fridman podcast appearance, LeCun calculated that a typical LLM training set, roughly 2 × 10^13 bytes covering essentially all public internet text, would take a human 170,000 years to read. A four-year-old child receives approximately 10^15 bytes through visual input alone. By age four, then, that kid has taken in about fifty times more raw data than an LLM extracts from humanity's entire written corpus (10^15 ÷ (2 × 10^13) = 50). Fifty times.
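The arithmetic behind that comparison fits in a few lines. Treat this as a hedged back-of-the-envelope sketch: the token count, bytes per token, words per token, reading speed, waking hours, and optic-nerve bandwidth are assumptions based on figures LeCun has cited publicly, not values stated in the FT interview, and the constant names are illustrative.

```python
# Back-of-the-envelope version of LeCun's data-volume argument.
# Every constant is an assumption based on figures LeCun has cited publicly.

LLM_TOKENS = 1e13            # assumed: roughly all public internet text
BYTES_PER_TOKEN = 2          # assumed
WORDS_PER_TOKEN = 0.75       # assumed
READING_WPM = 250            # assumed human reading speed, words per minute
READING_HOURS_PER_DAY = 8    # assumed

WAKING_HOURS_BY_AGE_FOUR = 16_000  # assumed
OPTIC_NERVE_BYTES_PER_SEC = 2e7    # assumed: about 20 MB/s

llm_bytes = LLM_TOKENS * BYTES_PER_TOKEN  # 2e13, matching the figure in the text

# Years a human would need to read the same corpus.
words_per_year = READING_WPM * 60 * READING_HOURS_PER_DAY * 365
reading_years = LLM_TOKENS * WORDS_PER_TOKEN / words_per_year

# Raw visual input reaching a four-year-old's brain.
child_bytes = WAKING_HOURS_BY_AGE_FOUR * 3600 * OPTIC_NERVE_BYTES_PER_SEC

ratio = child_bytes / llm_bytes

print(f"reading time: {reading_years:,.0f} years")       # reading time: 171,233 years
print(f"child visual input: {child_bytes:.2e} bytes")    # child visual input: 1.15e+15 bytes
print(f"child / LLM: {ratio:.1f}x")                      # child / LLM: 57.6x
```

Under these assumptions the child figure comes out near 1.15 × 10^15 bytes; rounding it to 10^15, as LeCun does, gives the quoted factor of fifty.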

The implications run deeper. Training an LLM is like trying to learn carpentry by reading every book about wood. You never touch a hammer. You get the vocabulary, sure. You don't get the physics. LeCun's bet is simple: you have to swing the hammer.

This explains persistent gaps in AI capabilities. Teenagers learn to drive in about 20 hours of practice. Children can clear a table on the first try. Housecats navigate complex three-dimensional environments with ease. Yet AI systems trained on trillions of tokens struggle with all of these tasks despite billions in research investment.

During the FT lunch, LeCun illustrated his alternative approach with a concrete example. When he pinches someone, they feel pain. Their mental model updates: the next time he moves his arm toward them, they recoil. That prediction and the emotion it evokes constitute genuine understanding of cause and effect. LLMs have no equivalent mechanism. They predict linguistic tokens based on statistical patterns, not causal models of how actions produce consequences.

His world model architecture, Joint Embedding Predictive Architecture or JEPA, addresses these limitations by training on video and spatial data to develop physics-based understanding. Rather than predicting the next token or every pixel, the system learns abstract representations of action-relevant information and makes its predictions in that representation space. The broader architecture LeCun has proposed around it also calls for persistent memory that evolves with experience rather than resetting with each conversation.
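To make the contrast with token-level prediction concrete, here is a toy sketch of a JEPA-style objective in plain Python. It is an illustration under stated assumptions, not Meta's V-JEPA code: the linear "encoders", the dimensions, and the names `jepa_loss` and `ema_update` are hypothetical, there is no training loop, and real systems use deep networks over masked video patches. What it does show is the core idea: the error is measured between a predicted embedding and the embedding of the hidden input, and the target encoder follows the context encoder by exponential moving average.

```python
import random

Vector = list[float]
Matrix = list[list[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a weight matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights standing in for a trained encoder."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def jepa_loss(context_enc: Matrix, target_enc: Matrix, predictor: Matrix,
              x_context: Vector, x_target: Vector) -> float:
    """Mean squared error between predicted and actual embeddings, not raw inputs."""
    s_context = matvec(context_enc, x_context)  # embed what the model can see
    s_target = matvec(target_enc, x_target)     # embed what it must predict (stop-gradient in real training)
    s_pred = matvec(predictor, s_context)       # predict the target embedding from the context embedding
    return sum((p - t) ** 2 for p, t in zip(s_pred, s_target)) / len(s_target)


def ema_update(target_enc: Matrix, context_enc: Matrix, momentum: float = 0.99) -> Matrix:
    """Move each target-encoder weight a small step toward the context encoder."""
    return [[momentum * t + (1.0 - momentum) * c for t, c in zip(t_row, c_row)]
            for t_row, c_row in zip(target_enc, context_enc)]


# Hypothetical usage: a 64-value "frame" is compressed to an 8-value embedding.
rng = random.Random(0)
context_enc = random_matrix(8, 64, rng)
target_enc = [row[:] for row in context_enc]  # target starts as a copy
predictor = random_matrix(8, 8, rng)

frame_now = [rng.random() for _ in range(64)]
frame_next = [x + rng.gauss(0.0, 0.01) for x in frame_now]  # slightly changed next frame

loss = jepa_loss(context_enc, target_enc, predictor, frame_now, frame_next)
# A no-op here, since no training step has changed the context encoder yet.
target_enc = ema_update(target_enc, context_enc)
print(f"embedding-space loss: {loss:.4f}")
```

The loss compares 8 numbers rather than 64, which is the design point: in a trained system, the model is pushed to predict the abstract state of what comes next rather than reproduce every unpredictable detail of the input.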

LeCun's timeline, per the FT interview: baby versions within 12 months, larger deployments in a few years.

Advanced Machine Intelligence Labs: The Geographic Statement

AMI Labs, the Paris-based startup LeCun announced in December, represents his calculated exit. The company is seeking a €3 billion valuation while raising approximately €500 million in initial funding, according to FT reporting—extraordinary figures for a pre-product venture, but reflective of LeCun's track record.

The geographic choice was deliberate. "Silicon Valley is completely hypnotized by generative models," LeCun explained to the FT, "and so you have to do this kind of work outside of Silicon Valley, in Paris."

Alexandre LeBrun, founder of medical AI startup Nabla, takes the CEO role while LeCun serves as executive chairman. The Nabla connection gives AMI Labs an immediate application domain in healthcare rather than a purely speculative timeline.

But AMI Labs matters beyond one startup. LeCun's departure signals that the post-ChatGPT consensus around LLM scaling is fragmenting. Even Ilya Sutskever—OpenAI's former chief scientist, the architect of the scaling paradigm—suggested something striking in a November 2025 interview with Dwarkesh Patel. The field is moving from an "age of scaling" toward an "age of research," he said. Computational scale alone produces diminishing returns. When the person who built the paradigm says this, it matters.

What the Exit Reveals

Meta's AI strategy now centers on competing with OpenAI using the same architectural approach one of OpenAI's co-founders says has reached its limits. The company's most prominent AI researcher believes this approach cannot achieve the intelligence Meta claims to be pursuing. The team responsible for Meta's flagship model, according to LeCun's FT account, produced results so unreliable that leadership lost confidence in the entire organization.

Whether world models prove superior remains uncertain. LeCun puts animal-level AI at five to seven years out. Human-level within a decade. But consider his track record. Convolutional neural networks, the architecture behind the ImageNet breakthrough that kicked off the modern era. FAIR's influence on everything that followed.

The man who helped build the current paradigm is now betting everything that transcending it requires fundamentally different approaches. Not incremental improvements. Different architectures entirely.

The FT interview captures that reality with unusual candor. Fudged results. Organizational dysfunction. The departure of a researcher who refused to validate an approach he considers scientifically indefensible.

Meta's scaling bet, on this account, is a strategic dead end, dressed up in benchmark scores and $100 million bonuses.

❓ Frequently Asked Questions

Q: What exactly is JEPA and how does it differ from language models?

A: Joint Embedding Predictive Architecture learns from video and spatial data instead of text, building internal models of how the physical world works. Unlike LLMs that predict the next word, JEPA predicts future states of environments and can plan action sequences. Meta's V-JEPA 2 trained on over 1 million hours of video to understand motion and physics.

Q: Why did LeCun stay at Meta for 12 years if he disagreed with their LLM approach?

A: Meta only pivoted hard to LLMs after ChatGPT's November 2022 launch. Before that, LeCun ran FAIR as a fundamental research lab with freedom to pursue world models. The shift came in 2023-2024 when Meta reorganized around generative AI and eventually put the Scale AI CEO in charge of frontier model development.

Q: How does AMI Labs' €3 billion valuation compare to other AI research startups?

A: It's large for a company that hasn't shipped a product, but modest next to other founder-led AI ventures. Ilya Sutskever's Safe Superintelligence reached a $32 billion valuation before shipping products. Mira Murati's Thinking Machines launched at $12 billion. These valuations reflect investor bets on specific technical visions and founder track records rather than current revenue.

Q: What does "fudging benchmarks" actually mean in Llama 4's case?

A: Meta used different model variants for different benchmarks instead of testing one consistent model across all evaluations. This let them cherry-pick the best-performing version for each test, inflating overall scores. The practice made Llama 4 look stronger on paper than it performed in real-world applications.
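A small sketch shows why reporting each benchmark from whichever variant scored best inflates the headline number. The variant names and scores below are invented for illustration and are not Llama 4 results; the functions are hypothetical helpers, not part of any real evaluation harness.

```python
# Invented scores for illustration only: model variant -> benchmark -> score.
SCORES = {
    "variant_a": {"bench_1": 71.0, "bench_2": 60.0, "bench_3": 55.0},
    "variant_b": {"bench_1": 64.0, "bench_2": 68.0, "bench_3": 57.0},
    "variant_c": {"bench_1": 66.0, "bench_2": 61.0, "bench_3": 63.0},
}


def mean(report: dict[str, float]) -> float:
    """Average score across all benchmarks in a report."""
    return sum(report.values()) / len(report)


def single_model_report(scores: dict, variant: str) -> dict[str, float]:
    """Consistent evaluation: every benchmark uses the same model."""
    return dict(scores[variant])


def cherry_picked_report(scores: dict) -> dict[str, float]:
    """Best score per benchmark, taken from whichever variant won it."""
    benchmarks = next(iter(scores.values())).keys()
    return {b: max(per_variant[b] for per_variant in scores.values()) for b in benchmarks}


best_variant = max(SCORES, key=lambda v: mean(single_model_report(SCORES, v)))
honest = mean(single_model_report(SCORES, best_variant))
inflated = mean(cherry_picked_report(SCORES))

print(f"best single model ({best_variant}): {honest:.2f}")  # best single model (variant_c): 63.33
print(f"cherry-picked composite: {inflated:.2f}")            # cherry-picked composite: 67.33
```

Because each composite entry is a per-benchmark maximum, the cherry-picked average can never fall below the best single model's average, and unless one variant wins every benchmark, no single model actually achieves it.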

Q: What happens to FAIR, the research lab LeCun founded at Meta?

A: FAIR still exists but has been overshadowed by TBD Lab, the new frontier model unit led by Alexandr Wang. After Llama 4's failure and the sidelining of the GenAI organization, Meta's research priorities shifted toward the new unit focused on competing directly with OpenAI's approach.