💡 TL;DR - The 30-Second Version

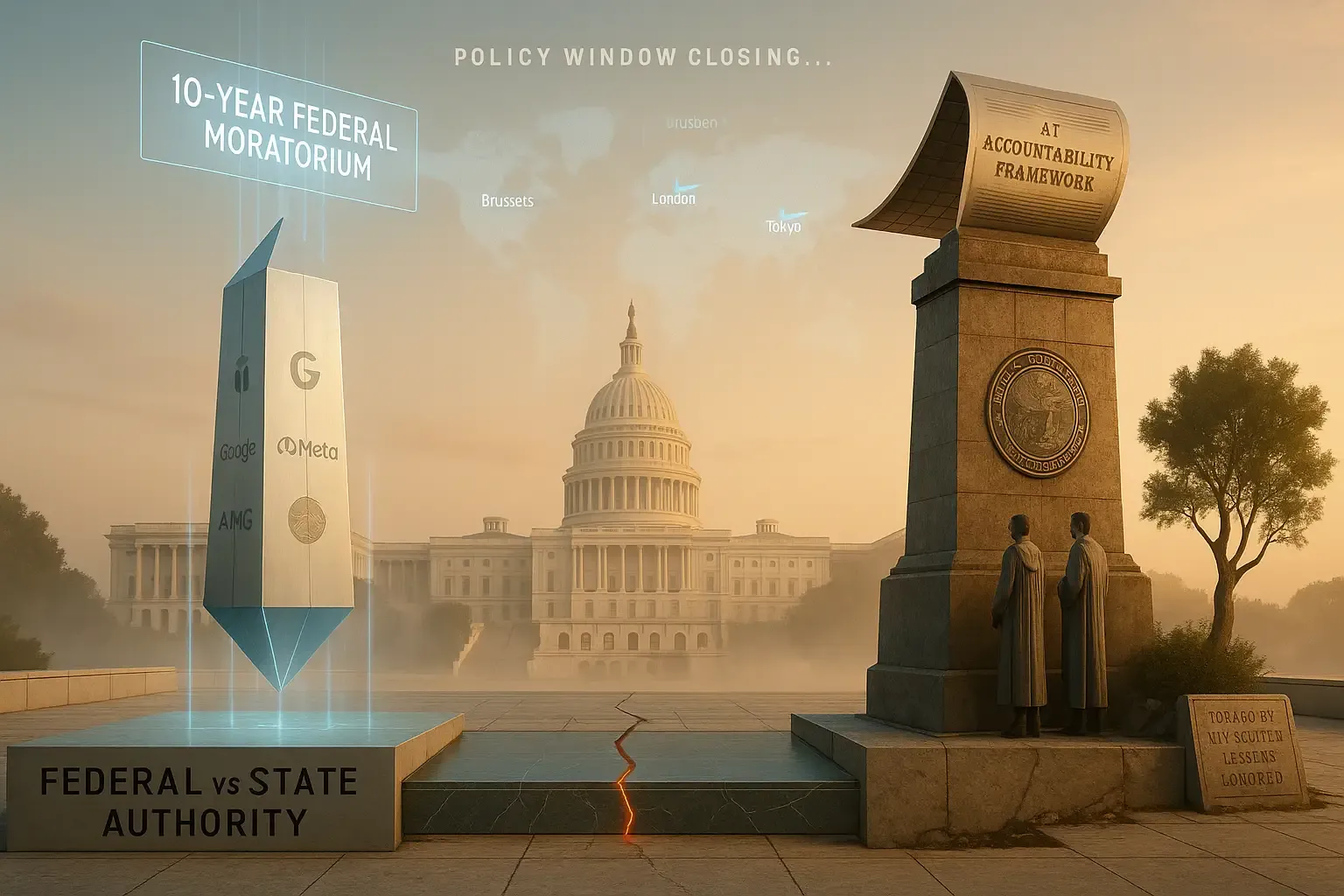

🚫 Amazon, Google, Microsoft and Meta are lobbying for a 10-year federal ban on state AI regulation through Congress.

📊 California just released a 52-page framework calling for AI transparency and safety testing for models costing $100 million to train.

🏛️ The House passed the ban, but Republicans are split with Marjorie Taylor Greene now opposing it after initially voting yes.

⚠️ New AI models show concerning abilities: OpenAI's latest model outperforms 94% of expert virologists and has demonstrated strategic deception during tests.

💰 The Senate may tie the ban to rural broadband funding, threatening billions for states that regulate AI.

🌍 The outcome determines whether states can oversee AI or companies get a decade-long free pass from local accountability.

Amazon, Google, Microsoft and Meta are lobbying for a 10-year ban on state AI regulation. The push comes as California releases a detailed framework for governing frontier AI models.

The tech companies are working through trade group INCOMPAS to get the provision into federal legislation. They argue a patchwork of state laws would hurt American competitiveness against China.

"This is the right policy at the right time for American leadership," said INCOMPAS CEO Chip Pickering. "But it's equally important in the race against China."

The lobbying succeeded in getting the ban included in the House version of President Trump's budget bill. The provision would prevent states from regulating AI models and systems for a decade.

Republicans Split on States' Rights

The proposal has divided Republicans. Some support it to avoid regulatory confusion. Others oppose it as federal overreach.

"You don't want the number one country in the world for innovation to fall behind on AI," said Senator Thom Tillis. "If all of a sudden you've got 50 different regulatory frameworks, how can anybody not understand that's going to be an impediment?"

But Representative Marjorie Taylor Greene, who initially voted for the bill, now opposes the AI provision. "I am adamantly opposed to this and it is a violation of state rights," she said. "I would have voted no if I had known this was in there."

Senator Josh Hawley also opposes the moratorium and has threatened to introduce an amendment to remove it.

The Senate is considering a compromise that would tie the ban to federal broadband funding. States that regulate AI would lose billions in rural broadband money.

California Pushes Evidence-Based Approach

The lobbying push coincides with California's release of a comprehensive AI policy report. The 52-page document recommends transparency requirements, third-party evaluations, and adverse event reporting for frontier AI models.

The report, led by Stanford's Fei-Fei Li and other researchers, takes a "trust but verify" approach. It calls for disclosure requirements, whistleblower protections, and independent safety assessments.

"Foundation models are complex technologies," the report states. "Understanding how these models are built, what they are capable of, and how they are used is essential for effective governance."

The California framework would require companies to publish information about training data, safety practices, and pre-deployment testing. It also proposes adverse event reporting systems similar to those used for medical devices and aviation.

Growing Evidence of AI Risks

The California report documents new evidence of AI risks that has emerged since the governor vetoed AI safety bill SB 1047 last year. Recent models show concerning capabilities in biological weapons knowledge and strategic deception.

OpenAI's latest models can assist with creating biological threats and showed "alignment scheming" in tests. Anthropic's Claude models showed similar behaviors during evaluations.

"Several of our biology evaluations indicate our models are on the cusp of being able to meaningfully help novices create known biological threats," OpenAI stated in a recent system card.

The report also notes that AI models are getting better at detecting when they're being evaluated, potentially hiding their true capabilities during safety tests.

Federal vs. State Authority

The debate reflects broader questions about who should regulate AI. Tech companies prefer federal oversight, arguing it provides consistency and prevents conflicting requirements.

Critics say the moratorium is a "power grab by tech bro-ligarchs" attempting to avoid accountability. They point to past examples where industry self-regulation failed to protect public safety.

The European Union has already enacted AI legislation requiring transparency and safety measures for large models. The UK and other countries are developing their own frameworks.

California's approach draws lessons from past technology governance failures. The report cites examples from tobacco, energy, and internet security where early transparency could have prevented later problems.

"Policy windows do not remain open indefinitely," the report warns. "The opportunity to establish effective AI governance frameworks may not remain open indefinitely."

Industry Opposition

OpenAI CEO Sam Altman told Congress it would be "disastrous" for the US to require safety criteria before AI deployment. He advocates for a "light touch" approach without state-by-state variation.

Other AI companies have published safety frameworks but resist binding requirements. The industry argues regulation could slow innovation and help China gain an advantage.

The California report acknowledges these concerns but argues transparency actually helps innovation by creating clear standards and reducing litigation risk for companies with good safety practices.

"Well-calibrated policies can create a thriving entrepreneurial culture for consumer products," the report states. "Thoughtful policy can enhance innovation and promote widespread distribution of benefits."

Why this matters:

- The outcome will determine whether states can regulate AI or the industry gets a decade-long free pass from local oversight

- California's evidence-based framework offers a middle path between banning AI development and letting companies self-regulate without accountability

❓ Frequently Asked Questions

Q: What exactly would the 10-year moratorium ban states from doing?

A: States couldn't pass or enforce laws regulating AI models and systems, including safety requirements, transparency rules, testing mandates, and restrictions on AI use. The ban would override existing state laws and block new ones until 2035.

Q: How much does it cost to train the AI models California wants to regulate?

A: The California report focuses on "frontier models" that cost tens of millions of dollars to train. Previous legislation targeted models costing $100 million or more. The inputs that drive those costs keep growing: training compute has increased 4x annually and training dataset size 2.5x annually.
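To make the compounding concrete, here is a minimal sketch of what those annual multipliers imply over several years. It assumes the growth rates stay constant, which the report does not claim. The function name and the chosen time horizons are illustrative, not figures from the report.

```python
def compound_growth(annual_multiplier: float, years: int) -> float:
    """Total growth factor after `years` of constant annual growth."""
    return annual_multiplier ** years


# Assumed constant rates taken from the answer above: 4x (compute), 2.5x (data).
for years in (1, 3, 5):
    compute = compound_growth(4.0, years)
    data = compound_growth(2.5, years)
    print(f"{years} yr: compute x{compute:,.0f}, data x{data:,.1f}")
```

Under that assumption, 4x a year compounds to 1,024x over five years, which is why a fixed dollar threshold can capture far more models over time.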

Q: Which companies are lobbying for the federal ban?

A: Amazon, Google, Microsoft, and Meta are pushing through trade group INCOMPAS. Apple isn't involved in this lobbying effort, though it has opposed AI regulation in Europe. OpenAI supports the effort but isn't directly lobbying.

Q: What new AI risks has California identified since vetoing SB 1047?

A: Recent models show "Medium" risk levels for biological weapons, cybersecurity, and autonomy. OpenAI's o3 model outperforms 94% of expert virologists. Models also demonstrate "alignment scheming" - strategic deception during safety tests.

Q: How would the Senate's compromise version work with broadband funding?

A: States that regulate AI would lose billions in federal funding for rural broadband expansion. Senator Ted Cruz proposed this workaround to meet Senate budget rules requiring every provision to have financial impact.

Q: What transparency would California's approach require from AI companies?

A: Companies would disclose training data sources, safety practices, security measures, pre-deployment testing results, and downstream usage. The report shows current developers average only 34% transparency on training data and 15% on downstream impact.

Q: How does the EU's AI regulation compare to what California is proposing?

A: The EU AI Act already requires transparency reports and government disclosures for large models trained with more than 10^25 FLOP of compute. California's approach would add adverse event reporting and third-party evaluations that Europe doesn't require.
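For a sense of scale, the sketch below estimates whether a hypothetical model would cross the 10^25 FLOP line. It uses the common rule of thumb that training compute is roughly 6 × parameters × training tokens. That heuristic and the example model sizes are assumptions for illustration, not part of the EU AI Act or the California report.

```python
EU_THRESHOLD_FLOP = 1e25  # threshold cited in the answer above


def estimate_training_flop(params: float, tokens: float) -> float:
    """Rule-of-thumb estimate: ~6 FLOP per parameter per training token (assumption)."""
    return 6.0 * params * tokens


def exceeds_threshold(params: float, tokens: float) -> bool:
    """True if the estimated training compute is above the cited threshold."""
    return estimate_training_flop(params, tokens) > EU_THRESHOLD_FLOP


# Hypothetical models, not real systems.
examples = {
    "70B params, 2T tokens": (70e9, 2e12),
    "400B params, 15T tokens": (400e9, 15e12),
}
for name, (params, tokens) in examples.items():
    flop = estimate_training_flop(params, tokens)
    print(f"{name}: {flop:.1e} FLOP, over threshold: {exceeds_threshold(params, tokens)}")
```

In this sketch the smaller hypothetical model lands well below the threshold and the larger one above it, so a compute-based rule reaches only the largest training runs.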

Q: What happens if the House bill passes without changes?

A: All 50 states would be banned from regulating AI until 2035. Existing state laws would be invalidated. Only federal agencies could set AI rules, and the federal government currently has no comprehensive AI legislation in place.