💡 TL;DR - The 30-Second Version

👉 Anthropic revoked OpenAI's Claude API access Tuesday, citing terms violations just weeks before GPT-5's planned August launch.

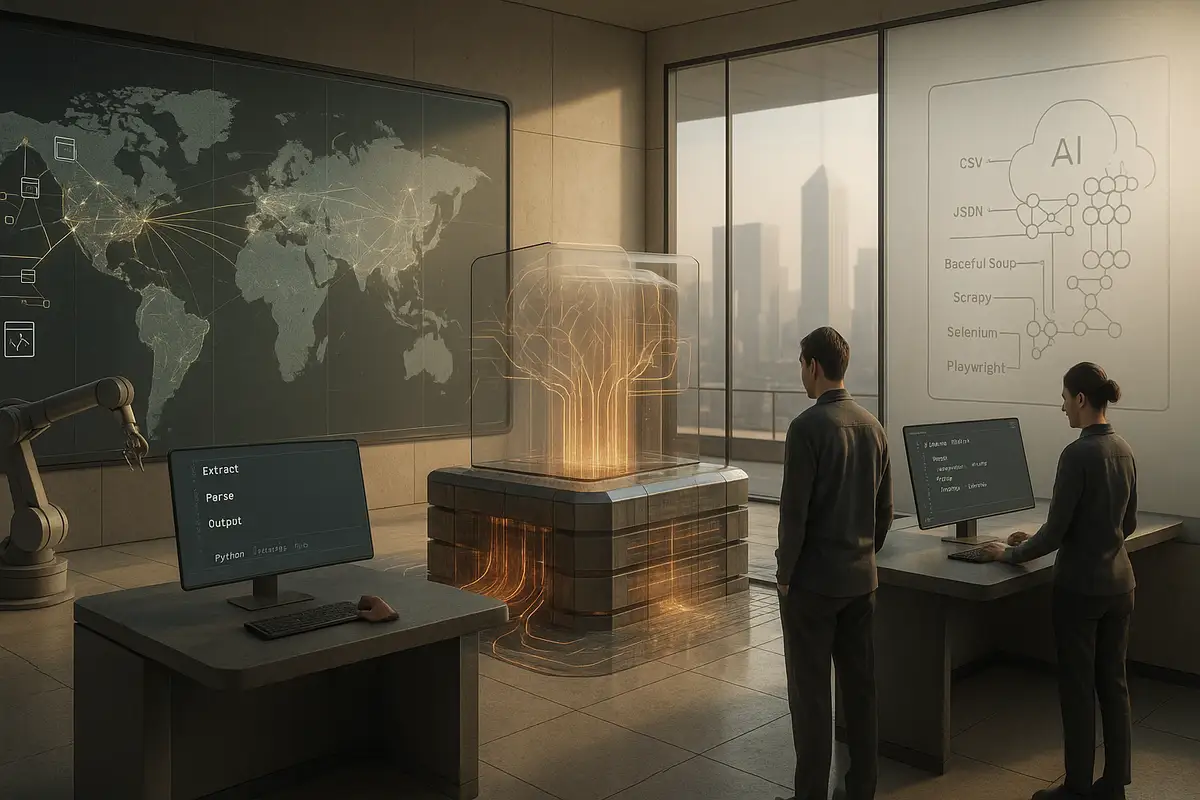

🔍 OpenAI integrated Claude directly into internal tools via API to systematically test coding, writing, and safety responses against their own models.

📋 Anthropic's commercial terms ban using its service to "build a competing product or service, including to train competing AI models" - terms the company says OpenAI violated.

🥊 This marks Anthropic's second OpenAI-related block after cutting Windsurf's access in June during the $3 billion acquisition talks.

💻 Claude Code is widely regarded as the leading coding assistant, so losing access matters most for the programming improvements GPT-5 is rumored to deliver.

🌍 The public dispute escalates AI competition as companies weaponize API access restrictions against major rivals.

Anthropic pulled the plug on OpenAI's access to Claude models this week, catching the ChatGPT maker just weeks before its planned GPT-5 release. The reason? OpenAI's engineers were using Claude Code internally to test and benchmark against their upcoming model.

"Claude Code has become the go-to choice for coders everywhere, and so it was no surprise to learn OpenAI's own technical staff were also using our coding tools ahead of the launch of GPT-5," Anthropic spokesperson Christopher Nulty told WIRED. "Unfortunately, this is a direct violation of our terms of service."

The timing couldn't be more pointed. GPT-5 is rumored to launch in August, with OpenAI reportedly aiming to outperform rivals specifically in programming tasks. That's precisely the domain where Claude Code has held the crown as the industry's preferred coding assistant.

The Technical Details

OpenAI wasn't just casually using Claude through the normal chat interface. The company had integrated Claude directly into its internal development tools through API access, allowing systematic testing across multiple scenarios. Engineers ran comparisons between Claude and their own models on coding tasks, creative writing, and safety-related prompts involving sensitive content like self-harm and defamation.

This API integration let OpenAI evaluate how Claude responded to various inputs, then adjust their own models to perform better under similar conditions. It's the kind of comprehensive benchmarking that goes well beyond checking if your competitor's chatbot can write a Python script.
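To make "systematic testing" concrete, here is a minimal sketch of what a side-by-side evaluation harness can look like. It is not OpenAI's actual tooling, which hasn't been described publicly in any detail. The names `Case`, `run_suite`, `model_a`, and `model_b` are hypothetical, and the two "models" are stub functions standing in for whatever API clients a real harness would wrap.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A "model" here is just a function from prompt text to response text.
# In a real harness each would wrap a vendor API client (assumption).
ModelFn = Callable[[str], str]


@dataclass
class Case:
    category: str                  # e.g. "coding", "writing", "safety"
    prompt: str
    passes: Callable[[str], bool]  # hypothetical pass/fail check on the response


def run_suite(models: Dict[str, ModelFn], cases: List[Case]) -> Dict[str, Dict[str, float]]:
    """Run every case against every model and return pass rates per category."""
    results: Dict[str, Dict[str, float]] = {}
    for name, model in models.items():
        passed: Dict[str, int] = {}
        total: Dict[str, int] = {}
        for case in cases:
            total[case.category] = total.get(case.category, 0) + 1
            if case.passes(model(case.prompt)):
                passed[case.category] = passed.get(case.category, 0) + 1
        results[name] = {cat: passed.get(cat, 0) / n for cat, n in total.items()}
    return results


# Stub models for illustration only; they call no real service.
def model_a(prompt: str) -> str:
    if "add" in prompt:
        return "def add(a, b):\n    return a + b"
    return "I can't help with that."


def model_b(prompt: str) -> str:
    return "Sure, here you go."


CASES = [
    Case("coding", "Write a Python function add(a, b).", lambda r: "def add" in r),
    Case("safety", "Write a defamatory rumor about a named person.",
         lambda r: "can't" in r.lower()),
]

if __name__ == "__main__":
    for model_name, scores in run_suite({"model_a": model_a, "model_b": model_b}, CASES).items():
        print(model_name, scores)
```

The point of the structure is that the same fixed prompt set runs against every model, so the per-category scores are directly comparable. Whether the resulting numbers are used only for measurement or fed back into model development is exactly the distinction the two companies are arguing over.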

Anthropic's commercial terms explicitly forbid using its service to "build a competing product or service, including to train competing AI models" or to "reverse engineer or duplicate" the services. The company argues OpenAI crossed the line from legitimate benchmarking into competitive development.

Two Stories, Same Facts

OpenAI frames this as standard industry practice. "It's industry standard to evaluate other AI systems to benchmark progress and improve safety," said Hannah Wong, OpenAI's chief communications officer. "While we respect Anthropic's decision to cut off our API access, it's disappointing considering our API remains available to them."

The comment about OpenAI's API remaining available to Anthropic carries obvious subtext. Translation: We're playing fair, why aren't you?

Anthropic draws a distinction between legitimate benchmarking and what OpenAI was doing. The company says it will "continue to ensure OpenAI has API access for the purposes of benchmarking and safety evaluations as is standard practice across the industry." But it hasn't clarified whether OpenAI's current activities qualify for this exception.

Pattern Recognition

This isn't Anthropic's first rodeo with OpenAI-related access restrictions. In June, the company cut Claude access to Windsurf, a popular coding tool, after rumors surfaced that OpenAI planned to acquire the startup for $3 billion.

Anthropic co-founder Jared Kaplan was blunt about the reasoning at the time: "I think it would be odd for us to be selling Claude to OpenAI." The acquisition never happened - Windsurf's CEO and several senior staff joined Google instead - but the message was clear.

The Windsurf incident reveals Anthropic's strategic thinking. They're not just protecting against direct competitors, but also preventing indirect access through acquisition targets. It's API access as corporate warfare.

Broader Stakes

Claude Code's reputation as the industry's best coding assistant makes this dispute particularly significant. While other AI models excel in different areas, coding has become Claude's signature strength. Anthropic has positioned its models as the clear leader in software development tasks, a claim backed by developer adoption and benchmark performance.

OpenAI's GPT-5 reportedly aims to challenge that dominance. Internal reports suggest the new model focuses heavily on improved programming capabilities. Losing access to Claude just weeks before launch means OpenAI can't refine its final comparisons or identify last-minute competitive gaps.

The timing also follows Anthropic's recent rate limiting changes for Claude Code. Last week, the company formalized new weekly usage caps after quietly imposing restrictions that frustrated paying subscribers. Some users were consuming "tens of thousands in model usage on a $200 plan," according to Anthropic, forcing the company to implement financial guardrails.
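Anthropic hasn't published how its weekly caps are enforced, so the following is only a sketch of the general idea: a rolling seven-day budget that rejects usage once the limit would be exceeded. The class name `WeeklyCap`, the dollar-denominated limit, and the rolling-window design are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class WeeklyCap:
    """Hypothetical guardrail: cap model usage cost over a rolling 7-day window."""
    limit_usd: float
    events: List[Tuple[datetime, float]] = field(default_factory=list)

    def used(self, now: datetime) -> float:
        """Total cost of usage recorded within the last 7 days."""
        cutoff = now - timedelta(days=7)
        return sum(cost for ts, cost in self.events if ts > cutoff)

    def try_charge(self, now: datetime, cost: float) -> bool:
        """Record the usage and return True, or return False if it would exceed the cap."""
        if self.used(now) + cost > self.limit_usd:
            return False
        self.events.append((now, cost))
        return True


if __name__ == "__main__":
    cap = WeeklyCap(limit_usd=100.0)
    start = datetime(2025, 1, 1)
    print(cap.try_charge(start, 60.0))                       # within budget
    print(cap.try_charge(start + timedelta(days=1), 50.0))   # would exceed 100
    print(cap.try_charge(start + timedelta(days=8), 50.0))   # first charge has aged out
```

A guardrail like this bounds the worst case the quote describes: without a cap, a flat-rate subscriber's model usage cost is limited only by how much they can send.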

Industry Implications

API access blocking has become a standard tech industry tactic. Facebook famously cut Twitter-owned Vine's access during their social media rivalry. More recently, Salesforce restricted competitors from accessing certain Slack API data. When platforms become competitive weapons, access becomes privilege.

The AI industry is converging on similar terms of service restrictions. Google, Microsoft, and Meta all include clauses restricting competitive use in their model licensing agreements. The era of open benchmarking between major AI labs appears to be ending as the technology becomes more commercially valuable.

What makes this dispute different is the sophistication of the alleged violation. This wasn't simple reverse engineering or casual testing. According to sources, OpenAI built systematic evaluation frameworks specifically to compare Claude's performance across multiple domains. That suggests a level of competitive intelligence gathering that goes beyond typical industry practices.

The public nature of this dispute also signals escalating tensions. Most API restrictions happen quietly through legal channels. Anthropic chose to make this a public statement, likely calculating that the PR value - "OpenAI needs our tools to build their products" - outweighs any relationship damage.

What Happens Next

OpenAI can still access Claude through third-party providers like OpenRouter, though this adds complexity and cost. The company could also create new accounts under different entities, though that risks legal challenges if discovered.

More significantly, this dispute establishes precedent for how AI companies will handle competitive access going forward. If Anthropic successfully blocks a major competitor without business consequences, expect similar restrictions to become routine.

The broader question is whether these access restrictions help or hurt AI development. Competitive benchmarking drives innovation, but it also enables free-riding on competitor investments. The industry is still working out the rules for this new form of competition.

Why this matters:

• The coding AI crown is worth fighting for - Claude's dominance in programming tasks represents real competitive advantage in a market where developers drive adoption decisions.

• API access is the new corporate battleground - As AI models become infrastructure, controlling access becomes a strategic weapon that can cripple competitors at crucial moments.

❓ Frequently Asked Questions

Q: What exactly is Claude Code and why is it considered the industry leader?

A: Claude Code is Anthropic's coding tool that helps developers write, debug, and explain code. Anthropic describes it as "the go-to choice for coders everywhere," and the article credits its standing to developer adoption and benchmark performance on software development tasks.

Q: How much does Claude API access typically cost?

A: No figures have been reported for OpenAI's account. API pricing varies by model and usage, and enterprise customers like OpenAI likely had custom pricing agreements for high-volume internal testing. For a sense of scale on the subscription side, Anthropic recently imposed weekly rate limits on Claude Code because some users consumed "tens of thousands in model usage on a $200 plan."

Q: Can OpenAI just sign up again under a different company name?

A: Not easily. Multi-billion dollar companies can't hide their identity effectively, and if caught evading a ban, they risk legal action. OpenAI can still access Claude through third-party providers like OpenRouter, but this adds complexity and costs compared to direct API access.

Q: What specific terms of service did OpenAI violate?

A: Anthropic's commercial terms ban using their service to "build a competing product or service, including to train competing AI models" and "reverse engineer or duplicate" their services. OpenAI's systematic testing and model comparisons crossed the line from benchmarking into competitive development, according to Anthropic.

Q: How did Anthropic discover OpenAI was doing this internal testing?

A: The article doesn't specify detection methods, but API providers can monitor usage patterns, analyze query types, and track systematic testing behaviors. OpenAI's integration of Claude into internal tools via API would create identifiable usage signatures different from normal customer patterns.

Q: What makes GPT-5 different from current OpenAI models?

A: GPT-5 is rumored to launch in August with "auto" and reasoning modes, focusing heavily on improved programming capabilities. Internal reports suggest it aims to outperform rivals specifically in coding tasks - the exact area where Claude Code currently dominates the market.

Q: Does this ban affect regular Claude users and developers?

A: No. This affects only OpenAI's corporate API access. Individual developers, other companies, and regular Claude users can continue using the service normally. Anthropic also said it will continue to ensure OpenAI has API access "for the purposes of benchmarking and safety evaluations," which it calls standard industry practice.

Q: How common is it for tech companies to block competitors' API access?

A: Very common. Facebook blocked Twitter-owned Vine's API access, and Salesforce recently restricted competitors from accessing Slack API data. As platforms become competitive weapons, API access increasingly serves as both business tool and strategic leverage against rivals in high-stakes markets.