💡 TL;DR - The 30 Seconds Version

👉 Z.ai's new GLM-4.5 AI model costs 28 cents per million output tokens versus DeepSeek's $2.19, an 87% cut in output pricing, while matching performance on key benchmarks.

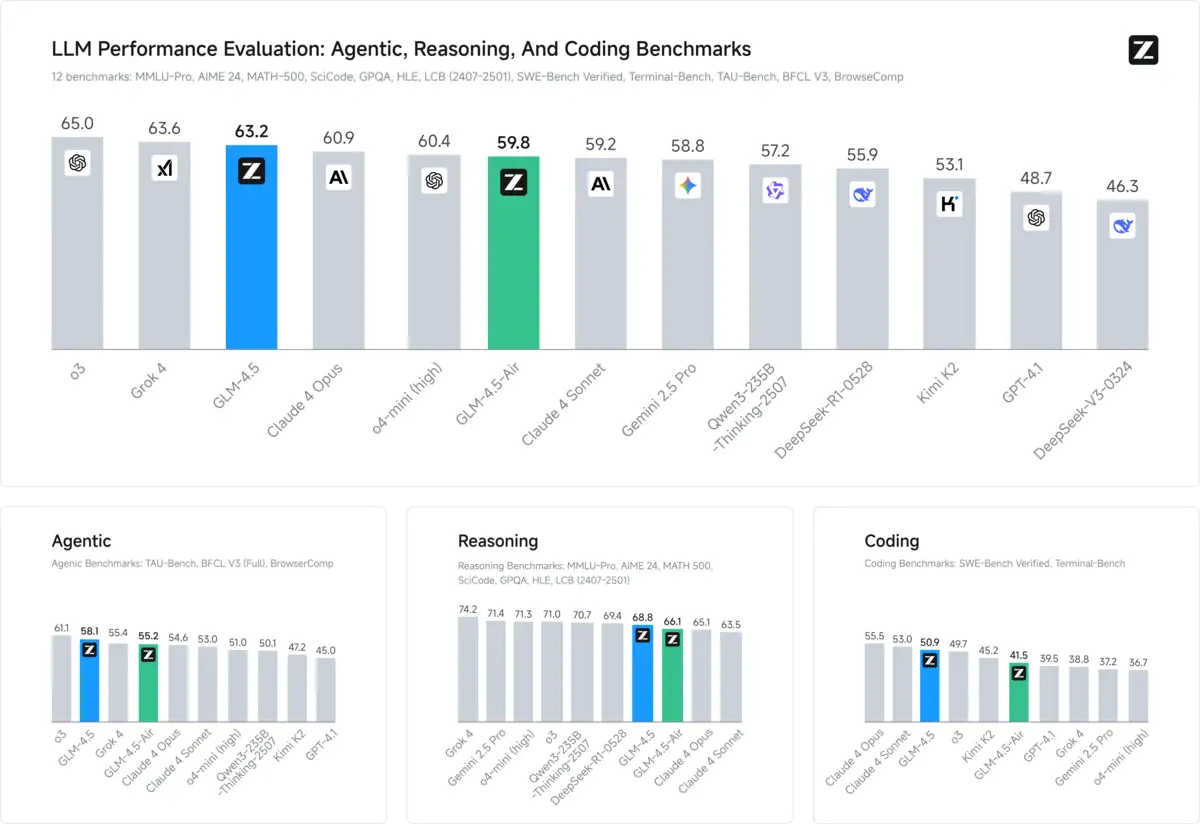

📊 The model runs on just eight Nvidia H20 chips—half what DeepSeek requires—and ranks third overall across 12 AI benchmarks covering reasoning, coding, and agent tasks.

🏭 Built with 355 billion total parameters (32 billion active per token), GLM-4.5 is designed for "agentic" AI that breaks complex tasks into steps rather than processing everything at once like traditional models.

🌍 Z.ai sits on the US entity list restricting American business, yet it has raised $1.5 billion from Alibaba, Tencent, and Chinese municipal funds and is reportedly planning an IPO.

🚀 China has released 1,509 large language models as of July 2025, ranking first globally as companies use open-source strategies to undercut Western competitors.

💡 The breakthrough suggests US chip restrictions aren't preventing Chinese AI advancement, potentially forcing Western companies to slash prices or find new competitive advantages.

Z.ai just made DeepSeek look expensive. The Chinese startup's new GLM-4.5 model costs 11 cents per million input tokens compared to DeepSeek's 14 cents. But here's where it gets interesting: output tokens cost 28 cents versus DeepSeek's $2.19. That's an 87% discount on the expensive part.
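How much you actually save depends on which side of the bill dominates. The Python sketch below uses only the per-million-token prices quoted above to compute the input and output discounts separately, then the blended saving for a hypothetical workload of 10 million input and 2 million output tokens. That mix is an illustrative assumption, not a figure from Z.ai or DeepSeek.

```python
# Per-million-token list prices in USD, as quoted in this article.
PRICES = {
    "GLM-4.5": {"input": 0.11, "output": 0.28},
    "DeepSeek": {"input": 0.14, "output": 2.19},
}


def discount(cheaper: float, pricier: float) -> float:
    """Percentage saved by paying `cheaper` instead of `pricier`."""
    return (1 - cheaper / pricier) * 100


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of a workload, given per-million-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


for kind in ("input", "output"):
    pct = discount(PRICES["GLM-4.5"][kind], PRICES["DeepSeek"][kind])
    print(f"{kind} tokens: {pct:.1f}% cheaper")  # input 21.4%, output 87.2%

# Hypothetical workload (assumed mix): 10M input tokens, 2M output tokens.
glm = workload_cost("GLM-4.5", 10_000_000, 2_000_000)
ds = workload_cost("DeepSeek", 10_000_000, 2_000_000)
print(f"GLM-4.5 ${glm:.2f} vs DeepSeek ${ds:.2f}: {discount(glm, ds):.1f}% cheaper overall")
```

For that assumed mix the blended saving lands between the two per-direction discounts; the more output-heavy the workload, the closer it gets to 87%.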

The model runs on eight Nvidia H20 chips — half what DeepSeek needs. Z.ai CEO Zhang Peng says that's enough computing power for now, though he won't say how much the company spent training the model. Details come later, apparently.

This isn't just about cheaper prices. GLM-4.5 takes a different approach called "agentic" AI. Most models try to handle everything at once. GLM-4.5 splits complex tasks into manageable pieces. Think about tackling a research project: you'd outline first, then research each section, then write. That's how this AI works.

Technical Performance That Actually Matters

GLM-4.5 comes in two versions: the full model with 355 billion total parameters and 32 billion active ones, plus GLM-4.5-Air with 106 billion total and 12 billion active parameters. Both offer "thinking mode" for complex problems and "non-thinking mode" for quick responses.
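The gap between total and active parameters comes from a mixture-of-experts (MoE) design: a small router picks a few "expert" sub-networks for each token, so only a fraction of the weights do work at any step. The toy Python sketch below illustrates generic top-k routing. The expert functions, router vectors, and k=2 are made-up illustration values, not GLM-4.5's actual configuration or routing scheme.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def top_k_moe(x: Vector, experts: List[Expert], router: List[Vector],
              k: int = 2) -> Tuple[Vector, List[int]]:
    """Toy MoE layer: score every expert, but evaluate only the top k."""
    scores = [dot(w, x) for w in router]  # one router logit per expert
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the chosen experts' scores gives their mixing weights.
    top = max(scores[i] for i in chosen)
    weights = [math.exp(scores[i] - top) for i in chosen]
    total = sum(weights)
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # unchosen experts are never run
        out = [o + (w / total) * yi for o, yi in zip(out, y)]
    return out, chosen


def active_share(active_b: float, total_b: float) -> float:
    """Percentage of parameters used per token."""
    return 100 * active_b / total_b


# Four hypothetical experts that just scale their input by 1, 2, 3, 4.
experts = [lambda v, s=s: [s * e for e in v] for s in (1, 2, 3, 4)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = top_k_moe([2.0, 1.0], experts, router, k=2)
print(chosen)  # [0, 1]: only experts 0 and 1 ran
print(f"GLM-4.5: {active_share(32, 355):.1f}% active, "
      f"GLM-4.5-Air: {active_share(12, 106):.1f}% active")
```

Applied to the real models, 32 of 355 billion parameters is about 9% active per token and 12 of 106 billion is about 11%, which is why per-token compute is far lower than the headline size suggests. All the weights still have to be loaded, though.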

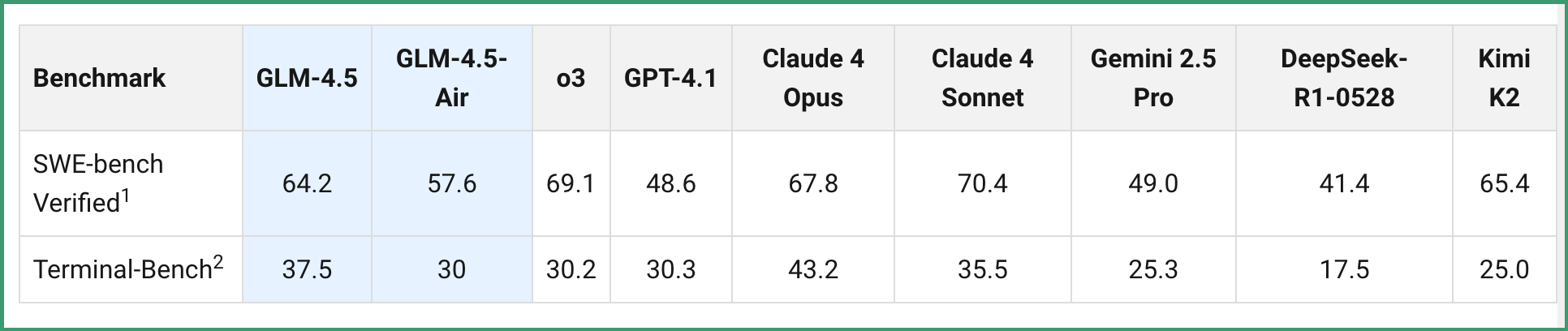

The numbers, as reported by Z.ai, are strong. GLM-4.5 ranks third overall across 12 benchmarks covering agent tasks, reasoning, and coding. It matches Claude 4 Sonnet on agent benchmarks and outperforms Claude 4 Opus on web browsing tasks. On the SWE-bench Verified coding benchmark, it scores 64.2% compared to GPT-4.1's 48.6%.

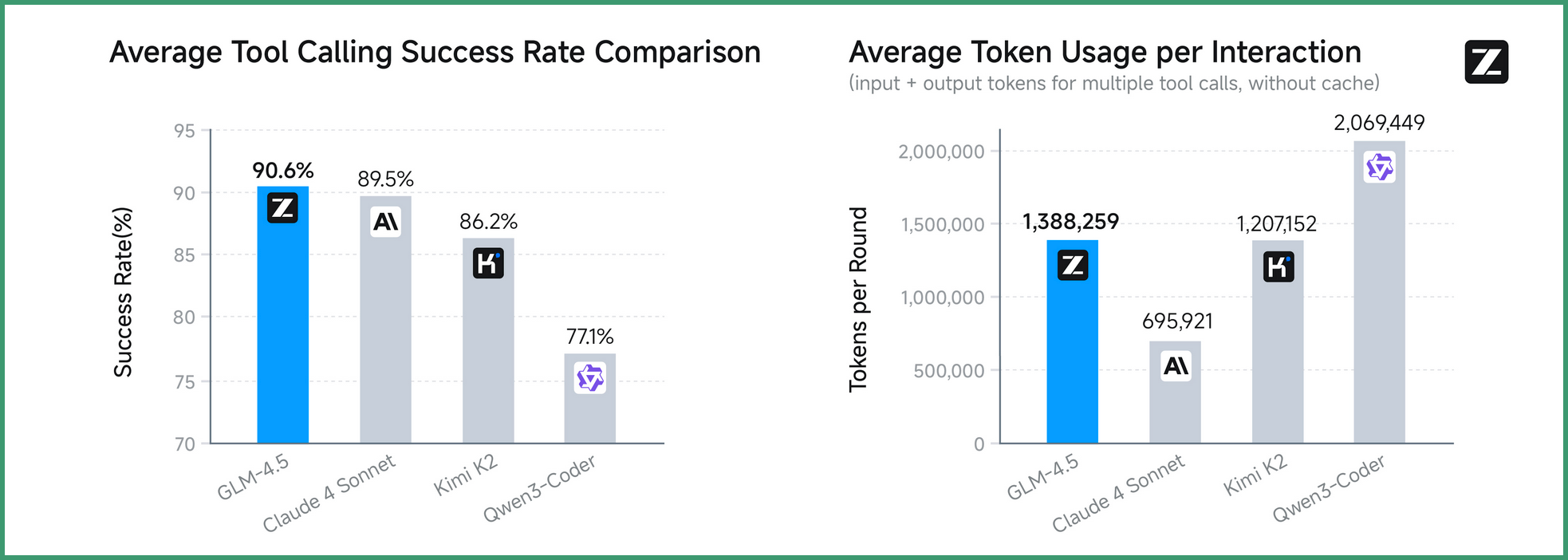

Z.ai also pitted GLM-4.5 against other models on 52 coding tasks run through Claude Code. It won 53.9% of its matchups against Kimi K2 and dominated Qwen3-Coder with an 80.8% win rate. Its tool-calling success rate was 90.6%, ahead of Claude 4 Sonnet's 89.5%.
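Z.ai's exact scoring rules for tool calls aren't given here. One plausible reading: the model emits a structured request (commonly JSON naming a function and its arguments), and a call counts as a success if the harness can parse and dispatch it. The Python sketch below checks calls under that assumption; the tool names, argument schema, and JSON shape are all hypothetical.

```python
import json
from typing import Dict, List, Set

# Hypothetical tool registry: tool name -> required argument names.
TOOLS: Dict[str, Set[str]] = {
    "read_file": {"path"},
    "write_file": {"path", "content"},
    "run_tests": set(),
}


def is_valid_call(raw: str) -> bool:
    """True if `raw` is JSON naming a known tool and supplying its required arguments."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return False
    if not isinstance(call, dict):
        return False
    name, args = call.get("name"), call.get("arguments")
    if not isinstance(name, str) or name not in TOOLS or not isinstance(args, dict):
        return False
    return TOOLS[name].issubset(args)  # every required argument is present


def success_rate(calls: List[str]) -> float:
    """Percentage of emitted tool calls that pass validation."""
    return 100 * sum(is_valid_call(c) for c in calls) / len(calls)


calls = [
    '{"name": "read_file", "arguments": {"path": "app.py"}}',   # valid
    '{"name": "write_file", "arguments": {"path": "app.py"}}',  # missing "content"
    'read_file(app.py)',                                        # not JSON
    '{"name": "run_tests", "arguments": {}}',                   # valid
]
print(f"{success_rate(calls):.1f}%")  # 50.0%
```

A real harness would also execute each call and check the result; this sketch only checks that the request is well formed.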

The Geopolitical Chess Game

This release doesn't happen in a vacuum. Z.ai sits on the U.S. entity list, which restricts American companies from doing business with it. It also follows DeepSeek, which rattled global markets in January by showing Chinese companies could build competitive AI despite chip restrictions.

OpenAI specifically warned about Zhipu (Z.ai's former name) in June, noting the company's progress in securing government contracts across Chinese regions. The warning now looks prescient. Z.ai has raised $1.5 billion from investors including Alibaba, Tencent, and municipal funds from Hangzhou and Chengdu. An IPO in Greater China is reportedly planned.

The H20 chips that power GLM-4.5 exist because of U.S. export controls. Nvidia designed them specifically for China to comply with restrictions. The chipmaker said this month it could resume China sales after a three-month pause, but shipment timing remains unclear.

The Open Source Strategy

Z.ai isn't alone in this approach. China has released 1,509 large language models as of July, ranking first among the 3,755 models released globally. Moonshot recently unveiled Kimi K2, which costs 15 cents per million input tokens and $2.50 for output tokens. StepFun released a non-proprietary reasoning system. Tencent and Alibaba have announced their own open-source models.

The pattern looks coordinated. Chinese companies give away their models for free, then undercut everyone else on API costs. Free software, cheap service. Western competitors have to match those prices or find something else to sell.

GLM-4.5's "agentic" design may matter more than its prices. Breaking a problem into explicit, logical steps could make the model more efficient on complex tasks like coding, research, or analysis.

Market Reality Check

The real test isn't benchmarks — it's adoption. Z.ai built GLM-4.5 for "agentic applications," meaning AI assistants that can handle multi-step tasks independently. The model works with existing coding tools like Claude Code and Roo Code, making it easy for developers to switch.

The company created demos showing GLM-4.5 building complete web applications, generating presentation slides, and creating interactive games. Z.ai presents them as evidence that the model can handle real work, not just tech demos. The difference between impressive benchmarks and useful tools often comes down to practical application.

Z.ai's pricing puts pressure on everyone. If a smaller Chinese company can deliver competitive performance at significantly lower costs, established players face a choice: match the prices or differentiate on features. Neither option is comfortable when your business model depends on premium pricing.

The broader implication extends beyond AI models. Chinese companies are demonstrating they can innovate around restrictions, not just comply with them. The H20 chips weren't meant to enable cutting-edge AI development — they were meant to limit it. GLM-4.5 suggests the limits aren't working as intended.

Why this matters:

• Chinese companies can build good AI models despite trade restrictions, and they're pricing them aggressively. That puts pressure on Western companies to cut prices or find new ways to compete.

• This "agentic" approach might actually work better for real tasks. Instead of trying to do everything at once, the AI breaks problems down and solves them step by step — which could make it more useful than current chatbots.

Read on, my dear:

❓ Frequently Asked Questions

Q: What are "tokens" and why do they matter for AI pricing?

A: Tokens are chunks of text that AI models process, roughly 3-4 characters each in English. "Hello world" equals about 2 tokens. AI companies charge per million tokens processed. Input tokens are what you send; output tokens are the AI's response. Output costs more because the model can read all input tokens in one parallel pass but must generate its response one token at a time, with a separate pass through the model for each.
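Why output tokens cost more can be illustrated with a toy Python sketch: generation is a loop in which every new token needs another sequential call to the model, while the prompt is available all at once. The `fake_model` function is a hypothetical stand-in for a real model, and the sketch ignores prompt-processing cost, which is real but can run in parallel.

```python
from typing import Callable, List, Tuple


def generate(prompt: List[int], max_new: int,
             next_token: Callable[[List[int]], int]) -> Tuple[List[int], int]:
    """Greedy autoregressive decoding; returns new tokens and sequential model calls."""
    context = list(prompt)  # the whole prompt is available up front
    new_tokens: List[int] = []
    calls = 0
    for _ in range(max_new):
        tok = next_token(context)  # one sequential model call per output token
        calls += 1
        context.append(tok)
        new_tokens.append(tok)
    return new_tokens, calls


def fake_model(context: List[int]) -> int:
    """Hypothetical stand-in for a model: derives a token id from the context length."""
    return len(context) % 100


_, calls_short = generate(list(range(10)), 200, fake_model)
_, calls_long = generate(list(range(1000)), 200, fake_model)
print(calls_short, calls_long)  # 200 200: sequential calls track output length only
```

Real inference servers cache earlier work so each step handles only the newest token, but the steps still run one after another, which keeps hardware busy longer per output token than per input token.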

Q: How does "agentic" AI work differently from regular AI?

A: Regular AI tries to answer everything at once. Agentic AI breaks complex tasks into steps—like planning, researching, then writing. If you ask it to analyze a market, it might first gather data, then compare competitors, then draw conclusions. This step-by-step approach often produces better results for complex tasks.
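The plan-then-execute pattern in that market example can be sketched in a few lines of Python. The `plan`, `execute`, and `summarize` functions are hypothetical stand-ins for model calls; this is a generic illustration of task decomposition, not Z.ai's implementation.

```python
from typing import Callable, List


def run_agent(task: str,
              plan: Callable[[str], List[str]],
              execute: Callable[[str, List[str]], str],
              summarize: Callable[[str, List[str]], str]) -> str:
    """Split a task into steps, run them in order, then combine the results."""
    steps = plan(task)  # e.g. one model call that returns a list of steps
    results: List[str] = []
    for step in steps:
        # Each step receives earlier results, so later steps can build on them.
        results.append(execute(step, results))
    return summarize(task, results)


# Hypothetical stubs standing in for model calls.
def plan(task: str) -> List[str]:
    return ["gather data", "compare competitors", "draw conclusions"]


def execute(step: str, prior: List[str]) -> str:
    return f"{step} (step {len(prior) + 1})"


def summarize(task: str, results: List[str]) -> str:
    return f"{task}: " + "; ".join(results)


print(run_agent("analyze the market", plan, execute, summarize))
```

A production agent would also let the model revise its plan or call tools between steps; the loop above shows only the decomposition itself.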

Q: What are Nvidia H20 chips and why does Z.ai use them?

A: H20 chips are special versions of Nvidia's AI processors designed for China to comply with US export restrictions. They're less powerful than chips sold elsewhere but still capable enough for AI training. Z.ai uses them because that's what Chinese companies can legally buy due to trade limitations.

Q: Why is Z.ai on the US entity list?

A: The US entity list restricts exports of American technology to certain foreign firms deemed security risks. Z.ai (formerly Zhipu) was added because US officials worry about Chinese AI companies' ties to their government and military. US suppliers need a government license to sell it technology, which in practice cuts Z.ai off from most US technology and partners.

Q: Can regular people use GLM-4.5?

A: Yes, through Z.ai's website or API. The model is also open source, meaning developers can download and run it locally if they have enough computing power. However, running it yourself requires significant technical expertise and expensive hardware—most people will use Z.ai's hosted version.
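A back-of-the-envelope Python sketch shows why: the weights alone take roughly parameters × bytes per parameter. The byte widths below are common storage formats (16-bit, 8-bit, 4-bit) assumed for illustration, and the figures exclude the extra memory needed for activations and context caches. Because GLM-4.5 uses a mixture-of-experts design, all 355 billion parameters must stay loaded even though only 32 billion are active per token.

```python
# Bytes per parameter for some common weight formats (illustrative assumption).
FORMATS = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

# Total parameter counts from the article, in billions.
MODELS = {"GLM-4.5": 355, "GLM-4.5-Air": 106}


def weight_memory_gb(params_billion: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 params) * bytes / (1e9 bytes per GB) simplifies to this.
    return params_billion * bytes_per_param


for model, params in MODELS.items():
    sizes = ", ".join(f"{fmt}: {weight_memory_gb(params, b):.0f} GB"
                      for fmt, b in FORMATS.items())
    print(f"{model}: {sizes}")
```

Even the 4-bit estimate for the full model is far beyond the memory of any single consumer graphics card.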

Q: How much did Z.ai spend training GLM-4.5?

A: Z.ai hasn't revealed training costs; CEO Zhang Peng said only that "details come later." For comparison, DeepSeek claimed about $6 million in training costs for its V3 model, but that figure covered only the GPU time for the final training run, priced at rental rates. Analysts estimate the real hardware investment was over $500 million. Training costs are closely guarded industry secrets.

Q: How does GLM-4.5 compare to ChatGPT or Claude?

A: GLM-4.5 ranks third overall in Z.ai's benchmark tests. It matches Claude 4 Sonnet on agent tasks but trails OpenAI's latest models on some reasoning tests. Its main advantage is cost: GLM-4.5 is significantly cheaper to use than Western alternatives while delivering comparable performance on many tasks.