DeepSeek isn't allowed to buy the chips that run the modern AI industry. On New Year's Eve, they released a paper showing why that might not matter anymore.

The research introduces an architecture called Manifold-Constrained Hyper-Connections that solves a training stability problem most Western labs haven't bothered fixing—because throwing more compute at unstable models is cheaper than understanding why they fail. DeepSeek doesn't have that option. The result is engineering that works on restricted hardware but proves useful everywhere. This is the third time in eighteen months they've demonstrated this pattern. The export controls meant to slow Chinese AI development may be accelerating it instead.

The Breakdown

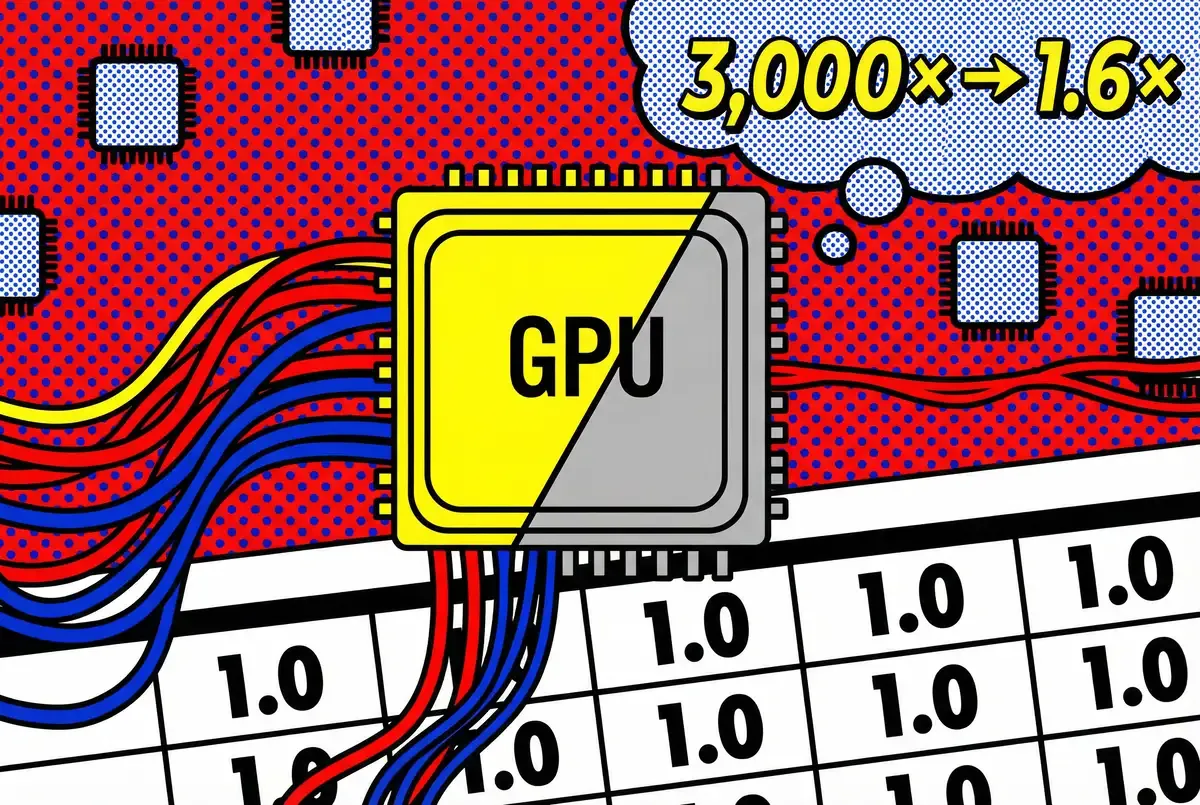

• DeepSeek's mHC architecture cuts peak signal amplification from roughly 3,000x to 1.6x by constraining residual connections to doubly stochastic matrices, at a cost of 6.7% training overhead

• CEO Liang Wenfeng's direct authorship fits a pattern that has preceded major releases; observers expect a flagship model around Spring Festival 2026

• Chip export restrictions forced efficiency-first innovations on older A100 GPUs that may prove more durable than resource-intensive approaches

• Models reaching top-15 benchmark rankings at one-tenth the training cost challenge the assumption that scale-first development provides an insurmountable competitive advantage

The Residual Stream Problem

Look at a standard neural network and you see the signal fighting its way through every single layer. Each computation transforms the input slightly, passes it forward, repeats. Stack enough layers and the gradient—the learning signal traveling backward—either explodes or vanishes. For years, this capped how deep networks could go.
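A toy illustration of that compounding, assuming (unrealistically) that every layer scales the signal by the same scalar gain. By the chain rule, the backward gradient picks up the same product, so the numbers apply in both directions.

```python
def end_to_end_gain(per_layer_gain: float, num_layers: int) -> float:
    """Total amplification after num_layers identical layers.

    Toy assumption: each layer multiplies the signal by one scalar. Real layers
    apply matrices, but the compounding works the same way.
    """
    gain = 1.0
    for _ in range(num_layers):
        gain *= per_layer_gain
    return gain


# A 10% shrink, no change, or a 10% boost per layer, repeated over 60 layers.
for g in (0.9, 1.0, 1.1):
    print(f"per-layer gain {g}: end-to-end gain {end_to_end_gain(g, 60):.4g}")
```

A 10% shrink per layer leaves about 0.2% of the signal after 60 layers (vanishing); a 10% boost multiplies it roughly 300-fold (exploding). Only a gain of exactly 1 survives depth unchanged.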

ResNets, the 2016 breakthrough, cut a path through the woods. Add a shortcut that lets the signal skip the processing and jump ahead directly. The gradient can flow without degrading. Simple enough that it became infrastructure. Every major language model since Transformers uses some variant of this residual connection. Progress moved elsewhere—attention mechanisms, mixture-of-experts routing, parameter efficiency tricks. The residual stream itself stayed untouched, a stable foundation supporting increasingly elaborate superstructures.
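A minimal scalar sketch of why the shortcut helps, assuming every layer computes the same linear function f(x) = w·x. The chain rule multiplies per-layer derivatives; the shortcut adds an identity term to each one.

```python
def plain_gradient(w: float, num_layers: int) -> float:
    # Plain stack: x_{l+1} = f(x_l) = w * x_l, so each layer contributes a factor w.
    return w ** num_layers


def residual_gradient(w: float, num_layers: int) -> float:
    # Residual stack: x_{l+1} = x_l + f(x_l), so each layer contributes 1 + w.
    # The "1" is the identity shortcut; it keeps the gradient alive even when w is tiny.
    return (1.0 + w) ** num_layers


w, depth = 0.01, 50  # a layer whose own contribution is small, e.g. near initialization
print("plain:   ", plain_gradient(w, depth))     # vanishes
print("residual:", residual_gradient(w, depth))  # stays on the order of 1
```

With w = 0 the residual stack is exactly the identity mapping and the gradient is exactly 1, which is the property residual networks rely on.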

ByteDance challenged this in 2024 with Hyper-Connections, which expanded the stream's width and added learned controls for how information flows between layers. Instead of a single pathway, you get multiple parallel streams that interact through transformations the network learns during training. The models got smarter. Performance improved across standard benchmarks.
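The sketch below shows one hyper-connection step on n parallel streams in plain Python. It is an illustration under assumptions, not ByteDance's or DeepSeek's code: the weight names h_res, h_pre, and h_post are our labels, and the weights are fixed numbers here, whereas the published designs can also compute them from the input.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def hyper_connection_step(
    streams: List[Vector],              # n residual streams, each of width C
    h_res: Matrix,                      # n x n: how the streams mix with each other
    h_pre: Vector,                      # length n: how streams combine into the layer input
    h_post: Vector,                     # length n: how the layer output is written back
    layer: Callable[[Vector], Vector],  # the usual attention/FFN block, width C -> C
) -> List[Vector]:
    n, width = len(streams), len(streams[0])
    # 1. Read: collapse the n streams into one width-C input for the layer.
    layer_in = [sum(h_pre[i] * streams[i][c] for i in range(n)) for c in range(width)]
    layer_out = layer(layer_in)
    # 2. Mix: the learned replacement for the fixed identity shortcut.
    mixed = [[sum(h_res[i][j] * streams[j][c] for j in range(n)) for c in range(width)]
             for i in range(n)]
    # 3. Write: add the layer output back into each stream with its own weight.
    return [[mixed[i][c] + h_post[i] * layer_out[c] for c in range(width)]
            for i in range(n)]


def double(v: Vector) -> Vector:
    # Stand-in for a real layer.
    return [2.0 * x for x in v]


# With one stream and all weights set to 1, this reduces to a standard
# residual connection: x + f(x).
print(hyper_connection_step([[1.0, 2.0]], [[1.0]], [1.0], [1.0], double))
```

The extra expressivity lives in h_res: with n > 1 it can route, blend, and rescale streams, which is also what lets signals grow without limit.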

Training them at scale was another matter. DeepSeek's paper documents the failure with precision. In its 27B-parameter experiments, the gradient norm spiked violently around step 12,000, and training collapsed. The cause: signal divergence. Stack learnable transformations across dozens of layers and small amplifications compound. By layer 60, the forward signal could grow 3,000 times larger. The backward gradient showed similar explosions.

This isn't a bug. Remove the constraints that made residual connections work and you get unbounded amplification. Standard ResNets keep average signal strength constant as it moves through the network. That identity mapping is the whole trick. ByteDance's Hyper-Connections threw it away for expressivity. At small scale, you can paper over the problem with careful tuning. At 27 billion parameters trained across thousands of GPUs, small perturbations compound into collapse.
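A toy version of that failure, under loudly simplified assumptions: every layer uses the same unconstrained 4×4 stream-mixing matrix (identity plus a small positive coupling, so each row sums to 1.1), and "gain" is measured as the largest absolute row sum of the composite matrix. The numbers are illustrative, not DeepSeek's measurements.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def max_gain(m: Matrix) -> float:
    # Largest absolute row sum (the induced infinity-norm): an upper bound on how
    # much the largest-magnitude component of a signal can grow under m.
    return max(sum(abs(x) for x in row) for row in m)


n, depth, coupling = 4, 60, 0.025
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
# Each layer: keep your own stream, plus 2.5% of every stream (including your own).
layer_mix = [[identity[i][j] + coupling for j in range(n)] for i in range(n)]

composite = identity
for _ in range(depth):
    composite = matmul(layer_mix, composite)

print(f"per-layer gain:       {max_gain(layer_mix):.2f}")
print(f"gain after {depth} layers: {max_gain(composite):.0f}")
```

A 10% per-layer excess compounds to roughly a 300x gain over 60 layers, and nothing in the unconstrained parameterization prevents it.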

The Manifold Constraint

DeepSeek's solution projects the learnable transformations onto a specific mathematical structure: doubly stochastic matrices. Think of it as a budget. Unconstrained Hyper-Connections let the signal spend freely, amplifying here and shrinking there with no limits. The manifold constraint forces accounting: every row must sum to 1, every column must sum to 1, no negative numbers. You can move the money around, but you can't print more.
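The budget rules translate directly into a check. This is a small helper of our own (the name is_doubly_stochastic and the tolerance are illustrative), applied to a few example matrices.

```python
def is_doubly_stochastic(m: list[list[float]], tol: float = 1e-9) -> bool:
    n = len(m)
    if any(len(row) != n for row in m):
        return False  # must be square
    if any(x < -tol for row in m for x in row):
        return False  # no negative entries: no "borrowing"
    rows_ok = all(abs(sum(row) - 1.0) <= tol for row in m)
    cols_ok = all(abs(sum(m[i][j] for i in range(n)) - 1.0) <= tol for j in range(n))
    return rows_ok and cols_ok


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
swap = [[0.0, 1.0], [1.0, 0.0]]           # permutation: moves signal, creates none
uniform = [[0.25] * 4 for _ in range(4)]  # full mixing
leaky = [[1.025 if i == j else 0.025 for j in range(4)] for i in range(4)]  # rows sum to 1.1
borrow = [[1.5, -0.5], [-0.5, 1.5]]       # sums are fine, but entries go negative

for name, m in [("identity", identity), ("swap", swap), ("uniform", uniform),
                ("leaky", leaky), ("borrow", borrow)]:
    print(f"{name:8s} -> {is_doubly_stochastic(m)}")
```

The borrow matrix shows why the no-negatives rule matters: it satisfies both sum rules yet maps [1, -1] to [2, -2], doubling the signal.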

Here's why the math matters. Multiplying a feature vector by a doubly stochastic matrix turns each output component into a weighted average of the input's components. No output can exceed the input's maximum or fall below its minimum. Signal strength is bounded automatically, eliminating the explosive growth that killed Hyper-Connections.
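A quick numerical check of that claim, using a hand-picked symmetric 4×4 doubly stochastic matrix (illustrative values, not learned weights).

```python
def apply(m: list[list[float]], x: list[float]) -> list[float]:
    # Each output component is sum_j m[i][j] * x[j]; with nonnegative rows summing
    # to 1, that is a weighted average of the input components.
    return [sum(m[i][j] * x[j] for j in range(len(x))) for i in range(len(m))]


ds = [[0.7, 0.1, 0.1, 0.1],
      [0.1, 0.6, 0.2, 0.1],
      [0.1, 0.2, 0.5, 0.2],
      [0.1, 0.1, 0.2, 0.6]]

x = [5.0, -2.0, 3.0, 10.0]
y = apply(ds, x)
print("y =", [round(v, 6) for v in y])
print("range stays inside input range:", min(x) <= min(y) and max(y) <= max(x))
print("total preserved (columns sum to 1):", round(sum(x), 6), round(sum(y), 6))
```

Row sums of 1 keep every output inside the input's range; column sums of 1 mean the total across streams is conserved, the "can't print more" half of the budget.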

The constraint also survives composition. Multiply two doubly stochastic matrices and you get another one, which means the effective transformation between layer 1 and layer 60 stays bounded. DeepSeek's experiments confirm this: where Hyper-Connections showed gain magnitudes of 3,000, mHC peaked at 1.6.
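Closure is easy to check numerically. This sketch composes 60 copies of a hand-picked doubly stochastic matrix (matrix and depth are illustrative assumptions) and confirms the result is still doubly stochastic, so its largest absolute row sum stays at 1.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def row_and_col_sums(m: Matrix) -> tuple[list[float], list[float]]:
    n = len(m)
    return [sum(row) for row in m], [sum(m[i][j] for i in range(n)) for j in range(n)]


ds = [[0.7, 0.1, 0.1, 0.1],
      [0.1, 0.6, 0.2, 0.1],
      [0.1, 0.2, 0.5, 0.2],
      [0.1, 0.1, 0.2, 0.6]]

composite = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
for _ in range(60):
    composite = matmul(ds, composite)

rows, cols = row_and_col_sums(composite)
print("row sums:", [round(r, 9) for r in rows])
print("col sums:", [round(c, 9) for c in cols])
print("max gain:", max(sum(abs(v) for v in row) for row in composite))
```

In exact arithmetic this gain is exactly 1 at any depth. Our reading (an assumption, not a claim from the paper) is that a measured peak somewhat above 1, such as the reported 1.6, reflects the projection being only approximately doubly stochastic after a finite number of iterations.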

Implementing the projection required solving a practical problem: projecting exactly onto the set of doubly stochastic matrices is expensive. DeepSeek instead uses the Sinkhorn-Knopp algorithm, an iterative method that alternately rescales rows and columns to sum to 1. A fixed twenty iterations proved sufficient.
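A minimal pure-Python sketch of Sinkhorn-Knopp on a 4×4 matrix. Assumptions: exp() makes the learned values positive, and the loop runs a fixed 20 iterations with no convergence test, matching the iteration count described above. This is an illustration, not DeepSeek's kernel.

```python
import math


def sinkhorn_knopp(logits: list[list[float]], iterations: int = 20) -> list[list[float]]:
    """Map an unconstrained square matrix to an (approximately) doubly stochastic one.

    Assumption: entries are made positive with exp() before normalizing; the
    paper's exact parameterization may differ.
    """
    n = len(logits)
    m = [[math.exp(v) for v in row] for row in logits]
    for _ in range(iterations):
        # Row step: rescale every row to sum to 1.
        row_sums = [sum(row) for row in m]
        m = [[v / row_sums[i] for v in row] for i, row in enumerate(m)]
        # Column step: rescale every column to sum to 1.
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return m


logits = [[0.5, -1.0, 0.8, 0.0],
          [1.0, 0.3, -0.5, 0.2],
          [-0.7, 0.7, 0.1, 0.9],
          [0.0, -0.2, 0.6, -0.3]]
p = sinkhorn_knopp(logits)
print("row sums:", [round(sum(row), 6) for row in p])
print("col sums:", [round(sum(p[i][j] for i in range(4)), 6) for j in range(4)])
```

Because the column step runs last, columns sum to 1 to floating-point precision while rows are only approximately normalized; more iterations tighten the rows further.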

Testing on models from 3 billion to 27 billion parameters showed consistent gains. The 27B model improved 2.1% on BIG-Bench Hard and 2.3% on DROP, with smaller gains across six other benchmarks. The gradient norm stayed stable through 50,000 training steps. Training overhead: 6.7%.

The Efficiency Infrastructure

Signal stability solves one problem. Memory overhead creates another. The problem isn't the math; it's the wire. Expand the residual stream from width C to width 4C and you're reading and writing four times as much data per layer. Moving data from memory to the compute core? That takes time. Burns energy. In modern accelerators, that wire often chokes before the processors do.
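Back-of-the-envelope arithmetic for that 4x cost, with hypothetical sizes (8,192 tokens, width C = 4,096, bfloat16, 2 TB/s of memory bandwidth) chosen only to make the scaling concrete. It counts just the residual state, not weights or other activations.

```python
def residual_bytes_per_layer(tokens: int, width: int, streams: int,
                             bytes_per_elem: int = 2) -> int:
    # One read plus one write of the residual state: tokens x (streams * width)
    # elements, each bytes_per_elem bytes (2 for bfloat16).
    return 2 * tokens * streams * width * bytes_per_elem


def transfer_microseconds(num_bytes: int, bandwidth_bytes_per_s: float) -> float:
    return num_bytes / bandwidth_bytes_per_s * 1e6


# Hypothetical sizes: 8,192 tokens in flight, hidden width C = 4,096,
# and a memory bandwidth of 2 TB/s.
tokens, width, bw = 8192, 4096, 2e12
for n in (1, 4):
    b = residual_bytes_per_layer(tokens, width, n)
    print(f"n={n}: {b / 2**20:.0f} MiB per layer, ~{transfer_microseconds(b, bw):.0f} us")
```

Whatever the real sizes, this traffic scales linearly with the expansion rate n.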

DeepSeek's infrastructure shows what happens when engineers get desperate. Kernel fusion combines operations that share memory access into single GPU kernels. Fewer round trips to device memory. The backward pass recomputes intermediate activations on the fly rather than storing them, trading cheap computation for expensive memory. The pipeline schedule overlaps cross-GPU communication with local computation. Hide the latency behind useful work.

For a block of consecutive layers, mHC stores only the input to the first layer. During backpropagation, it recalculates all intermediate states needed for gradient computation, then discards them immediately. Instead of keeping every layer's expanded activations, memory now scales with the number of stored block inputs plus the working set of a single block, so block size controls the trade-off. The optimal block size? Approximately √(n×L/(n+2)), where n is the stream expansion rate and L is the total number of layers. For typical configurations, this aligns with pipeline stage boundaries.
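One simple memory model reproduces that formula. Assume (our assumption, in units of C activations per token) that each stored block input costs n, and that recomputing one block holds about (n + 2) per layer at once. Total memory is then n·⌈L/B⌉ + (n + 2)·B for block size B, and minimizing n·L/B + (n + 2)·B gives B* = √(nL/(n + 2)). The sketch checks this against a brute-force search for a hypothetical 60-layer model with n = 4.

```python
import math


def activation_memory(num_layers: int, block_size: int, n: int) -> int:
    # Stored: one n-stream input per block. Transient: one block's recomputed
    # intermediates, assumed to cost (n + 2) per layer. Units: C activations/token.
    return n * math.ceil(num_layers / block_size) + (n + 2) * block_size


def optimal_block_size(num_layers: int, n: int) -> float:
    # Setting d/dB [n*L/B + (n + 2)*B] = 0 gives B* = sqrt(n*L / (n + 2)).
    return math.sqrt(n * num_layers / (n + 2))


L, n = 60, 4  # hypothetical depth and expansion rate
best = min(range(1, L + 1), key=lambda b: activation_memory(L, b, n))
print(f"formula: B* = {optimal_block_size(L, n):.2f}")
print(f"brute force: B = {best}, memory = {activation_memory(L, best, n)}")
print(f"storing every layer's input (B = 1): memory = {activation_memory(L, 1, n)}")
```

The continuous optimum (about 6.3) and the best integer block size (6) agree, and in this toy model the blocked scheme needs roughly a third of the memory of checkpointing every layer.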

Mixed-precision kernels written in TileLang complete the efficiency picture. Most operations run in bfloat16 for speed, with selective use of float32 where numerical stability demands it. The Sinkhorn-Knopp projection runs entirely in float32 but operates on small 4×4 matrices, making the precision cost negligible.
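Why keep a small projection in float32? A pure-Python illustration (our own, not DeepSeek's TileLang code): rounding one row of a doubly stochastic matrix to bfloat16, which keeps only 8 significant bits, breaks the sum-to-1 budget the constraint depends on.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (ties to even).

    bfloat16 is the top 16 bits of a float32: same 8-bit exponent, 7 stored
    mantissa bits. NaN/inf handling is omitted for brevity.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits &= 0xFFFF0000                   # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


row = [0.7, 0.1, 0.1, 0.1]  # sums to 1 (up to float rounding)
row_bf16 = [to_bfloat16(v) for v in row]
print("bfloat16 row:", row_bf16)
print("sum in bfloat16:", sum(row_bf16))
print("drift after 60 layers:", sum(row_bf16) ** 60)
```

A 0.05% shortfall per row is small, but like any per-layer gain it compounds: about 3% after 60 layers in this toy setup.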

These optimizations are what make the architecture practical at scale. Without them, mHC would join the long list of promising techniques that work beautifully at 125M parameters and collapse under their own weight at production scale.

What the Authorship Pattern Means

Liang Wenfeng, DeepSeek's CEO, appears as the last author on the paper—the position that typically indicates the senior researcher who directed the work. He also uploaded the paper to arXiv himself. This matters.

DeepSeek's major model releases follow a pattern. Liang publishes research introducing core techniques. Weeks or months later, the company ships a model using those techniques. The V3 technical report preceded V3's release. The R1 reasoning paper came before R1. Now mHC arrives roughly six weeks before Spring Festival 2026, when observers expect DeepSeek's next flagship model.

The pattern serves three ends. It builds technical credibility through peer-reviewable research rather than marketing claims. It signals direction to the research community, inviting scrutiny. It creates a public timestamp for innovation, which is useful in a geopolitical environment where the origin of ideas matters for regulatory and competitive reasons.

But the deeper message addresses capability perception. US export controls rest on an assumption: China's AI sector depends on access to cutting-edge chips to remain competitive. Every DeepSeek release that achieves strong results using older hardware challenges that assumption. Publishing the technical details transforms the challenge into a demonstration. Here's how we did it, explained with enough specificity that you can reproduce it.

The Chip Constraint as Forcing Function

DeepSeek operates without access to Nvidia's H100 or H200 GPUs. The most advanced chips available to Chinese companies? A800s and A100s—older architectures with restricted interconnect bandwidth. On the newer chips DeepSeek can't buy, the wire connecting GPUs is thick and fast. On the A800, it's thin. This forces specific design choices.

That thin wire matters when training large models because modern training splits computation across hundreds or thousands of GPUs. The residual stream must move between GPUs at pipeline stage boundaries. Expand the width 4×? That's 4× the communication volume. On H100s with fast NVLink, the overhead might be tolerable. On A800s with restricted interconnect bandwidth, the chip spends more time waiting for data than processing it.

DeepSeek's DualPipe scheduling extension addresses this directly. Overlap residual stream communication with attention and feedforward computations. Hide the latency behind useful work. The recomputation strategy reduces total communication by storing less state across pipeline boundaries. These aren't elegant theoretical contributions. They're pragmatic solutions to a hardware constraint Western labs don't face.
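A rough model of what overlap buys, with hypothetical numbers (8,192 tokens, width 4,096, bfloat16, a 50 GB/s link between pipeline stages, 8 ms of compute per stage). It only illustrates the scheduling idea; it is not DualPipe itself, and it assumes perfect overlap, which real schedules never quite achieve.

```python
def boundary_transfer_s(tokens: int, width: int, streams: int,
                        bandwidth_bytes_per_s: float, bytes_per_elem: int = 2) -> float:
    # Residual state crossing one pipeline-stage boundary: tokens x streams x width elements.
    return tokens * streams * width * bytes_per_elem / bandwidth_bytes_per_s


def stage_time_s(compute_s: float, comm_s: float, overlapped: bool) -> float:
    # Serialized: wait for the data, then compute. Overlapped (ideal): the transfer
    # hides behind compute, so only the slower of the two shows up.
    return max(compute_s, comm_s) if overlapped else compute_s + comm_s


compute = 8e-3  # hypothetical compute time per stage, in seconds
for n in (1, 4):
    comm = boundary_transfer_s(8192, 4096, n, bandwidth_bytes_per_s=50e9)
    print(f"n={n}: transfer {comm * 1e3:.1f} ms | "
          f"serialized {stage_time_s(compute, comm, False) * 1e3:.1f} ms | "
          f"overlapped {stage_time_s(compute, comm, True) * 1e3:.1f} ms")
```

With the 4× wider stream, serializing adds about two-thirds to the stage time in this toy setup, while ideal overlap hides the transfer entirely as long as it stays shorter than the compute.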

The constraint forces rigor. When you can't throw more compute at a problem, you solve it differently. mHC's mathematical foundations—manifolds, doubly stochastic matrices, Sinkhorn-Knopp projection—aren't the product of unlimited experimentation on infinite hardware. They're the result of careful analysis about what properties matter for training stability and how to enforce them efficiently.

This creates an odd dynamic for AI development. The conventional wisdom says access to cutting-edge hardware provides an insurmountable advantage. Better chips mean faster iteration, more hyperparameter sweeps, larger ablation studies. In practice, hardware abundance can enable sloppier engineering. If training goes unstable at scale, add more gradient clipping, tune the learning rate schedule, expand the warmup period. Engineering discipline takes second place to empirical debugging.

Hardware scarcity forces the opposite approach. Understand the failure mode mathematically, identify the minimal constraint that prevents it, implement that constraint efficiently. The resulting architectures may prove more robust precisely because they can't afford fragility.

What This Means for Competitive Dynamics

Bloomberg Intelligence projects that DeepSeek's forthcoming model "has potential to upend the global AI sector again." The R1 release set a precedent: reasoning capabilities competitive with OpenAI's o1 at a fraction of the development cost. If R2 uses mHC and delivers another capability jump, the competitive implications extend beyond benchmarks.

For two years, the assumption in San Francisco has been that money wins. If you can buy 100,000 H100s, you win. This paper suggests that money might just be masking bad engineering. That's a nervous thought for a CFO who just signed a ten-billion-dollar check for a data center.

The AI industry currently operates under an assumption that scale equals capability. Bigger models trained on more data using more compute produce better results. This scaling paradigm justifies massive infrastructure investments. OpenAI raised billions to build computing capacity. Anthropic secured cloud credits from Google. Meta announced plans to deploy over 600,000 H100-equivalent GPUs.

DeepSeek's approach threatens that logic. If architectural innovation can substitute for raw compute, the moat protecting capital-intensive AI development starts looking more like a sunk cost. Models achieving top-15 LLM benchmark performance at one-tenth the training cost don't just compete on price. They show the expensive path isn't the only path.

This matters for markets where compute access faces regulatory or economic constraints. European AI labs can't match US hyperscaler budgets. Developing nations lack data center infrastructure. Academic researchers operate on grant funding. For all these contexts, efficient architectures matter more than abundant compute. DeepSeek's research provides a template.

Western AI labs will study this paper carefully. Some will dismiss it—Chinese research quality, unverified claims, benchmark gaming. Others will attempt replication. The most strategically acute will recognize what it represents: a competitive approach optimized for different constraints that may prove more durable than approaches optimized for unconstrained resources.

The Research Publication Strategy

DeepSeek publishes through arXiv and Hugging Face rather than traditional peer-reviewed venues. This choice trades academic prestige for speed and accessibility. An arXiv paper becomes publicly available immediately. A conference submission enters a 3-6 month review process.

The open publication strategy serves multiple constituencies. Academic researchers get early access to techniques they can build on. Industry practitioners get implementation details without waiting. Regulators see technical capabilities documented transparently. The AI community as a whole benefits from knowledge diffusion.

It also creates competitive pressure. When DeepSeek publishes architectural innovations that deliver measurable gains, Western labs face a choice: adopt similar techniques or explain why they don't need to. The R1 release sparked immediate work on reasoning models from multiple competitors. mHC will likely trigger similar attention on residual stream architectures.

The publication pattern contains a message for the export control regime. Hardware restrictions didn't prevent innovation—they redirected it. Denied access to the fastest chips, Chinese labs developed more efficient algorithms. Blocked from certain supply chains, they built alternative tooling. The controls created short-term obstacles but may have accelerated long-term capability development by forcing fundamental rather than incremental advances.

What Makes This Architecture Matter

Residual connections seemed solved. The 2016 ResNet architecture worked well enough that researchers focused elsewhere. ByteDance's 2024 Hyper-Connections suggested room for improvement but introduced stability problems that limited adoption. DeepSeek's mHC appears to deliver the performance benefits without the training collapse.

The paper works on multiple levels. Technically, it shows that constrained optimization can recover the stability properties of identity mappings while preserving expressivity. At the systems level, it shows that efficiency optimizations matter for practical deployment at scale. Strategically, it exemplifies how hardware constraints can drive architectural innovation that proves valuable beyond the original constraint context.

The paper runs 19 pages with extensive ablations, infrastructure details, and scaling experiments. The authors test on models from 3B to 27B parameters, document memory access patterns, visualize gradient flow dynamics, provide specific kernel implementations. This level of detail suggests confidence. You can replicate this work, verify the claims, build on the foundation.

Whether mHC becomes widely adopted depends on factors beyond technical merit. Does it compose well with other architectural innovations? Do the efficiency gains persist at larger scales? Can Western labs with H100 access extract similar benefits? The answers will emerge as the research community digs into implementation.

But the broader pattern holds regardless. The AI capability gap between resource-rich and resource-constrained labs may be narrowing not despite export controls but partly because of them. Efficiency innovations developed under constraint turn out to be useful everywhere. The architectural advances forced by limited hardware become competitive advantages when scaled to more capable systems.

Spring Festival 2026 arrives in six weeks. If the pattern holds, DeepSeek's next model will show what mHC enables at production scale. The benchmark numbers will matter, but the method will matter more.

❓ Frequently Asked Questions

Q: What exactly is mHC and why does it matter?

A: Manifold-Constrained Hyper-Connections (mHC) is an architecture that prevents training collapse in neural networks by constraining residual connections to doubly stochastic matrices, effectively forcing the network to operate within a mathematical budget where signals can't explode. It matters because it outperforms unconstrained approaches while adding only 6.7% training overhead, suggesting efficiency can substitute for raw compute.

Q: Which chips can DeepSeek actually use versus which are blocked?

A: DeepSeek can access Nvidia's A800 and A100 GPUs, older architectures with restricted interconnect bandwidth. US export controls block them from buying H100 and H200 GPUs, which have faster NVLink connections between chips. These older chips have thinner communication pipes, forcing DeepSeek to develop efficiency optimizations that Western labs with unrestricted hardware access don't need.

Q: What was wrong with ByteDance's Hyper-Connections that mHC fixes?

A: ByteDance's 2024 Hyper-Connections expanded residual stream width for better performance but introduced severe training instability. In DeepSeek's tests, gradient amplification reached 3,000x by layer 60, causing training collapse around step 12,000. The problem: removing mathematical constraints let signals explode or vanish. mHC's doubly stochastic constraint holds peak amplification to about 1.6x while preserving the performance gains.

Q: How much performance improvement did mHC actually deliver in testing?

A: DeepSeek's 27B parameter model with mHC improved 2.1% on BIG-Bench Hard reasoning tasks and 2.3% on DROP reading comprehension, with smaller gains across six other benchmarks. Testing spanned models from 3B to 27B parameters. The gradient norm stayed stable through 50,000 training steps—no collapse. The infrastructure optimizations kept training overhead to 6.7%.

Q: When should we expect DeepSeek's next model release?

A: Spring Festival 2026 arrives in about six weeks (Lunar New Year falls on February 17, 2026). DeepSeek's pattern shows CEO Liang Wenfeng publishing research papers weeks before major model launches: the V3 technical report preceded V3, and the R1 paper came before R1. The mHC paper dropped on New Year's Eve, suggesting the next flagship model (widely called R2) launches around Spring Festival.