💡 TL;DR - The 30 Seconds Version

👉 84% of developers now use or plan to use AI tools (up from 76%), but only 33% trust their accuracy, down from 43% in 2024.

📊 66% are frustrated with "almost right" AI solutions that look correct but need extensive debugging to actually work.

🔧 45% say debugging AI-generated code is more time-consuming than expected, and many say writing it from scratch would be faster.

🤝 75% still ask humans for help when they don't trust AI, with 35% visiting Stack Overflow after AI tools fail them.

🤖 Only 23% use AI agents regularly, and 77% say "vibe coding" isn't part of their professional development work.

🐍 Python usage jumped 7 percentage points, driven largely by its role as the go-to language for AI work.

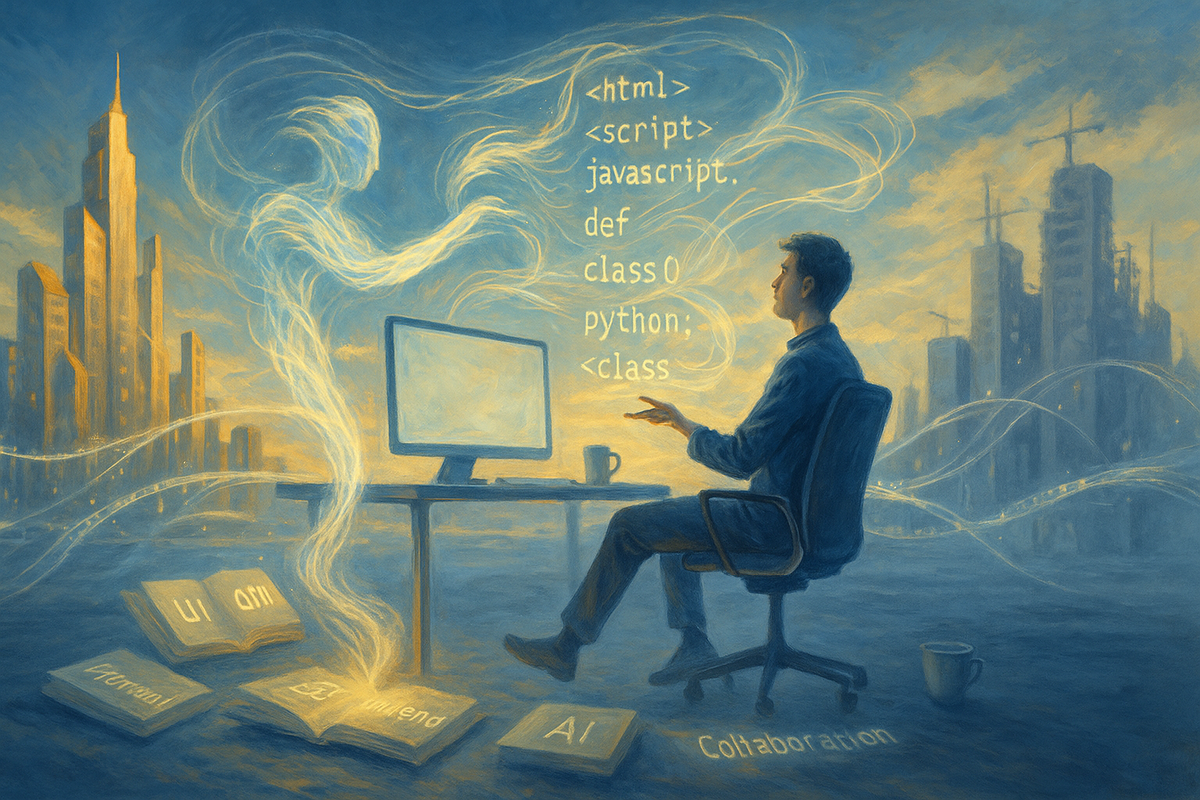

Developers are living through a strange contradiction. They're adopting AI tools faster than ever, but they trust them less each year.

Stack Overflow's 2025 Developer Survey, which gathered responses from more than 49,000 developers in 177 countries, captures this contradiction clearly. Usage is up: 84% now use or plan to use AI tools, compared to 76% last year. But trust crashed. Only 33% trust AI accuracy now, down from 43% in 2024. Favorability dropped from 72% to 60%.

These aren't just data points. Many development teams are hitting the same wall: AI tools often create more work than they save. Instead of boosting productivity, they can create new kinds of technical debt.

The "Almost Right" Problem

The biggest frustration developers face isn't broken AI code. It's code that looks correct but isn't quite right. Sixty-six percent of developers cite "AI solutions that are almost right, but not quite" as their top complaint, and 45% say debugging AI-generated code takes longer than expected.

This creates a nasty trap. Broken code is easy to spot and toss. But "almost right" code? That takes real work. Developers have to analyze what went wrong, then fix it. Many say they could write it faster from scratch.

Erin Yepis, Senior Analyst at Stack Overflow, captured the problem perfectly: "AI tools seem to have a universal promise of saving time and increasing productivity, but developers are spending time addressing the unintended breakdowns in the workflow caused by AI."

The data backs this up. Only 29% of developers believe AI tools can handle complex problems, down from 35% last year. When the stakes are high and complexity increases, AI tools become less helpful and more burdensome.

The Human Safety Net Grows Stronger

You might expect developers to abandon human help as AI tools improve. The opposite is happening. Stack Overflow remains the top community platform at 84% usage, with 89% of developers visiting multiple times per month.

Here's the telling part: 35% of developers visit Stack Overflow specifically after encountering issues with AI responses. The platform has become the human safety net in an increasingly automated world.

This pattern extends beyond Stack Overflow. When developers don't trust AI answers, 75% turn to another person for help, and 61.7% cite "ethical or security concerns about code" as a reason to seek human expertise. The takeaway: AI tools may increase rather than decrease the need for experienced developers who can spot and fix AI-generated problems.

GitHub follows Stack Overflow at 67% usage, and YouTube comes in third at 61%. Developers learning to code show an even stronger preference for YouTube (70% vs 60% for professionals), suggesting that human-created educational content remains essential for skill development.

AI Agents Hit Reality

The AI agent revolution everyone's talking about? It's not here yet. Only 23% of developers use AI agents regularly, with another 8% using them infrequently. A significant 38% have no plans to adopt them at all.

When developers do use AI agents, the benefits are mostly personal rather than team-wide. About 70% agree that agents reduce time spent on specific tasks, and 69% report productivity gains. But only 17% say agents improve team collaboration, making it the lowest-rated impact by far.

"Vibe coding" - where you describe what you want and AI builds it - gets even less traction in professional settings. Seventy-seven percent of developers say it's not part of their professional work. For serious development, precision still beats vibes.

Enterprises betting on AI agents should pay attention here. The tech isn't ready for prime time yet. Most developers won't hand over complex or critical work to autonomous agents.

What This Means for Enterprises

The survey data reveals several important patterns for technical decision-makers. First, rapid AI adoption has outpaced governance capabilities. Organizations face potential security and technical debt risks they haven't fully addressed.

Ben Matthews, Senior Director of Engineering at Stack Overflow, warns that AI coding tools can produce mistakes that even knowledgeable developers might miss. LLMs sometimes can't register their own errors, creating blind spots in code quality.

The "almost right" problem compounds these risks. With 45% of developers reporting increased debugging time for AI code, organizations need stronger code review processes and debugging tools designed specifically for AI-generated solutions.

Python's growth shows another trend. It jumped 7 percentage points this year, mostly because it's the go-to language for AI work. Developers are picking up Python and other AI-friendly languages. About 36% learned to code specifically for AI projects in the past year.

But this learning curve creates its own challenges. Organizations need training programs that teach developers not just how to use AI tools, but how to use them wisely. Developers who use AI tools daily report 88% favorability, compared to 64% for weekly users. That is a correlation rather than proof, but it suggests that deeper integration into daily work may make a real difference.

Trust varies by country too. Indian developers are the most optimistic about AI tools. UK developers? Not so much. Only 23% trust AI output there. Cultural and regulatory differences probably play a role.

The Path Forward

Smart AI adoption means adding tools to existing workflows, not replacing everything. Developers already juggle six or more tools daily. Adding more creates chaos unless done carefully.

The best approach is treating AI tools as helpers, not replacements for human expertise. Companies that fix the "almost right" problem will build faster and write better code. Those that don't will just create more technical debt.

This means investing in debugging capabilities, maintaining human expertise pipelines, and implementing staged AI adoption strategies. It also means recognizing that competitive advantage won't come from AI adoption speed, but from developing better capabilities in AI-human workflow integration.

Why this matters:

• The "almost right" problem creates a new category of technical debt that can actually reduce productivity despite AI's promises - enterprises need better debugging and code review processes specifically for AI-generated solutions.

• Developers are doubling down on human expertise and community resources even as they adopt AI tools, suggesting that successful AI integration amplifies rather than replaces the need for experienced developers.

❓ Frequently Asked Questions

Q: What exactly is "vibe coding" that the survey mentions?

A: "Vibe coding" means describing what you want in plain language and letting AI build the entire application. Only 15% of developers do this professionally, while 77% say it's not part of their serious work.

Q: Which AI tools are developers actually using most?

A: OpenAI's GPT models lead at 81% usage, followed by Claude Sonnet at 43%, Google Gemini Flash at 35%, and OpenAI Reasoning at 35%. ChatGPT dominates at 82% for out-of-the-box agents.

Q: How do experienced developers differ from beginners in trusting AI?

A: Experienced developers are much more cautious. Only 2.5% of experienced developers highly trust AI output, compared to 6.1% of beginners. Experience breeds skepticism about AI accuracy.

Q: What does "almost right" AI code actually look like?

A: It's code that compiles and seems correct but has subtle bugs, wrong logic, or security issues. Unlike obviously broken code, it requires careful analysis to spot problems, making debugging time-consuming.
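To make this concrete, here is a hypothetical illustration (not taken from the survey) of the kind of "almost right" Python a reviewer might wave through. The function names are invented for this sketch. The first version reads naturally and passes casual spot checks; the second applies the full Gregorian leap-year rule.

```python
def is_leap_year_almost_right(year: int) -> bool:
    # Plausible-looking but incomplete: ignores the century exceptions.
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    # Full Gregorian rule: divisible by 4, except century years,
    # unless the century year is also divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Compare both versions on a few years, including the century edge cases.
for y in (2024, 2023, 2000, 1900):
    print(y, is_leap_year_almost_right(y), is_leap_year(y))
```

Both versions agree on 2024, 2023, and 2000, so a quick test with recent years passes. Only 1900 exposes the bug (`True` versus `False`), which is exactly the kind of edge case that makes reviewing "almost right" code slow. In real code, Python's standard library already provides `calendar.isleap` for this check.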

Q: Which countries trust AI tools the most and least?

A: Indian developers show the highest AI optimism, while UK developers are among the most skeptical with only 23% trusting AI output. Cultural and regulatory differences likely influence these patterns.

Q: Is this the first time developer trust in AI has dropped?

A: Not exactly. Trust held roughly steady, at 42% in 2023 and 43% in 2024, then fell to 33% this year. Favorability has been declining for longer: from 77% in 2023 to 72% in 2024 and 60% now.

Q: What specific problems do developers have with AI agents?

A: Developers have three big concerns: 87% worry about accuracy, 81% have privacy and security fears, and 53% say the tools cost too much. On the benefits side, team collaboration gets the worst rating: only 17% think agents actually help there.

Q: How much extra time are developers spending on AI-generated code?

A: 45% report that debugging AI code takes longer than expected. Many say it's often faster to write code from scratch than fix "almost right" AI solutions that look correct but aren't.