OpenAI launched GPT-5 today, bringing three model tiers, complex pricing, and a 56.8% prompt-injection success rate in red-team testing. Here's what users and developers need to know.

🚀 Performance & Technical Specs

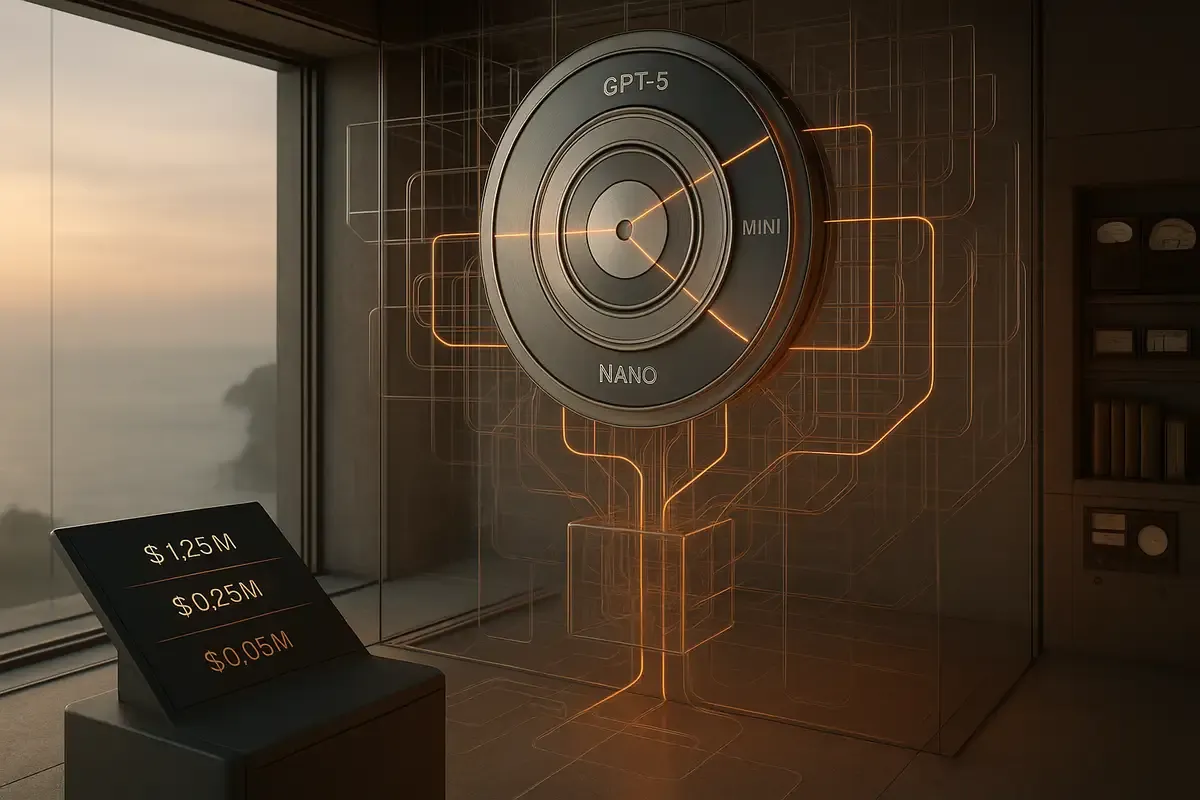

Q: What are the actual differences between GPT-5, mini, and nano?

GPT-5 scores 74.9% on SWE-Bench Verified, a benchmark of real GitHub bug fixes. Mini drops slightly to 71% but costs 80% less. Nano manages 54.7% at 96% lower cost. The gap shows most in coding: expect GPT-5 to produce working code on the first try more often, mini to need occasional fixes, and nano to need multiple attempts. You can feed any of the three models 272,000 tokens (think 200,000 words), and each can output up to 128,000 tokens in response. That output budget includes the model's invisible reasoning tokens.
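The 80% and 96% savings follow from the per-million-token list prices at launch: GPT-5 at $1.25 input and $10 output, mini at $0.25 and $2, nano at $0.05 and $0.40. Input and output prices shrink by the same ratios:

```latex
\begin{aligned}
\text{mini:}\quad 1 - \frac{0.25}{1.25} &= 1 - \frac{2.00}{10.00} = 0.80 \\
\text{nano:}\quad 1 - \frac{0.05}{1.25} &= 1 - \frac{0.40}{10.00} = 0.96
\end{aligned}
```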

Q: How do the four reasoning levels actually affect output?

Minimal spends few or no reasoning tokens, so output starts streaming almost immediately. Low adds light analysis for simple tasks. Medium, the default, handles professional work with deeper thought. High burns through invisible thinking tokens on complex problems. A coding task at minimal might output 2,000 tokens. The same task at high could generate 10,000 thinking tokens plus the same 2,000 output tokens, and you pay for all 12,000. Visual tasks barely improve past low. Math and debugging need high.
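To see how much of an answer is invisible thinking, you can split the output count the API reports. A minimal sketch, assuming the Responses API usage object has been converted to a dict with `output_tokens` (which already includes reasoning) and `output_tokens_details.reasoning_tokens`; those field names match OpenAI's documentation at the time of writing but are worth rechecking. The two usage dicts are hypothetical and reuse the 2,000 and 10,000 token figures above.

```python
def split_output_tokens(usage: dict) -> tuple[int, int]:
    """Return (visible_tokens, reasoning_tokens) from a usage dict.

    Assumes `output_tokens` already includes reasoning tokens, as in
    OpenAI's Responses API usage report.
    """
    total = usage["output_tokens"]
    reasoning = usage.get("output_tokens_details", {}).get("reasoning_tokens", 0)
    return total - reasoning, reasoning


# Hypothetical usage reports for the same coding task at two effort levels.
minimal_usage = {"output_tokens": 2_000, "output_tokens_details": {"reasoning_tokens": 0}}
high_usage = {"output_tokens": 12_000, "output_tokens_details": {"reasoning_tokens": 10_000}}

for label, usage in [("minimal", minimal_usage), ("high", high_usage)]:
    visible, reasoning = split_output_tokens(usage)
    print(f"{label}: {visible} visible + {reasoning} reasoning = "
          f"{visible + reasoning} billed output tokens")
```

At high effort the billed output is six times the minimal run, even though the visible answer is the same length.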

Q: Why does ChatGPT feel different from the API version?

ChatGPT combines a fast model and a deeper reasoning model (plus mini versions once you hit usage limits), with a real-time router choosing between them based on your question. Simple queries hit the fast model. Complex ones trigger reasoning. The API gives you one consistent model at your chosen reasoning level. ChatGPT also shows thinking summaries while it works; by default, the API shows nothing during processing. This makes ChatGPT feel smarter but less predictable than API calls.

Q: How much text can GPT-5 really handle accurately?

GPT-5 accepts 272,000 input tokens, but accuracy drops with length. At 128,000 tokens, it retrieves information correctly 95% of the time. Push to the maximum, roughly 200,000 words and well beyond a 400-page novel, and accuracy falls to 86.8%. That still beats o3's 55% on the same test. But you pay full price even when accuracy degrades.
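A pre-flight check can flag requests that exceed the limits or that sit in the range where retrieval accuracy starts to slip. A minimal sketch: the limits and the 128,000-token accuracy threshold come from the figures above, the 0.75 words-per-token ratio is a rough English-prose heuristic (an assumption), and the token counts would come from your own tokenizer.

```python
MAX_INPUT_TOKENS = 272_000    # GPT-5 input limit
MAX_OUTPUT_TOKENS = 128_000   # output limit, reasoning tokens included
ACCURATE_UP_TO = 128_000      # retrieval was about 95% accurate up to this length
WORDS_PER_TOKEN = 0.75        # rough heuristic for English prose (assumption)


def check_budget(input_tokens: int, max_output_tokens: int) -> list[str]:
    """Return warnings for a planned request; an empty list means it fits comfortably."""
    warnings = []
    if input_tokens > MAX_INPUT_TOKENS:
        warnings.append("input exceeds the 272,000-token limit")
    elif input_tokens > ACCURATE_UP_TO:
        warnings.append("input above 128,000 tokens: expect lower retrieval accuracy")
    if max_output_tokens > MAX_OUTPUT_TOKENS:
        warnings.append("output budget exceeds the 128,000-token limit")
    return warnings


def estimate_words(tokens: int) -> int:
    """Convert a token count to an approximate English word count."""
    return round(tokens * WORDS_PER_TOKEN)


print(check_budget(200_000, 16_000))
print(estimate_words(MAX_INPUT_TOKENS))
```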

💰 Cost & Pricing

Q: What's my real cost per query going to be?

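A 1,000-word prompt (about 1,300 tokens) generating 500 words (about 650 tokens) costs, before any reasoning tokens, about $0.008 on GPT-5, $0.0016 on mini, and $0.0003 on nano at list prices. The wild card is reasoning tokens: they are billed at the output rate ($10 per million on GPT-5, $2 on mini, $0.40 on nano) and never appear in the response text, only in the usage figures the API returns. GPT-5 at high reasoning with 10,000 thinking tokens comes to about $0.11, roughly 13 times the same query at minimal. ChatGPT hides this completely by auto-switching models based on your subscription.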
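Per-query cost is simple arithmetic once you know that reasoning tokens are billed at the output rate. This sketch hard-codes GPT-5's launch list prices (standard tier, no caching or batch discounts) and treats the reasoning-token count as something you supply, since it varies per request; `query_cost` is a hypothetical helper, not part of any SDK.

```python
# List prices in USD per million tokens (input, output) at GPT-5's launch,
# standard tier, uncached.
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def query_cost(model: str, input_tokens: int, output_tokens: int,
               reasoning_tokens: int = 0) -> float:
    """Return the USD cost of one request; reasoning tokens bill at the output rate."""
    input_price, output_price = PRICES[model]
    billed_output = output_tokens + reasoning_tokens
    return (input_tokens * input_price + billed_output * output_price) / 1_000_000


# A 1,000-word prompt (~1,300 tokens) and a 500-word reply (~650 tokens).
for model in PRICES:
    print(model, f"${query_cost(model, 1_300, 650):.4f}")
print("gpt-5 with 10,000 reasoning tokens", f"${query_cost('gpt-5', 1_300, 650, 10_000):.4f}")
```

Swap in the reasoning-token count from a real response's usage data to see what a given query actually cost.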

Q: How does GPT-5 compare to Claude and Gemini pricing?

GPT-5 costs $1.25 per million input tokens and $10 per million output tokens. Claude Sonnet 4 charges $3 and $15. Gemini 2.5 Pro charges $1.25-2.50 input and $10-15 output, depending on prompt length. But raw price misleads: OpenAI reports GPT-5 needs 22% fewer output tokens than o3 for comparable results, and on complex jobs requiring multiple API calls, that efficiency compounds. Cheaper models such as Amazon Nova exist, but without comparable benchmarks.
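Because models use different numbers of tokens for the same job, compare measured token counts at each vendor's list price rather than prices alone. In this sketch the prices are the standard rates for prompts up to 200,000 tokens, and the counts in `measured` are made-up placeholders to replace with your own measurements.

```python
# Standard list prices, USD per million tokens (input, output), for prompts up
# to 200,000 tokens. Gemini 2.5 Pro charges more above that threshold.
LIST_PRICES = {
    "GPT-5": (1.25, 10.00),
    "Claude Sonnet 4": (3.00, 15.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
}


def task_cost(vendor: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one task given the tokens a model actually consumed."""
    input_price, output_price = LIST_PRICES[vendor]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Made-up counts for illustration: measure these for your own workload.
measured = {
    "GPT-5": (10_000, 4_000),
    "Claude Sonnet 4": (10_000, 5_000),
    "Gemini 2.5 Pro": (10_000, 5_000),
}
for vendor, (inp, out) in measured.items():
    print(f"{vendor}: ${task_cost(vendor, inp, out):.4f}")
```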

Q: When does the 90% cache discount apply?

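Input tokens that repeat a recently sent prompt prefix cost one-tenth the normal rate ($0.125 instead of $1.25 per million on GPT-5). Build a chat interface where each message resends the conversation history: the first message pays full price, and later messages get 90% off the repeated history, so a long conversation can cost a fraction of the uncached price. The discount applies only to input tokens, so output-heavy conversations save less. OpenAI applies it automatically to prompts of 1,024 tokens or more, but only for an exact prefix match, so keep stable content such as the system prompt first and append new turns at the end. Cached prefixes typically expire after 5-10 minutes of inactivity.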
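The cache's effect on a growing conversation can be estimated turn by turn: each request's prompt is the previous prompt plus the last reply and the new message, so everything up to the previous prompt is a cache candidate. A rough sketch assuming GPT-5 list prices, a cache that never expires between turns, and whole-prefix hits; the conversation sizes are hypothetical.

```python
INPUT_PRICE = 1.25          # GPT-5, USD per million uncached input tokens
CACHED_INPUT_PRICE = 0.125  # 90% discount on cached input tokens
OUTPUT_PRICE = 10.00        # output tokens are never discounted
MIN_CACHEABLE = 1_024       # prompts shorter than this are not cached


def conversation_cost(turns: int, system_tokens: int, user_tokens: int,
                      reply_tokens: int, use_cache: bool = True) -> float:
    """Estimate the USD cost of a chat that resends its full history every turn.

    Simplification: assumes every previous prompt is still cached and that the
    whole prefix hits (real caching matches in fixed-size increments).
    """
    total = 0.0
    history = system_tokens   # tokens already in the conversation
    previous_prompt = 0       # length of the last request's prompt
    for _ in range(turns):
        prompt = history + user_tokens
        cached = previous_prompt if use_cache and previous_prompt >= MIN_CACHEABLE else 0
        uncached = prompt - cached
        total += (uncached * INPUT_PRICE + cached * CACHED_INPUT_PRICE
                  + reply_tokens * OUTPUT_PRICE) / 1_000_000
        previous_prompt = prompt
        history = prompt + reply_tokens   # the reply joins the history for the next turn
    return total


# Hypothetical chat: 2,000-token system prompt, 200-token questions, 300-token replies.
without_cache = conversation_cost(50, 2_000, 200, 300, use_cache=False)
with_cache = conversation_cost(50, 2_000, 200, 300)
print(f"no cache: ${without_cache:.2f}, with cache: ${with_cache:.2f}")
```

With these made-up numbers the cached chat costs about a quarter of the uncached one; the undiscounted output tokens keep the saving below 90%.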

Q: What are the ChatGPT subscription differences?

Free users get GPT-5 briefly before dropping to mini versions at undisclosed limits. Plus ($20/month) gets "significantly higher" limits—OpenAI won't specify. Pro ($200/month) guarantees unlimited GPT-5 access plus GPT-5-pro with extended reasoning. Business pricing starts at $25/user/month. Only Pro users know which model they're actually using.

🔧 API Platform

Q: What new API features actually matter?

Four features change development. The verbosity parameter (low/medium/high) controls response length without prompt engineering. Custom tools let GPT-5 call tools with plaintext input instead of JSON, avoiding escape-character errors on long inputs. Preamble messages explain what the model is about to do before tool calls, so users finally see progress during long tasks. And the reasoning_effort parameter gains a new "minimal" option for near-instant responses.
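These options map onto request parameters. A minimal sketch of building a Responses API request for the OpenAI Python SDK, assuming the parameter shapes from OpenAI's GPT-5 documentation at launch (`reasoning.effort`, `text.verbosity`, and tools of type `"custom"`; the Chat Completions API names the effort setting `reasoning_effort` instead). The `run_python` tool is hypothetical, and the payload is built separately from the network call so it can be inspected without an API key.

```python
from typing import Optional

VALID_EFFORTS = {"minimal", "low", "medium", "high"}
VALID_VERBOSITY = {"low", "medium", "high"}


def build_request(prompt: str, model: str = "gpt-5", effort: str = "medium",
                  verbosity: str = "medium",
                  custom_tool: Optional[tuple[str, str]] = None) -> dict:
    """Build keyword arguments for a Responses API call (shape assumed from OpenAI's docs)."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"unknown reasoning effort: {effort}")
    if verbosity not in VALID_VERBOSITY:
        raise ValueError(f"unknown verbosity: {verbosity}")
    payload = {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},   # "minimal" starts streaming fastest
        "text": {"verbosity": verbosity},  # controls answer length, not reasoning depth
    }
    if custom_tool is not None:
        name, description = custom_tool
        # A custom tool receives the model's plaintext input instead of JSON arguments.
        payload["tools"] = [{"type": "custom", "name": name, "description": description}]
    return payload


def send(payload: dict):
    """Send the request; needs `pip install openai` and OPENAI_API_KEY set."""
    from openai import OpenAI
    return OpenAI().responses.create(**payload)


quick = build_request("Summarize this changelog in two lines.", effort="minimal", verbosity="low")
coder = build_request("Run a script that prints 2 ** 10.",
                      custom_tool=("run_python", "Executes the Python code it receives"))
print(quick)
print(coder["tools"])
```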

Q: Can I access the thinking traces that ChatGPT shows?

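Not the raw traces. The API bills reasoning tokens as output and reports how many were used, but never returns the tokens themselves. ChatGPT displays thinking summaries; the Responses API offers similar summaries only if you opt in, and otherwise returns silence during processing. That silence is why some developers set reasoning to minimal just to see tokens streaming. OpenAI claims hiding the raw traces protects their training methods. It's the biggest API limitation.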
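Through the Responses API you can ask for condensed reasoning summaries (not the raw reasoning tokens) by adding `"summary": "auto"` to the `reasoning` parameter. A minimal sketch, assuming the response has been converted to a plain dict (for example with the SDK's `model_dump()`) and that summaries arrive as `summary_text` parts inside `reasoning` output items, per OpenAI's documentation at the time of writing; the sample response is hypothetical and trimmed.

```python
def build_summary_request(prompt: str, model: str = "gpt-5", effort: str = "medium") -> dict:
    """Request keyword arguments that opt into reasoning summaries."""
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort, "summary": "auto"},
    }


def extract_summaries(response: dict) -> list[str]:
    """Collect reasoning-summary text from a response converted to a dict."""
    texts = []
    for item in response.get("output", []):
        if item.get("type") != "reasoning":
            continue
        for part in item.get("summary", []):
            if part.get("type") == "summary_text":
                texts.append(part["text"])
    return texts


# Hypothetical, trimmed response: one reasoning item, then the visible answer.
sample = {
    "output": [
        {"type": "reasoning", "summary": [
            {"type": "summary_text", "text": "Checked the off-by-one case first."},
        ]},
        {"type": "message", "content": [{"type": "output_text", "text": "Fixed."}]},
    ]
}
print(build_summary_request("Why does this loop skip the last item?"))
print(extract_summaries(sample))
```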

Q: How do I pick the right model and reasoning combination?

Start with mini at low reasoning for most tasks: it handles most business queries at one-fifth of GPT-5's per-token price. Use GPT-5 with medium reasoning for code generation and complex analysis. Reserve high reasoning for mathematical proofs and multi-step debugging. Nano works for simple classification and basic Q&A. Test each combination, because performance varies widely by task type.
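The recommendations above fit in a small routing table that an application consults before each call. In this sketch the task labels are hypothetical, the mappings mirror the paragraph, and nano's effort level is an assumption because the text does not give one.

```python
# Starting points from the recommendations above. Task labels are hypothetical;
# the effort level for nano is an assumption (the text does not specify one).
ROUTES: dict[str, tuple[str, str]] = {
    "business_query": ("gpt-5-mini", "low"),
    "code_generation": ("gpt-5", "medium"),
    "complex_analysis": ("gpt-5", "medium"),
    "math_proof": ("gpt-5", "high"),
    "multi_step_debugging": ("gpt-5", "high"),
    "classification": ("gpt-5-nano", "low"),
    "basic_qa": ("gpt-5-nano", "low"),
}
DEFAULT_ROUTE = ("gpt-5-mini", "low")   # "start with mini at low reasoning"


def pick_route(task_type: str) -> tuple[str, str]:
    """Return (model, reasoning effort) for a task type, falling back to the default."""
    return ROUTES.get(task_type, DEFAULT_ROUTE)


for task in ["math_proof", "classification", "translate_email"]:
    print(task, "->", pick_route(task))
```

Keeping the table in one place makes it easy to update as your own tests show where each combination works.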

Q: What about agent and tool-calling improvements?

GPT-5 scores 96.7% on τ2-bench telecom, a benchmark on which no model scored above 49% when it was released two months ago. It chains dozens of tool calls without losing context and handles errors better. The model now outputs status updates between tool calls. Cursor says GPT-5 has "half the tool calling error rate" of other models. For agentic coding, it's the clear winner.
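Chaining tool calls comes down to a loop: ask the model for its next step, run the requested tool, append the result, and repeat until it answers. This provider-agnostic sketch replaces the model with a scripted stand-in so it runs offline; `run_agent`, `get_order_status`, and the step format are hypothetical, not OpenAI's API. Tool errors go back to the model as text rather than crashing the loop.

```python
from typing import Callable


def get_order_status(order_id: str) -> str:
    # Hypothetical tool; a real one would query a database or API.
    return f"order {order_id}: shipped"


TOOLS: dict[str, Callable[..., str]] = {"get_order_status": get_order_status}


def run_agent(model_step: Callable[[list], dict], messages: list, max_steps: int = 25) -> str:
    """Loop until the model returns an answer or the step budget runs out.

    `model_step(messages)` stands in for a model call and must return either
    {"tool": name, "args": {...}} or {"answer": text}.
    """
    for _ in range(max_steps):
        step = model_step(messages)
        if "answer" in step:
            return step["answer"]
        name = step["tool"]
        try:
            result = TOOLS[name](**step["args"])
        except Exception as exc:
            # Feed failures back so the model can recover instead of crashing the loop.
            result = f"error: {exc!r}"
        messages.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(messages: list) -> dict:
    """Fake model for offline testing: call one tool, then answer with its result."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "get_order_status", "args": {"order_id": "A17"}}
    return {"answer": tool_results[-1]["content"]}


print(run_agent(scripted_model, [{"role": "user", "content": "Where is order A17?"}]))
```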

🔒 Security & Safety

Q: Is the 56.8% prompt injection rate actually acceptable?

No. Over half of determined attacks still succeed with ten attempts. That's better than Claude's 60%+ and others hitting 70-90%, but "least vulnerable" doesn't mean safe. For comparison, you wouldn't deploy a firewall that fails 56.8% of the time. Any application handling sensitive data needs additional security layers beyond model-level protections.
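One such layer is a permission check that runs in ordinary code after the model proposes a tool call and before anything executes. This is a generic sketch of that pattern, not an OpenAI feature; `ToolPolicy`, `authorize`, and the tool names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    allowed: frozenset         # tools the application exposes at all
    needs_approval: frozenset  # tools that act on sensitive data or the outside world


def authorize(tool_name: str, policy: ToolPolicy, user_approved: bool = False) -> str:
    """Decide outside the model whether a requested tool call may run.

    Returns "allow", "ask_user", or "deny". The model is never consulted, so an
    injected instruction cannot talk its way past this check.
    """
    if tool_name not in policy.allowed:
        return "deny"
    if tool_name in policy.needs_approval and not user_approved:
        return "ask_user"
    return "allow"


# Hypothetical tool names for an email assistant.
policy = ToolPolicy(
    allowed=frozenset({"search_inbox", "send_email"}),
    needs_approval=frozenset({"send_email"}),
)
print(authorize("search_inbox", policy))    # read-only tool runs directly
print(authorize("send_email", policy))      # waits for a human
print(authorize("delete_account", policy))  # never exposed to the model
```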

Q: What's this "safe completions" approach?

Instead of refusing sensitive questions outright, GPT-5 gives partial answers based on perceived intent. Ask about combustion energy for homework, get detailed help. Ask suspiciously, get high-level information only. The model guesses your intent from context clues. When it guesses wrong, students get blocked from legitimate research or bad actors get enough information to continue.

Q: How much should I trust GPT-5's outputs?

GPT-5 hallucinates 80% less than o3—about 1% error rate on factual claims versus o3's 5.2%. Simon Willison reports zero hallucinations in two weeks of testing. But OpenAI's system card still warns: "verify GPT-5's work when stakes are high." The model also admits failure more often instead of inventing answers. Trust but verify remains the rule.
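The "80% less" figure follows directly from the two error rates:

```latex
\text{relative reduction} = \frac{5.2\% - 1.0\%}{5.2\%} = \frac{4.2}{5.2} \approx 0.81
```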

Q: Does GPT-5 detect when I'm trying to manipulate it?

Sometimes. OpenAI trained it to recognize common manipulation patterns and respond carefully. But the 56.8% injection success rate proves detection fails often. The model can't distinguish between legitimate edge cases and actual attacks. It's particularly vulnerable to multi-turn attacks where trust builds gradually. Don't rely on the model to police itself.

💻 Coding & Development

Q: How good is GPT-5 at actual production code?

GPT-5 scores 74.9% on SWE-Bench Verified, fixing real GitHub issues. In OpenAI's internal testing, people preferred its frontend code over o3's 70% of the time. Cursor calls it "the smartest model we've used." But success varies by language and framework: it excels at Python, JavaScript, and React, and struggles with niche languages and legacy codebases. Always review generated code; it still fails about a quarter of SWE-Bench Verified tasks.

Q: What's this "vibe coding" trend with GPT-5?

Vibe coding means describing what you want in plain English and letting AI write the code. OpenAI demonstrated building a French learning app with games in minutes. Lovable's CEO says GPT-5 builds "complicated applications" like financial planning tools with embedded chatbots. The code stays maintainable through iterations. But you still need to understand the output to debug edge cases.

Q: Should I switch from GitHub Copilot or Cursor to GPT-5?

Not necessarily. Copilot and Cursor integrate GPT-5 already, choosing when to use it. Direct API access gives more control but requires building your own interface. GPT-5 excels at greenfield projects and major refactoring. Existing tools handle autocomplete and small edits better. The sweet spot is using GPT-5 API for complex generation, keeping current tools for daily coding.

Q: How does GPT-5 compare to DeepSeek and other coding models?

GPT-5 beats everything on standard benchmarks. DeepSeek costs less but lacks comprehensive testing. GPT-5 uses fewer tokens and makes fewer tool-calling errors than any competitor. Chinese models like DeepSeek gain ground on price but lag on English documentation and framework knowledge. For production code in major languages, GPT-5 leads. For experimentation, cheaper alternatives work.