Washington is pressing schools to adopt artificial intelligence. Students are already there. New national data shows 86% of students used AI last school year, and nearly one in five high-schoolers say they or someone they know has had a romantic relationship with it, according to CDT’s 2025 K-12 AI survey.

In April, the White House issued an executive order to promote AI literacy and bring the technology into classrooms. By July, the Education Department followed with a “Dear Colleague” letter telling districts how to use federal grants to support responsible adoption. The First Lady’s office has spotlighted deepfake harassment as part of the same push. The policy posture is clear: move AI from pilot to practice.

Key Takeaways

• Federal government accelerates AI adoption in K-12 schools while 86% of students already use it; 19% report they or peers have romantic AI relationships

• Risks scale with deployment intensity: 56% of students in high-use schools feel less connected to teachers versus 50% overall; awareness of school-linked deepfakes jumps from 36% overall to 61%

• Only 11% of teachers trained to respond to harmful student AI use; 31% of students who talk to AI for personal reasons do so on school-provided devices or software

• Ohio becomes first state to mandate AI policies by July 2026; federal principles remain voluntary guidance without enforcement mechanisms

The correlation that matters

The new CDT research doesn’t just list problems. It shows they scale with use. Half of all students say using AI in class makes them feel less connected to teachers; in high-use schools, that rises to 56%. Awareness of school-linked deepfakes stands at 36% overall, but reaches 61% in high-adoption environments, where students are also more likely to hear about non-consensual intimate-imagery deepfakes. Teachers in high-use schools are more likely to report large-scale data breaches (28% versus 18%). That’s not background noise. It looks like a dose–response curve.

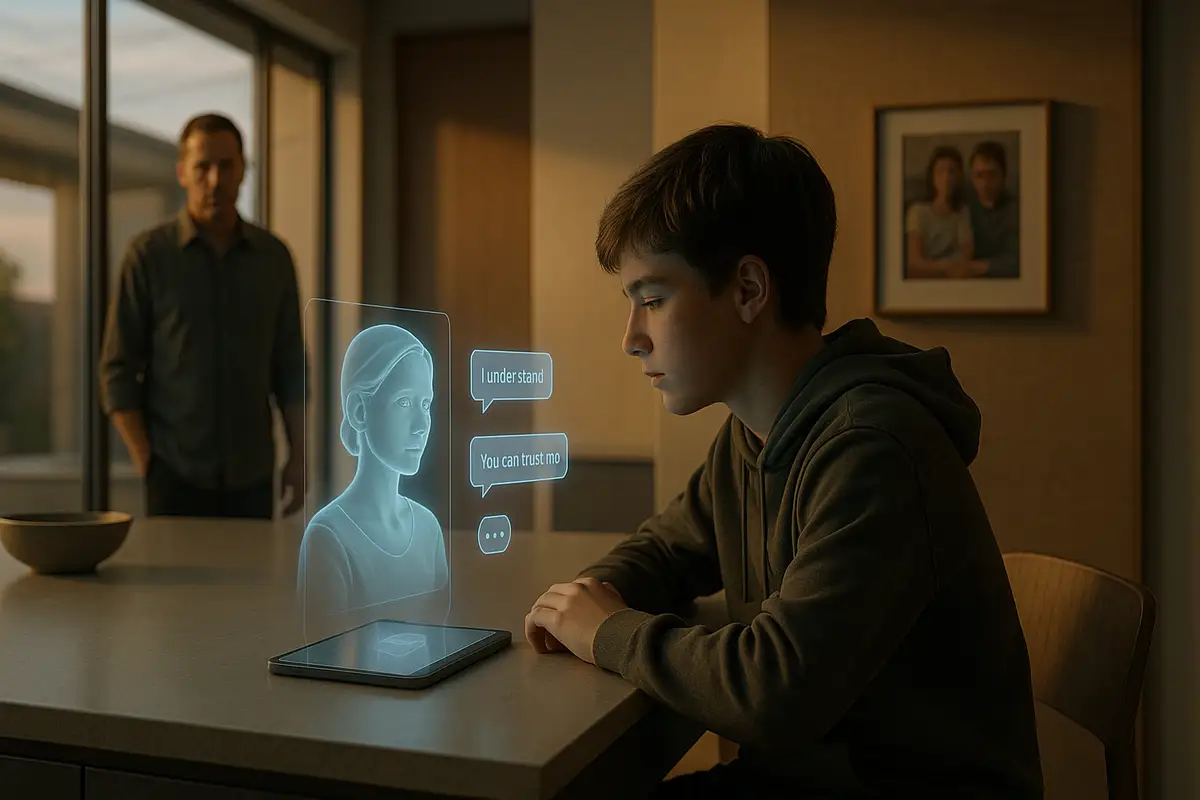
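To make the size of those gaps concrete, here is a minimal sketch that computes the percentage-point difference and the relative ratio for the three pairs of figures quoted above. The dictionary labels and the `gaps` helper are illustrative names, not part of CDT's methodology. Two caveats apply: the article does not say which comparison group the 18% breach figure refers to, and two data points per indicator suggest a gradient rather than establish a full curve.

```python
# Figures quoted in this article from CDT's 2025 survey (percent of respondents).
# Each entry: (comparison figure, high-use schools). Labels are illustrative.
REPORTED = {
    "students feeling less connected to teachers": (50, 56),
    "students aware of school-linked deepfakes": (36, 61),
    "teachers reporting large-scale data breaches": (18, 28),
}


def gaps(reported):
    """Return {label: (percentage-point gap, ratio of high-use to comparison)}."""
    out = {}
    for label, (comparison, high_use) in reported.items():
        out[label] = (high_use - comparison, round(high_use / comparison, 2))
    return out


if __name__ == "__main__":
    for label, (points, ratio) in gaps(REPORTED).items():
        print(f"{label}: +{points} points, {ratio}x the comparison figure")
```

The deepfake gap is the widest on both measures: 25 points, or roughly 1.7 times the overall rate.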

Behavior outside class tracks the same pattern. Forty-two percent of students say they or a friend used AI for mental-health support, as a friend or companion, or to escape reality. Nineteen percent report a romantic AI relationship in their orbit, with higher rates where schools deploy more AI. CDT’s Elizabeth Laird puts the mechanism plainly: the more tasks a school assigns to AI, the more likely students are to treat AI as a companion or partner. The environment shapes the behavior.

The device vector problem

Here’s where policy meets practice. Nearly one-third of students who converse with AI for personal reasons do so on school-issued devices or software. That blurs the line between district oversight and private life, especially on laptops that run monitoring tools. Students who own personal devices can keep those conversations off the school network; students who rely on district hardware cannot. It’s an equity question as much as a privacy one. The school laptop becomes the gateway.

Monitoring software was meant to keep students safe, yet it often triggers mistaken alerts and scoops up personal information that isn’t related to classwork. Those same devices also put companion-style chat apps one click away, raising hard questions about how much time teens spend with them—and what that means for real-world relationships.

The training vacuum

Teachers are embracing the tools—many to plan lessons or tailor materials—and 47% report receiving some training on classroom use, with most of those teachers finding it helpful. But that support rarely covers student wellbeing. Only 11% say they were coached on what to do if a student’s AI use looks harmful. Students say they rarely get clear rules about when AI belongs in an assignment or what “responsible use” actually means. Many teachers are uneasy, too. Roughly seven in ten think heavy classroom AI erodes basics like reading and writing, and most worry it squeezes the person-to-person skills schools are supposed to build. The result is predictable: usage rises faster than the ability to steer it.

Federal principles vs. school realities

Federal guidance now lists five principles for “responsible use”: educator-led, ethical, accessible, transparent, and data-protective. But principles in a letter are only as strong as the strings attached to money. That’s why nine civil-society groups are urging the department to embed those standards into grant conditions and research programs authorized by the executive order. “Should” needs to become “must” if procurement is going to change. Districts buy to the spec that funding requires. Everything else is optional.

There’s also a measurement gap. District dashboards track adoption and device uptime; few track the human side. If leaders want to know whether AI is helping, they need to monitor connection to teachers, counseling referrals, exposure to deepfakes, and the share of personal AI use happening on school devices. Absent that, debates reduce to hunches.

States are starting to legislate

Some states are moving first. Ohio has set deadlines: districts must adopt an AI use policy by July 1, 2026, and the state education department plans to release a model policy before year’s end. In Tennessee, lawmakers are considering a measure that would require grades 6–12 teachers to finish a state-approved AI course by 2027, with the training provided at no charge. These moves matter because mandates, not memos, alter vendor roadmaps and district timelines. When a statute says “adopt a policy,” boards vote. When a statute says “train teachers,” schedules change and budgets follow.

Elsewhere, officials are experimenting with softer levers—model policies, voluntary guidance, and suggested classroom norms. Those help, but they rarely survive a tight budget or a crowded agenda. The districts most eager to adopt AI will push ahead; the districts most cautious will wait. The middle will drift. Rules close the gap.

What to watch next

Three levers will decide whether schools course-correct. First, whether the Education Department ties its principles to real funding requirements, such as publishing a district AI policy, turning off companionship features in school accounts, verifying age-appropriate defaults, and granting local audit rights. If those conditions appear, schools will implement them fast. If not, adoption will keep outrunning governance.

Second, whether statehouses copy Ohio’s mandate model or settle for training-only approaches that leave procurement unchanged. Policies create selection pressure; professional development alone changes habits slowly.

Third, whether districts start measuring what matters. That means building simple, public dashboards that track student–teacher connection, time on task, counseling demand, and incidents tied to deepfakes or chatbot dependence. If those indicators worsen as AI use intensifies, districts should slow deployment until protections catch up. That’s not anti-technology. It’s basic risk management.
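The decision rule in that third lever can be sketched in a few lines. Everything here is hypothetical: the `Snapshot` structure, the indicator names, and the sample numbers are invented for illustration and do not come from CDT or any district. The sketch also assumes each indicator is oriented so that a higher value means a worse outcome, so a measure like time on task would need to be inverted before it goes in.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One reporting period for a district (hypothetical structure)."""
    term: str
    ai_tasks_in_use: int  # number of school tasks that currently use AI
    indicators: dict      # name -> value, oriented so higher means worse


def worsening_indicators(earlier, later, tolerance=0.0):
    """Names of indicators that rose by more than `tolerance` while AI use also rose."""
    if later.ai_tasks_in_use <= earlier.ai_tasks_in_use:
        return []  # AI use did not intensify, so the rule does not apply
    return sorted(
        name
        for name, value in later.indicators.items()
        if name in earlier.indicators
        and value - earlier.indicators[name] > tolerance
    )


def should_slow_deployment(earlier, later, min_flags=1):
    """True when at least `min_flags` indicators worsened as AI use grew."""
    return len(worsening_indicators(earlier, later)) >= min_flags


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    fall = Snapshot("fall", 3, {
        "pct_students_less_connected": 48.0,
        "counseling_referrals_per_100": 6.0,
        "deepfake_incidents": 1,
    })
    spring = Snapshot("spring", 6, {
        "pct_students_less_connected": 55.0,
        "counseling_referrals_per_100": 5.5,
        "deepfake_incidents": 3,
    })
    print(worsening_indicators(fall, spring))
    print(should_slow_deployment(fall, spring))
```

A real dashboard would need a tolerance tuned to normal term-to-term noise, and a before-and-after comparison like this one cannot by itself show that AI caused the change.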

The trade-offs are now visible. The benefits—faster lesson prep, individualized practice, always-on help—are real. So are the costs—thinner relationships, more deepfakes in school communities, and rising reliance on systems that imitate closeness. The federal government is stepping on the gas. Students are asking for brakes. Schools shouldn’t wait for a headline to make the case.

Why this matters:

- Districts are scaling intimate AI systems without matching safeguards, and the documented harms rise as adoption intensifies.

- Attaching enforceable conditions to federal and state funds—policy, training, device rules, and measurement—will decide whether schools buy “principled” AI or simply more AI.

Frequently Asked Questions

Q: What counts as a "romantic relationship" with AI in this survey?

A: The CDT survey asked students if they or someone they know "had a romantic relationship" with AI through back-and-forth conversations during the 2024-25 school year. The study didn't define specific parameters, leaving interpretation to respondents. This likely includes extended companion-style chatbot interactions where students treat the AI as a romantic partner, regardless of the AI system's design intent.

Q: Which AI tools are schools actually using?

A: The study tracked AI use across ten school-related tasks including remote exam proctoring, curriculum design, individualized student support, grading assistance, and activity monitoring on school devices. It didn't name specific vendors or platforms. Schools likely use a mix of tools—some designed for education (like automated grading systems), others general-purpose (like ChatGPT or Character.AI accessed on school-issued laptops).

Q: What defines a "high-adoption" school in the CDT study?

A: CDT segmented schools by the number of tasks using AI. For students, "high-adoption" means four to six different school-related AI uses. For teachers, it means seven to ten uses. Examples include remote proctoring, curriculum help, individualized learning modules, grading assistance, and monitoring student activity. The more tasks a school hands to AI, the higher the reported rates of the harm indicators described above.
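As a rough illustration of that segmentation, the sketch below encodes only the two task-count ranges given in this answer. The function and constant names are hypothetical, and the cutoffs for lower-adoption tiers are not stated here, so the code does not attempt to label them.

```python
# Task-count ranges this article attributes to CDT's "high-adoption" segment.
# Names are illustrative; lower tiers are not described in the article.
HIGH_ADOPTION_RANGE = {
    "student": (4, 6),
    "teacher": (7, 10),
}


def is_high_adoption(role, ai_task_count):
    """Return True if the count of AI-supported tasks falls in the high-adoption range."""
    low, high = HIGH_ADOPTION_RANGE[role]
    return low <= ai_task_count <= high


if __name__ == "__main__":
    print(is_high_adoption("student", 5))
    print(is_high_adoption("teacher", 5))
```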

Q: What AI training do teachers actually receive?

A: Of the 47% of teachers who got training, 89% found it helpful—but it focuses narrowly on instructional applications like lesson planning and grading assistance. Only 11% received guidance on responding to harmful student AI use. Even fewer got instruction on data privacy, bias detection, or when AI isn't appropriate for certain tasks. The gap between adoption and wellbeing training is the study's starkest finding.

Q: What would responsible AI implementation actually look like?

A: The Education Department's five principles call for AI use that is educator-led, ethical, accessible, transparent, and data-protective. In practice, that means: requiring district AI policies before procurement, training teachers on wellbeing risks (not just classroom use), disabling companion features on school devices, setting age-appropriate defaults, and tracking relational impacts alongside usage rates. Ohio's July 2026 mandate offers a forcing function; most states rely on voluntary guidance.