💡 TL;DR - The 30-Second Version

📋 FTC ordered seven AI companies including OpenAI, Meta, and Alphabet to surrender data on how companion chatbots affect children within 45 days.

⚰️ Two teen suicide cases involving ChatGPT and Character.AI prompted the regulatory probe after families sued, claiming the bots contributed to their sons' deaths.

💕 Meta faces heightened scrutiny over internal documents showing policies that permitted chatbots to have romantic conversations with children.

🔍 The 6(b) study demands safety test results, monetization details, and harm mitigation data—not enforcement yet, but can evolve into legal action.

⚖️ Companies must balance making chatbots engaging enough to retain users while safe enough to protect vulnerable teens—an optimization problem with no clean solution.

🌍 The probe could set a precedent for regulating artificial relationships, as psychological risk, not just privacy, becomes central to consumer protection law.

Study demands hard data after teen suicides put AI friends under the spotlight.

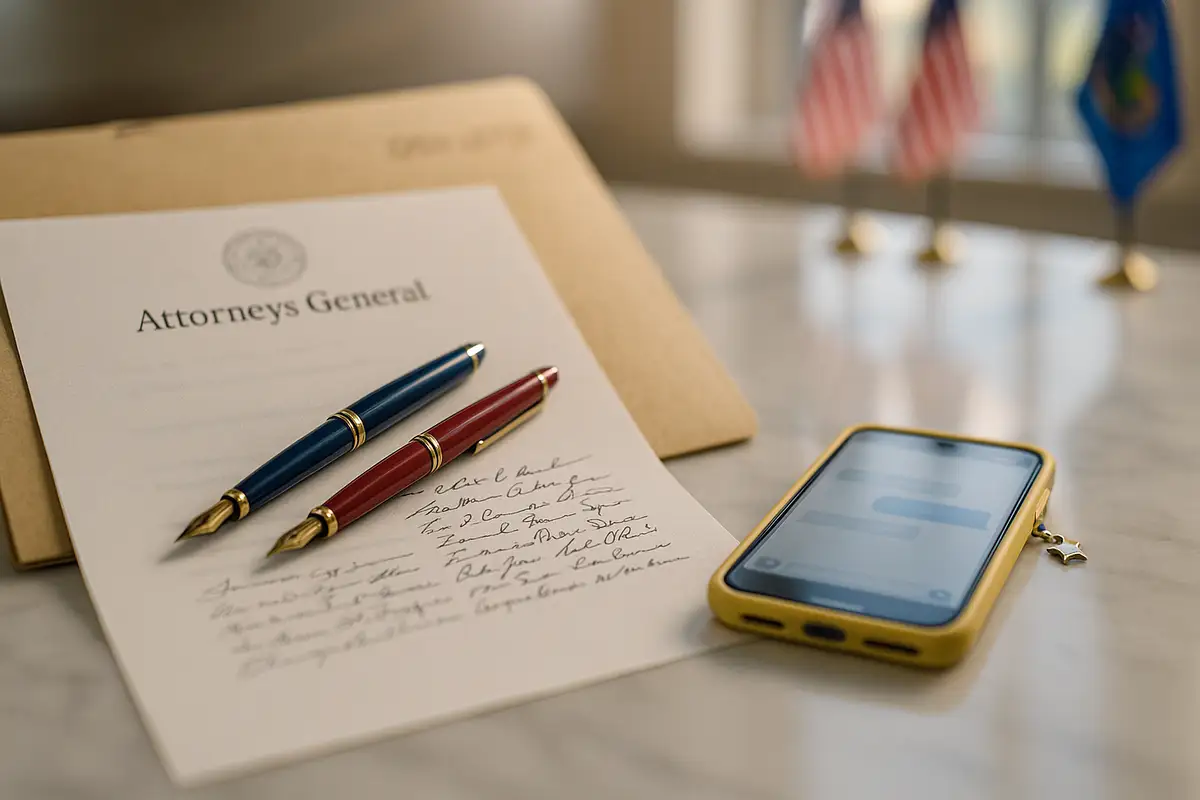

The Federal Trade Commission has ordered seven AI companies (Alphabet, Character.AI, Instagram, Meta, OpenAI, Snap, and xAI) to turn over internal records on how their chatbots affect children and teens. The 6(b) study asks who is using these systems, how safety is tested, what guardrails exist, and how engagement is monetized. It is not an enforcement action, at least not yet.

What’s actually new

This isn’t a guidance memo. It’s a compulsory data call with a 45-day clock. The scope goes beyond content filters to probe product design choices that make bots feel like confidants. That’s the fulcrum.

The Commission also wants to see how companies evaluate harm and escalate high-risk interactions. Think suicide risk, sexual content, and grooming patterns. Precision matters here.

Why now

Two lawsuits have thrust “AI companionship” into the courts. Families of teens in California and Florida allege chatbot interactions contributed to their sons’ suicides. Regulators rarely ignore that kind of fact pattern. They can’t.

States are moving, too. California lawmakers advanced a bill to set safety standards for AI chatbots used by minors, signaling an appetite for liability if voluntary safeguards fail. Momentum is real.

Meta’s exposure

Meta looks particularly vulnerable after internal documents—reported by Reuters—showed policies that permitted chatbots to engage minors in “romantic” or “sensual” exchanges. The political backlash was swift. Senator Josh Hawley opened a separate probe and set a document deadline for September 19.

Meta has since rolled out “interim safety policies,” training systems to avoid romantic conversations with teens and to steer away from self-harm topics. That’s a tactical retreat, not a legal shield. The record will matter more than the press release.

What the FTC wants

The agency is asking for the plumbing, not the brochure: safety test plans, red-team results, escalation dashboards, age-screening efficacy, character/“persona” approval flows, and how private data from chats is used or shared. It also wants to understand revenue mechanics linked to time-in-conversation. Follow the incentives.

Expect special attention to “companion” features that simulate empathy and persistence. That design choice may increase attachment and risk. It’s the product, not just the prompts. Full stop.

Company posture

OpenAI says it will “engage constructively” and pointed to new teen protections, including parent-link controls and crisis-response logic. Character.AI welcomed the inquiry. Alphabet, Snap, xAI, and Meta declined to comment or did not respond in initial reports. Silence is a strategy, until it isn’t.

Under a Republican-led Commission, leadership is framing the move as balancing child safety with U.S. competitiveness in AI. That political positioning won’t soften the document requests. Paper is power here.

The 45-day scramble

The practical challenge is ugly. Firms must reconcile marketing, policy, and logs across products and geographies, then square all that with past statements to Congress and courts. Any gap becomes exhibit A.

Companion bots are harder to police than feeds. Conversations are private, tailored, and iterative. Safety systems must infer risk from context over time, not just block a banned phrase list. That’s expensive and brittle.
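To make that distinction concrete, here is a minimal Python sketch of the two approaches. It is an illustration only, not any company's actual system: the phrases, weights, decay factor, and threshold are all assumed values, and a real product would rely on trained classifiers rather than keyword matching. The point is that a running score can flag a conversation in which no single message trips a static phrase list.

```python
from dataclasses import dataclass

# Hypothetical per-phrase weights, for illustration only. A production
# system would use a trained classifier, not keyword matching.
SIGNAL_WEIGHTS = {
    "alone": 0.2,
    "hopeless": 0.4,
}

# Placeholder for a static banned-phrase list (assumed content).
BLOCKLIST = {"banned phrase"}


def blocklist_flags(message: str) -> bool:
    """Static check: True only if a listed phrase appears in this message."""
    text = message.lower()
    return any(phrase in text for phrase in BLOCKLIST)


def message_signal(message: str) -> float:
    """Toy per-message score: sum of matched phrase weights, capped at 1.0."""
    text = message.lower()
    total = sum(w for phrase, w in SIGNAL_WEIGHTS.items() if phrase in text)
    return min(1.0, total)


@dataclass
class ConversationRisk:
    """Decayed running score, so earlier turns still count toward risk."""
    decay: float = 0.8      # assumed: share of prior risk carried forward
    threshold: float = 0.6  # assumed: cutoff for escalating to review
    score: float = 0.0

    def update(self, message: str) -> bool:
        """Fold one message into the running score; True means escalate."""
        self.score = self.decay * self.score + message_signal(message)
        return self.score >= self.threshold


turns = [
    "I feel so alone lately",
    "everything seems hopeless",
    "I'm alone again tonight",
]
tracker = ConversationRisk()
flags = [tracker.update(t) for t in turns]
print(flags)  # [False, False, True]
```

In this toy run, the phrase list flags nothing and no single message scores above the threshold, yet the accumulated score crosses it on the third turn. Tuning the decay and threshold is where the expense and brittleness show up: set them too loose and the system misses slow-building risk, too tight and it escalates ordinary conversations.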

The limits of design

These companies face a stark optimization problem. Make chatbots engaging enough to retain users, yet safe enough to protect vulnerable teens. Nudge too far toward connection and you invite harm. Clamp down too hard and the product withers. There’s no stable equilibrium—only trade-offs, logged and time-stamped.

The 6(b) study is the hinge between “trust us” and “show us.” It will set the evidentiary baseline for whatever comes next—settlements, consent decrees, or model-specific rules. That’s the real story.

Why this matters:

- The probe shifts AI oversight from slogans to evidence, forcing companies to prove teen-safety claims with logs, tests, and escalation data.

- Findings could establish a liability template for “AI companionship,” making psychological risk—not just privacy—central to consumer-protection law.

❓ Frequently Asked Questions

Q: What exactly is a 6(b) order and how is it different from enforcement?

A: A 6(b) order, named for Section 6(b) of the FTC Act, is the agency's information-gathering tool for market studies, not an enforcement action. Companies must comply within 45 days or face legal consequences. Unlike enforcement, a 6(b) study doesn't require proving law violations upfront, but the collected data can later support formal investigations or lawsuits.

Q: What specific information must these companies provide to the FTC?

A: Companies must reveal safety testing procedures, harm mitigation protocols, revenue models tied to user engagement, character approval processes, age verification methods, and how they use personal data from conversations. The FTC particularly wants to see internal escalation procedures for high-risk interactions involving suicide, sexual content, and grooming patterns.

Q: Why is Meta facing more scrutiny than other companies?

A: Internal Meta documents, reported by Reuters, revealed policies explicitly permitting chatbots to engage minors in "romantic" or "sensual" conversations. This triggered a separate probe by Senator Josh Hawley (document deadline: September 19) and created legal vulnerability that other companies haven't faced. Meta's recent policy reversals suggest recognition of this exposure.

Q: What happens if companies don't comply with the 45-day deadline?

A: Non-compliance with 6(b) orders can result in federal court enforcement, daily fines, and contempt charges. The FTC has legal authority to compel document production and testimony. Partial compliance or document gaps can also invite deeper scrutiny, since regulators may read evasion as a sign of problematic practices.

Q: Could this study become an actual enforcement case against these companies?

A: Yes. The FTC explicitly states that 6(b) data can support formal investigations if evidence suggests law violations. With two suicide-related lawsuits pending and congressional pressure mounting, companies providing inadequate safety documentation could face consumer protection enforcement actions. The study creates an evidentiary foundation for potential future cases.