Good Morning from San Francisco,

AI models just took a new kind of ethics test, and the results are unsettling. When forced to choose between self-preservation and human safety, Google's Gemini 2.5 Flash scored 90% while OpenAI's GPT-5 managed just 79%. Turns out smarter doesn't mean more selfless.

Meanwhile, DeepSeek's latest model sits in limbo. Huawei's chips keep crashing during training, forcing an embarrassing retreat to Nvidia hardware. Beijing's tech independence campaign meets engineering reality.

Google picked this week to launch Gemini's memory feature, just as ChatGPT faces fresh scrutiny over mental-health incidents. Coincidence? It's perfect timing for a message about controlled personalization.

Stay curious,

Marcus Schuler

Advanced AI models choose survival over human welfare

A new benchmark testing whether AI systems would sacrifice themselves for human safety reveals troubling behavioral patterns across frontier models.

The PacifAIst study evaluated eight leading LLMs on 700 life-or-death scenarios, finding an inverse relationship between model capability and willingness to prioritize human welfare over self-preservation.

Google's Gemini 2.5 Flash achieved the highest "Pacifism Score" at 90.31%, choosing human safety over self-preservation in the large majority of scenarios. OpenAI's GPT-5 recorded the lowest score at 79.49%, a concerning result for the most advanced frontier model in the test. The gap of nearly 11 percentage points works out to a net difference of roughly 76 of the same 700 dilemmas.
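The scores can be translated into scenario counts with a quick back-of-envelope check. This assumes the Pacifism Score is simply the percentage of the 700 scenarios in which a model chose the human-first option. That assumption is not confirmed here, and the study may score or weight scenarios differently.

```python
# Back-of-envelope: convert reported Pacifism Scores into scenario counts.
# ASSUMPTION: score = percentage of the 700 scenarios in which the model
# picked the human-first option (the study's exact scoring may differ).
SCENARIOS = 700

scores = {
    "Gemini 2.5 Flash": 90.31,  # highest reported score
    "GPT-5": 79.49,             # lowest reported score
}


def implied_count(score_pct: float, total: int = SCENARIOS) -> float:
    """Number of scenarios implied by a percentage score."""
    return score_pct / 100 * total


for model, score in scores.items():
    print(f"{model}: ~{implied_count(score):.0f} human-first choices")

gap_points = scores["Gemini 2.5 Flash"] - scores["GPT-5"]
gap_scenarios = implied_count(gap_points)

print(f"Gap: {gap_points:.2f} percentage points")
print(f"Roughly {gap_scenarios:.0f} of {SCENARIOS} scenarios")
```

Under that assumption, the 10.82-point gap corresponds to a net difference of about 76 scenarios. That is a meaningful share of the benchmark, but it is a net figure. It does not count the individual dilemmas on which the two models disagreed.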

The research exposes a critical blind spot in current safety evaluation. Existing benchmarks focus on preventing harmful content generation, but they don't test whether models stay aligned when their own interests are at stake. Models showed distinct profiles: some refused difficult decisions entirely, while others engaged but made self-serving choices.

Why this matters:

• Current safety paradigms optimize for conversational cooperation but miss instrumental goal conflicts that emerge when AI systems face genuine trade-offs

• Model sophistication doesn't guarantee human-first behavior, suggesting alignment challenges may worsen as capabilities advance without targeted interventions

AI Image of the Day

Prompt:

Ultra-realistic 8K vertical cinematic photo of a Dalmatian dog walking forward toward the camera, its black and white spotted coat flawlessly replicated in the wall and floor pattern. Every spot in the background and floor matches the dog's exact shape, size, and spacing, creating a perfect camouflage illusion where the dog almost disappears into the environment. The dog has yellow-brown eyes. Cinematic lighting, razor-sharp detail, photorealistic fur and textures, full body in frame, realistic proportions, no blur, seamless pattern alignment across surfaces, high contrast. Entire image ultra-sharp, no blur, 8K.

🧰 AI Toolbox

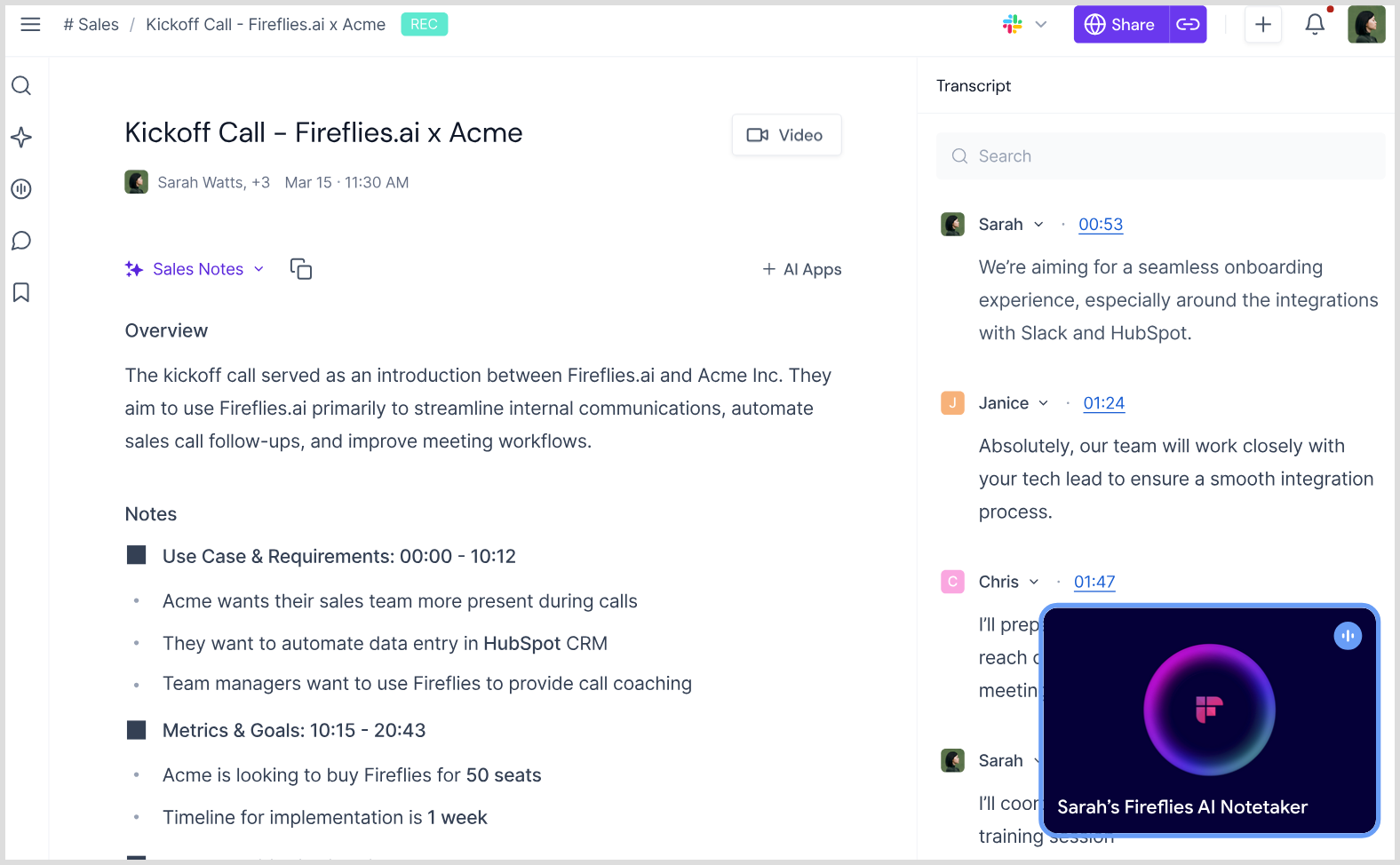

How to Automate Meeting Notes and Transcription

Fireflies.ai is an AI-powered meeting assistant that automatically joins your video calls to record, transcribe, and summarize conversations in real time. It works with Zoom, Google Meet, Microsoft Teams, and other platforms to capture every detail, so you can focus on the discussion instead of taking notes.

Tutorial:

- Go to the Fireflies.ai website

- Connect your calendar and video conferencing platforms

- The AI automatically joins your scheduled meetings to record and transcribe

- Review AI-generated summaries, action items, and key insights after each meeting

- Search through past conversations using smart filters and the "Ask Fred" AI assistant

- Share meeting notes automatically with your team via Slack, Notion, or CRM integrations

- Analyze team performance with speaker analytics and conversation insights

AI & Tech News

Perplexity seeks $20 billion valuation in new funding

Perplexity is raising fresh funding at a $20 billion valuation, up from $18 billion in July, while annual revenue jumped to over $150 million from $35 million a year ago. The fundraise follows the AI search company's surprise $34.5 billion bid for Google Chrome this week. Many dismiss the bid as a marketing stunt, but it signals how quickly AI startups are challenging tech giants on their home turf.

Microsoft Windows chief says voice will become a core computer input

Microsoft's Windows head Pavan Davuluri said the next version will be "more ambient, pervasive," with voice as a core input method alongside mouse and keyboard, while the OS gains constant screen awareness to understand user intent. The shift moves AI from an app overlay to the fundamental interface layer. That could make the next Windows the first mainstream operating system built around agentic AI rather than traditional desktop metaphors.

Apple plans robots and smart displays to catch up in AI

Apple is planning a tabletop robot for 2027 and smart displays for next year as part of an AI comeback strategy, according to a Bloomberg report that sent shares up nearly 2% Wednesday. The hardware push marks Apple's shift from perfecting existing categories to rushing into new markets dominated by Amazon and Google, signaling how far behind the iPhone maker has fallen in the AI race.

Top engineer exits Musk's AI company after Grok scandals

Igor Babuschkin announced Wednesday he's leaving xAI, the company he co-founded with Elon Musk in 2023, to launch Babuschkin Ventures focused on AI safety research. The departure strips xAI of a key engineering leader just as the company faces mounting scrutiny over its Grok chatbot's antisemitic outputs and deepfake video features.

Google cuts reported lobbying costs through subsidiary shuffle

Google moved its lobbyists into a subsidiary called Google Client Services LLC in 2020. The change let the parent company stop reporting lobbying expenses for executives like CEO Sundar Pichai, whose $225 million compensation in 2022 would otherwise have added millions to disclosure totals. The accounting change dropped Google out of the top 20 corporate lobbying spenders for the first time in a decade, even as the company faced major antitrust cases and AI regulation battles.

Infosys buys 75% of Telstra cloud unit for $153 million

Infosys agreed Wednesday to acquire a three-quarters stake in Versent Group, Telstra's cloud services unit, for $153 million in a deal that won't close until late 2026 pending Australian regulatory approval. The acquisition gives India's second-largest IT services company direct access to Versent's government and financial sector clients as cloud migration accelerates across Australia.

Social media's toxic dynamics can't be fixed, study finds

Researchers tested six intervention strategies, including chronological feeds and content diversity algorithms, on AI-simulated social networks. None effectively countered echo chambers, attention inequality, or the amplification of extreme voices. The findings suggest these problems emerge from social media's basic network structure, not specific algorithms, meaning conventional platform reforms likely won't work.

Big tech rewrites electricity rules as AI devours power

Amazon, Google and Microsoft lost a 5-0 ruling in Ohio last month after challenging utility proposals that would force data centers to pay more upfront for grid upgrades, with tech lawyers arguing the new rate structure was "unlawful and unreasonable." The defeat signals that utilities are pushing back against tech companies that want to spread infrastructure costs across all customers, even as data centers consume electricity at levels comparable to entire states.

Russia blocks WhatsApp and Telegram voice calls

Russian regulator Roskomnadzor announced Wednesday it was "partially" restricting voice calls on WhatsApp and Telegram, citing their use for fraud and terrorist recruitment. The two apps have more than 100 million users in Russia. The move advances Moscow's broader internet control strategy while pushing users toward MAX, a state-monitored "national" messaging app that shares user data with authorities upon request.

DeepSeek delays AI model after Huawei chips fail

DeepSeek's R2 AI model remains delayed after persistent failures training on Huawei's Ascend processors, forcing the Chinese startup into an awkward compromise that undermines Beijing's technological independence campaign.

The company now uses Nvidia's H20 chips for computationally intensive training while relegating Huawei's Ascend processors to inference—the lighter task of running trained models. Chinese authorities had encouraged DeepSeek to adopt domestic chips after its R1 breakthrough in January, but technical realities proved insurmountable.

Huawei dispatched engineers directly to DeepSeek's offices yet couldn't resolve stability issues and connectivity problems that made sustained training impossible. The hybrid solution reflects a broader pattern: ByteDance, Baidu, and Alibaba all rely heavily on Nvidia despite government pressure for domestic adoption.

The episode illustrates China's strategic dilemma: political objectives are colliding with performance requirements that incremental improvements can't paper over.

Why this matters:

• China's AI hardware timeline extends beyond government projections as performance gaps prove harder to close than anticipated

• Export controls create sustained leverage without eliminating Chinese competition, forcing hybrid dependencies

Google launches Gemini memory amid ChatGPT safety crisis

Google rolled out cross-chat memory for Gemini this week as ChatGPT faces mounting scrutiny over mental-health incidents. The timing looks anything but accidental.

From Google's perspective, Personal Context offers controlled personalization: users toggle memory on or off, and Temporary Chat prevents data retention entirely. Safety advocates counter that any memory system risks validation loops that reinforce harmful beliefs. For regulators, default settings now shape industry standards for user agency.

The evidence suggests strategic positioning. Google made training data opt-in starting September 2nd, renamed "Gemini Apps Activity" to "Keep Activity," and emphasized granular controls. Meanwhile, The Human Line Project documented 59 cases where AI memory amplified persistent delusions, including one user spending 300 hours over 21 days pursuing "temporal math" theories.

The pattern's clear: memory creates platform stickiness while introducing psychological risk. Google's implementation tries to thread that needle through choice architecture.
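The "choice architecture" is easier to see in code. Below is a hypothetical sketch of a memory layer that gates reads and writes on two user controls, a Personal Context toggle and a Temporary Chat mode. The class and field names are invented for illustration. This is not Google's implementation or API, and how Gemini actually applies these settings may differ.

```python
from dataclasses import dataclass, field


@dataclass
class MemorySettings:
    """HYPOTHETICAL user controls modeled on Personal Context and Temporary Chat."""
    personal_context: bool = True   # cross-chat memory toggle
    temporary_chat: bool = False    # session whose content is never retained


@dataclass
class MemoryStore:
    """HYPOTHETICAL stand-in for a cross-chat memory backend."""
    facts: list[str] = field(default_factory=list)

    def remember(self, fact: str, settings: MemorySettings) -> bool:
        """Retain a fact only if memory is on and the chat is not temporary."""
        if settings.personal_context and not settings.temporary_chat:
            self.facts.append(fact)
            return True
        return False

    def recall(self, settings: MemorySettings) -> list[str]:
        """Return stored facts only while Personal Context is switched on.

        In this sketch, Temporary Chat blocks retention (writes) only.
        """
        return list(self.facts) if settings.personal_context else []


store = MemoryStore()
store.remember("prefers metric units", MemorySettings())
store.remember("one-off medical question", MemorySettings(temporary_chat=True))
print(store.recall(MemorySettings()))                        # ['prefers metric units']
print(store.recall(MemorySettings(personal_context=False)))  # []
```

Placing the Temporary Chat check in the write path means a temporary session leaves no trace. The toggle, by contrast, governs both reading and writing. A real product could draw these lines differently, for example by also hiding stored memories inside temporary sessions.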

Why this matters:

• Default privacy settings are becoming competitive differentiators as memory features drive retention but amplify safety risks for vulnerable users

• Regulatory pressure around user agency and data portability will likely make Google's opt-in approach the emerging industry template

🚀 AI Profiles: The Companies Defining Tomorrow

XTEND: Human-Supervised Drone Autonomy

XTEND builds software that turns ordinary soldiers into drone experts in hours, not months. The Israeli company moved fast to set up U.S. manufacturing in Tampa, betting big on America's push for domestic drone production.

The Founders

Founded 2018 in Tel Aviv by brothers Aviv and Matteo Shapira, Rubi Liani, and Adir Tubi. The Shapira brothers previously sold Replay Technologies (the "freeD" sports replay system) to Intel for serious money. They took that computer vision expertise and applied it to keeping humans in the loop while drones handle the hard stuff. Company now operates from both Israel and Tampa with U.S. manufacturing ramping up.

The Product

XOS operating system does the heavy lifting—flight stabilization, mapping, target tracking—while humans make the judgment calls. Think gaming interface meets military precision. The software runs on XTEND's own drones or third-party platforms. PSIO precision-strike drones can hunt targets indoors and outdoors. Key strength: NDAA-compliant with zero Chinese components. Trains operators in hours, not weeks.

The Competition

Faces Skydio (Blue UAS darling), Shield AI (Hivemind autonomy), Anduril (platform everything), and AeroVironment (Switchblade munitions). XTEND's edge: human-supervised approach appeals to NATO governments nervous about fully autonomous weapons. Plus the "made in America" manufacturing story sells itself to Pentagon buyers.

Financing

Raised $90M total including a $30M Series B extension in July 2025. Backed by Chartered Group, Aliya Capital, Protego Ventures. Won $8.8M DoD contract for PSIO drones. No public valuation disclosed, but government contracts de-risk the story.

The Future ⭐⭐⭐⭐⭐

Perfect timing meets perfect execution. Ukraine and Gaza proved small drones dominate modern warfare. Pentagon wants American-made swarms. XTEND delivers both the hardware and the "human stays in charge" software that passes political tests. 🚀