💡 TL;DR - The 30-Second Version

🎭 Google revealed that "nano-banana," the anonymous model topping image editing benchmarks, is actually Gemini 2.5 Flash Image now shipping across all platforms.

📊 ChatGPT logs over 700 million weekly users while Gemini reached 450 million monthly users, creating a usage gap Google needs to close.

💰 Developer pricing hits roughly 4 cents per image at $30 per million output tokens, targeting enterprise batch processing over social media posts.

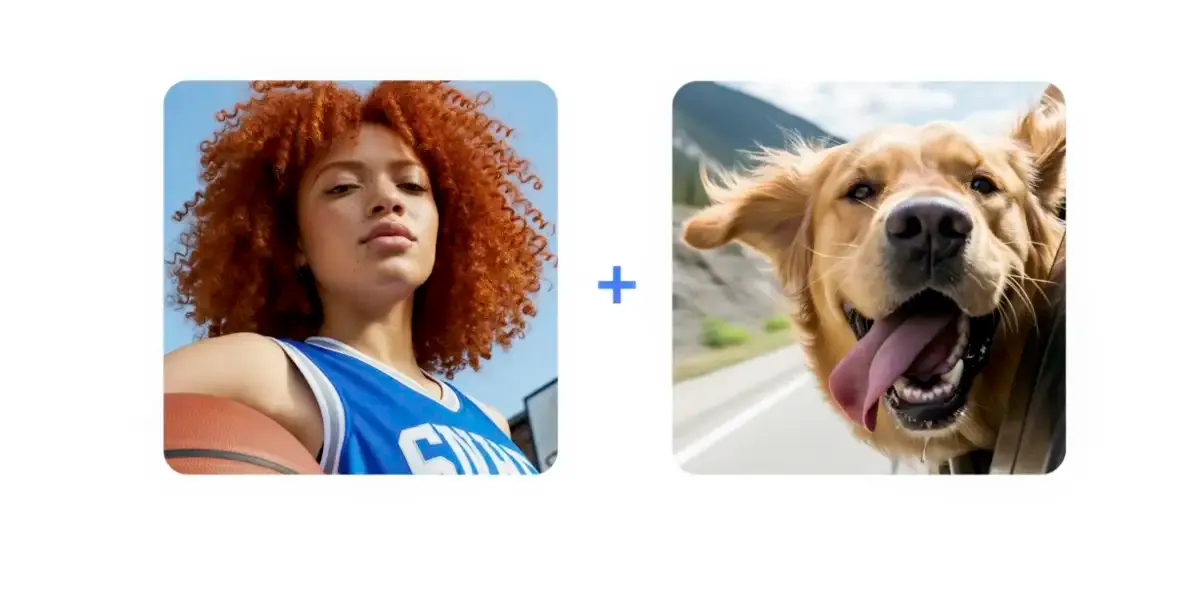

🎨 The breakthrough preserves character likeness through multi-step edits—faces stay consistent when changing outfits, backgrounds, or hairstyles across iterations.

🛡️ All outputs include a visible watermark and an invisible SynthID digital watermark to mark AI-generated content, positioning safety as a competitive advantage.

🚀 Google bets that superior editing consistency plus enterprise distribution can challenge OpenAI's image generation dominance through creative workflow capture.

Breakthrough in likeness consistency, but the real contest is user growth

Google has taken the wrapper off its anonymous, top-ranked “nano-banana” model and shipped it as Gemini 2.5 Flash Image across the Gemini app, with access for developers via the Gemini API, Google AI Studio, and Vertex AI. The pitch is simple: catch OpenAI’s momentum by making image edits that keep people, pets, and products looking like themselves while users make complex, multi-step changes. The timing is no accident.

What’s actually new

The upgrade centers on likeness consistency. Most consumer tools can swap a shirt color or restyle a room, but faces warp, hands multiply, and backgrounds drift. Google’s model is built to preserve the subject through multi-turn edits—outfits, haircuts, props, and environments—without turning the person into someone else. That claim is the headline.

It also blends multiple references in one go. Users can merge a living-room photo, a specific sofa, and a color palette into a single, coherent render. The same fusion works for product and lifestyle shots, placing the identical object into varied scenes while keeping geometry and branding intact.

Evidence and access

The model is no longer a mystery on community leaderboards. The “nano-banana” that topped crowdsourced image-editing tests is now explicitly Google’s native editor inside Gemini 2.5 Flash. Google says the system is state-of-the-art on external benchmarks, and early public demos focused on edits that historically break likeness—clothes, hair, backgrounds, and composite scenes.

Availability is broad: the Gemini app on web and mobile gets the editor, while developers can call it through the Gemini API, Google AI Studio, and Vertex AI. Google has also seeded distribution through partner platforms that serve large developer communities. Pricing for the API follows Gemini 2.5 Flash economics, with per-image costs that pencil out to roughly four cents. At that price, businesses can run the tool in bulk rather than reserve it for one-off social posts.

Competitive context

OpenAI showed how powerful native image features can be for growth. When ChatGPT shipped its latest image stack in the spring, downloads and usage spiked. Image generation, it turned out, is a mass-market onramp: fast feedback, fun shareables, and obvious utility for small creative tasks. That surge widened a user gap that Google now needs to close.

Google’s counterpunch isn’t louder generation—it’s steadier editing. If Gemini can deliver consistent faces and objects under heavy, iterative changes, it attacks a known weak spot in rival tools. Editing is where creative work actually happens. It’s also where enterprise use cases live: catalogs, real-estate visuals, ad variants, training materials, and internal documentation. Fewer retakes mean lower costs.

Product substance, not sizzle

Three capabilities stand out beyond the marketing:

- Targeted, local edits. Users can alter a pose, remove an object, blur a background, or clean a stain without collateral damage elsewhere in the image. That’s table stakes for professional workflows.

- Multi-image fusion. A consistent subject can be dropped into different scenes or composited with other photos for photorealistic results. This supports personalization at scale.

- Template adherence. The system can follow layout or design templates, which makes it useful for batch-producing badges, listing cards, or product mockups that still look brand-correct.

Each reduces manual cleanup. That’s the real win.

Safety, signals, and trade-offs

Google is taking a stricter line on provenance. Images made or edited in Gemini carry a visible label and an invisible SynthID watermark embedded in the image itself, and the service blocks categories such as non-consensual intimate imagery. That may dull some viral appeal among users who want unmarked pictures, but it’s meant to reassure brands and regulators that provenance isn’t optional.

The company is also signaling lessons learned from last year’s misfires around historically inaccurate people imagery. The promise now is “creative control” with boundaries. If delivered, that’s a competitive posture more than a compliance checkbox: reliable editing with on-by-default provenance.

Economics and distribution

For developers, the economics target scale, not novelty. A list price of $30 per million output tokens, with each image counted as 1,290 output tokens, works out to roughly four cents per image. That invites real throughput: e-commerce backfills, ad-set variants, and localization runs. And because the tool ships in the Gemini app, the Gemini API, Google AI Studio, Vertex AI, and partner developer platforms, the friction to trial is low. Try it, wire it, then automate it.

On consumer surfaces, the story is about retention. Multi-turn edits in chat keep people in the app longer and produce more “keepable” images. If Google’s editor truly avoids the uncanny drift that breaks shareability, it will convert more experiments into saved outputs—and more saved outputs into habit. That’s the loop that matters.

The open question

Benchmarks and slick demos aside, the question is whether this closes the user gap with ChatGPT. Editing quality can win working users. But habits form around ecosystems—search, documents, messaging, and the social graph. Google is betting that steadier edits, plus distribution across its own surfaces and cloud channels, can bend that curve. It’s a plausible plan. Execution will decide it.

Why this matters

- Editing, not just generation, is the battleground: The harder problem—keeping a subject consistent through many changes—is where real creative and commercial value lives.

- Provenance becomes product: Default watermarking and metadata are turning into competitive features as regulators, brands, and platforms push for trustworthy synthetic media.

❓ Frequently Asked Questions

Q: What was the "nano-banana" mystery and why did Google keep it anonymous?

A: "Nano-banana" appeared anonymously on LMArena, a crowdsourced AI evaluation platform, where it topped image editing benchmarks for weeks. Google likely tested it anonymously to gather unbiased user feedback and create buzz before the official reveal. The strategy worked—users were already "going bananas" over it.

Q: How much does it actually cost to use this for business applications?

A: The API costs $30 per million output tokens, with each image counting as 1,290 tokens. That works out to roughly 3.9 cents per image. For context, generating 1,000 product variants for an e-commerce catalog would cost about $38.70. The pricing is designed for bulk business use, not casual social media posts.
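The arithmetic behind those figures can be checked with a short sketch. It assumes the two numbers quoted in this article ($30 per million output tokens and 1,290 tokens per image) and nothing else; the function names are illustrative, not part of any Google SDK, and list prices may change.

```python
from decimal import Decimal

# Figures quoted in the article; treat them as assumptions that may change.
PRICE_PER_MILLION_OUTPUT_TOKENS_USD = Decimal("30")
TOKENS_PER_IMAGE = 1290


def cost_per_image_usd() -> Decimal:
    """Price of one generated or edited image, in US dollars."""
    return PRICE_PER_MILLION_OUTPUT_TOKENS_USD * TOKENS_PER_IMAGE / Decimal(1_000_000)


def batch_cost_usd(image_count: int) -> Decimal:
    """Price of a batch of images, in US dollars."""
    return cost_per_image_usd() * image_count


def images_within_budget(budget_usd: int) -> int:
    """Largest whole number of images a whole-dollar budget covers."""
    return int(Decimal(budget_usd) // cost_per_image_usd())


if __name__ == "__main__":
    print(f"Per image: {cost_per_image_usd() * 100:.2f} cents")   # 3.87 cents
    print(f"1,000 images: ${batch_cost_usd(1000):.2f}")           # $38.70
    print(f"Images for $100: {images_within_budget(100)}")        # 2583
```

`Decimal` is used instead of floats so the per-image price stays exact when multiplied across large batches.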

Q: Why is keeping faces consistent during edits so technically difficult?

A: AI models typically treat each edit as a new generation task, losing reference to the original subject. When you ask most tools to change a shirt color, they regenerate the entire image, often distorting faces, multiplying hands, or altering backgrounds. Google's model maintains a consistent "identity anchor" across multiple modifications.

Q: Why does Google add watermarks when competitors like Grok don't require them?

A: Google applies both a visible watermark and an invisible SynthID digital watermark to address deepfake concerns and regulatory scrutiny. This builds trust with enterprise customers and policymakers, even if it limits viral social media spread. The approach favors long-term platform risk management over short-term user acquisition.

Q: How does this compare to established tools like Midjourney or DALL-E?

A: While Midjourney excels at artistic generation and DALL-E handles general prompts well, Google's focus is iterative editing consistency. The key difference is multi-turn conversation: you can progressively modify the same subject across dozens of edits while maintaining likeness. Most competitors struggle after two or three modifications.