💡 TL;DR - The 30-Second Version

👉 OpenAI launches GPT-5 today at 10 AM Pacific in four versions (standard, mini, nano, chat), but early testers say improvements over GPT-4 are modest—not revolutionary.

📊 Context window doubles to 256,000 tokens (400 pages of text), while the o3-pro reasoning model charges $80 per complex question and takes minutes to answer.

🏭 OpenAI's first GPT-5 attempt (codenamed Orion) failed so badly it became GPT-4.5, which ran slower and cost more before disappearing quickly.

⚔️ Competition forced OpenAI's hand: Google's Gemini matches GPT-4, Anthropic's Claude beats OpenAI at coding, and Meta gives away comparable models for free.

🌍 Microsoft's $13.5 billion investment and OpenAI's $157 billion valuation drive the rushed release despite June tests showing no models worthy of the GPT-5 name.

🚀 This marks the end of AI's hockey-stick growth—the transformer architecture has hit its limits, training data is exhausted, and future gains will cost more for less improvement.

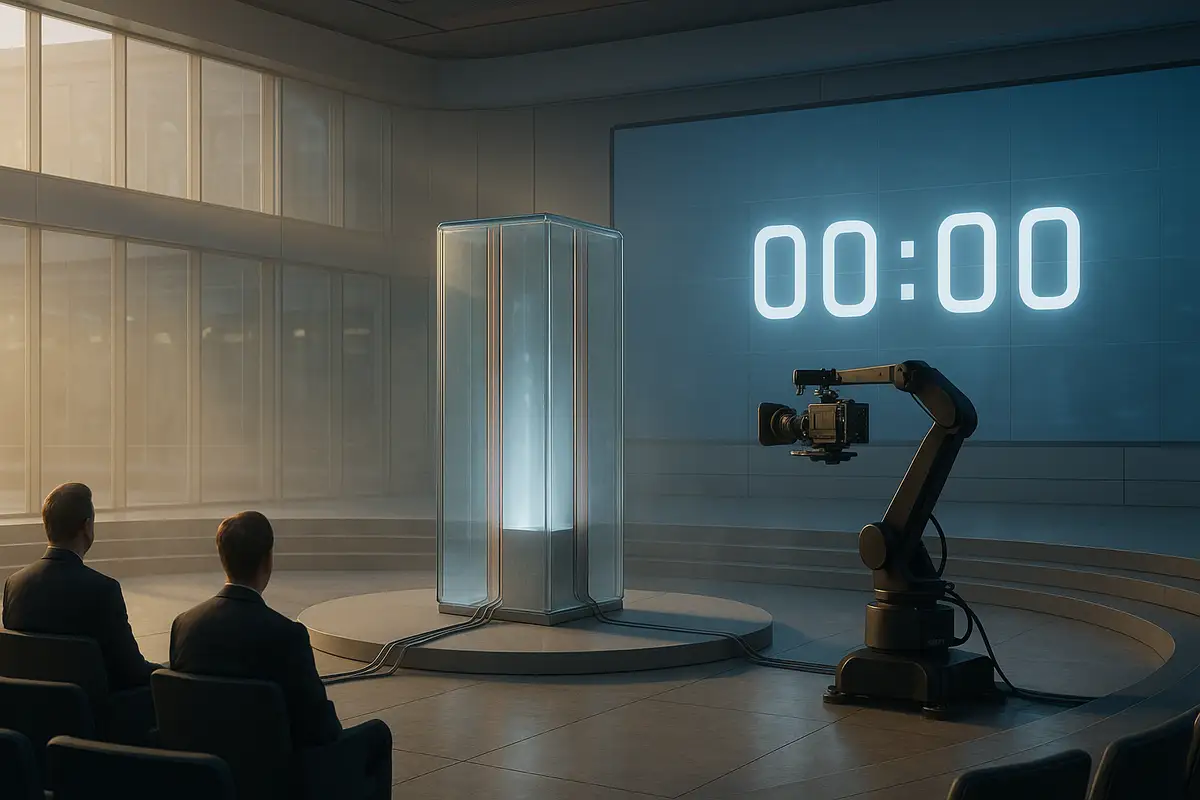

OpenAI launches GPT-5 today at 10 AM Pacific. The wait's over after months of delays and botched attempts. But don't expect fireworks. This isn't the massive jump we saw from GPT-3 to GPT-4. Not even close.

The company posted "LIVE5TREAM" on X yesterday. Subtle as a brick. Sam Altman's been sharing screenshots with "ChatGPT 5" visible in the corner. OpenAI's head of applied research said he's "excited to see how the public receives GPT-5." Corporate speak for nervous.

Here's what early testers are saying: GPT-5 is better. Not mind-blowing. Not revolutionary. Just better. Better at coding. Sharper at math. Handles complex instructions without getting confused. One source inside OpenAI put it bluntly—as recently as June, none of their experimental models felt worthy of the GPT-5 name. They're shipping it anyway.

The Version Parade

GPT-5 arrives in flavors. Four of them, according to a quickly deleted GitHub blog post that spilled the beans early.

First, there's standard GPT-5. This one handles logic and multi-step tasks. Think of it as the workhorse. Then comes GPT-5 mini for when you need quick answers without burning through computing power. GPT-5 nano runs fast enough for real-time applications. Finally, GPT-5 chat specializes in conversations, especially for business users who need context-aware responses.

GitHub's accidental reveal also mentioned "enhanced agentic capabilities." Translation: the model can handle complex coding tasks with minimal hand-holding. Whether that actually works remains to be seen.

The tiered access structure tells its own story. Free users get basic GPT-5. Nothing fancy. Plus subscribers at $20 monthly unlock "advanced reasoning" with higher limits. Pro subscribers—the ones paying serious money—get GPT-5 Pro with what OpenAI calls "research-level" performance. Teams get everything.

This isn't generosity. It's market positioning. OpenAI needs to justify that $157 billion valuation somehow.

The Technical Reality Check

Let's talk specs. The context window doubles—128,000 tokens to 256,000. That's 400 pages of text it can track at once. No more amnesia halfway through your conversation.
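The "400 pages" figure comes from converting tokens to words to pages. OpenAI doesn't publish a conversion, so the sketch below assumes two common rules of thumb: about 0.75 English words per token and about 500 words per printed page. Change either assumption and the page count moves with it.

```python
# Rough conversion from context-window size (tokens) to words and pages.
# ASSUMPTIONS: ~0.75 English words per token and ~500 words per page are
# common rules of thumb, not figures published by OpenAI.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def context_to_pages(tokens: int) -> tuple[int, int]:
    """Return (approximate words, approximate pages) for a token budget."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


for label, tokens in [("GPT-4 (128K)", 128_000), ("GPT-5 (256K)", 256_000)]:
    words, pages = context_to_pages(tokens)
    print(f"{label}: ~{words:,} words, ~{pages} pages")
```

Under these assumptions 256,000 tokens works out to about 192,000 words and 384 pages, which is where "roughly 200,000 words" and "about 400 pages" come from. Code, tables, and non-English text usually pack fewer words into each token, so real documents may fit less.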

Everything happens in one model now. Text, images, audio, possibly video. No switching between different versions for different tasks. They call it a unified multimodal approach. Sounds impressive until you hear about the computing costs.

The o3-pro model powering GPT-5's advanced reasoning charges $80 per complex question. One tester greeted it with "Hi, I'm Sam Altman." The model thought about this greeting for several minutes, burning through server time like a philosophy student discovering espresso. That's not intelligence. That's overthinking as a service.

OpenAI uses something called mixture-of-experts architecture. Different parts of the model activate for different tasks. More efficient in theory. We'll see if it works when millions of users hit the servers simultaneously.
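Mixture-of-experts is a general technique, and OpenAI hasn't published how GPT-5 uses it. The toy below is only an illustration of the basic idea, top-k gating, with made-up sizes, random weights, and simple linear "experts." A small router scores every expert for each input, and only the k highest-scoring experts actually run.

```python
import math
import random

# Toy mixture-of-experts layer with top-k gating.
# ILLUSTRATIVE ONLY: sizes, weights, and the linear "experts" are made up;
# this is not GPT-5's architecture (OpenAI has not published its internals).
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2


def rand_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs):
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


router = rand_matrix(NUM_EXPERTS, DIM)  # produces one score per expert
experts = [rand_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]


def moe_forward(x):
    """Route x to the TOP_K best-scoring experts and mix their outputs."""
    scores = matvec(router, x)
    chosen = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    weights = softmax([scores[i] for i in chosen])  # renormalize over chosen only
    out = [0.0] * DIM
    for w, i in zip(weights, chosen):
        y = matvec(experts[i], x)  # only the chosen experts do any work
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


output, used = moe_forward([1.0, 0.5, -0.3, 2.0])
print(f"experts used: {used} of {NUM_EXPERTS}")
```

Only 2 of the 8 expert matrices get multiplied for each input. That's the "more efficient in theory" part: compute per token scales with k, not with the total number of experts. The catch is that every expert still has to sit in memory, ready to be picked.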

Why Launch Now?

Simple. Competition.

Google's Gemini keeps improving. Last month's update matched GPT-4 on most benchmarks. Anthropic's Claude currently beats OpenAI at coding, the exact area where OpenAI built its reputation. Meta gives away free models that rival ones costing thousands to access a year ago.

Then there's Microsoft. They dropped $13.5 billion into OpenAI. They want returns. Not promises. Not research papers. Products that generate revenue.

Behind closed doors, investors push OpenAI to convert from nonprofit to for-profit by December. The clock's ticking. Some reports suggest OpenAI might declare artificial general intelligence just to escape its Microsoft contract. Sounds desperate? That's because it is.

The timing reveals everything. OpenAI owned 2023 when GPT-4 launched. Then 2024 arrived and suddenly everyone had something comparable. Now in 2025, they need wins. Even small ones.

The Orion Disaster

Want to know why GPT-5 took so long? Meet Orion, the model that wasn't.

OpenAI built Orion to directly succeed GPT-4. Internal codename. Big expectations. When testing showed it barely improved on GPT-4, they panicked. Rather than admit failure, they released it as GPT-4.5.

Remember GPT-4.5? Neither does anyone else. It ran slower than GPT-4. Cost more. Delivered worse results on several benchmarks. Users complained. OpenAI killed it quietly.

The Orion failure exposed a harsh truth. The techniques that got OpenAI from GPT-3 to GPT-4 stopped working. Adding more data didn't help. Making models bigger backfired. Training runs that cost millions and took months would fail because one server glitched on day 47.

Multiple sources confirm OpenAI spent massive resources training models that flopped. The June revelation is particularly damning. After burning through millions in compute costs, nothing they built deserved the GPT-5 label.

Boris Power, OpenAI's head of applied research, posted Monday that he's excited to see public reception. The subtext is clear. They're launching out of necessity, not confidence.

What You Actually Get

Strip away the marketing fluff. Here's GPT-5 in practical terms.

The doubled context window means real conversations. You can work on a document for hours without the model forgetting your instructions. Upload a 300-page PDF and actually discuss page 250 while referencing page 10.

Multimodal integration works more smoothly. No more uploading images to one model and asking questions to another. Drop in a photo, ask about it, get text analysis, and generate a related image, all in one thread.

For coders, GPT-5 supposedly reclaims ground lost to Claude. Early testing shows it handles complex debugging better. Can trace through multiple files without losing track. Whether it matches Claude's current capabilities remains questionable.

The "research-level" performance in GPT-5 Pro sounds impressive until you consider the cost. If a single question, even a greeting, can run $80, imagine the pricing for actual research tasks. This isn't democratizing AI. It's stratifying it.

Speed varies by version. GPT-5 nano responds almost instantly but with less sophistication. Standard GPT-5 takes longer but thinks deeper. GPT-5 Pro can spend minutes on a single response. Choose your own adventure: fast, good, or expensive.

The Plateau Nobody Discusses

Here's the uncomfortable truth OpenAI won't say directly: the transformer architecture has hit its ceiling.

Bill Gates called it in fall 2023. Gary Marcus has been warning about this for years. Even Ilya Sutskever, OpenAI's former chief scientist who helped build GPT-3 and GPT-4, now admits pure scaling won't get us to superintelligence.

What's left? Tricks. Reinforcement learning. Test-time compute, which lets the model think longer about hard problems. Universal verifiers that automatically rate responses. These help. OpenAI's experimental model that reached gold-medal-level performance at the International Mathematical Olympiad used these techniques. But they're Band-Aids on a fundamental limitation.
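One common form of test-time compute is best-of-n sampling: generate several candidate answers and let a verifier pick the best one. The sketch below is a toy. It uses a hypothetical `noisy_model` that gets a multiplication right only 30% of the time and an exact checker as the verifier. Real systems use learned verifiers, and nothing here describes OpenAI's actual method. It shows the trade-off the article describes: buying accuracy with more compute at answer time.

```python
import random

# Best-of-n sampling with a verifier: a toy form of test-time compute.
# HYPOTHETICAL: noisy_model() stands in for an LLM that is right only some
# of the time; verifier() is an exact checker, unlike learned verifiers.


def noisy_model(a: int, b: int, rng: random.Random) -> int:
    """Return a*b correctly 30% of the time, otherwise a slightly-off guess."""
    if rng.random() < 0.3:
        return a * b
    return a * b + rng.choice([-2, -1, 1, 2])


def verifier(a: int, b: int, answer: int) -> float:
    """Score a candidate: 1.0 if it checks out, 0.0 otherwise."""
    return 1.0 if answer == a * b else 0.0


def best_of_n(a: int, b: int, n: int, rng: random.Random) -> int:
    """Sample n candidates (n times the compute) and keep the top-scored one."""
    candidates = [noisy_model(a, b, rng) for _ in range(n)]
    return max(candidates, key=lambda c: verifier(a, b, c))


def accuracy(n: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials where best-of-n returns the correct product."""
    rng = random.Random(seed)
    hits = sum(best_of_n(17, 23, n, rng) == 17 * 23 for _ in range(trials))
    return hits / trials


for n in (1, 4, 16):
    print(f"n={n:>2}: accuracy ~{accuracy(n):.2f}")
```

With a 30% hit rate per sample, the chance that at least one of n samples is right is 1 - 0.7^n. That's about 76% at n = 4, for four times the compute. Each extra sample buys less than the one before it, which mirrors the "more cost for less improvement" argument.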

The industry calls it the "data wall." They've scraped the entire internet. Twice. There's no more high-quality text to train on. Making synthetic data doesn't help much. Humans aren't writing new content fast enough to feed these hungry models.

Training costs explode while improvements shrink. GPT-4 cost an estimated $100 million to train. GPT-5? Nobody's saying, but insiders suggest it's multiples higher. For what? Incremental improvements that users might not even notice.

Altman's Mixed Messages

Sam Altman's messaging around GPT-5 has been bizarre, even contradictory.

One day he's comparing development to the Manhattan Project. Scientists racing against time. World-changing implications. He tells a podcaster that GPT-5 made him feel "useless." Says he's "scared" about releasing it.

Next day? He's posting screenshots of GPT-5 recommending TV shows. The model suggests "Pantheon," an animated series about uploaded consciousness. It accurately pulls Rotten Tomatoes scores. Calls the show "cerebral, emotional, and philosophically intense."

Cool party trick. Not exactly splitting the atom.

This disconnect defines the GPT-5 story. Altman knows it's an incremental update. The investors know. The engineers know. But they need the world to believe otherwise. Hence the fear. The historical comparisons. The carefully orchestrated leaks.

The one-hour livestream scheduled for today will probably follow the script. Big promises. Impressive demos. Careful dodging around the core truth.

The Efficiency Era Begins

GPT-5 marks a turning point. Not because it's revolutionary. Because it's not.

The days of 10x improvements between model generations are over. What's left is optimization. Making models run cheaper. Faster. More reliably. Less hallucination. Better memory. Smoother interfaces.

That's why OpenAI launches four versions instead of one breakthrough model. Different tools for different jobs. It's an admission through product design: we can't build one model that does everything perfectly anymore.

Competition shifts from raw capability to practical implementation. Who can deliver 95% of GPT-5's performance at 10% of the cost? Who can run models locally? Who can specialize for specific industries?

China's open-source models already match GPT-4 on many tasks. They're free. They run on your hardware. No API costs. No rate limits. No terms of service.

What Happens Next

Today's livestream kicks off soon. We'll see polished demos. Complex reasoning examples. Multimodal magic tricks. GPT-5 will write code, analyze documents, create presentations. All real capabilities. All genuinely useful. Just not revolutionary.

Plus subscribers will get access within days. Free users wait weeks or months—OpenAI needs those subscription conversions. Enterprise customers get white-glove onboarding and custom pricing that would make your eyes water.

Competitors will benchmark against GPT-5 within hours. If Claude still beats it at coding, expect Anthropic to tweet about it. If Gemini matches its reasoning, Google will make sure everyone knows.

The real test comes next week. When developers get API access. When users try real work instead of demos. When the $80 reasoning charges hit credit cards. That's when we'll know whether GPT-5 delivers real value or just modest improvements.

Why this matters:

• The AI industry's exponential growth phase just ended. Future improvements will cost more and deliver less. Winners will be companies that optimize and specialize, not those chasing artificial general intelligence.

• OpenAI's release of multiple GPT-5 versions admits what critics have been saying—one-size-fits-all AI is dead. The future is narrow models that do specific things well, not broad models that do everything adequately.

❓ Frequently Asked Questions

Q: When will free users get GPT-5?

A: Paying subscribers ($20/month) should see it within days. Free users? Expect to wait months. GPT-4 launched for Plus members in March 2023. Free users got it 14 months later in May 2024.

Q: Why did GPT-4.5 fail so badly?

A: OpenAI tried to build GPT-5 and called it Orion. Didn't work. The thing ran slower than GPT-4, cost more money, and did worse on tests. They shipped it as GPT-4.5 anyway. Nobody used it. They killed it fast.

Q: What's this 256,000 token context window about?

A: GPT-5 can track roughly 200,000 words at once, about 400 pages of text. GPT-4 managed about 100,000 words. So you can dump in massive documents, talk for hours, and it won't lose track. Big deal for serious work.

Q: How much does it cost OpenAI to train these models?

A: OpenAI burns through about $8 billion annually on research and training. Individual training runs cost millions and can take months. The company expects $12.7 billion in revenue this year but still loses money on each model development cycle.

Q: What's the "data wall" everyone keeps mentioning?

A: AI companies have basically scraped the entire internet for training data. There's no more high-quality text left to feed these models. Making fake data doesn't help much, and people aren't writing new stuff fast enough for what AI training needs.

Q: What's test-time compute and why should I care?

A: Instead of making models bigger, OpenAI now lets them think longer on hard problems. The o3-pro model spends minutes and $80 worth of computing power per complex question. It works, but it also shows that brute-force scaling has hit its limits.

Q: Will GPT-5 be available through OpenAI's API for developers?

A: OpenAI hasn't confirmed API availability yet. Based on past releases, expect API access for paying developers within weeks of launch. GPT-4's API opened to all developers four months after its initial release.

Q: Why does Ilya Sutskever's opinion matter?

A: This guy co-founded OpenAI. Served as its chief scientist until May 2024. Helped build GPT-3 and GPT-4. So when he comes out and says throwing more compute at models won't get us to superintelligence? That's huge. He spent years saying the opposite.