💡 TL;DR - The 30-Second Version

👉 OpenAI launches GPT-5 on Thursday at 10 AM PT, but early testers say improvements over GPT-4 are modest—nothing like the huge leap from GPT-3.

📊 The first GPT-5 attempt (codenamed Orion) failed so badly it became GPT-4.5, which ran slower and cost more before quickly disappearing.

💰 The o3-pro model powering GPT-5's reasoning charges $80 and takes minutes to answer simple questions like "Hi, I'm Sam Altman."

🏭 Context window doubles to 256,000 tokens and combines text, images, and audio in one model—coming in three versions: full, mini, and nano.

⚔️ Competition forced OpenAI's hand: Google's Gemini keeps improving, Anthropic's Claude beats them at coding, and Meta gives away comparable models for free.

🚀 This marks the end of AI's explosive growth phase—the transformer architecture has hit its limits and the industry has run out of training data.

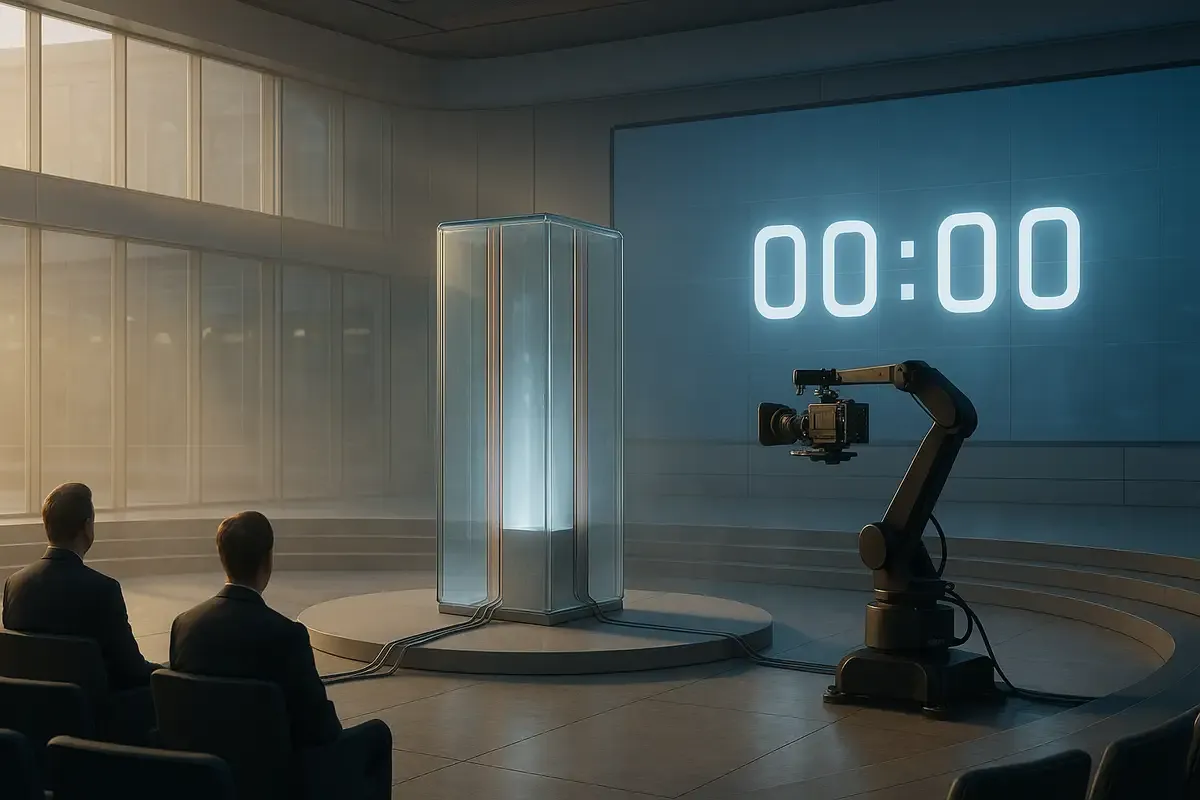

OpenAI will unveil GPT-5 on Thursday at 10 AM Pacific. The company teased it with a post about a "LIVE5TREAM"—subtle as a brick through a window. After months of delays and false starts, the flagship model finally arrives. But here's the thing: the people who've tested it aren't exactly blown away.

Two early testers told Reuters the improvements are real but modest. Better at coding. Sharper at math. But nothing like the jaw-dropping jump from GPT-3 to GPT-4. One source inside OpenAI put it bluntly: as recently as June, none of their experimental models felt worthy of the GPT-5 name. They're shipping it anyway.

Sam Altman's been all over the map about this release. One day he's comparing it to the Manhattan Project and saying he's "scared." The next, he's posting screenshots of GPT-5 recommending TV shows about AI. He told podcaster Theo Von that GPT-5 made him feel "useless"—because it wrote a good email. An email. That's the revolution we've been waiting for?

The Orion That Never Flew

OpenAI's first crack at building GPT-5 went sideways. They called it Orion internally, expecting it to blow past GPT-4. When it didn't deliver, they quietly released it as GPT-4.5 instead. Remember GPT-4.5? Neither does anyone else. It ran slower than GPT-4, cost more, and vanished faster than a startup's runway.

The problem wasn't just one bad model. OpenAI hit two walls at once. First, they ran out of high-quality training data. Turns out you can't scrape the entire internet twice. Second, the models stopped behaving predictably at scale. What worked in small tests fell apart when they cranked up the size. Training runs that take months would fail because of hardware problems, and researchers couldn't predict performance until the very end.

Boris Power, OpenAI's head of applied research, posted Monday that he's "excited to see how the public receives GPT-5." Corporate speak for "we're nervous but launching anyway."

What You Actually Get

So what's actually new? Three main things. First, the context window jumps to 256,000 tokens, double what GPT-4 could handle. Basically, it won't forget what you said five minutes ago. Second, they've crammed everything into one model—text, images, sound, possibly video. No more juggling between different versions. Third, they're using something called mixture-of-experts, which supposedly runs more efficiently. We'll see.
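For readers who haven't met the term, here's a toy sketch of how mixture-of-experts routing usually works: a gate scores every expert, only the top few actually run, and their outputs get blended. Everything in it (the four tiny "experts," the gate scores, the `route_top_k` name) is made up for illustration. It shows the general technique, not anything OpenAI has disclosed about GPT-5.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, experts, x, k=2):
    """Run only the k highest-scoring experts and mix their outputs.

    Hypothetical helper: in a real model the gate scores come from a
    learned layer, and the experts are neural sub-networks.
    """
    ranked = sorted(range(len(experts)),
                    key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Four stand-in "experts" (plain functions) and made-up gate scores.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
gate_scores = [0.1, 2.0, -1.0, 2.0]

out, used = route_top_k(gate_scores, experts, 3.0, k=2)
print(f"experts used: {used}, blended output: {out}")
```

The efficiency argument is that only `k` of the experts do any work per input, so the model can carry many more parameters than it pays for on each request.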

The model comes in three flavors: full GPT-5 for heavy lifting, GPT-5 mini for quick stuff, and GPT-5 nano for embedded systems. ChatGPT Plus subscribers get first dibs. Free users wait, as usual.

Early testing shows PhD-level performance on reasoning tasks. Sounds impressive until you hear about the costs. The o3-pro model underlying some of GPT-5's capabilities charges $80 and takes minutes to answer simple questions. One test: "Hi, I'm Sam Altman." The model overthought it for several minutes, burning through computing power like a philosophy major discovering coffee.

Competition Breathing Down Their Neck

Why release now if it's not ready? Look around. Google's Gemini keeps getting better. Anthropic's Claude actually beats OpenAI at coding now—OpenAI's supposed specialty. Meta gives away models for free that match what OpenAI was charging for a year ago.

Microsoft dropped $13.5 billion into OpenAI and wants results. Investors are pushing the company to switch from nonprofit to for-profit by year's end. The $157 billion valuation needs justification. Some reports suggest OpenAI might declare artificial general intelligence just to escape its Microsoft contract. You know what that looks like? Panic.

The timing matters. OpenAI owned 2023 when GPT-4 dropped. Then 2024 rolled around and suddenly everybody had something just as good. Now in 2025, they need something—anything—to stay ahead. Even if that something is more evolutionary than revolutionary.

The Plateau Nobody Wants to Discuss

Gary Marcus called it. Bill Gates predicted it. Even Ilya Sutskever, OpenAI's former chief scientist, admitted it: pure scaling isn't getting us to superintelligence. The transformer architecture that powers these models is running out of room to grow.

OpenAI increasingly relies on tricks like reinforcement learning and "test-time compute"—basically letting the model think harder about tough problems. It works, sort of. Sure, they got their model to ace the International Mathematical Olympiad. But here's the reality: you're souping up an engine that needs replacing, not tweaking.

The industry calls the underlying problem the "data wall": you can't train on data that doesn't exist. Two other limits compound it. You can't keep making models bigger when they already cost millions to train, and you can't predict what works when small improvements disappear at scale.

Multiple sources confirm OpenAI spent massive resources training models that didn't meet benchmarks. The June revelation is particularly damning: after months of work, nothing they built deserved the GPT-5 label. But competitive pressure trumps perfectionism.

Altman's Strange Dance

The CEO's messaging has been bizarre. He writes about humanity approaching "digital superintelligence" in his "Gentle Singularity" post. Then he demonstrates GPT-5's powers by... having it recommend TV shows. He name-drops the Manhattan Project while showing off email composition.

Sunday's screenshot revealed GPT-5 accurately pulling Rotten Tomatoes scores for an animated show called "Pantheon." The model called it "cerebral, emotional, and philosophically intense." Accurate? Yes. Revolutionary? Please.

This disconnect between rhetoric and reality defines the GPT-5 story. Altman knows the model represents incremental progress, not transformation. But he needs the world to believe otherwise. Hence the fear, the historical comparisons, the carefully orchestrated leaks.

Thursday's livestream will likely follow the pattern. Big promises, impressive demos, careful avoidance of the core truth: the easy gains are gone. What's left is expensive, incremental, and uncertain.

Why this matters:

• The days of massive AI breakthroughs are over. Getting better now costs a fortune and takes forever. That changes everything about who can compete and how.

• The race now shifts from raw capability to efficiency and specialization, which explains why OpenAI is launching three versions instead of one breakthrough model.

❓ Frequently Asked Questions

Q: When will free users get GPT-5?

A: Paying subscribers ($20/month) should see it within days. Free users? Expect to wait months. GPT-4 launched for Plus members in March 2023. Free users got it 14 months later in May 2024.

Q: Why did GPT-4.5 fail so badly?

A: OpenAI tried to build GPT-5 and called it Orion. Didn't work. The thing ran slower than GPT-4, cost more money, and did worse on tests. They shipped it as GPT-4.5 anyway. Nobody used it. They killed it fast.

Q: What's this 256,000 token context window about?

A: A 256,000-token window holds roughly 190,000 words, or about 400 pages of text. GPT-4 topped out at half that. So you can dump in massive documents, talk for hours, and it won't lose track. Big deal for serious work.
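For a sense of how a token count maps to words and pages, here's a rough sketch. The 0.75 words-per-token ratio and the 500 words-per-page figure are common rules of thumb, not numbers from OpenAI, and real ratios shift with language and tokenizer.

```python
# Rough conversion from a token budget to words and pages.
# ASSUMPTIONS (not from OpenAI): ~0.75 English words per token,
# ~500 words per printed page.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def context_capacity(tokens: int) -> tuple[int, int]:
    """Return (approx_words, approx_pages) for a given token budget."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages


if __name__ == "__main__":
    for label, tokens in [("128K window", 128_000), ("256K window", 256_000)]:
        words, pages = context_capacity(tokens)
        print(f"{label}: ~{words:,} words, ~{pages} pages")
```

Under those assumptions, a 256,000-token window comes out a little under 200,000 words and a little under 400 pages, so treat the round numbers as ballpark figures.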

Q: How much does it cost OpenAI to train these models?

A: OpenAI burns through about $8 billion annually on research and training. Individual training runs cost millions and can take months. The company expects $12.7 billion in revenue this year but still loses money on each model development cycle.

Q: What's the "data wall" everyone keeps mentioning?

A: AI companies have basically scraped the entire internet for training data. There's no more high-quality text left to feed these models. Making fake data doesn't help much, and people aren't writing new stuff fast enough for what AI training needs.

Q: What's test-time compute and why should I care?

A: Instead of making models bigger, OpenAI now lets them think longer on hard problems. The o3-pro model spends minutes and $80 worth of computing power per complex question. It works but shows that brute force scaling has hit its limits.
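Here's a back-of-the-envelope sketch of why "thinking longer" gets expensive. It assumes billing is linear in generated tokens and that hidden reasoning tokens are billed like visible output. The price and token counts are hypothetical placeholders, not OpenAI's figures.

```python
# Back-of-the-envelope cost of test-time compute.
# ASSUMPTIONS: cost is linear in generated tokens, and reasoning tokens
# are billed the same as answer tokens. All numbers are placeholders.


def answer_cost(reasoning_tokens: int, answer_tokens: int,
                usd_per_million_tokens: float) -> float:
    """Estimated cost in USD for one response."""
    total_tokens = reasoning_tokens + answer_tokens
    return total_tokens * usd_per_million_tokens / 1_000_000


PRICE = 50.0  # hypothetical USD per million generated tokens

quick = answer_cost(reasoning_tokens=0, answer_tokens=200,
                    usd_per_million_tokens=PRICE)
deliberate = answer_cost(reasoning_tokens=50_000, answer_tokens=200,
                         usd_per_million_tokens=PRICE)

print(f"quick reply:      ${quick:.3f}")
print(f"deliberate reply: ${deliberate:.3f}")
print(f"cost multiplier:  {deliberate / quick:.0f}x")
```

The visible answer is the same length in both cases. The bill grows because of the reasoning tokens the user never sees.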

Q: Will GPT-5 be available through OpenAI's API for developers?

A: OpenAI hasn't confirmed API availability yet. Based on past releases, expect API access for paying developers within weeks of launch. GPT-4's API opened to all developers four months after its initial release.

Q: Why does Ilya Sutskever's opinion matter?

A: This guy co-founded OpenAI. Ran all the tech stuff until May 2024. Built GPT-3, built GPT-4. So when he comes out and says throwing more compute at models won't get us to superintelligence? That's huge. He spent years saying the opposite.