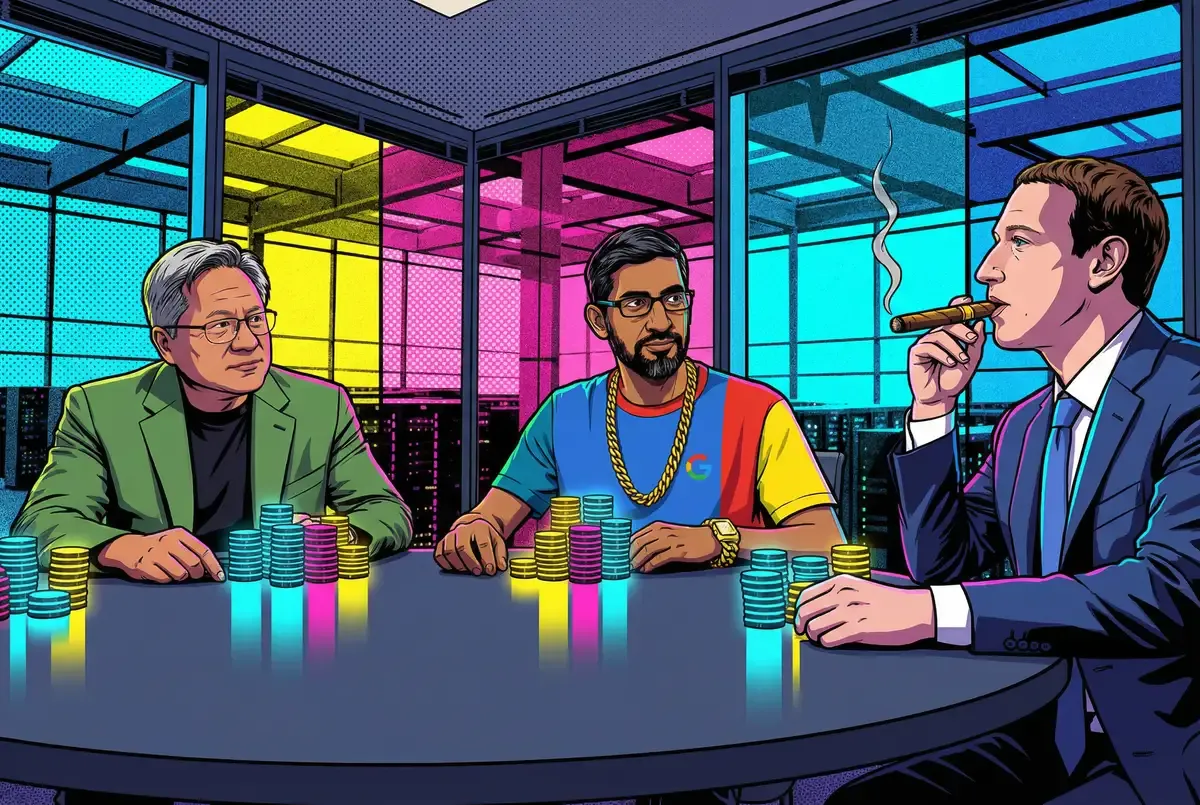

Wall Street saw the headline and panicked. Meta Platforms, one of Nvidia's largest customers with $72 billion in planned AI spending this year, is reportedly negotiating to buy Google's tensor processing units. Nvidia shares dropped as much as 7% on Tuesday morning before paring the loss to 2.6%. AMD cratered 8%. Alphabet climbed past $2.4 trillion in market cap, with traders pricing in a potential run toward the $4 trillion mark.

Pundits rushed to declare this the moment Google finally cracks Nvidia's GPU stranglehold. The chipmaker's biggest customers abandoning ship. That framing misses what's actually unfolding here. Badly.

What the Meta-Google discussions actually reveal is messier and more interesting than supplier defection. Competing incentives. Ecosystem politics. Strategic maneuvering dressed up as procurement. This is what AI infrastructure looks like in 2025. Meta isn't walking away from Nvidia. The game being played involves chips, sure, but the silicon might matter less than everything surrounding it.

The Breakdown

• Meta's talks with Google likely focus on inference optimization for Llama models, not replacing Nvidia for core training workloads

• PyTorch compatibility creates friction—Meta invented the dominant AI framework, and Google's TPUs require a translation layer to run it

• Anthropic signed for up to one million Google TPUs and, separately, a $30 billion Microsoft/Nvidia partnership: everyone's hedging, not switching

• AMD's 8% drop versus Nvidia's 2.6% decline signals markets fear custom ASICs threaten AMD's positioning more than Nvidia's dominance

The PyTorch Problem Nobody's Discussing

Here's the detail that should give pause to anyone declaring this the beginning of Nvidia's decline: Meta invented PyTorch. The deep learning framework that dominates machine learning development worldwide came out of Facebook's AI Research lab. Four million developers have built their workflows around Nvidia's CUDA platform. PyTorch sits at the center of that relationship, linking developer habits to Nvidia hardware in ways that took years to cement.

TPUs require a workaround. Google's chips can't run PyTorch directly. You need a translation layer, PyTorch/XLA, which routes PyTorch operations through the XLA compiler before they reach the chip, and that introduces friction. Performance overhead. Added complexity in the development pipeline. If Meta were to adopt TPUs at scale for its core AI development, it would be undermining the very framework it created and maintains.

The strategic implications run deeper. PyTorch's dominance helps Meta. Every researcher who learns PyTorch, every company that builds production systems on it, every startup that defaults to the framework: all of them reinforce an ecosystem where Meta's AI expertise translates directly into influence. Legitimizing a hardware platform that requires workarounds for PyTorch doesn't serve that interest.

So why would Meta even entertain these conversations? The answer likely has nothing to do with training its own models.

What Meta Actually Needs From Google

The Information's reporting suggests the talks involve Meta potentially leasing TPUs through Google Cloud starting next year, with deployment in Meta's own data centers beginning in 2027. That timeline matters. If this were about replacing Nvidia for core model training, Meta would need chips yesterday, not in two years.

The Register's analysis offers a more plausible explanation: inference optimization for Llama. Meta has released its family of large language models publicly on repositories like Hugging Face, where enterprises can download and run them on various hardware platforms. Including Google's TPUs. If Llama doesn't run efficiently on TPUs, enterprises choosing Google Cloud have less reason to adopt Meta's models over alternatives.

Meta doesn't need to own TPUs to benefit from this. It needs Llama to work well wherever enterprises want to run it. The conversations with Google could be primarily about ensuring that compatibility, with chip purchases serving as both a development testbed and a goodwill gesture toward a distribution partner.

Consider the economics. Running inference on a model requires an order of magnitude fewer compute resources than training one. Inference workloads also benefit from proximity to end users. Google operates one of the world's largest cloud infrastructures, already hosting countless enterprise AI deployments. If Meta wants Llama to compete with OpenAI's models and Anthropic's Claude in the enterprise market, it needs Google Cloud customers to have a good experience running it.

The chip deal, if it happens, might be less about Meta's infrastructure needs and more about ecosystem positioning. That's not a threat to Nvidia. It's just business development.

The Leverage Game

There's another explanation that requires no complex strategic analysis: negotiating leverage. The simplest interpretation of Meta's public flirtation with Google chips is that it strengthens Meta's hand in conversations with Nvidia.

Nvidia's Blackwell GPUs remain supply-constrained. Allocation decisions carry enormous weight when every major tech company wants more chips than Nvidia can deliver. Demonstrating credible alternatives, even imperfect ones, gives buyers leverage they otherwise lack.

Look at when this leaked. Nvidia had just posted earnings that cleared analyst estimates. The stock was riding momentum from that beat. Then Meta's chip talks hit the news cycle, injecting doubt into what had been a clean dominance story. Maybe coincidence. Procurement executives know better.

Nvidia's response suggests it got the message. The company posted a statement on X that read like a masterclass in competitive positioning dressed as magnanimity: "We're delighted by Google's success, they've made great advances in AI and we continue to supply to Google. NVIDIA is a generation ahead of the industry, it's the only platform that runs every AI model and does it everywhere computing is done."

That's not a company feeling secure. That's a company reminding everyone, including its own customers, why it still matters. The emphasis on running "every AI model" and working "everywhere computing is done" directly addresses the flexibility concerns that make ASICs like TPUs attractive in the first place.

Everybody's Hedging. That's The Point.

The Meta-Google talks don't exist in isolation. Last month, Anthropic announced plans to use up to one million Google TPUs to train and serve its next generation of Claude models. A deal worth tens of billions of dollars. Then, just last week, Anthropic announced a separate strategic partnership with Microsoft and Nvidia, purchasing $30 billion in Azure compute capacity with Nvidia committing up to $10 billion in investment and Microsoft adding $5 billion.

One million Google TPUs and $30 billion with Nvidia's cloud partner. Anthropic isn't choosing sides. It's choosing all of them.

This is the new normal in AI infrastructure. The stakes are too high and the future too uncertain for any major player to bet everything on a single supplier relationship. Google Cloud executives have reportedly suggested their TPU strategy could capture 10% of Nvidia's annual revenue. That's billions of dollars, but it's also an implicit acknowledgment that Nvidia will retain the other 90%.

Core Scientific's CEO Adam Sullivan captured the competitive dynamics bluntly: "The biggest story in AI right now is that Google and Nvidia are being extraordinarily competitive. They're in a race to secure as much data-center capacity as they can." Both companies are offering financing arrangements to ease chip purchases. Both are courting the same customers. The goal isn't market share in traditional terms. As Sullivan put it, "They don't care about how much revenue they generate. This is about who gets to AGI first."

That framing explains why Meta's discussions with Google don't necessarily threaten Nvidia's position. In a race where everyone needs every possible advantage, diversifying suppliers makes sense regardless of which chips are technically superior. Meta can use Google TPUs for specific workloads while still consuming enormous quantities of Nvidia hardware for others.

The Technical Realities Nvidia Is Betting On

Nvidia's claim to be "a generation ahead of the industry" isn't empty boasting. The company's Blackwell Ultra provides 10 petaflops of performance on four-bit floating point calculations. Its upcoming Rubin architecture promises further gains. And then there's CUDA. Nvidia has spent close to twenty years building out that software ecosystem, layer upon layer of optimization work that competitors would need a decade to match. That moat doesn't show up on spec sheets, but it shapes purchasing decisions every day.

Google's Ironwood TPUs have their own advantages. The chips scale to pods containing up to 9,216 accelerators, far beyond Nvidia's 72-GPU rack configurations. A full pod can deliver 42.5 exaflops of performance. For organizations running massive training jobs on Google Cloud, that scale advantage matters.
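A rough back-of-the-envelope sketch helps put those scale figures side by side. It uses only the numbers quoted in this article (9,216 chips and 42.5 exaflops per Ironwood pod, 72 GPUs per Nvidia rack, 10 petaflops of four-bit performance per Blackwell Ultra). The function names are illustrative, and because the article gives no numeric precision for the TPU figure, the two throughput numbers are not necessarily like-for-like.

```python
# Figures as quoted in the article; none of them are measured or vendor-verified here.
IRONWOOD_POD_CHIPS = 9_216
IRONWOOD_POD_EXAFLOPS = 42.5
NVIDIA_RACK_GPUS = 72
BLACKWELL_ULTRA_PFLOPS_FP4 = 10.0


def per_chip_petaflops(pod_exaflops: float, chips: int) -> float:
    """Average per-accelerator throughput implied by a pod-level figure."""
    return pod_exaflops * 1_000 / chips  # 1 exaflop = 1,000 petaflops


def rack_exaflops(gpus: int, petaflops_per_gpu: float) -> float:
    """Aggregate throughput of one rack, expressed in exaflops."""
    return gpus * petaflops_per_gpu / 1_000


tpu_chip_pflops = per_chip_petaflops(IRONWOOD_POD_EXAFLOPS, IRONWOOD_POD_CHIPS)
nvidia_rack_ef = rack_exaflops(NVIDIA_RACK_GPUS, BLACKWELL_ULTRA_PFLOPS_FP4)
domain_size_ratio = IRONWOOD_POD_CHIPS // NVIDIA_RACK_GPUS

print(f"Implied Ironwood throughput per chip: {tpu_chip_pflops:.2f} petaflops")
print(f"One 72-GPU rack at 10 PF per GPU:     {nvidia_rack_ef:.2f} exaflops")
print(f"Pod size relative to one rack:        {domain_size_ratio}x accelerators")
```

On these numbers the pod figure works out to roughly 4.6 petaflops per chip, and a pod holds 128 times as many accelerators as a single rack. The pod's advantage is the size of the tightly connected domain, not per-chip speed.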

But TPU deployments look nothing like the AMD- and Nvidia-based clusters Meta currently operates. TPUs connect via optical circuit switches into toroidal mesh topologies, requiring different programming models and integration approaches than traditional GPU clusters. The transition cost isn't just financial. It's organizational and technical.
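To make the "toroidal mesh" idea concrete, here is a minimal sketch of how neighbors and hop counts work when each axis of a grid wraps around on itself. It assumes a three-dimensional torus and a hypothetical 4x4x4 shape chosen purely for illustration; the article specifies neither, and the function names are not taken from any Google tooling.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(node: Coord, dims: Coord) -> List[Coord]:
    """Directly linked nodes in a 3D torus: one step each way along every axis, with wraparound."""
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            nxt = list(node)
            # Modulo arithmetic is what turns a plain mesh into a torus:
            # stepping off one edge re-enters on the opposite edge.
            nxt[axis] = (nxt[axis] + step) % dims[axis]
            neighbors.append(tuple(nxt))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum hop count between two nodes, taking the shorter way around each ring."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))


def mesh_hops(a: Coord, b: Coord) -> int:
    """Hop count on the same grid without wraparound links, for comparison."""
    return sum(abs(x - y) for x, y in zip(a, b))


dims = (4, 4, 4)  # hypothetical shape, for illustration only
corner, opposite = (0, 0, 0), (3, 3, 3)
print(torus_neighbors(corner, dims))
print(torus_hops(corner, opposite, dims), mesh_hops(corner, opposite))
```

In this toy grid the two opposite corners are 3 hops apart with wraparound links and 9 hops apart without them. Software written for a switched GPU cluster generally does not plan communication around that kind of geometry, which is one way to read the "different programming models" point above.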

The Register's analysis suggests that even if Google agreed to sell chips directly to Meta rather than leasing them through Google Cloud, the Facebook parent would face significant integration challenges. Meta uses custom server and rack designs in its data centers. Adapting them for TPU deployments would require substantial engineering investment.

None of this kills the deal. The scope, though, is probably narrower than headlines suggested. TPUs for certain inference jobs where Google's architecture shines. Nvidia for training runs and the bulk of AI compute. Both suppliers, different roles.

What AMD's Crash Actually Reveals

Here's the number that should concern analysts more than Nvidia's 2.6% decline: AMD dropped 8% on the same news. That's not a reaction to Meta potentially buying fewer AMD chips. AMD isn't Meta's primary AI supplier.

The steeper AMD decline reflects a different fear entirely. AMD has been positioning its MI300 series as a viable alternative to Nvidia's data center GPUs. If Google successfully sells TPUs to external customers, it validates the custom ASIC approach that threatens AMD's GPU-based strategy more directly than it threatens Nvidia's.

Nvidia has scale, ecosystem lock-in, and generational performance leads. AMD has been competing on price-performance ratios and openness to customers burned by Nvidia's allocation constraints. Google entering the market with custom chips optimized for AI workloads undercuts AMD's positioning as the alternative, not Nvidia's position as the default.

The market's differential reaction suggests investors understand this distinction even if the headline narrative doesn't capture it. Nvidia losing a few points of market share to purpose-built AI accelerators is priced into expectations. AMD losing its role as the credible alternative is a more fundamental threat to its data center strategy.

Why This Matters

The Meta-Google chip discussions will likely result in some form of deal. Meta gains leverage, inference optimization, and risk diversification. Google gains a marquee customer that validates its TPU-as-product strategy. Neither outcome requires Nvidia to lose its dominant position.

For enterprise AI buyers: More suppliers means more options. TPUs from Google, Trainium from Amazon, AMD's MI chips. Each one becomes a bargaining chip in Nvidia conversations. Even buyers who end up with GPUs anyway benefit from the competition.

For investors: Right now, this market isn't winner-take-all. Demand outstrips supply across every major chip platform. Meta buying TPUs probably means Meta is buying more total chips, not shifting spend away from Nvidia.

For Nvidia specifically: The company's real challenge isn't customer defection. It's whether scaling laws continue to hold. If AI development shifts toward efficiency rather than raw compute, the assumption that customers will always need more and faster chips becomes questionable. Jensen Huang citing a text from Demis Hassabis about scaling laws being "intact" reveals where Nvidia's attention is actually focused. The existential question isn't Google's TPUs. It's whether the AI compute treadmill keeps accelerating.

❓ Frequently Asked Questions

Q: What exactly are TPUs and how do they differ from Nvidia's GPUs?

A: TPUs (tensor processing units) are custom chips Google designed specifically for AI workloads. Unlike Nvidia's general-purpose GPUs, TPUs are ASICs—application-specific integrated circuits built for one task. This makes them more energy-efficient for AI but less flexible. Google's Ironwood TPUs can scale to 9,216 chips per cluster, delivering 42.5 exaflops of performance.

Q: What's the difference between training and inference in AI?

A: Training teaches an AI model by processing massive datasets, requiring enormous compute power. Inference is when a trained model generates responses to user queries. Inference needs roughly 10x less compute than training. This distinction matters because Meta might use TPUs for inference (running Llama for customers) while keeping Nvidia GPUs for the heavy lifting of training new models.

Q: What is CUDA and why does it give Nvidia such a strong advantage?

A: CUDA is Nvidia's software platform that lets developers write code for its GPUs. Over nearly 20 years, Nvidia has built optimization tools that 4 million developers now depend on. Switching to different hardware means rewriting code and abandoning years of accumulated expertise. This software lock-in protects Nvidia more than raw chip performance does.

Q: What are "scaling laws" and why does Nvidia care about them?

A: Scaling laws suggest that AI models improve predictably when you add more data and compute power. If true, companies will keep buying more chips indefinitely. Jensen Huang has said Demis Hassabis texted him specifically to say scaling laws are "intact." If this theory breaks down, with efficiency gains replacing brute-force compute, Nvidia's growth story changes fundamentally.

Q: Why would Google start selling TPUs now after keeping them internal for years?

A: Google Cloud executives reportedly believe external TPU sales could capture 10% of Nvidia's annual revenue—billions of dollars. Google has used TPUs internally since 2015 and offered cloud rentals since 2018, but never sold chips outright. Landing Meta as a customer would validate TPUs as a real Nvidia alternative and attract other buyers.