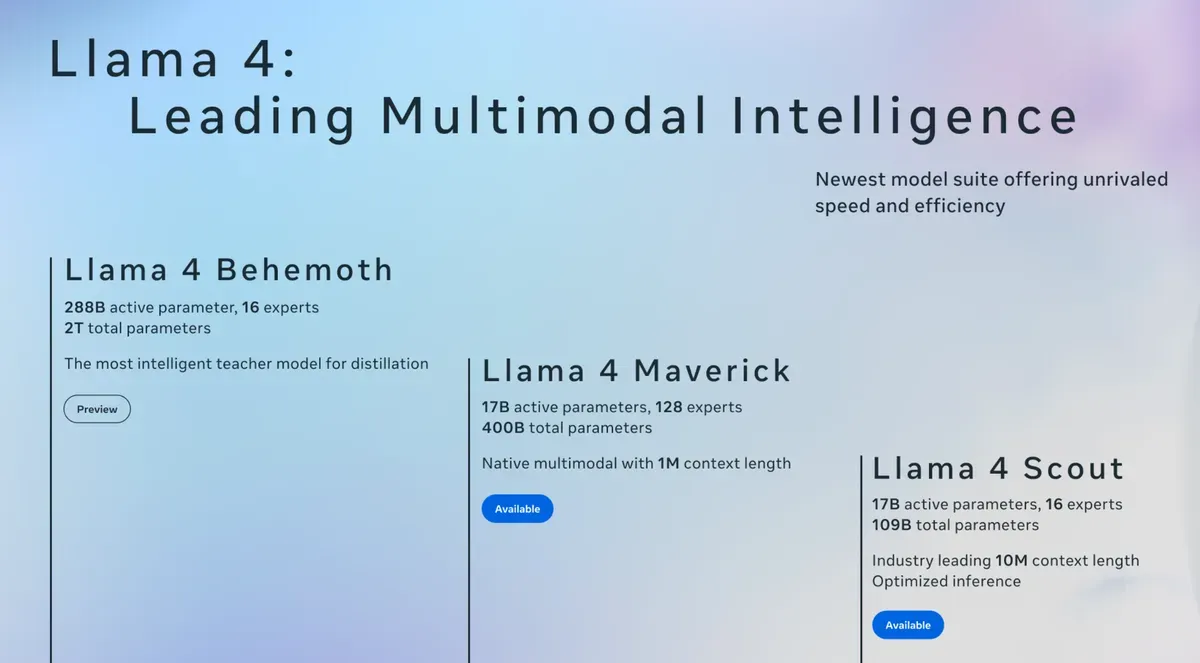

A third model, the massive Llama 4 Behemoth, remains in training. Meta claims these models match or beat competitors like OpenAI's GPT-4o and Google's Gemini on key benchmarks.

Scout runs on a single high-end Nvidia H100 GPU. That's remarkable for an AI model that can process up to 10 million tokens, about 20 novels' worth of text. Meta says it outperforms Google's Gemma 3 and Mistral 3.1 across standard benchmarks.

Maverick targets more demanding tasks. Meta says it matches DeepSeek v3 in coding and reasoning while using less than half as many active parameters. Meta also pitches it as strong at understanding images and at creative writing.

Both models use a clever trick called "mixture of experts." Instead of activating every part of the model for every input, a router sends each token to only the few specialist sub-networks ("experts") it needs. Think of it as assembling the perfect team for each job rather than calling an all-hands meeting.

This approach cuts computing costs and energy use. Maverick, for example, has 400 billion total parameters but activates only 17 billion for each token. Meta says that efficiency lets it run on a single server while matching the capabilities of much larger models.
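To make the routing idea concrete, here is a toy sketch of top-k mixture-of-experts routing in plain Python. It is not Meta's implementation: the `ToyMoELayer` class, the tiny linear "experts", the 8-expert / top-2 sizes, and the random weights are all hypothetical choices for illustration. The point it shows is that a learned router scores every expert, but only the top-k experts actually run for each token.

```python
import math
import random

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(seed, dim):
    # Hypothetical toy expert: a random dim x dim linear map.
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    def expert(x):
        return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return expert

class ToyMoELayer:
    """Hypothetical top-k MoE layer: the router picks top_k experts per token."""

    def __init__(self, num_experts, dim, top_k, seed=0):
        rng = random.Random(seed)
        self.experts = [make_expert(seed + i + 1, dim) for i in range(num_experts)]
        # One router weight vector per expert; its dot product with the token is that expert's score.
        self.router = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
        self.top_k = top_k
        self.calls = [0] * num_experts  # how many times each expert actually ran

    def forward(self, x):
        # Score every expert, but keep only the top_k highest-scoring ones.
        logits = [sum(r * xi for r, xi in zip(row, x)) for row in self.router]
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        weights = softmax([logits[i] for i in chosen])
        out = [0.0] * len(x)
        for w, i in zip(weights, chosen):
            self.calls[i] += 1  # only the chosen experts do any work
            y = self.experts[i](x)
            out = [o + w * yi for o, yi in zip(out, y)]
        return out, chosen

layer = ToyMoELayer(num_experts=8, dim=4, top_k=2)
tokens = [[0.5, -1.0, 0.25, 2.0], [1.0, 0.0, -0.5, 0.3], [-0.2, 0.9, 1.1, -1.4]]
for t in tokens:
    out, chosen = layer.forward(t)
    print("experts used:", chosen, "output:", [round(v, 3) for v in out])
print("expert calls:", layer.calls)  # sums to top_k * number of tokens
```

With 8 experts and top-2 routing, each token touches only a quarter of the expert weights, the same "activate a small slice" principle behind Maverick's 17-billion-of-400-billion ratio, though real models use far larger experts, learned weights, and their own routing schemes.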

Meta's biggest bet sits in the training room: Llama 4 Behemoth. With 288 billion active parameters and about 2 trillion total parameters, it aims to lead the AI race. Meta says early tests show it beating GPT-4.5 and Claude 3.7 Sonnet on science and math benchmarks.
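The parameter counts above translate into a quick back-of-the-envelope comparison. This sketch uses only the article's figures (17B of 400B for Maverick, 288B of about 2T for Behemoth); the "2 FLOPs per active parameter per token" cost estimate is a common rule of thumb for a transformer forward pass, not a Meta number, and it ignores attention costs that grow with context length.

```python
# Parameter counts (in billions) as reported in the article.
MODELS = {
    "Llama 4 Maverick": {"active_b": 17, "total_b": 400},
    "Llama 4 Behemoth": {"active_b": 288, "total_b": 2000},
}

def active_fraction(active_b, total_b):
    # Share of the model's weights that participate in processing one token.
    return active_b / total_b

def approx_flops_per_token(active_b):
    # Rule-of-thumb assumption: ~2 FLOPs per active parameter per token (forward pass only).
    return 2 * active_b * 1e9

for name, p in MODELS.items():
    frac = active_fraction(p["active_b"], p["total_b"])
    flops = approx_flops_per_token(p["active_b"])
    print(f"{name}: {frac:.1%} of weights active, ~{flops:.2e} FLOPs per token")
```

Maverick works through roughly 4% of its weights per token, Behemoth roughly 14%. All the weights still have to sit in memory, which is why total parameter count, not just the active slice, sets the hardware floor.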

The models work natively across text, images, and video frames, and they can follow temporal sequences. Meta says they were pre-trained on inputs of up to 48 images, and post-training tests with up to eight images showed strong results on visual reasoning tasks.

Meta also tackled bias head-on. Previous AI models often showed political leanings and refused to engage with certain topics. Meta says Llama 4 refuses debated political and social topics less than 2% of the time, down from 7% for Llama 3.3, and that it gives strongly slanted answers at a rate comparable to Grok's and half that of Llama 3.3.

The company built in safety features at multiple levels. These include:

- Pre-training filters to screen problematic content

- Post-training techniques to ensure helpful responses

- System-level tools like Llama Guard to catch harmful inputs and outputs

- Automated testing through its new Generative Offensive Agent Testing (GOAT) system

Developers can download Scout and Maverick today from llama.com or Hugging Face. The models also power Meta's AI assistant across WhatsApp, Messenger, Instagram, and meta.ai.

Meta calls these models "open source," but some disagree. The license requires companies whose platforms have more than 700 million monthly active users to get Meta's permission first. The Open Source Initiative said similar restrictions on Llama 3 made it "not truly open source."

The release comes amid intense competition. Chinese tech giants like Alibaba and Tencent have recently launched new AI models, and Baidu made its Ernie Bot free. Western companies are scrambling to respond.

Meta plans to reveal more at LlamaCon on April 29. The conference should clarify how Meta sees AI's future and its role in shaping it.

Why this matters:

- Meta just showed that powerful AI models can run on comparatively modest hardware, down to a single GPU for Scout. That could democratize AI development and cut its environmental impact

- The "mixture of experts" approach could ease one of AI's biggest problems: the massive computing power needed to run modern models. If it works, we'll see more capable AI in more places

Read on, my dear:

- Meta: The Llama 4 herd: The beginning of a new era of natively multimodal AI innovation