Satya Nadella walked Dwarkesh Patel and Dylan Patel through Microsoft's new Atlanta data center last week, past rows of cooling manifolds and fiber-optic cables stretching across 85 acres. The facility hosts hundreds of thousands of Nvidia GB200 GPUs in a two-story configuration. SemiAnalysis estimates the GPU block at roughly 300 megawatts. Nadella called it a "super factory." He didn't call it a surrender.

But that's what the numbers say.

What Changed

• Microsoft walked away from 3.5 gigawatts of planned AI capacity in mid-2024, after accounting for 60% of hyperscaler data center pre-leasing at its peak, and lost sites to competitors

• OpenAI signed contracts worth more than $300 billion with Oracle after Microsoft couldn't match Oracle's execution speed on gigawatt-scale infrastructure buildout

• SemiAnalysis projects Oracle surpassing Microsoft in AI capacity by 2027, growing from one-fifth of Microsoft's size in eighteen months

• Microsoft now targets 9.5 gigawatts by 2028, down from 12-13 gigawatts before the pause, per SemiAnalysis estimates

The Pause That Wasn't Strategic

Microsoft dominated AI infrastructure buildout in 2023 and early 2024. The company accounted for 60% of all hyperscaler data center pre-leasing at peak, according to SemiAnalysis tracking. No competitor came close. Microsoft signed leases for gigawatts of capacity in Phoenix, Chicago, the UK, the Nordics, Australia, Japan, India. Then in mid-2024, the company froze.

Not slowed. Froze.

Microsoft walked away from gigawatts of non-binding letters of intent. Sites weeks from lease execution went to Oracle, Meta, Google, Amazon. Self-build programs already under construction? SemiAnalysis documents roughly 950 megawatts of IT capacity where Microsoft pumped the brakes. The firm estimates the company walked away from 3.5 gigawatts that would've been operational by 2028. Competitors are building those sites now.

Nadella's explanation in the Dwarkesh interview frames this as strategic wisdom. "We didn't want to just be a hoster for one company and have just a massive book of business with one customer," he said, referring to OpenAI. Microsoft wanted a "fungible fleet" serving diverse AI workloads, not bare-metal GPU clusters locked into multi-year contracts with foundation model companies.

The timing tells a different story. Microsoft's pause began around the time OpenAI started actively diversifying its compute contracts beyond Microsoft's offerings. Oracle's Abilene, Texas facility went from groundbreaking to initial operations within months, according to SemiAnalysis, while Microsoft's Wisconsin Fairwater site, which broke ground two years earlier, still wasn't fully operational. OpenAI has commitments to Oracle that public reporting typically cites as a $300 billion, five-year deal, though SemiAnalysis models total OpenAI-Oracle contract value over the last twelve months at more than $420 billion. Microsoft lost the race it had been winning.

Nadella claims Microsoft chose not to chase that business. "That's not a Microsoft business," he told the interviewers. But SemiAnalysis data shows Microsoft is now buying capacity from neoclouds like Iris Energy, Nebius, and Lambda Labs, reselling GPUs Microsoft doesn't own to customers Microsoft couldn't serve. That's not strategy. That's scrambling.

OpenAI's Escape Velocity

The revised Microsoft-OpenAI agreement announced last month tells you which company gained leverage. Microsoft keeps exclusive rights to OpenAI's stateless APIs through 2030, with its broader IP and model access now extended through 2032. Those are meaningful rights. But OpenAI can now run its SaaS business, ChatGPT, on any infrastructure. Partners that need stateless API access to OpenAI's models, outside specific exceptions like US government work, must use Azure.

That sounds like Microsoft won exclusivity. Look closer. OpenAI's revenue is growing in its direct consumer and enterprise business, the SaaS layer where Microsoft has no guaranteed access to the compute. The API business Microsoft locked down serves developers who need inference endpoints. That's valuable, but it's not where OpenAI will generate $100 billion in revenue by 2027 if the bullish projections that Altman and some analysts are making actually materialize.

Microsoft also gained access to OpenAI's custom chip development with Broadcom. Nadella emphasized this in the interview: "As they innovate even at the system level, we get access to all of it." Microsoft plans to instantiate OpenAI's designs first for OpenAI, then extend them for Microsoft's own use. That IP access matters. Microsoft's own Maia chip program was the last of the big cloud accelerators to hit the market and, by all accounts, is still in limited deployment. That leaves Microsoft several years behind Google's TPU and Amazon's Trainium in mature, large-scale custom silicon rollout.

But here's what changed. In 2023, OpenAI needed Microsoft for compute. By 2025, OpenAI has Oracle building gigawatt-scale facilities, has contracts with CoreWeave, Nscale, SB Energy, Amazon, and Google. Microsoft went from sole provider to one option among many. The revised agreement formalizes that shift.

Nadella described this as Microsoft avoiding over-concentration risk. "If we're going to build out Azure to be fantastic for all stages of AI, we just need fungibility of the fleet," he explained. Translation: Microsoft couldn't keep pace with OpenAI's scaling demands, so the company repositioned around serving smaller customers with diverse workloads.

Oracle's Surge

SemiAnalysis projects Oracle going from one-fifth of Microsoft's AI infrastructure capacity to larger than Microsoft by the end of 2027. That shift would happen in eighteen months. Oracle now operates the Abilene campus approaching gigawatt scale, with similar projects advancing in other locations. SemiAnalysis estimates gross margins in the mid-30s percent range for Oracle's bare-metal business serving foundation model companies. In the firm's model, that is actually higher than the returns Microsoft is currently getting on AI infrastructure once OpenAI revenue-share is stripped out.

Microsoft's response? Nadella doesn't track those numbers closely. "It doesn't mean I have to chase those," he said when asked about competing hyperscaler capacity. "I have to chase them for not just the gross margin that they may represent in a period of time. What is this book of business that Microsoft uniquely can go clear, which makes sense for us to clear?"

That's a CEO explaining why his company chose not to compete for the fastest-growing segment of cloud computing. Maybe that's wisdom. Or maybe it's accepting defeat and calling it strategy.

Oracle's execution advantage is real. The company moves faster on site selection, power procurement, and construction timelines. Oracle signs agreements and energizes capacity before Microsoft completes environmental reviews. That matters when foundation model companies need compute in quarters, not years. Microsoft's advantages, deeper software integration and enterprise relationships, matter less when the customer wants 50,000 GPUs in a rack-scale configuration running for three years.

The bare-metal business isn't prestigious. It carries lower margins than platform services. But it's where demand is growing fastest, and it's the business Microsoft pioneered with OpenAI's early training clusters. Ceding that market to Oracle doesn't position Microsoft well for the next phase, when today's bare-metal customers become tomorrow's platform users.

Fairwater's Paradox

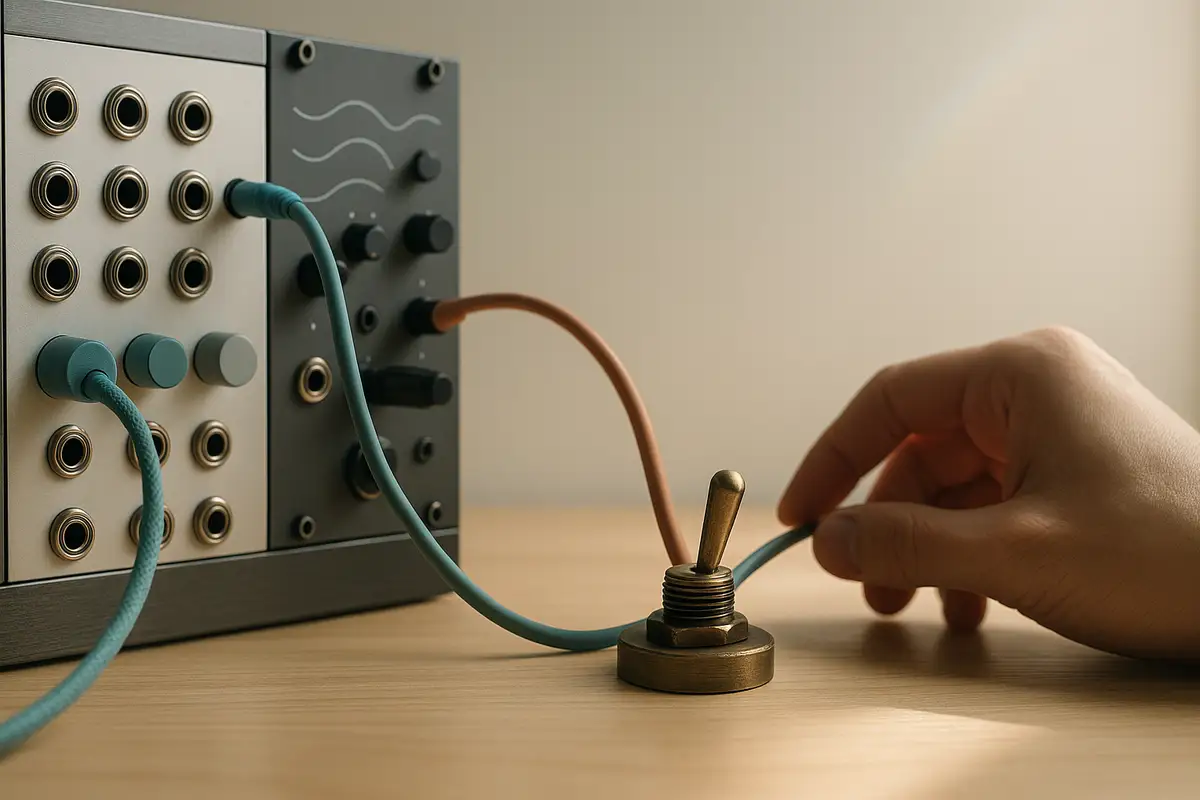

Microsoft's Atlanta Fairwater facility is legitimately impressive. Two-story construction cuts cable runs between racks. That matters when you're connecting hundreds of thousands of GPUs and every bit of added latency compounds. Liquid cooling enables rack densities that air-cooled facilities can't reach without overheating. Microsoft ran 120,000 miles of fiber to link Fairwater sites. SemiAnalysis estimates the AI WAN Microsoft built at over 300 terabits per second today, with engineering paths above 10 petabits per second.

Training runs across multiple data centers need that kind of bandwidth. Otherwise checkpoints corrupt and days of compute disappear. Liquid cooling extends runway as next-gen chips draw more power per rack.

But innovation doesn't equal market position. Microsoft built Fairwater to host the largest training runs in the world. By the time Atlanta came online in late 2025, OpenAI was running those training runs on Oracle and CoreWeave infrastructure. Microsoft's technical achievement arrived after the company had already surrendered its strategic position.

Nadella frames Fairwater as evidence of Microsoft's commitment to leading-edge infrastructure. The facility proves Microsoft can build at scale. What it doesn't prove is that Microsoft will deploy that capability at the pace and in the locations that matter most to customers driving demand. Oracle is building in Abilene, a location chosen for power availability and speed to operational status. Microsoft is building in Atlanta and Wisconsin, locations that serve Microsoft's global Azure footprint but may not align with where frontier model training happens next.

The AI WAN connecting Fairwater sites is a real technical achievement. It enables Microsoft to run distributed training jobs across geographic regions, something no other hyperscaler can match at similar scale. But distributed training introduces communication overhead and complexity. Model companies prefer concentrated compute in single locations when possible. Microsoft built infrastructure for a future training paradigm while competitors serve today's demand.

What Microsoft Actually Won

Microsoft's pivot away from bare-metal hosting toward a "fungible fleet" serving diverse AI workloads makes sense if you believe foundation model training will diffuse across many smaller customers rather than concentrate in a few labs. Nadella clearly believes that. He described the business he wants to be in: "A real workload needs all of these things to go build an app or instantiate an application. The model companies need that to build anything. It's not just like, 'I have a token factory.' I have to have all of these things. That's the hyperscale business."

Right. Enterprise AI workloads need databases, storage, networking, identity management, security, observability. Microsoft offers those services better than Oracle does. If AI deployment follows the cloud computing pattern, where initial infrastructure buildout concentrates in a few large players but monetization comes from millions of smaller customers, Microsoft's position improves over time.

But two problems complicate that thesis. First, Microsoft is now starting from behind in raw capacity. SemiAnalysis estimates Microsoft will operate around 9.5 gigawatts of AI infrastructure capacity by 2028, down from the 12-13 gigawatts the company was tracking toward before the pause. Microsoft will need to catch up while also serving growing demand from existing customers.
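The capacity figures cited here can be cross-checked with simple arithmetic. The sketch below is an illustration only, not SemiAnalysis's actual model: it subtracts the 3.5 gigawatts Microsoft reportedly walked away from out of the pre-pause 12-13 gigawatt range and checks whether the revised 9.5 gigawatt estimate falls inside the result. The variable and function names are hypothetical.

```python
# Figures as cited in this article from SemiAnalysis estimates, in gigawatts.
PRE_PAUSE_RANGE_GW = (12.0, 13.0)  # trajectory before the mid-2024 pause
WALKED_AWAY_GW = 3.5               # capacity Microsoft reportedly abandoned
CURRENT_TARGET_GW = 9.5            # revised estimate for 2028


def implied_range(pre_pause, walked_away):
    """Subtract the abandoned capacity from both ends of the pre-pause range."""
    low, high = pre_pause
    return (low - walked_away, high - walked_away)


low, high = implied_range(PRE_PAUSE_RANGE_GW, WALKED_AWAY_GW)
target_is_consistent = low <= CURRENT_TARGET_GW <= high

# Share of the pre-pause trajectory that the abandoned capacity represents.
share_lost_pct = [WALKED_AWAY_GW / total * 100 for total in PRE_PAUSE_RANGE_GW]

print(f"Implied 2028 capacity: {low:.1f}-{high:.1f} GW")
print(f"Revised target inside implied range: {target_is_consistent}")
print(f"Share of trajectory lost: {min(share_lost_pct):.0f}%-{max(share_lost_pct):.0f}%")
```

Under these inputs the 9.5 gigawatt figure sits at the top of the implied range, which suggests the two SemiAnalysis numbers are at least mutually consistent. The pause cost Microsoft somewhat more than a quarter of its earlier trajectory.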

Second, the foundation model companies Microsoft deprioritized are themselves becoming platforms. OpenAI's API business runs on Azure, but ChatGPT does not. Anthropic serves Claude through its own API and through partnerships with Amazon and Google. These model companies are building the abstractions that matter most to developers. Microsoft's Azure services sit below that layer. The margins may accrue to whoever controls the model interface, not the infrastructure underneath.

Nadella's bet is that trust in American technology infrastructure, combined with Microsoft's enterprise relationships and global footprint, will matter more than winning the raw gigawatts race. "The key, key priority for the US tech sector and the US government is to ensure that we not only do leading innovative work, but that we also collectively build trust around the world on our tech stack," he said. Microsoft's sovereign cloud offerings in Europe, its government clouds, and its compliance frameworks all differentiate Azure from Oracle's bare-metal focus.

Maybe that's the winning strategy. But it's not the strategy Microsoft was pursuing eighteen months ago.

What This Means

Nadella's pre-Ignite media tour served a purpose. Before his deputy Judson Althoff takes the stage in San Francisco next week, Nadella needed to explain why Microsoft's AI infrastructure strategy looks nothing like what the company was building in 2023. The official story is strategic repositioning toward higher-margin platform services and away from low-margin bare-metal hosting. The alternative reading is that Microsoft lost its nerve, moved too slowly, and is now rationalizing that outcome as intentional.

Both can be partly true. Microsoft's Fairwater facilities represent real technical achievement and will serve important workloads. But Oracle is now the infrastructure partner for the most important AI company in the world, after Microsoft spent 2023-24 building that exact business and then walked away from it.

The question for enterprises evaluating AI infrastructure isn't whether Microsoft's pivot toward platform services makes long-term sense. It probably does. The question is whether Microsoft can execute on that vision faster than model companies build their own platforms, and faster than Oracle scales up the stack into the services Microsoft wants to own.

Nadella spent an hour explaining why Microsoft made the right choices. The facilities his team is building suggest the company has world-class technical capability. Whether Microsoft can convert that capability into market leadership after ceding first-mover advantage is what the next two years will determine.

Azure is still growing. GitHub Copilot still leads in subscriptions. Microsoft's Office 365 integration still reaches more knowledge workers than any competitor. But when CEO Satya Nadella has to spend interview time explaining why his company chose not to compete for the fastest-growing segment of its market, something went wrong between vision and execution.

Why This Matters

- For enterprises: Microsoft's infrastructure pause likely implies tighter Azure capacity over at least the next several quarters. Companies planning large AI deployments should model scenarios where Azure availability constrains their roadmaps. Factor in alternative providers even if Azure is the preferred platform.

- For Microsoft investors: SemiAnalysis modeling suggests the company's AI infrastructure business is pulling lower returns than projected, with gross margins compressed by neocloud reselling and OpenAI revenue-sharing arrangements. The platform services Nadella is pivoting toward won't generate comparable revenue until 2027-28, creating a potential near-term growth gap that Q4 and Q1 earnings may need to address.

- For the AI industry: Oracle's emergence as a credible hyperscaler changes infrastructure competition. Foundation model companies now have alternatives to Amazon, Google, and Microsoft. That competitive pressure will benefit model companies but may slow infrastructure innovation as hyperscalers focus on cost reduction over capability expansion.

Frequently Asked Questions

Q: What exactly is Fairwater?

A: Fairwater is Microsoft's designation for its newest class of AI data centers designed specifically for training large models. Each Fairwater site consists of two buildings: a standard 48-megawatt facility for CPU and storage, and a massive 300-megawatt GPU building with two-story construction housing roughly 150,000 Nvidia chips. Microsoft has built Fairwater facilities in Wisconsin and Atlanta, connected by 120,000 miles of fiber optic cable.
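For a sense of scale, the two figures in this answer imply a rough power budget per chip. The sketch below simply divides the cited 300 megawatts by the cited 150,000 chips. It assumes the 300-megawatt figure covers everything in the GPU building, so the result is an all-in number rather than a chip specification, and the variable names are hypothetical.

```python
# Figures as cited in this article for a Fairwater GPU building.
GPU_BUILDING_MW = 300   # estimated power of the GPU building, in megawatts
CHIP_COUNT = 150_000    # approximate number of Nvidia chips housed

# Convert megawatts to watts, then spread across the chip count.
watts_per_chip = GPU_BUILDING_MW * 1_000_000 / CHIP_COUNT
kw_per_chip = watts_per_chip / 1_000

print(f"Implied all-in power per chip: {kw_per_chip:.1f} kW")
```

That works out to about 2 kilowatts per chip, a budget that presumably also has to cover networking, cooling, and other overhead inside the building.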

Q: Does Microsoft still have exclusive access to OpenAI's technology?

A: Partially. Microsoft keeps exclusive rights to OpenAI's stateless APIs (the developer-facing inference endpoints) through 2030, with broader IP and model access through 2032. But OpenAI can now run ChatGPT and other consumer products on any infrastructure provider, which is where most of OpenAI's revenue growth is happening. This means Oracle, Amazon, and Google can host OpenAI's fastest-growing business lines.

Q: Why can Oracle build data centers faster than Microsoft?

A: Oracle focuses exclusively on speed and power availability, often building in less-regulated locations. The Abilene, Texas facility reportedly went from groundbreaking to initial operations in months. Microsoft builds for its global Azure footprint, which requires navigating environmental reviews, local regulations, and enterprise compliance requirements across multiple jurisdictions. Foundation model companies needing compute immediately choose speed over Microsoft's broader infrastructure advantages.

Q: How much is Microsoft spending on AI infrastructure?

A: Microsoft spent over $34 billion on capital expenditures in the first quarter of its fiscal year 2026 (the quarter ending September 2025) alone, with the bulk going toward AI data centers. The company has committed to increasing infrastructure investments further. For comparison, all major tech companies combined are expected to spend roughly $400 billion on AI infrastructure in 2025. Microsoft's pause means it's now tracking toward 9.5 gigawatts by 2028 instead of 12-13 gigawatts.

Q: What does Microsoft mean by a "fungible fleet"?

A: Microsoft wants data centers that can switch between different AI workloads rather than locking hardware into multi-year contracts with single customers. This means the same GPUs could handle training runs, inference serving, data generation, or research compute depending on demand. Nadella argues this approach generates better returns than becoming a bare-metal provider for foundation model companies, though it means accepting lower market share in the fastest-growing infrastructure segment.